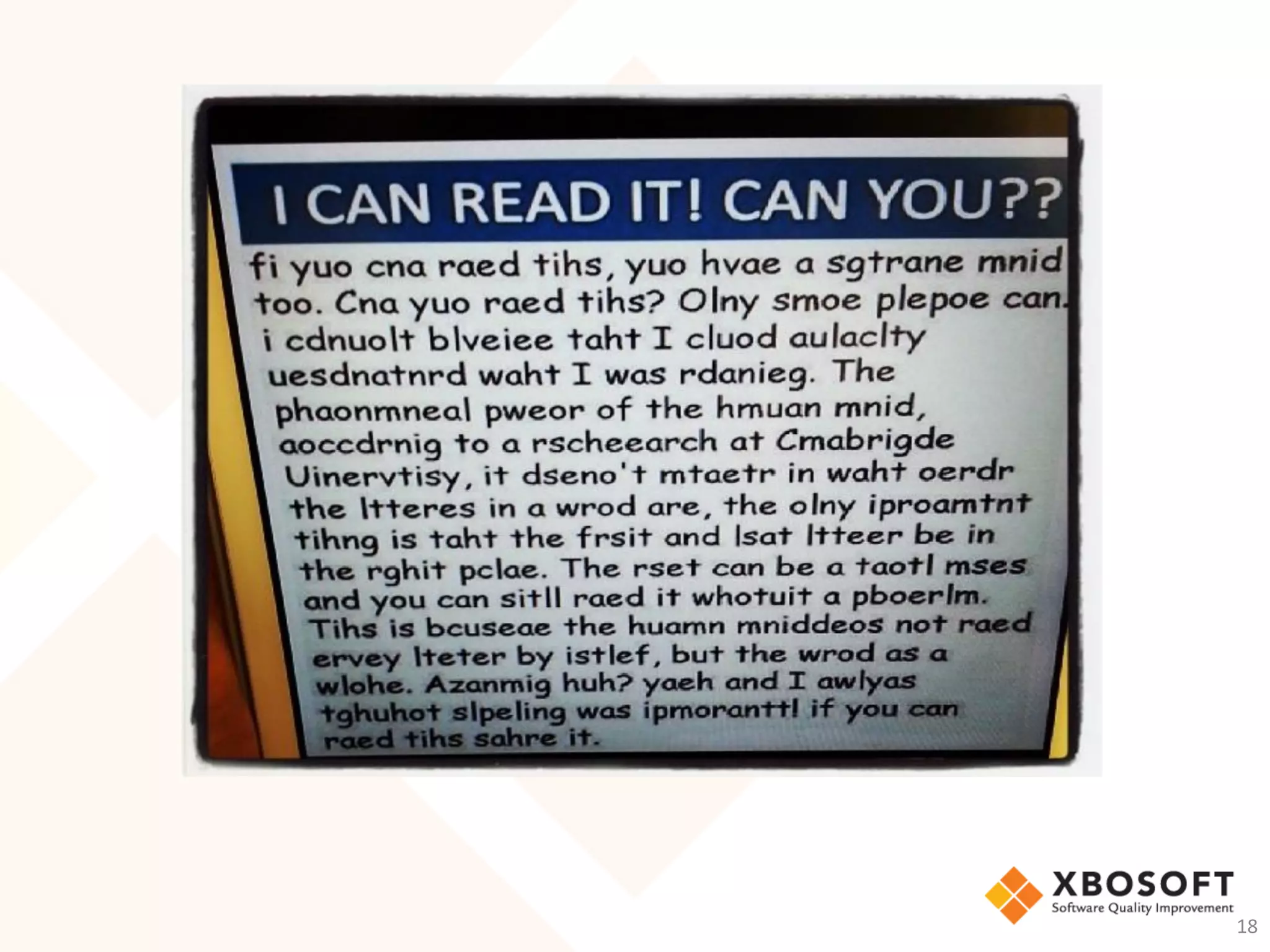

The document discusses cognitive biases that can cause testers to miss bugs. It explains that software testing involves both objective comparison against specifications and subjective judgment, and that missed bugs result from errors in judgment influenced by cognitive biases. The biases discussed include representative bias, confirmation bias, and the anchoring effect. The document advocates managing cognitive biases through techniques such as exploratory testing, which emphasizes intuition and learning over requirements coverage. It suggests that testers, managers, and the QA profession shift their focus from finding bugs to providing information.