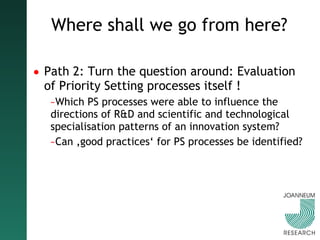

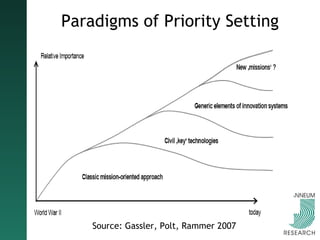

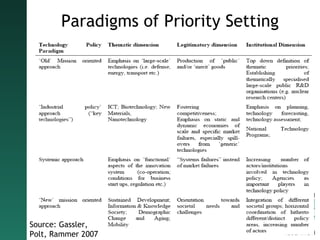

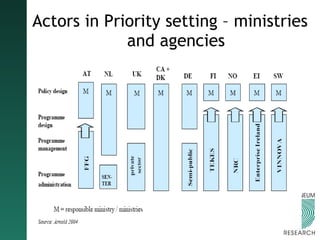

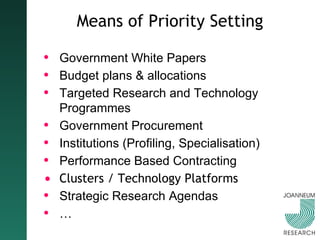

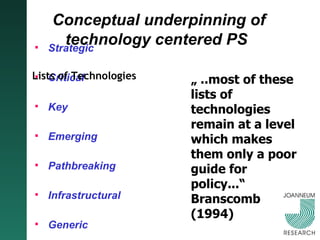

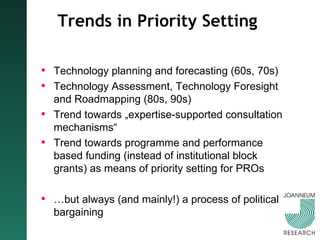

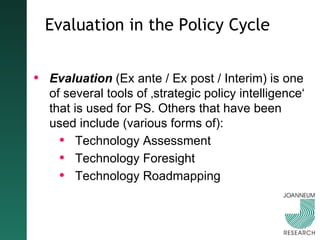

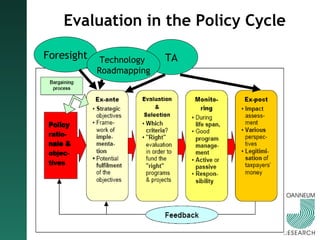

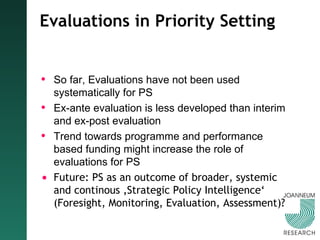

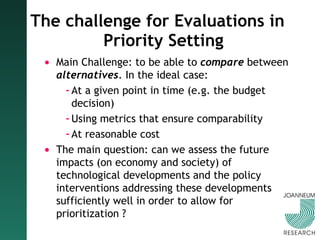

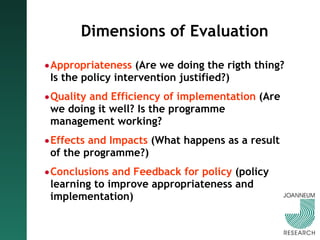

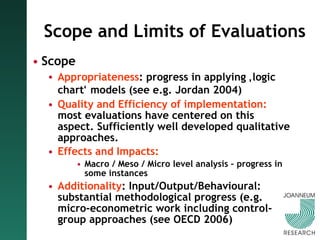

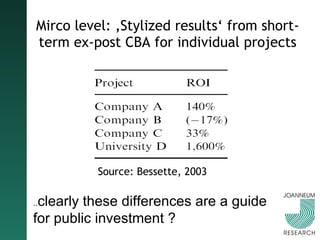

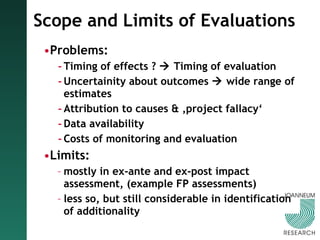

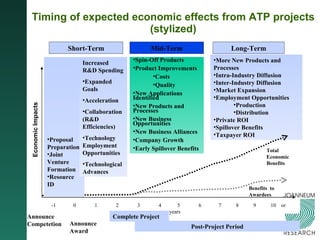

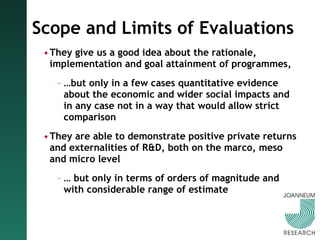

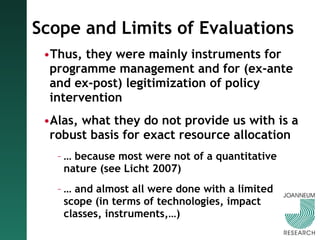

This document discusses issues related to priority setting in science and technology. It defines priority setting as the deliberate selection of certain activities, actors, or policies over others in order to influence resource allocation, and evaluation as the systematic assessment of the rationale, implementation, and impact of policy interventions. It outlines different dimensions of priority setting, including processes, paradigms, the actors involved, and the means used. It also discusses the role and limitations of evaluation in informing priority-setting decisions.

![Issues in Priority Setting Wolfgang Polt Joanneum Research [email_address] OECD Workshop on Rethinking Evaluation in Science and Technology Paris 30.10.2007](https://image.slidesharecdn.com/wpprioritysettingparis2930oct07-12768966032104-phpapp01/75/Wp-Priority-Setting-Paris-29-30-Oct-07-1-2048.jpg)

![Towards realistic expectations Thus „it is clear that the information requirements […] far exceed what is likely to be available in any practical situation and may in themselves place undue transaction costs upon the subsidy“ (Georghiou/Clarisse in OECD 2006) „… the precise allocation […] is not important, as long as it is sufficiently diversified. Rather than attempting to refine the allocations, energy and resources may be more productively focused on ways to improve links within the research system.“ (PANNELL, 1999)](https://image.slidesharecdn.com/wpprioritysettingparis2930oct07-12768966032104-phpapp01/85/Wp-Priority-Setting-Paris-29-30-Oct-07-29-320.jpg)