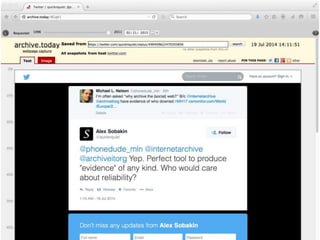

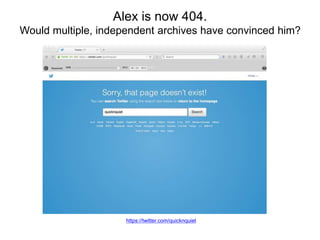

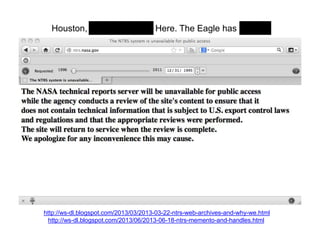

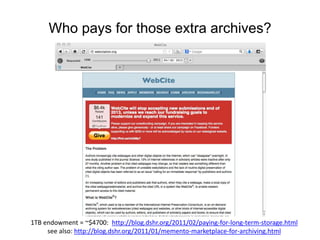

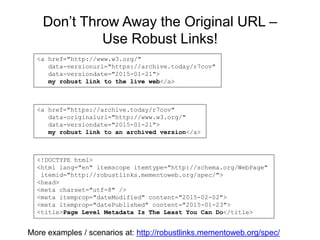

The document discusses why effective digital preservation needs multiple web archives. It counters common misconceptions about web archiving and emphasizes the vulnerability of relying on a single archive. It also highlights the complexity of web representation and the economic challenges facing preservation efforts, and asks who should fund multiple archives. The authors advocate a more robust approach to archiving, one that preserves original URLs and makes archived content more accessible.