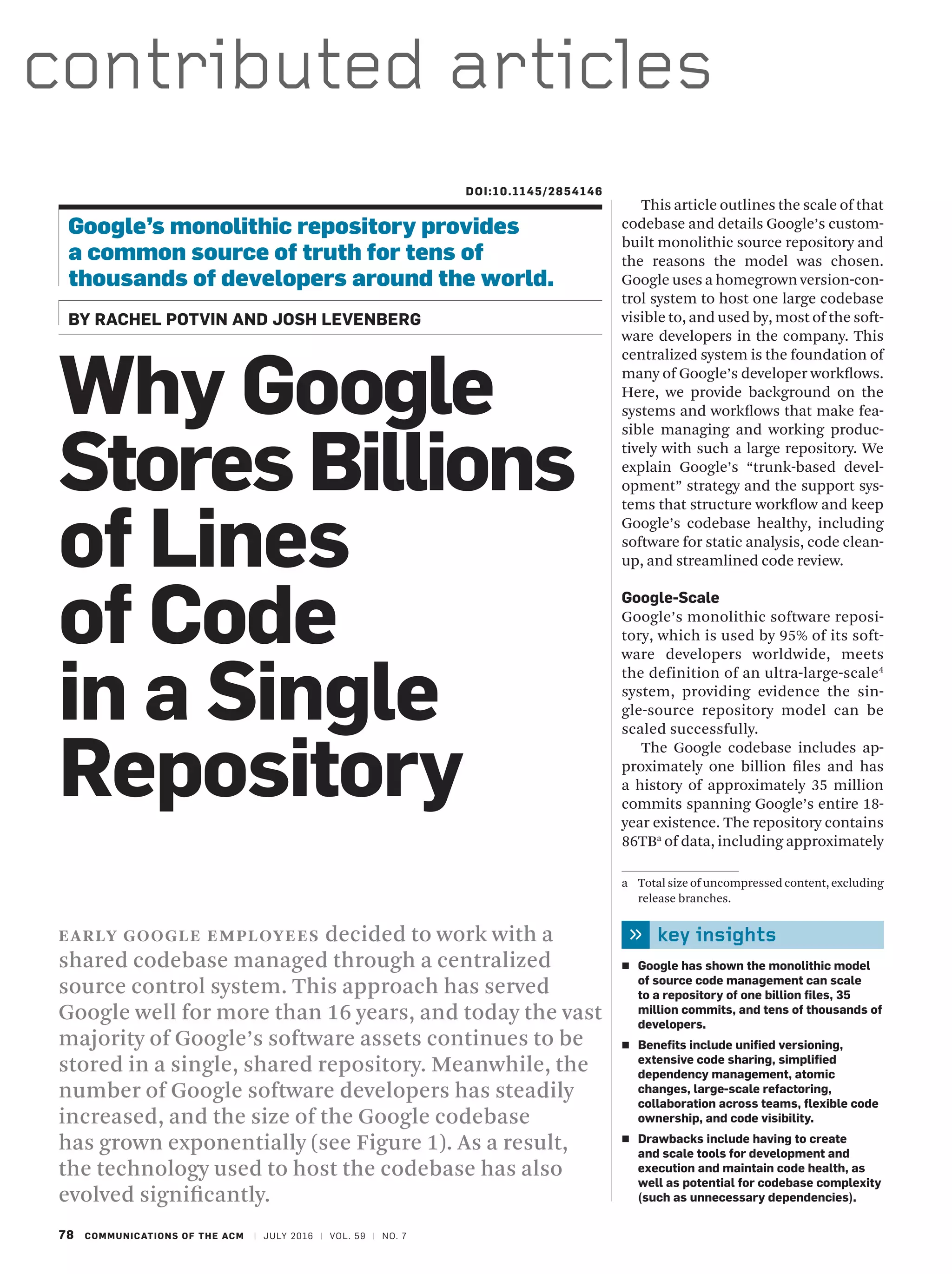

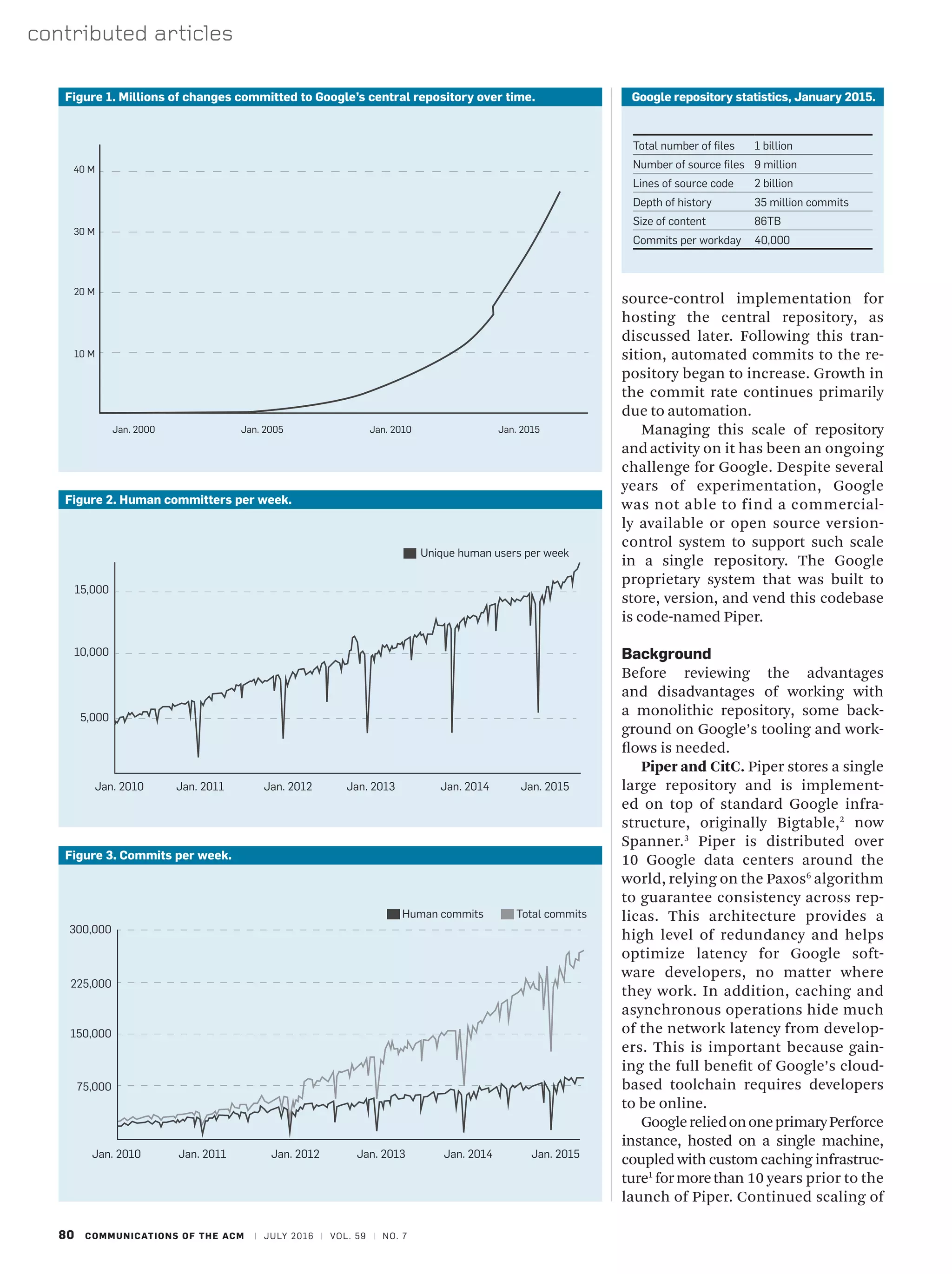

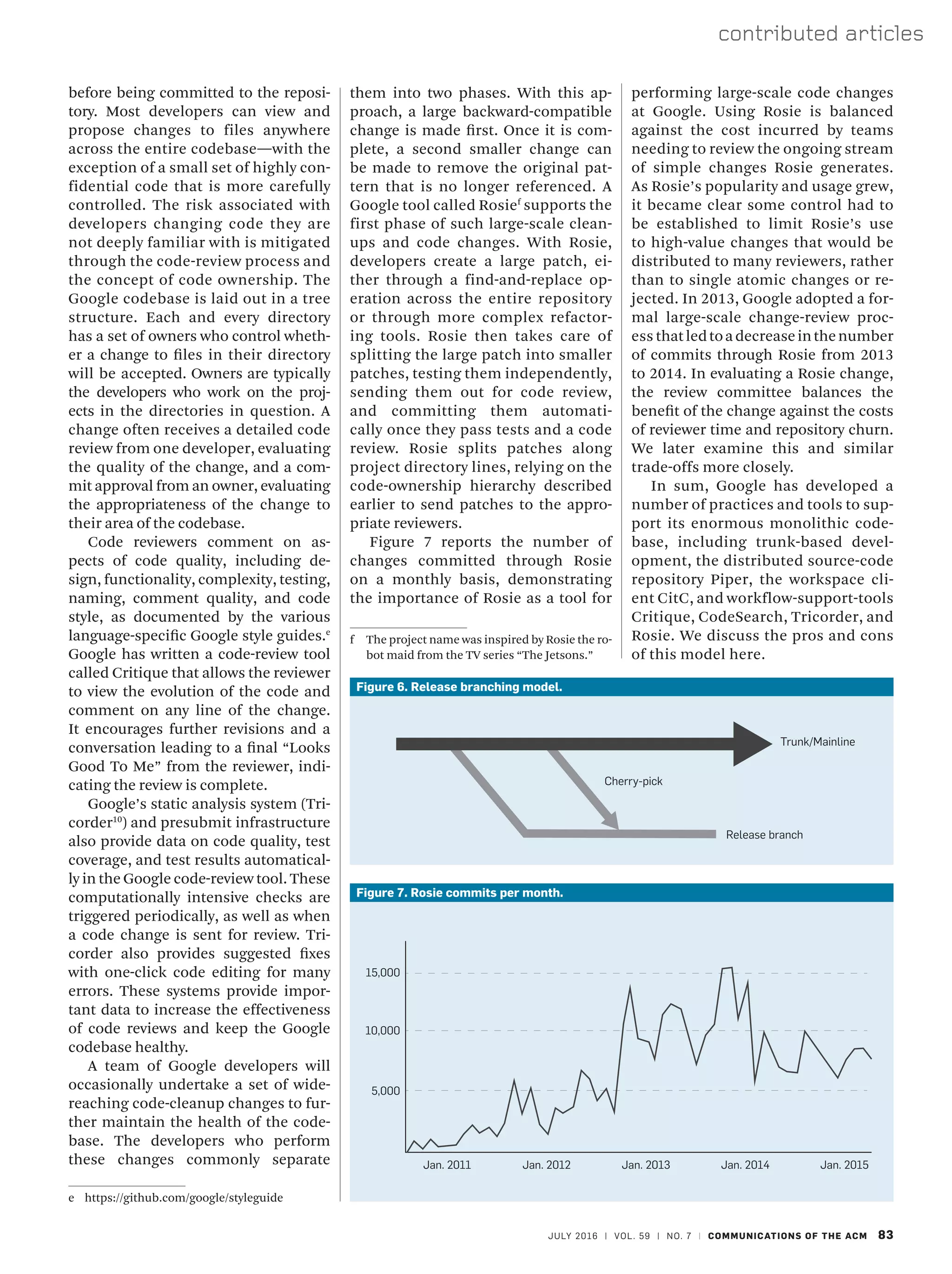

Google uses a single monolithic code repository called Piper to store its codebase, which includes over 1 billion files, 2 billion lines of code, and a history of 35 million commits from over 25,000 developers. Piper is a custom-built version control system that provides benefits like unified versioning, extensive code sharing, and simplified dependency management. It has shown that the monolithic model can successfully scale to an extremely large repository through tools like its distributed architecture, caching infrastructure, and cloud-based developer workflows supported by systems like CitC. Google employs trunk-based development where most work is done on the mainline trunk, with limited use of branches, to further maximize the advantages of the single codebase approach.