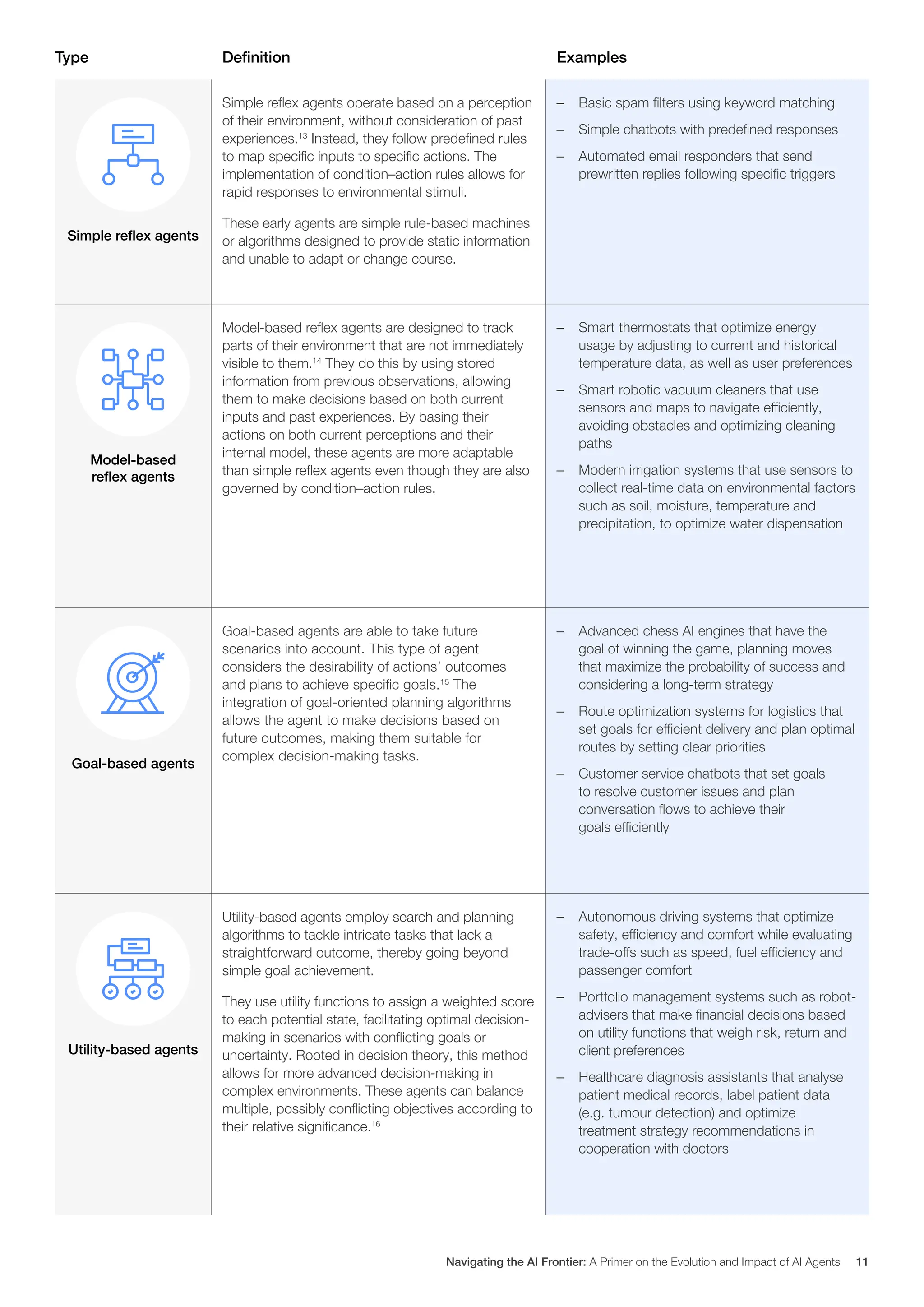

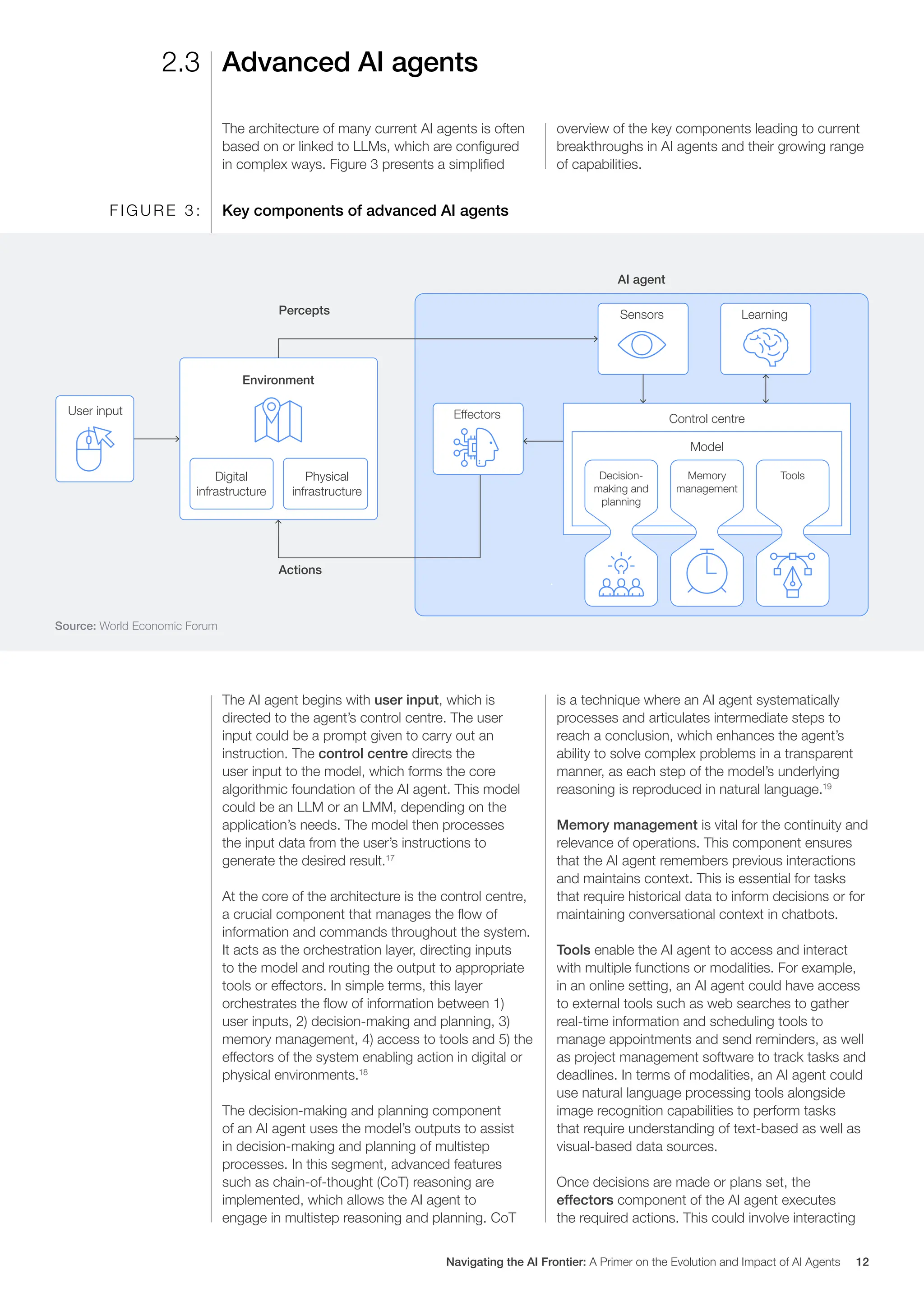

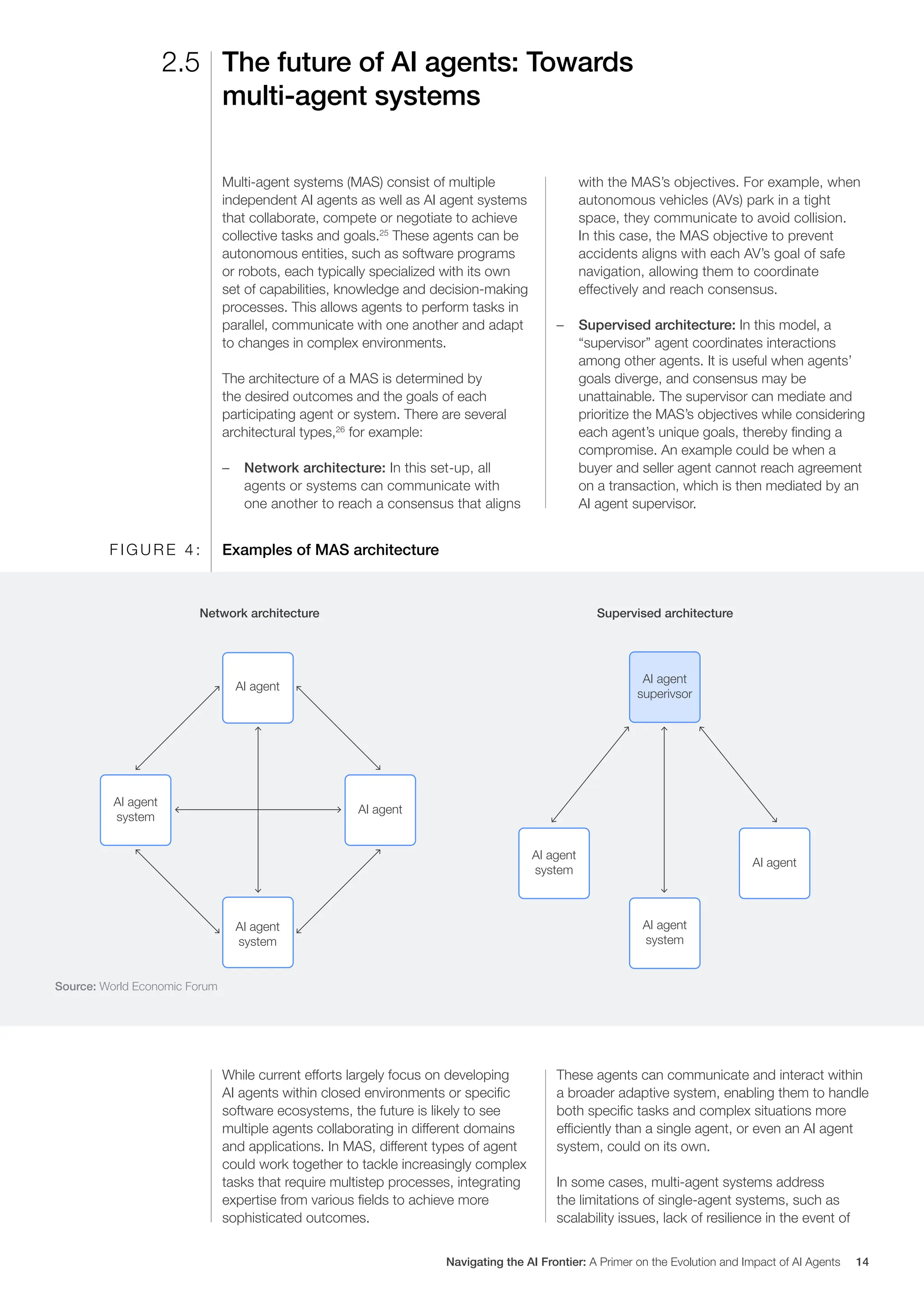

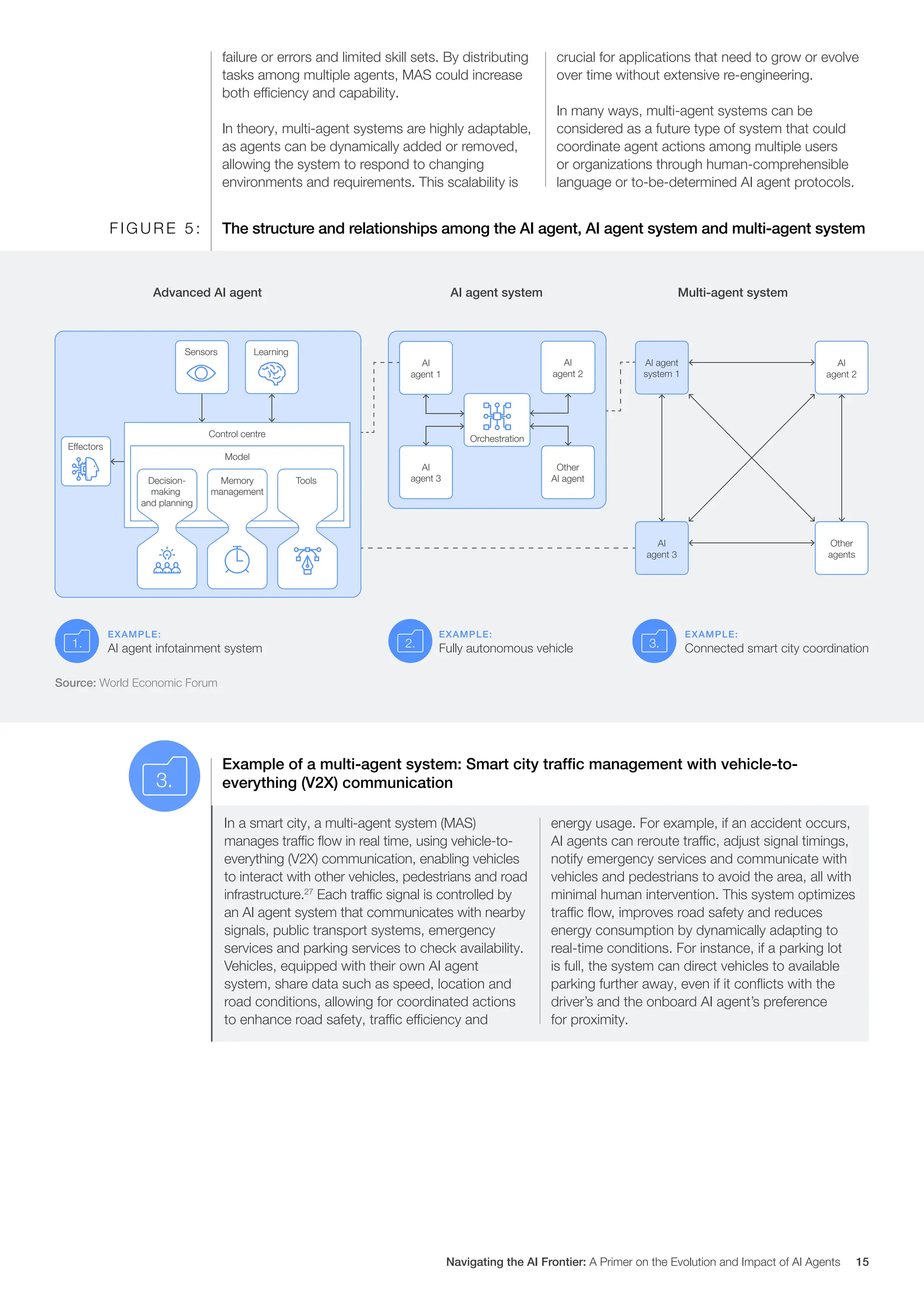

This white paper, published by the World Economic Forum in collaboration with Capgemini, explores the evolution, functionality, and implications of AI agents, tracing their transformation from simple rule-based systems into advanced, autonomous entities capable of complex decision-making. It discusses the benefits of AI agents across sectors such as healthcare and education, and addresses the associated risks, ethical considerations, and need for robust governance frameworks to ensure responsible adoption. The paper emphasizes the importance of responsible AI development and the potential for AI agents to enhance human well-being as society navigates the challenges of integrating them.