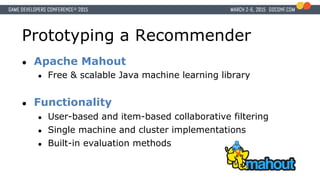

This document describes building a recommendation system for the EverQuest Landmark marketplace using collaborative filtering. The recommendations were prototyped with the Apache Mahout library, which involved encoding player data, evaluating candidate algorithms, and integrating additional contextual features. In testing, the recommendations showed an 80% increase in recall over a top-sellers list and drove over 10% of item sales in the marketplace.
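
To make the recall comparison concrete, here is a minimal sketch in plain Python of how a collaborative-filtering recommender can be scored against a top-sellers baseline. It does not use Mahout and is not the authors' implementation: the co-occurrence scoring, the function names, the player IDs, and the item names are all assumptions chosen for illustration.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical purchase history: player -> set of items bought (toy data).
train = {
    "p1": {"sword", "shield"},
    "p2": {"sword", "shield", "torch"},
    "p3": {"sword", "torch"},
    "p4": {"tree", "rock"},
    "p5": {"tree"},
}
# Hypothetical held-out purchases used only for evaluation.
held_out = {"p5": {"rock"}}


def top_sellers(train, k):
    """Baseline: the k items with the most buyers, identical for every player."""
    counts = Counter(item for items in train.values() for item in items)
    return [item for item, _ in counts.most_common(k)]


def cooccurrence_recommend(train, player, k):
    """Simple item-based collaborative filtering (an assumed stand-in):
    score each unowned item by how often it was bought together with
    items the player already owns."""
    co = defaultdict(Counter)
    for items in train.values():
        for a, b in combinations(sorted(items), 2):
            co[a][b] += 1
            co[b][a] += 1
    owned = train[player]
    scores = Counter()
    for item in owned:
        for other, n in co[item].items():
            if other not in owned:
                scores[other] += n
    return [item for item, _ in scores.most_common(k)]


def recall_at_k(recommended, relevant):
    """Fraction of the held-out items that appear in the recommended list."""
    if not relevant:
        return 0.0
    return len(set(recommended) & set(relevant)) / len(relevant)


baseline_recall = recall_at_k(top_sellers(train, 1), held_out["p5"])
cf_recall = recall_at_k(cooccurrence_recommend(train, "p5", 1), held_out["p5"])
```

On this toy data the baseline recommends the globally most popular item, while the co-occurrence recommender uses what the player already owns, so the two recall values differ. A real evaluation would average recall over many players and a larger held-out set.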