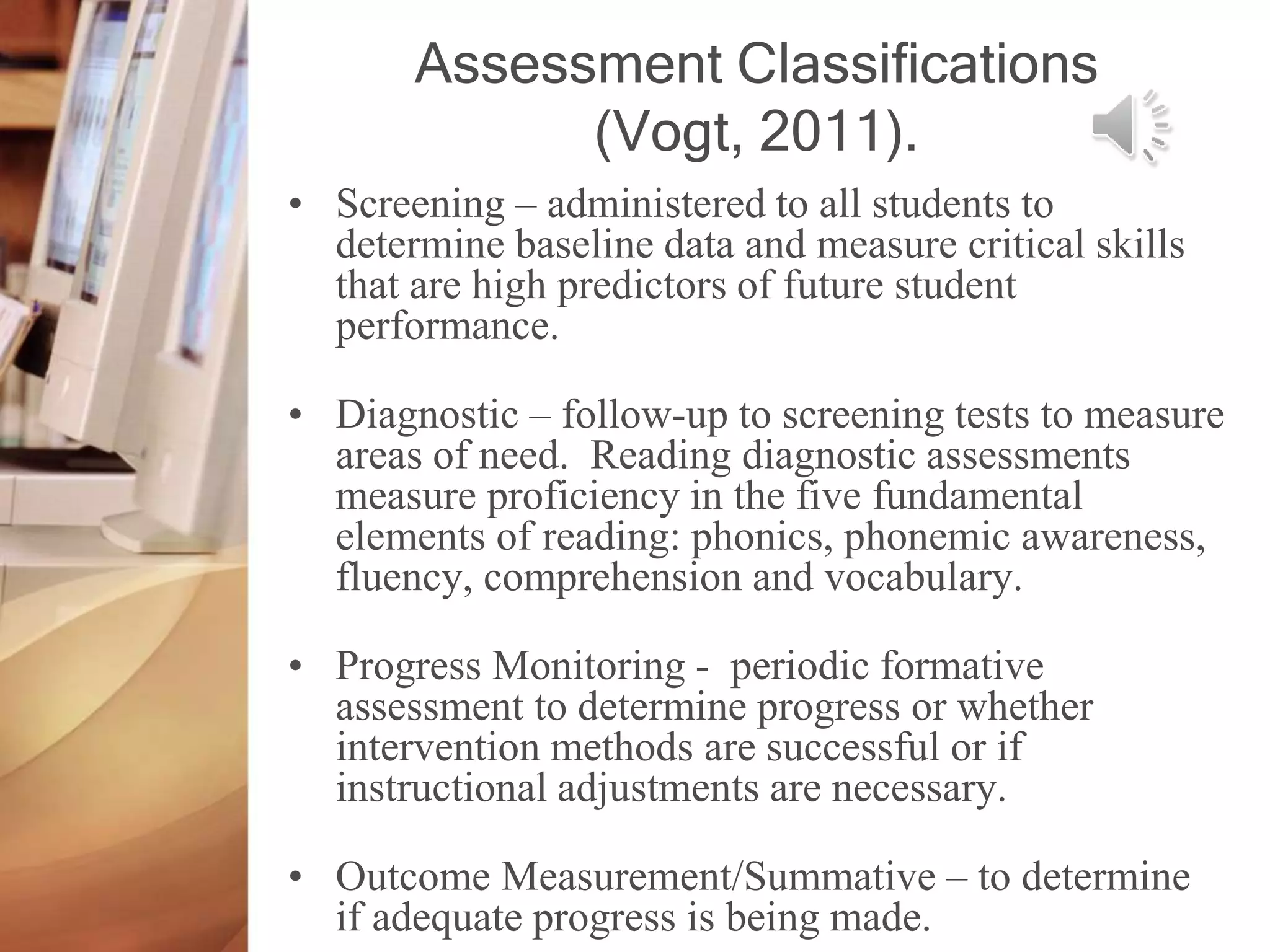

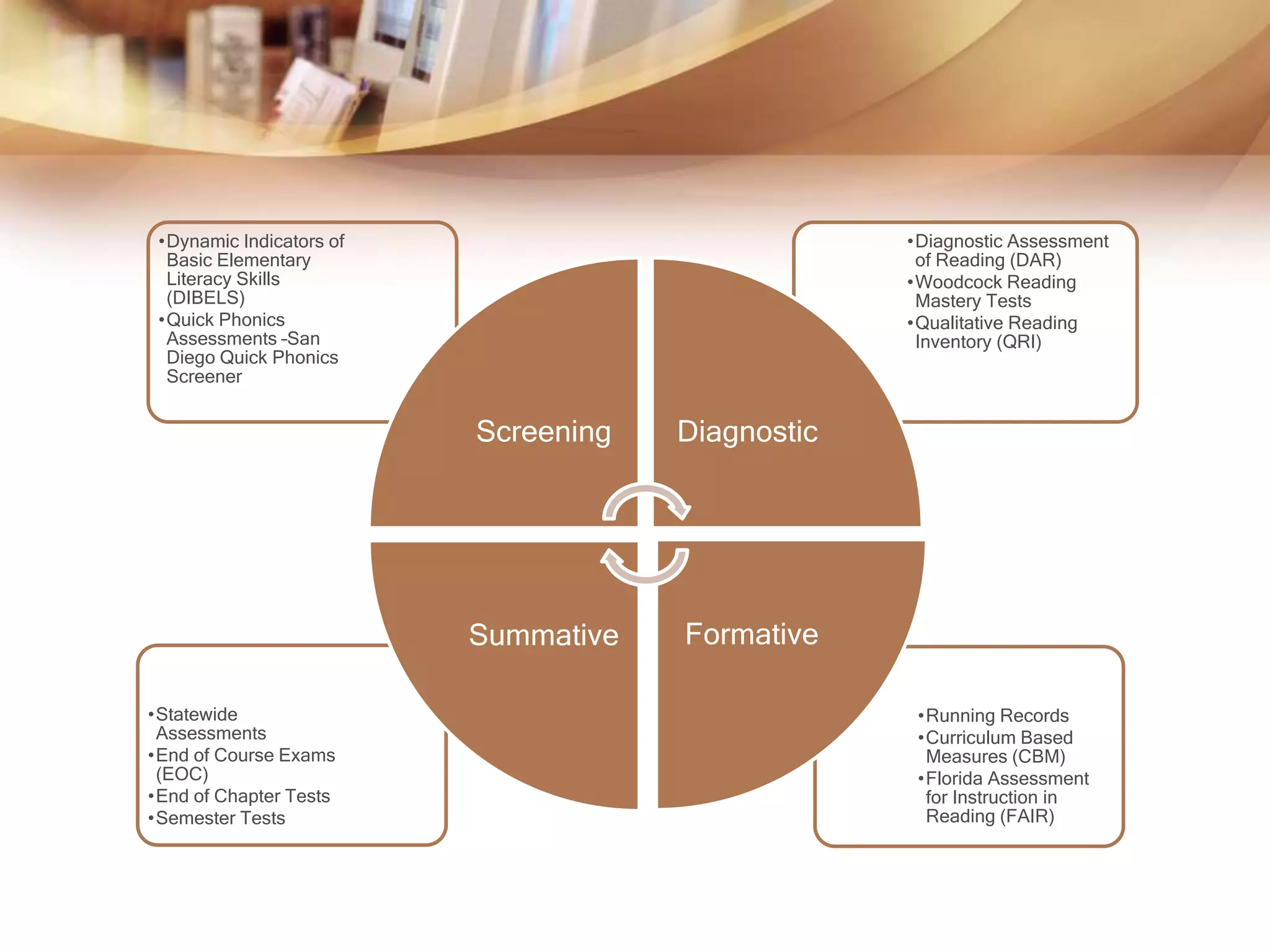

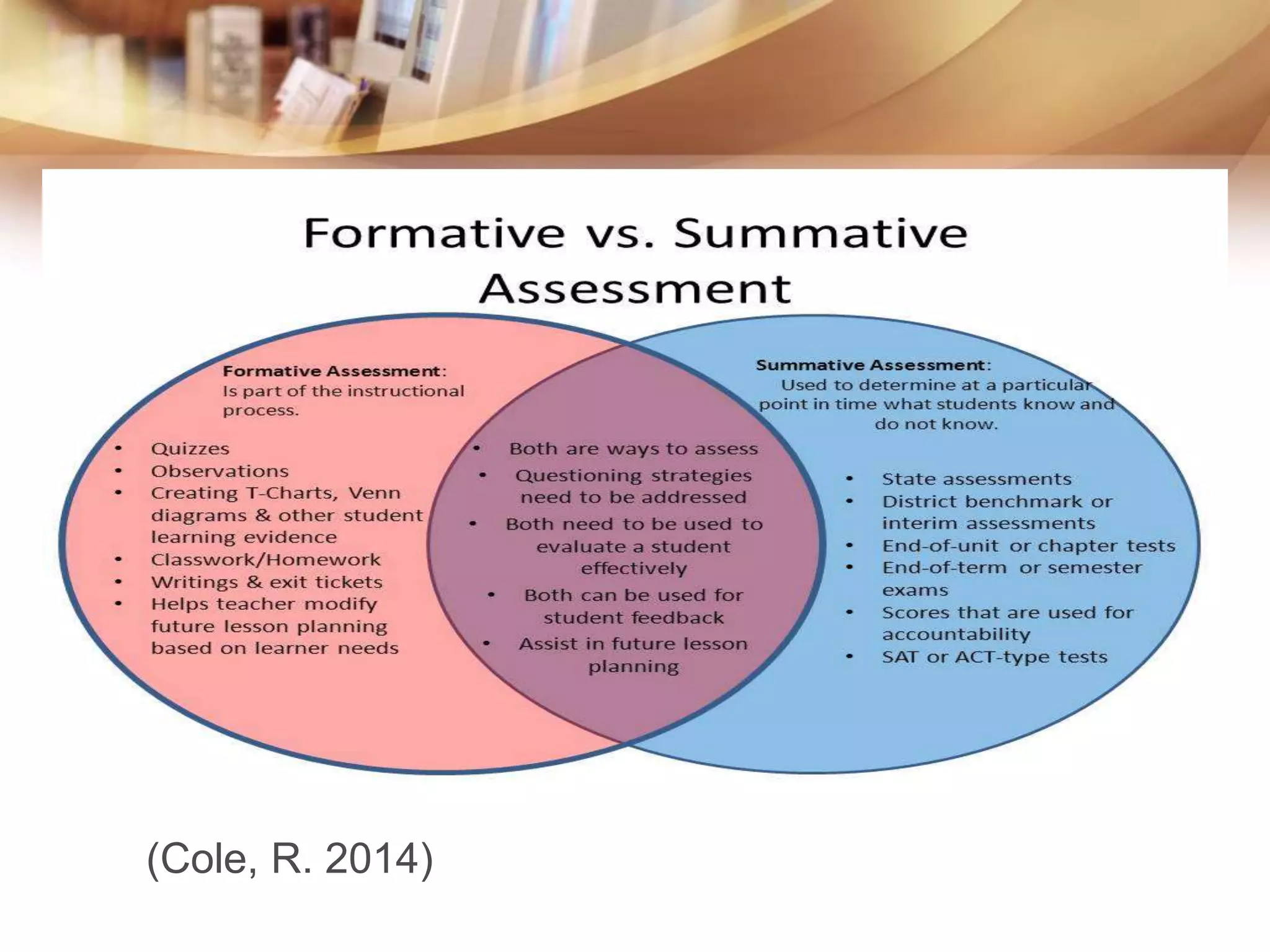

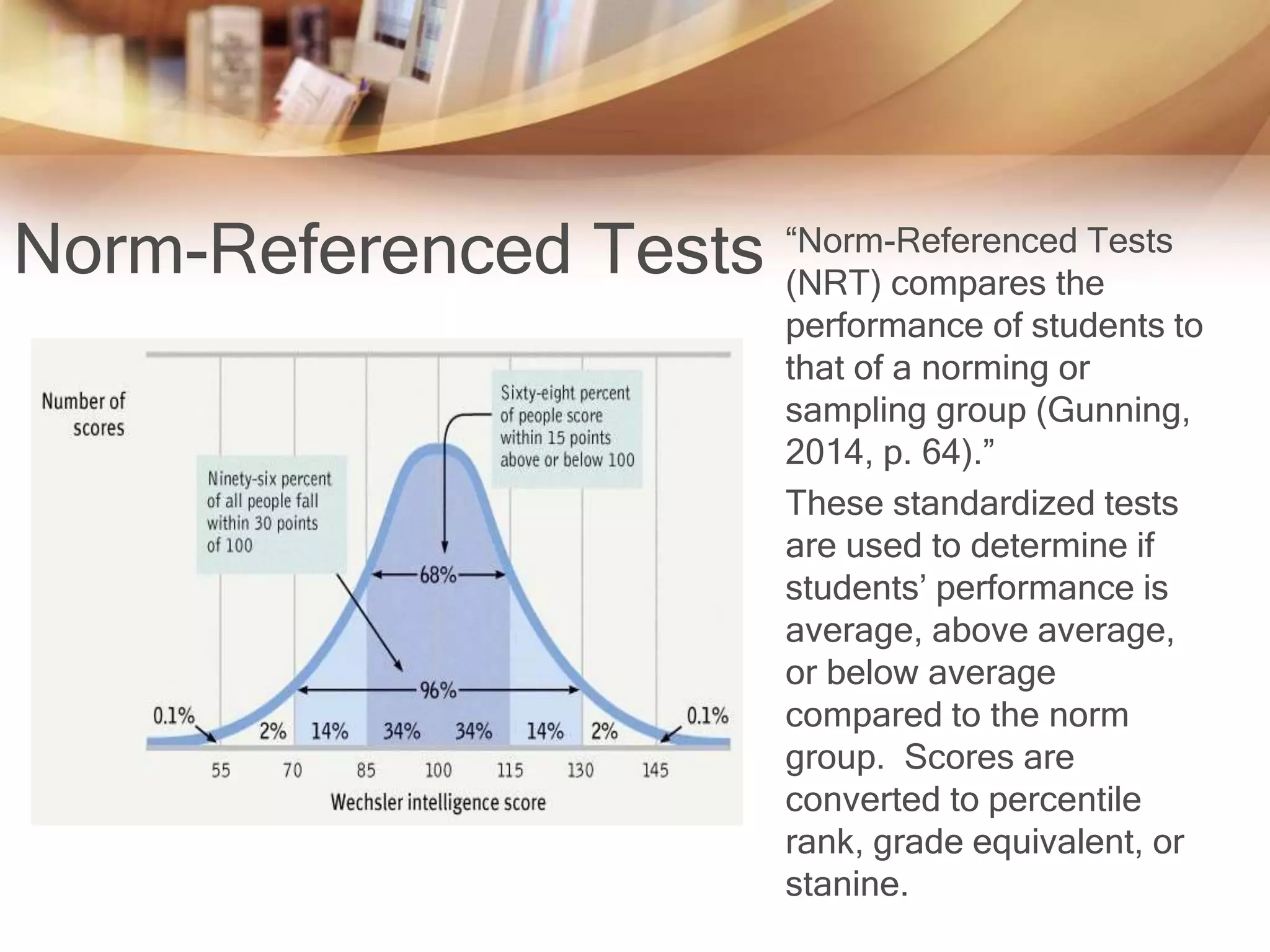

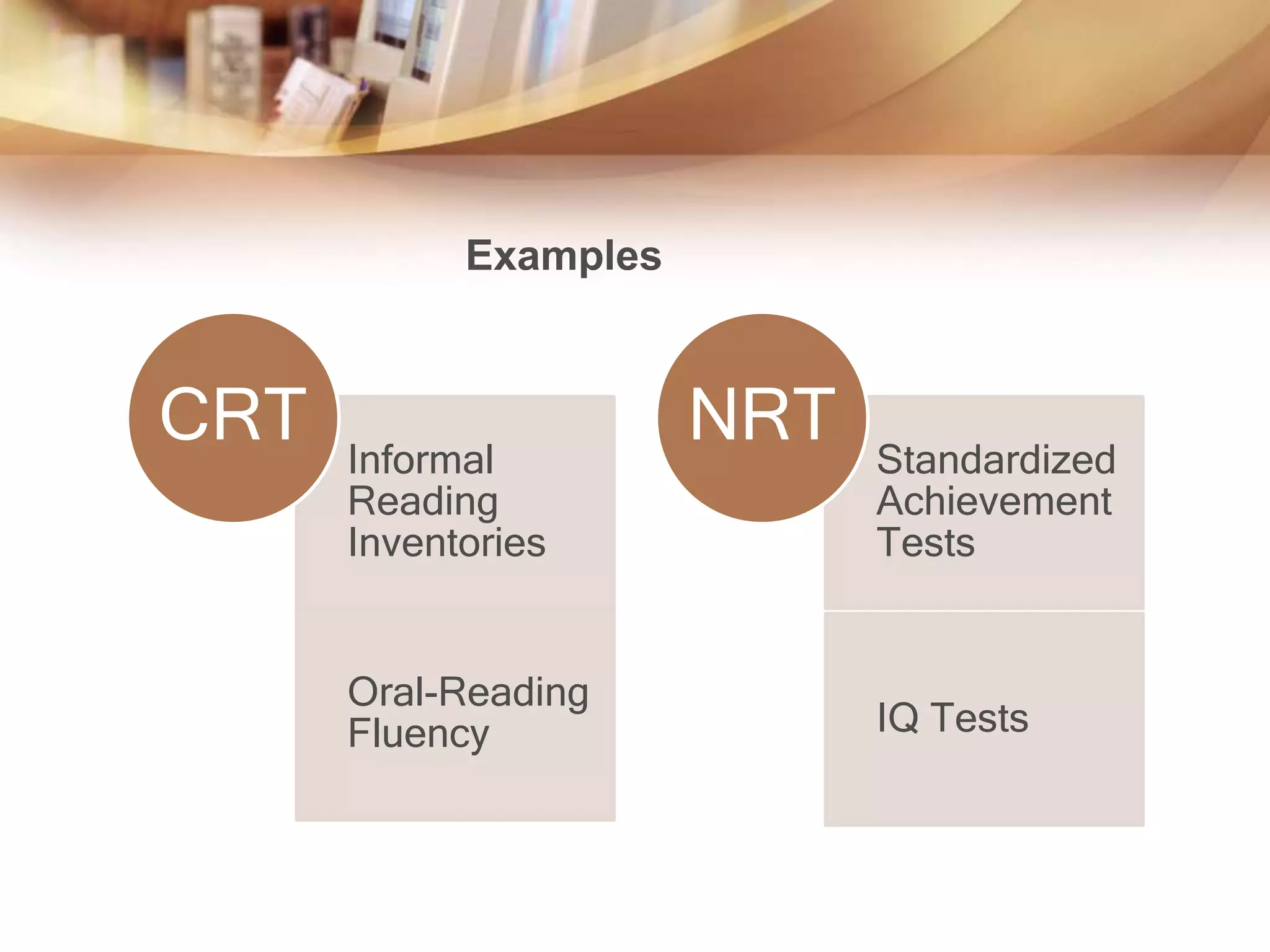

This document discusses the types of assessment used in education: screening, diagnostic, progress monitoring, and outcome (summative) assessments. It also describes two ways of interpreting test scores. Criterion-referenced tests measure a student's performance against fixed standards. Norm-referenced tests compare a student's performance to that of a peer group. Finally, the document stresses that assessments must be reliable and valid, so that their results accurately measure student performance and can be used to guide instruction.