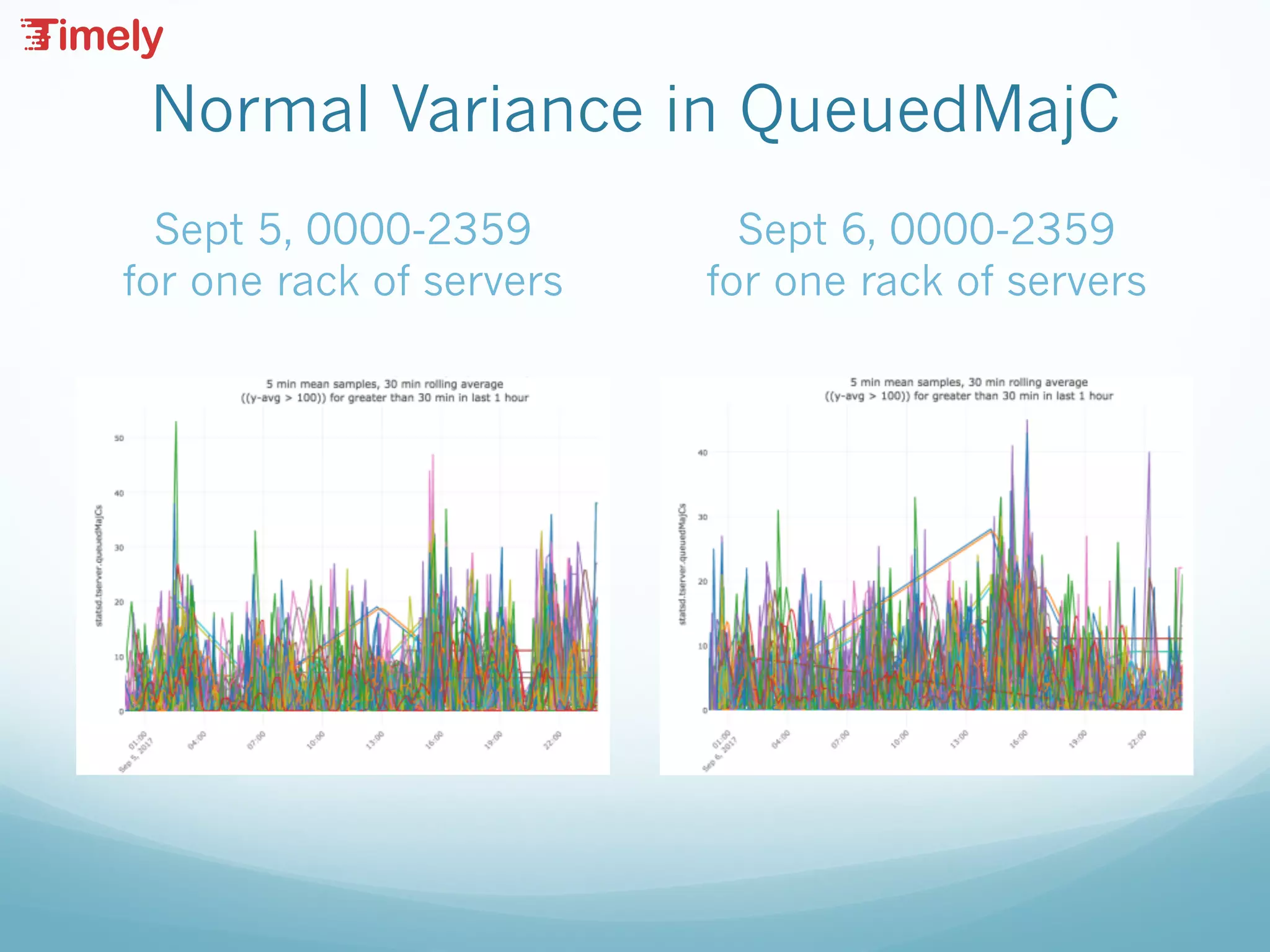

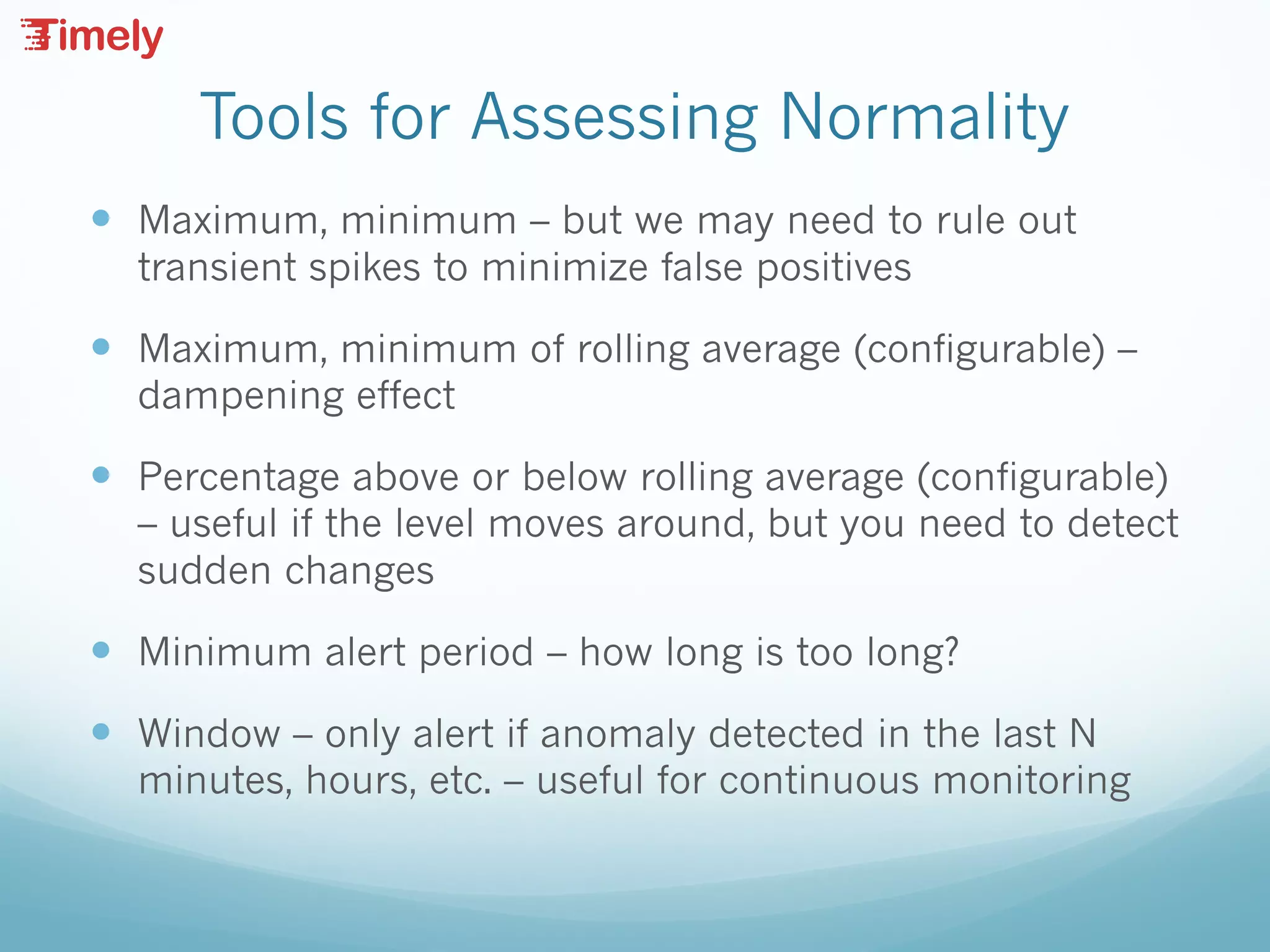

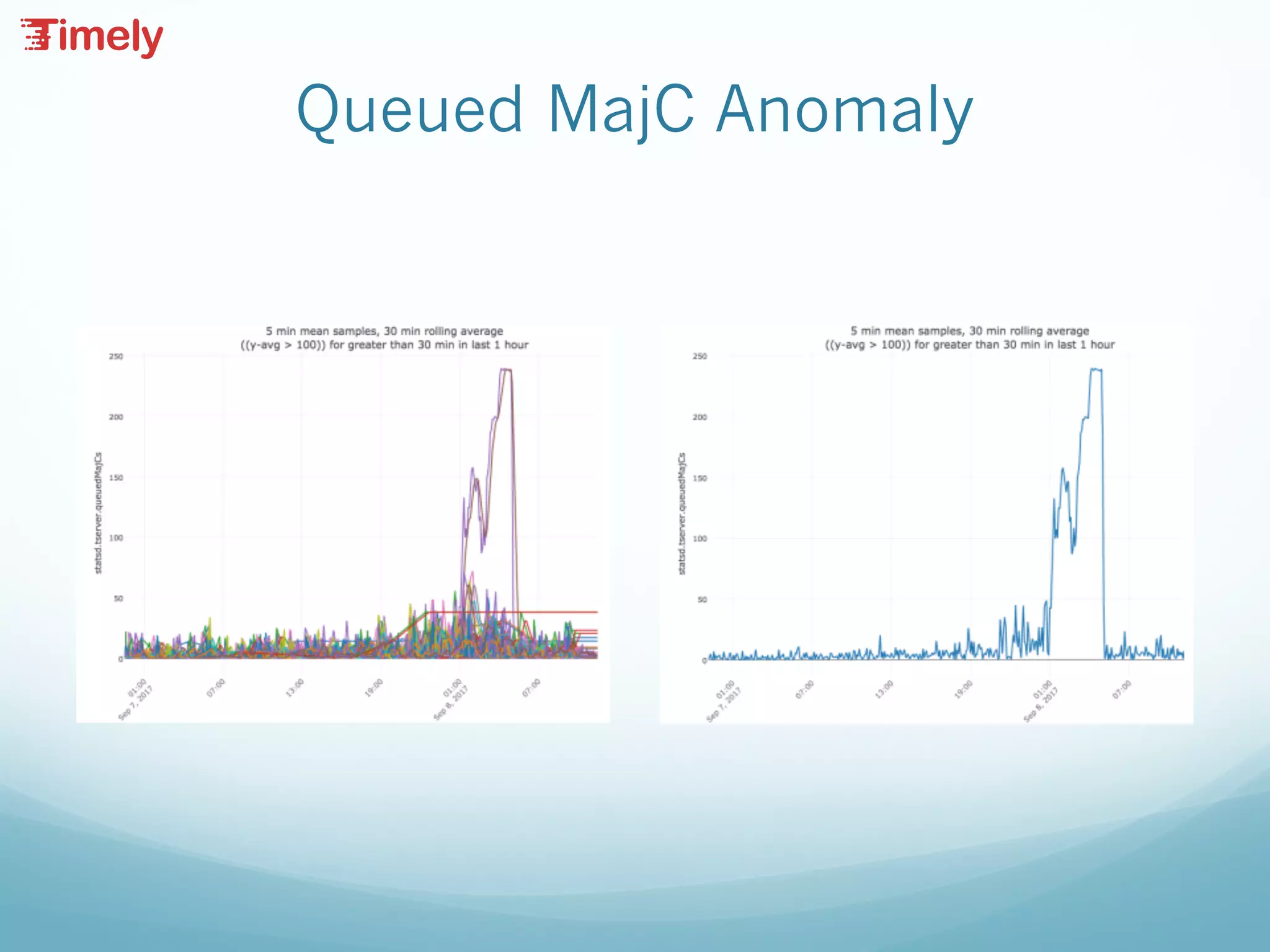

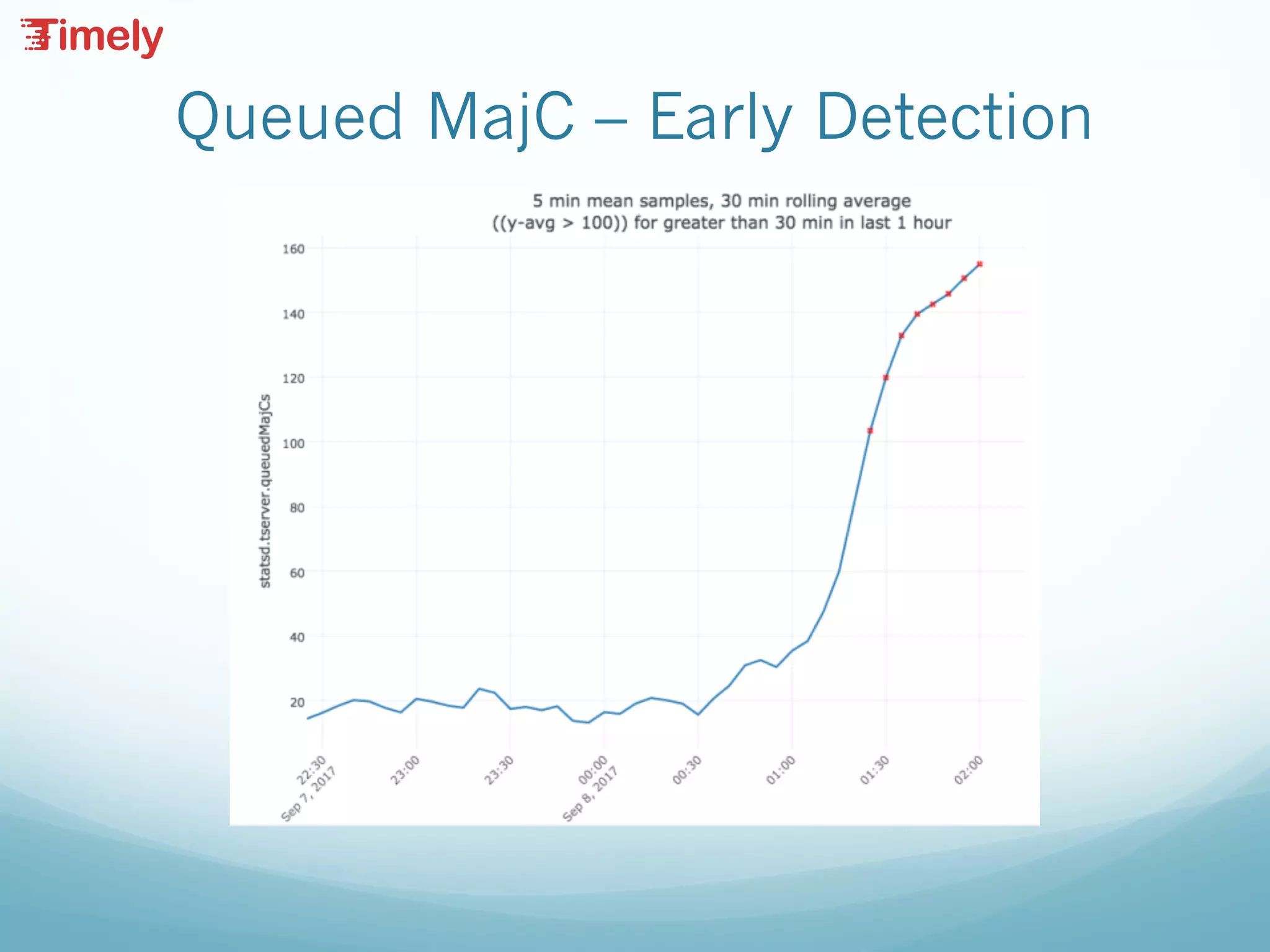

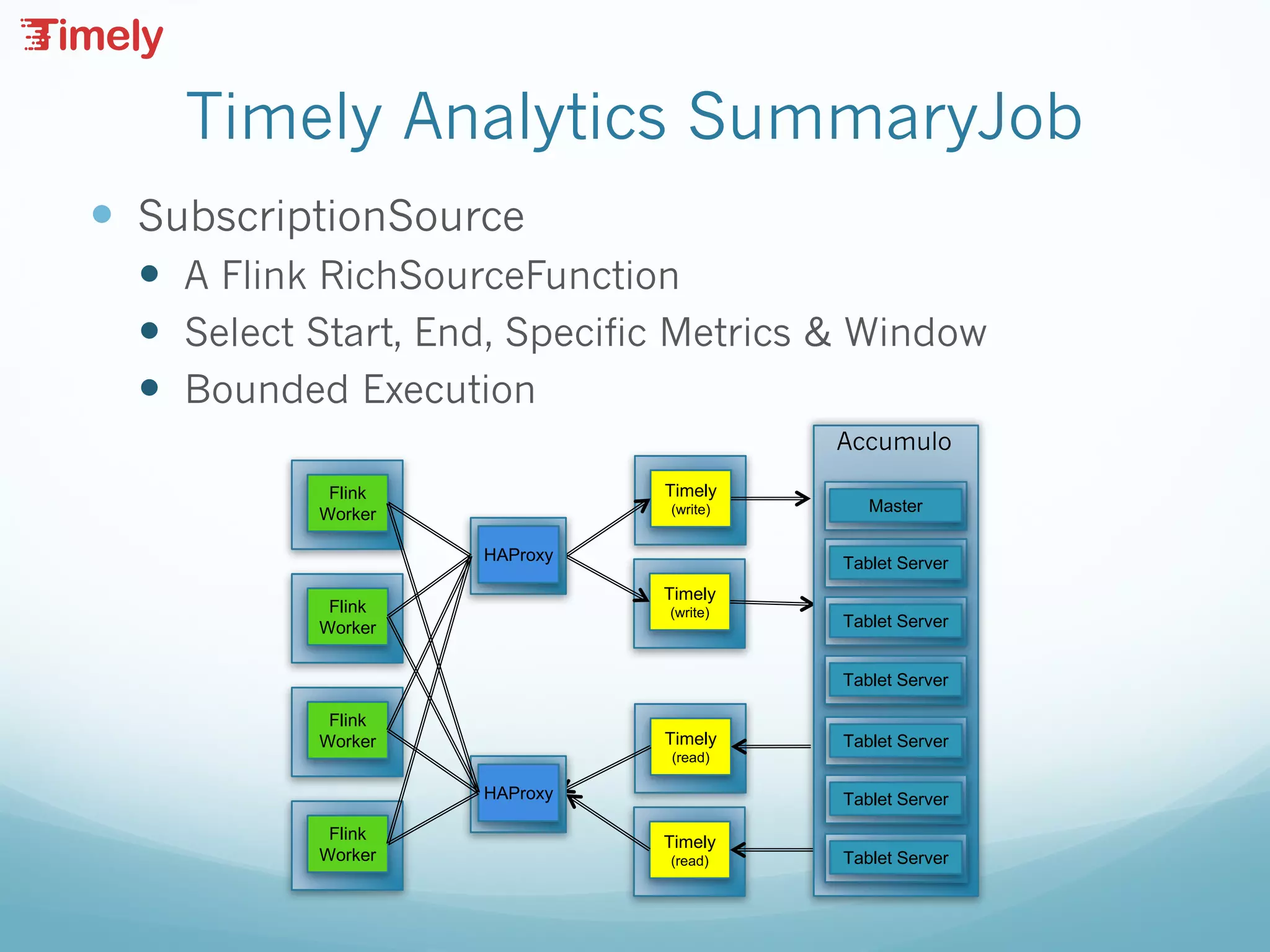

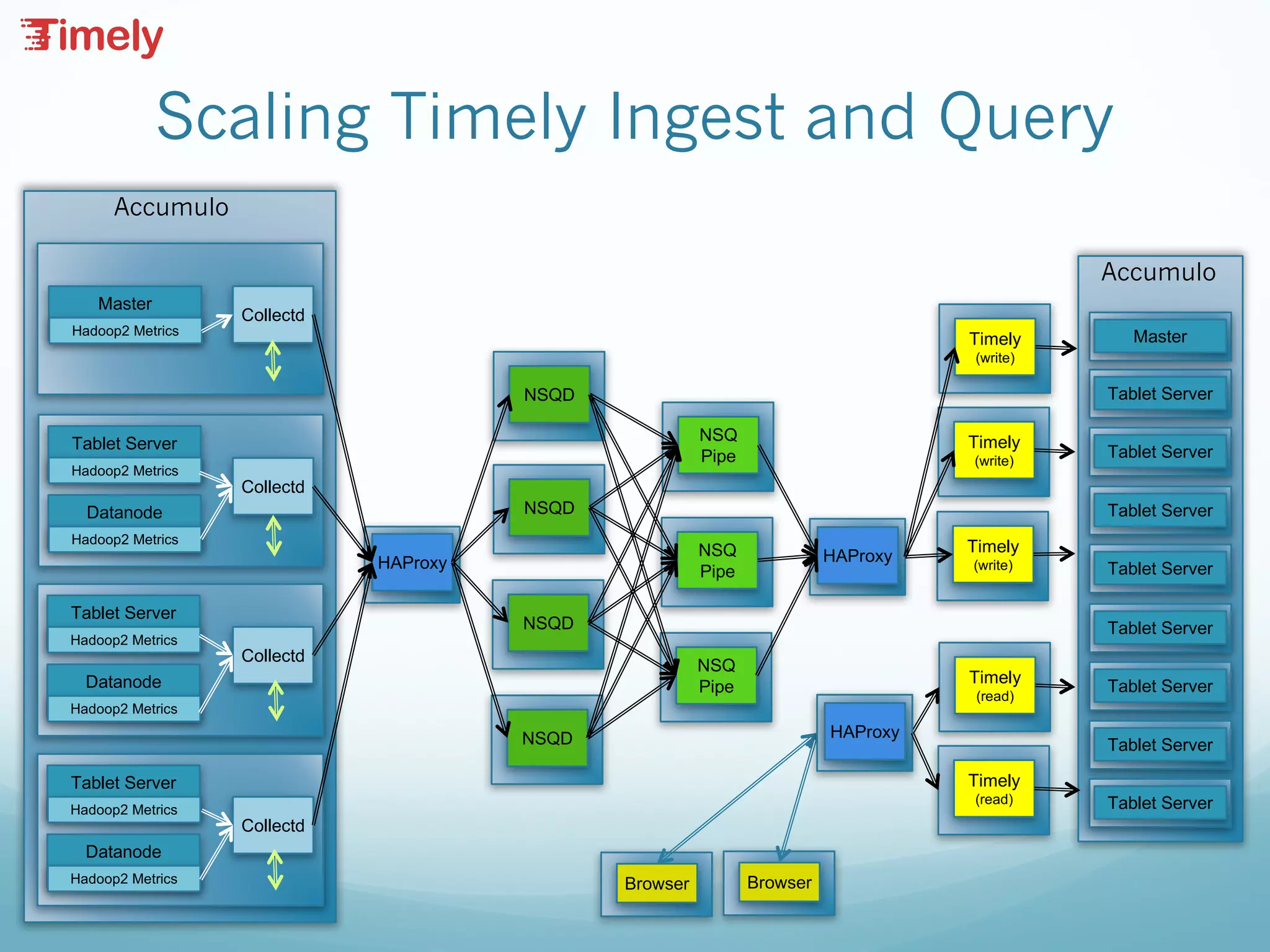

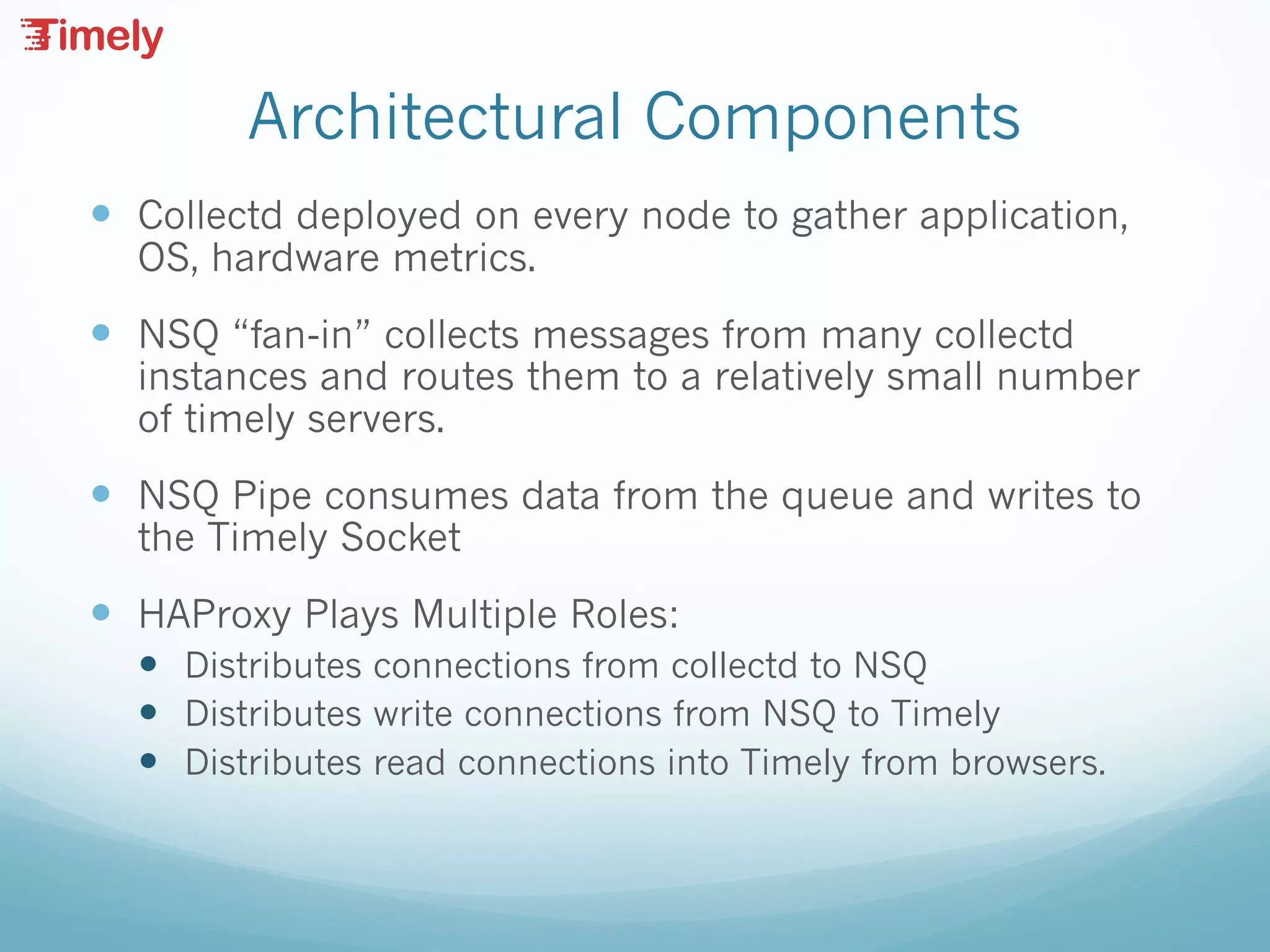

The document outlines the architecture and operation of Timely, a scalable time series database developed by Booz Allen Hamilton. Timely is built on Accumulo and designed for real-time metrics collection and analysis. The document covers key components, including collectd for gathering metrics, NSQ for message routing, and Grafana for visualization and alerting, and it addresses scaling strategies and their challenges. It also shares lessons learned from deploying and monitoring the system and from data compaction issues encountered during implementation.

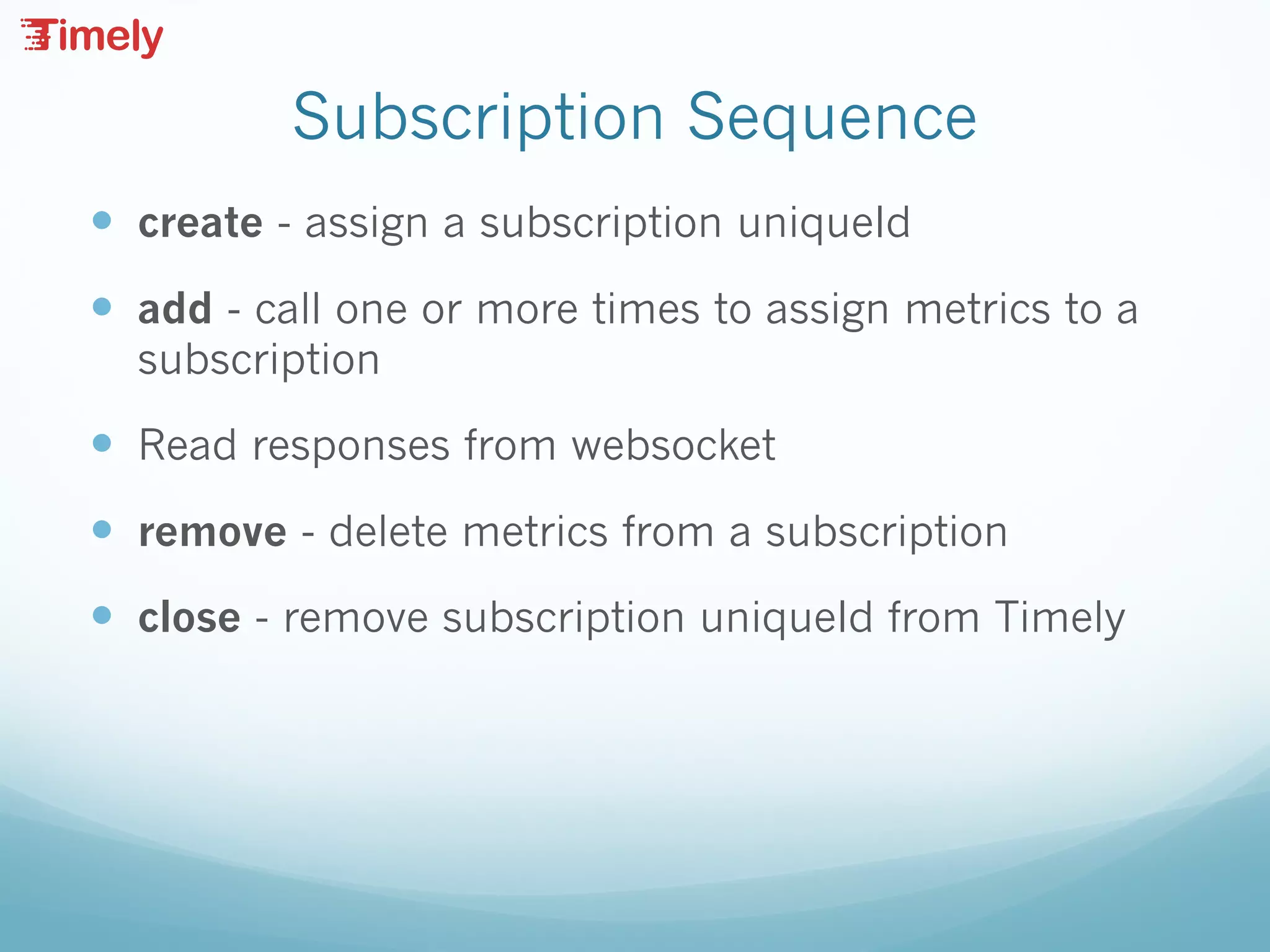

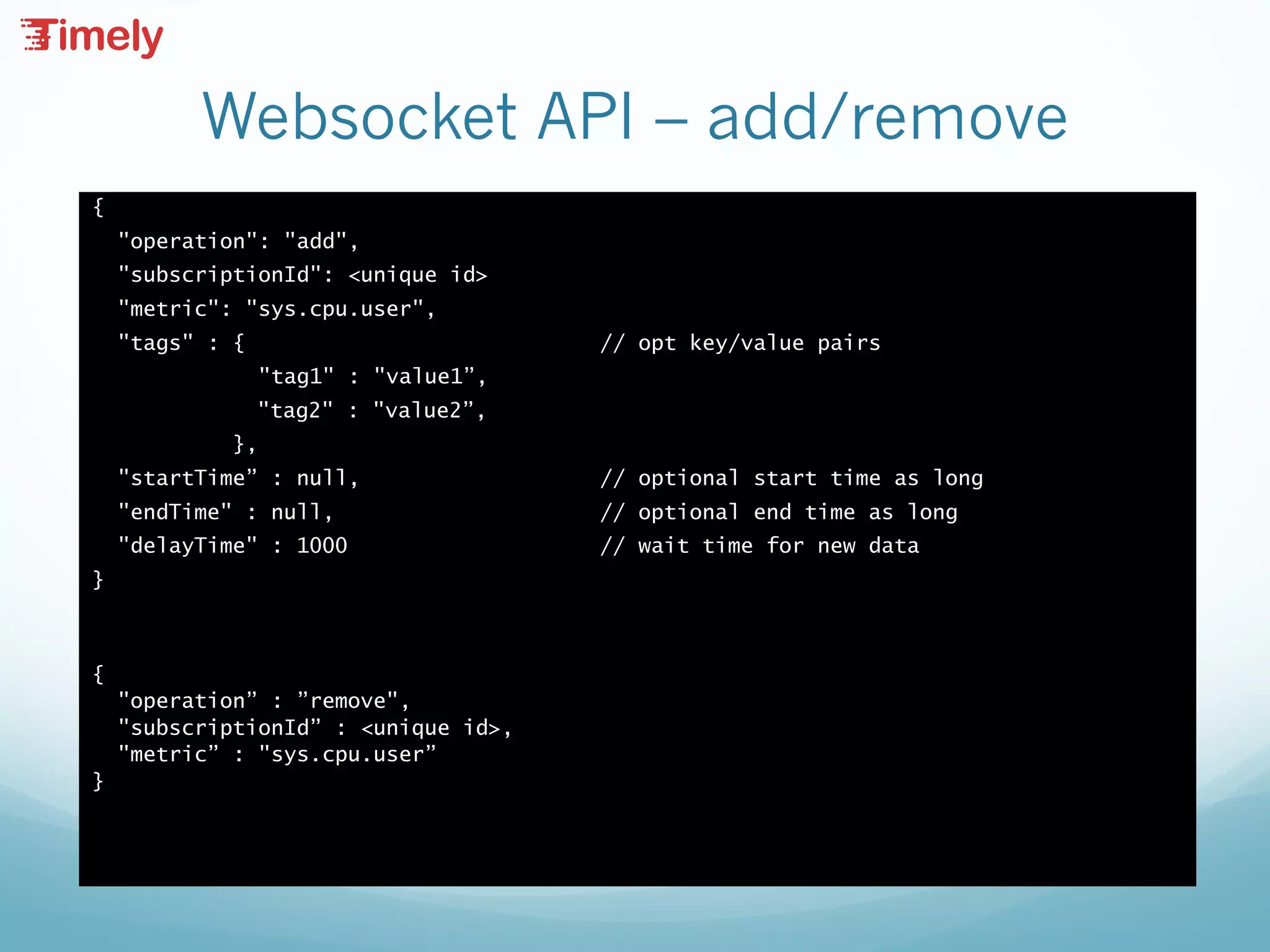

![Websocket API – response](https://image.slidesharecdn.com/timelyyeartwo-171016182259/75/Timely-Year-Two-Lessons-Learned-Building-a-Scalable-Metrics-Analytic-System-12-2048.jpg)

    {
      "responses": [
        {
          "metric": "sys.cpu.user",
          "timestamp": 1469028728091,
          "value": 1.0,
          "tags": [
            { "key": "rack", "value": "r1" }
          ],
          "subscriptionId": <unique id>,
          "complete": false
        }
      ]
    }
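The websocket response above carries each sample as a metric name, a timestamp (which appears to be epoch milliseconds), a value, a list of key/value tags, a subscription id, and a `complete` flag. The following is a minimal Python sketch, not Timely's own client code, that parses such a message into plain objects. It assumes the subscription id arrives as a JSON string (the slide elides its actual form) and that `tags` is always a list of `key`/`value` objects as shown; `MetricSample`, `parse_response`, and the id `"sub-123"` are hypothetical names used only for illustration.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MetricSample:
    """One metric sample as carried in a websocket response message."""
    metric: str
    timestamp: int          # appears to be epoch milliseconds
    value: float
    tags: Dict[str, str]
    subscription_id: str    # assumption: the elided id is a JSON string
    complete: bool


def parse_response(text: str) -> List[MetricSample]:
    """Parse one websocket response message into MetricSample objects."""
    doc = json.loads(text)
    samples = []
    for r in doc["responses"]:
        # Tags arrive as a list of {"key": ..., "value": ...} objects;
        # flatten them into a dict so they can be looked up by key.
        tags = {t["key"]: t["value"] for t in r.get("tags", [])}
        samples.append(MetricSample(
            metric=r["metric"],
            timestamp=int(r["timestamp"]),
            value=float(r["value"]),
            tags=tags,
            subscription_id=str(r["subscriptionId"]),
            complete=bool(r["complete"]),
        ))
    return samples


if __name__ == "__main__":
    # Sample message mirroring the slide; "sub-123" is a hypothetical
    # stand-in for the elided <unique id>.
    message = """
    {"responses": [{"metric": "sys.cpu.user",
                    "timestamp": 1469028728091,
                    "value": 1.0,
                    "tags": [{"key": "rack", "value": "r1"}],
                    "subscriptionId": "sub-123",
                    "complete": false}]}
    """
    for s in parse_response(message):
        print(s.metric, s.timestamp, s.value, s.tags, s.complete)
```

Flattening the tag list into a dict makes a lookup such as `sample.tags["rack"]` direct, at the cost of silently keeping only the last value if a message ever repeated a tag key.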