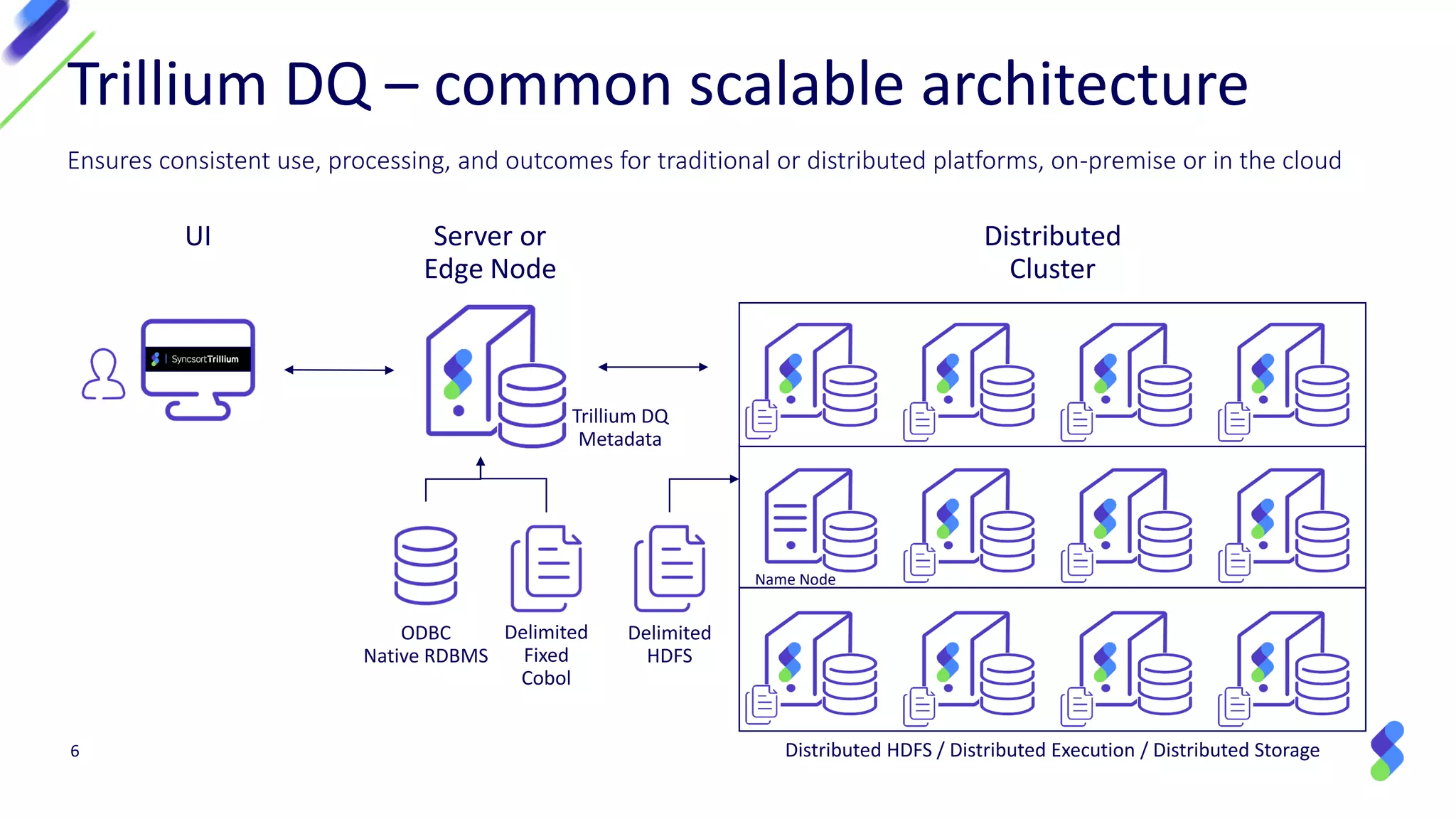

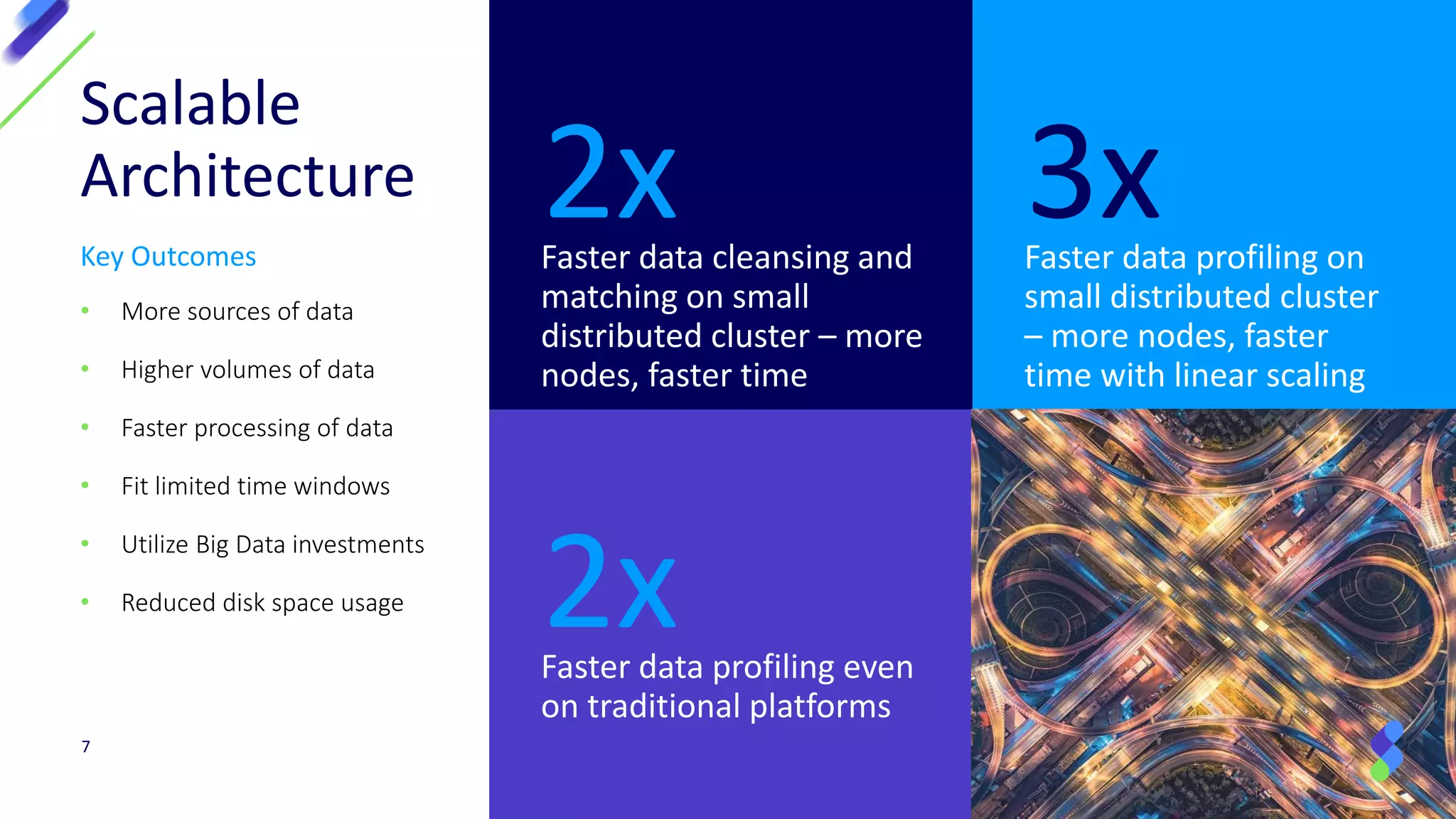

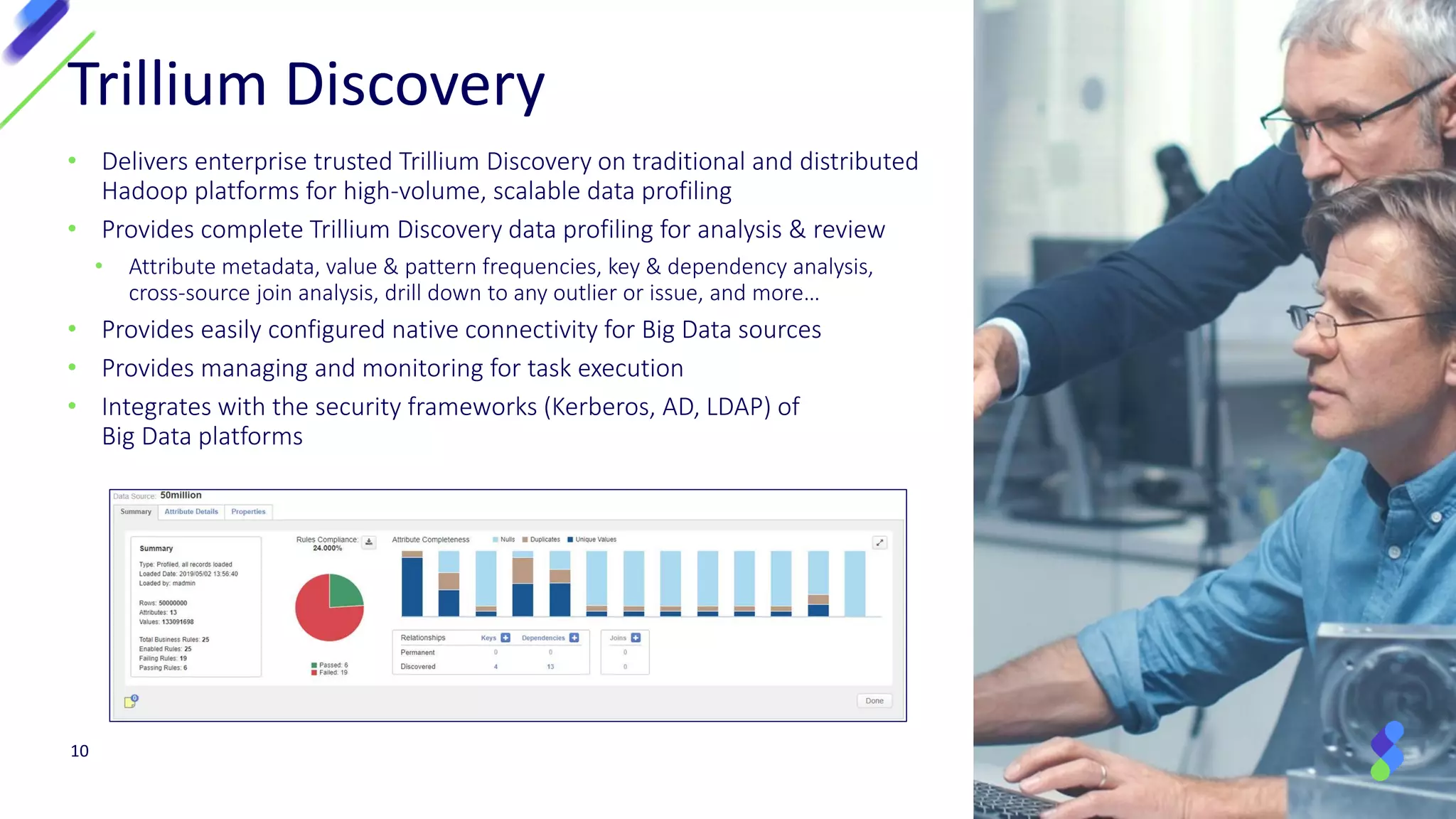

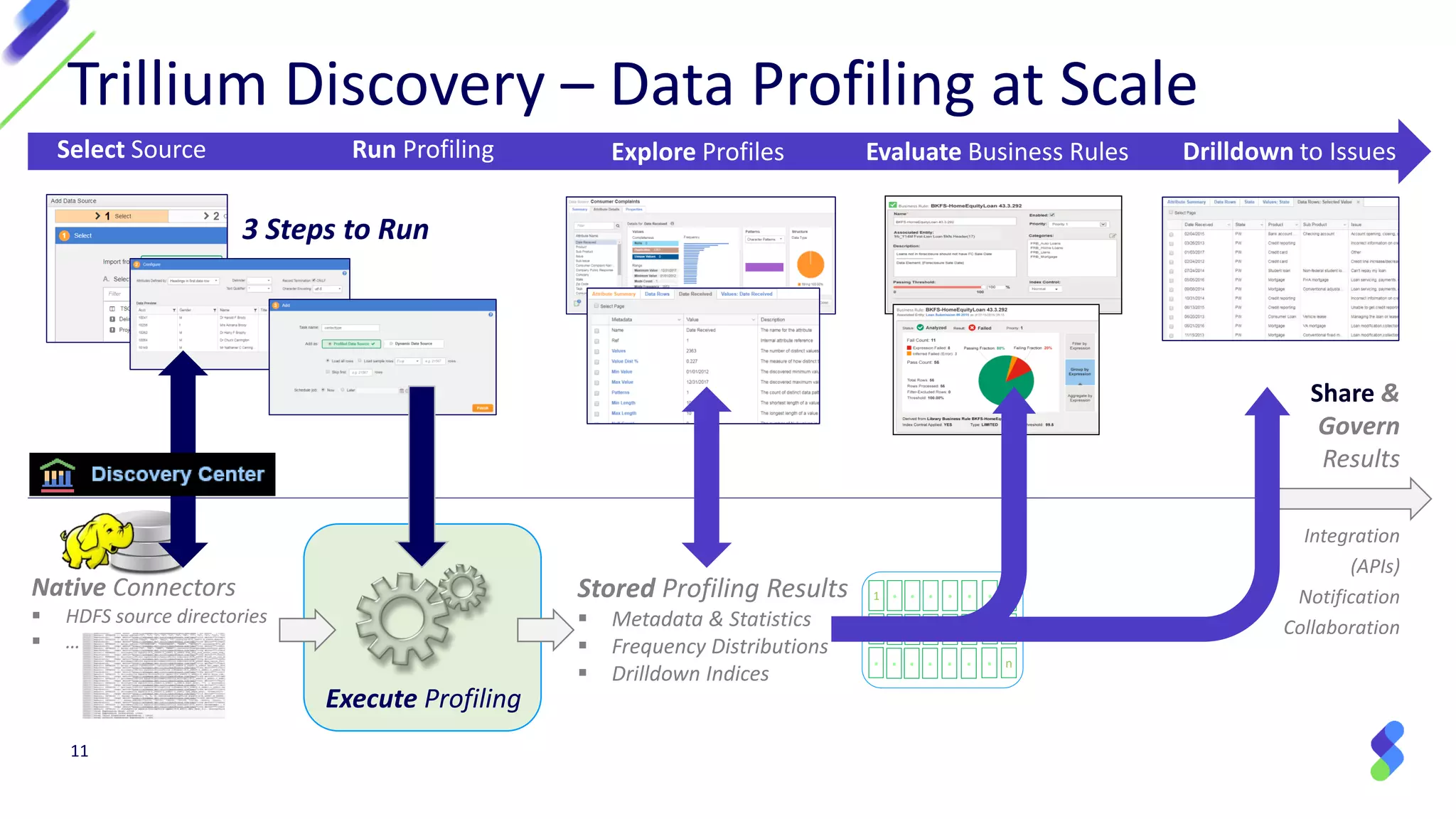

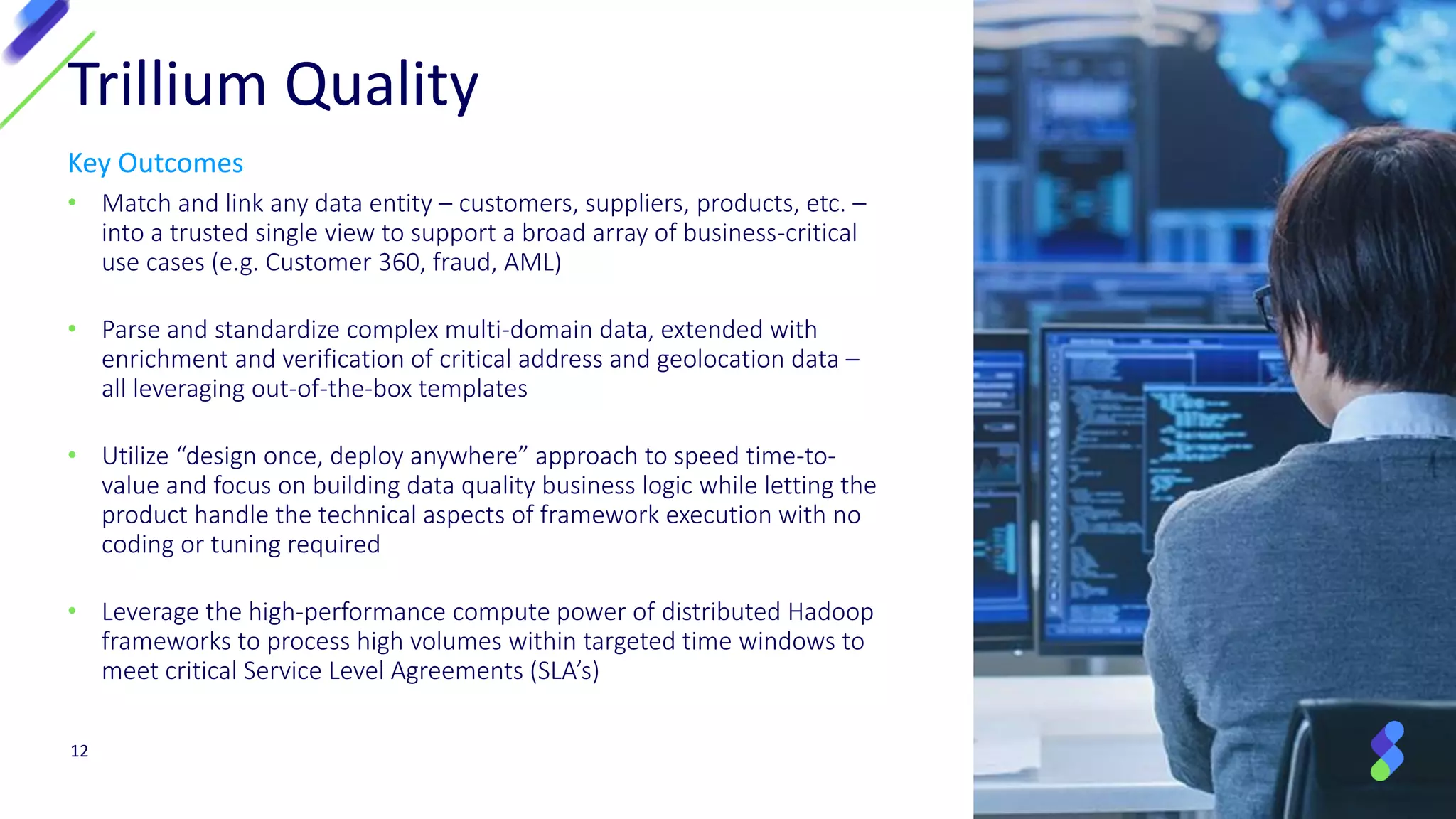

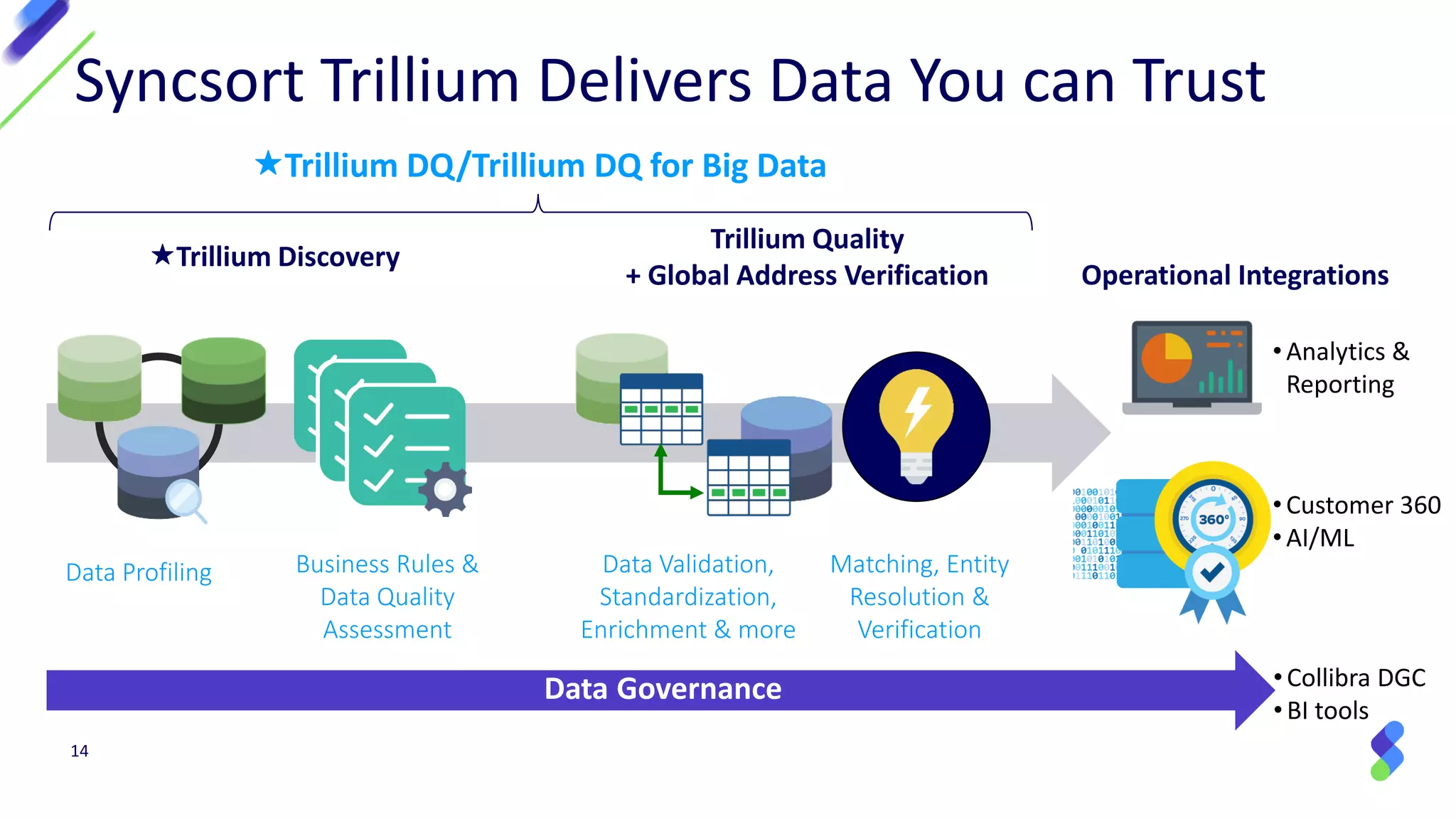

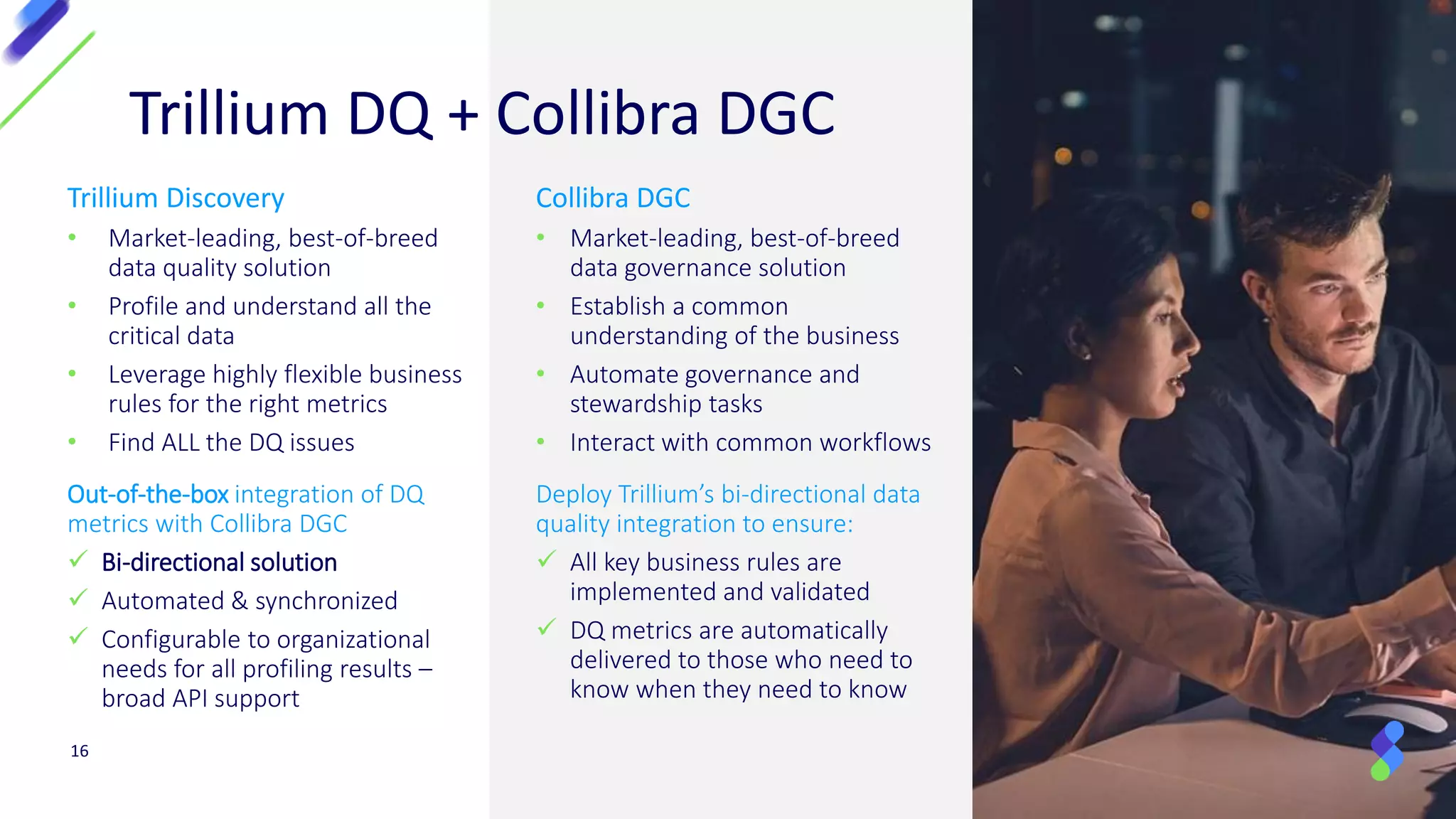

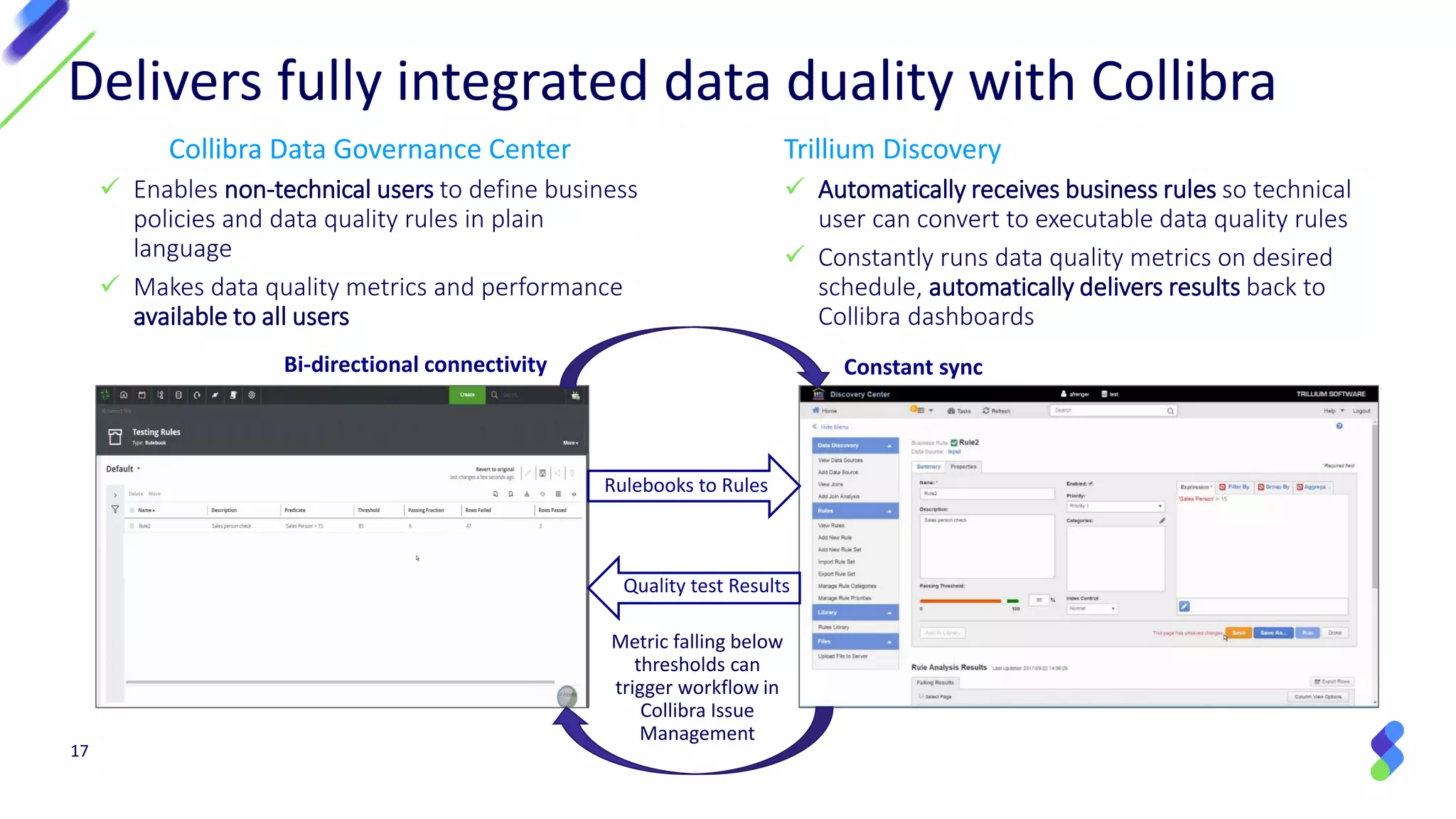

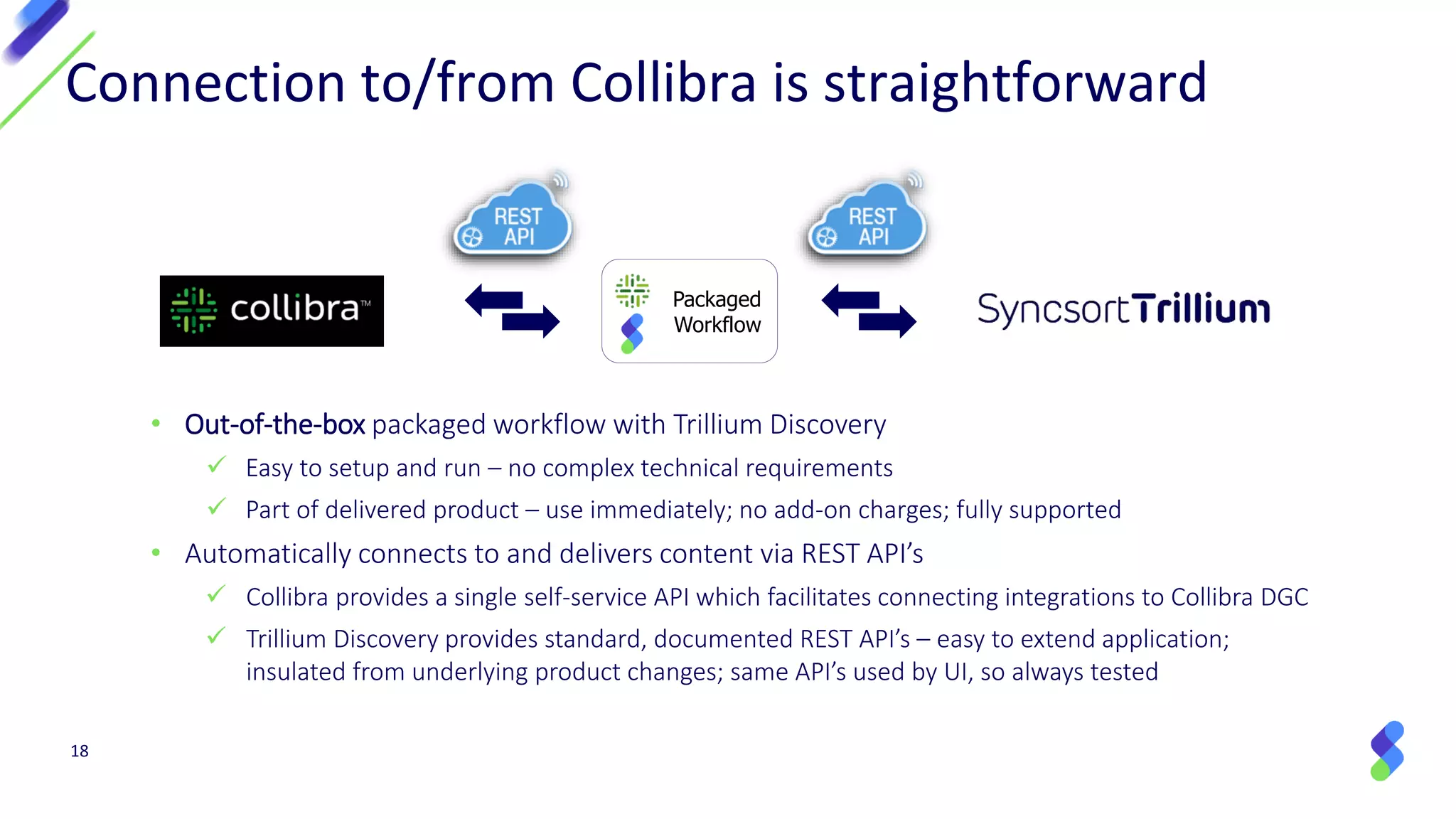

The document discusses the importance of data quality in big data analytics, highlighting two challenges: senior executives struggle to trust their data, and AI/ML projects stall because of poor data quality. It introduces Trillium DQ, a scalable data quality solution that integrates with platforms such as Amazon EMR and provides efficient data processing and governance. The document emphasizes that organizations need data quality capabilities to support decision-making and protect their corporate reputation.