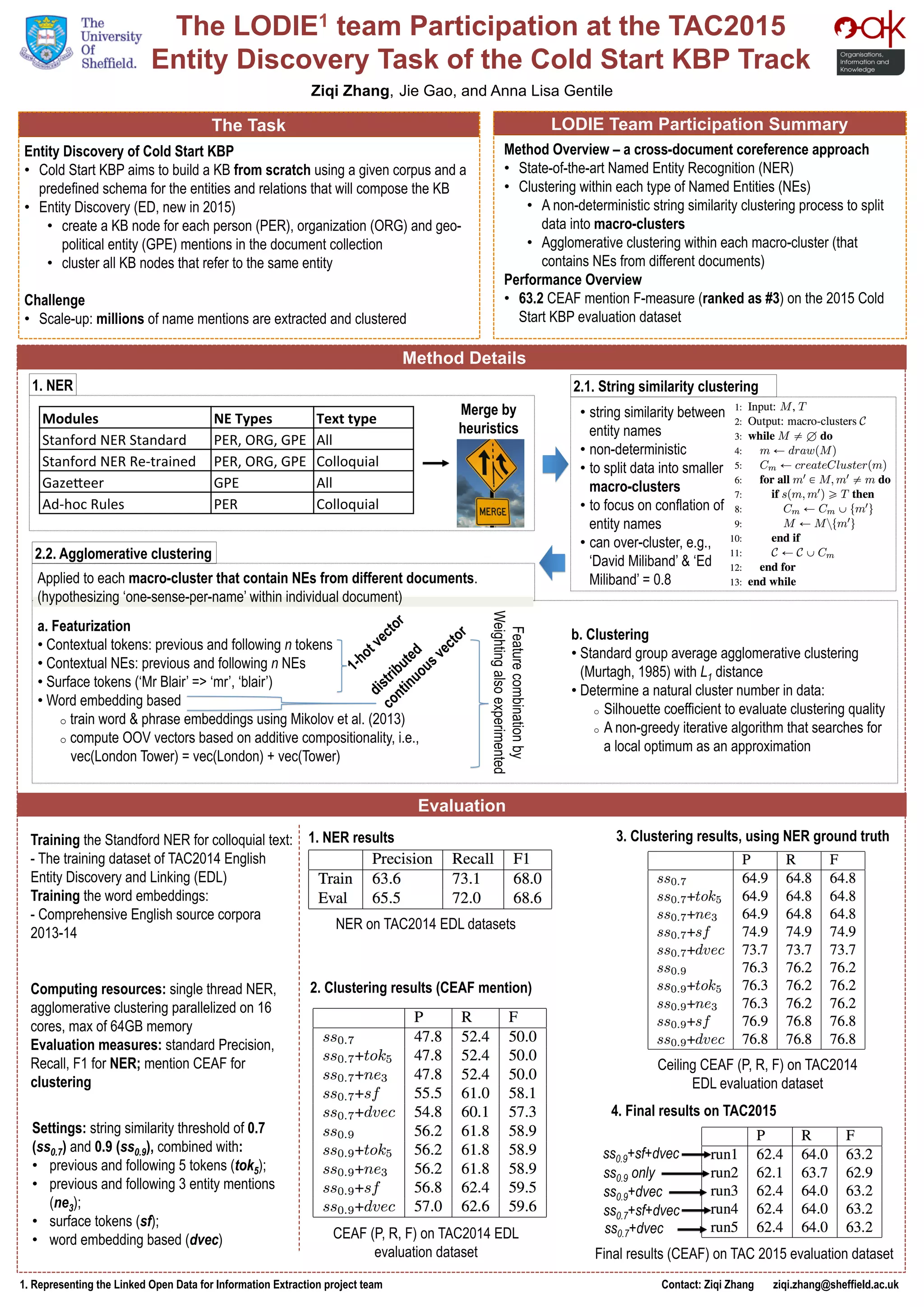

The document details the LODIE1 team's participation in the TAC2015 Cold Start KBP track, focusing on the entity discovery task that involves building a knowledge base from a corpus. It outlines the method of using a cross-document coreference approach for named entity recognition and clustering, achieving a CEAF mention F-measure of 63.2, ranking third in the evaluation. The evaluation processes and techniques used, such as string similarity clustering and agglomerative clustering, are described to assess performance.