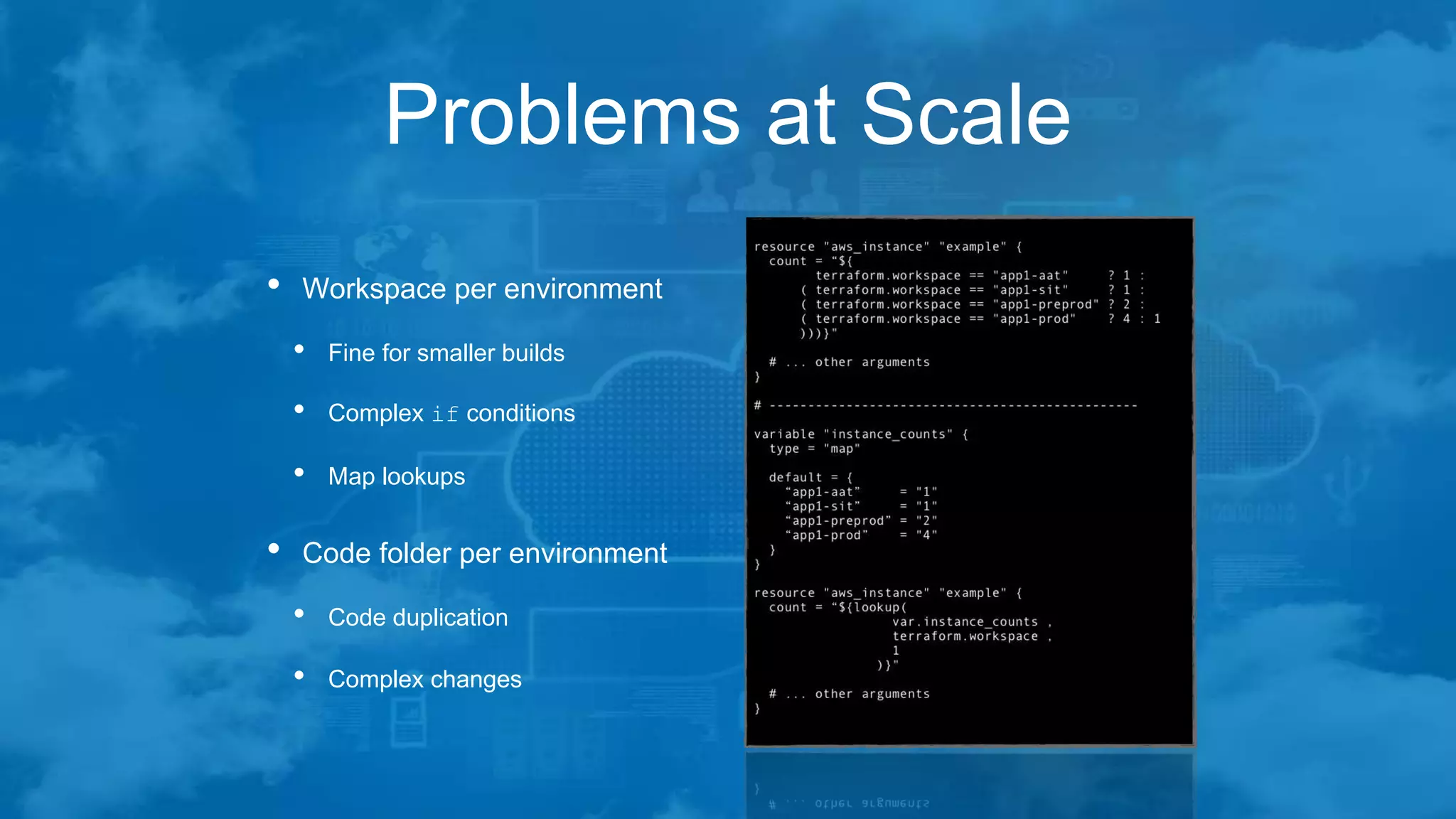

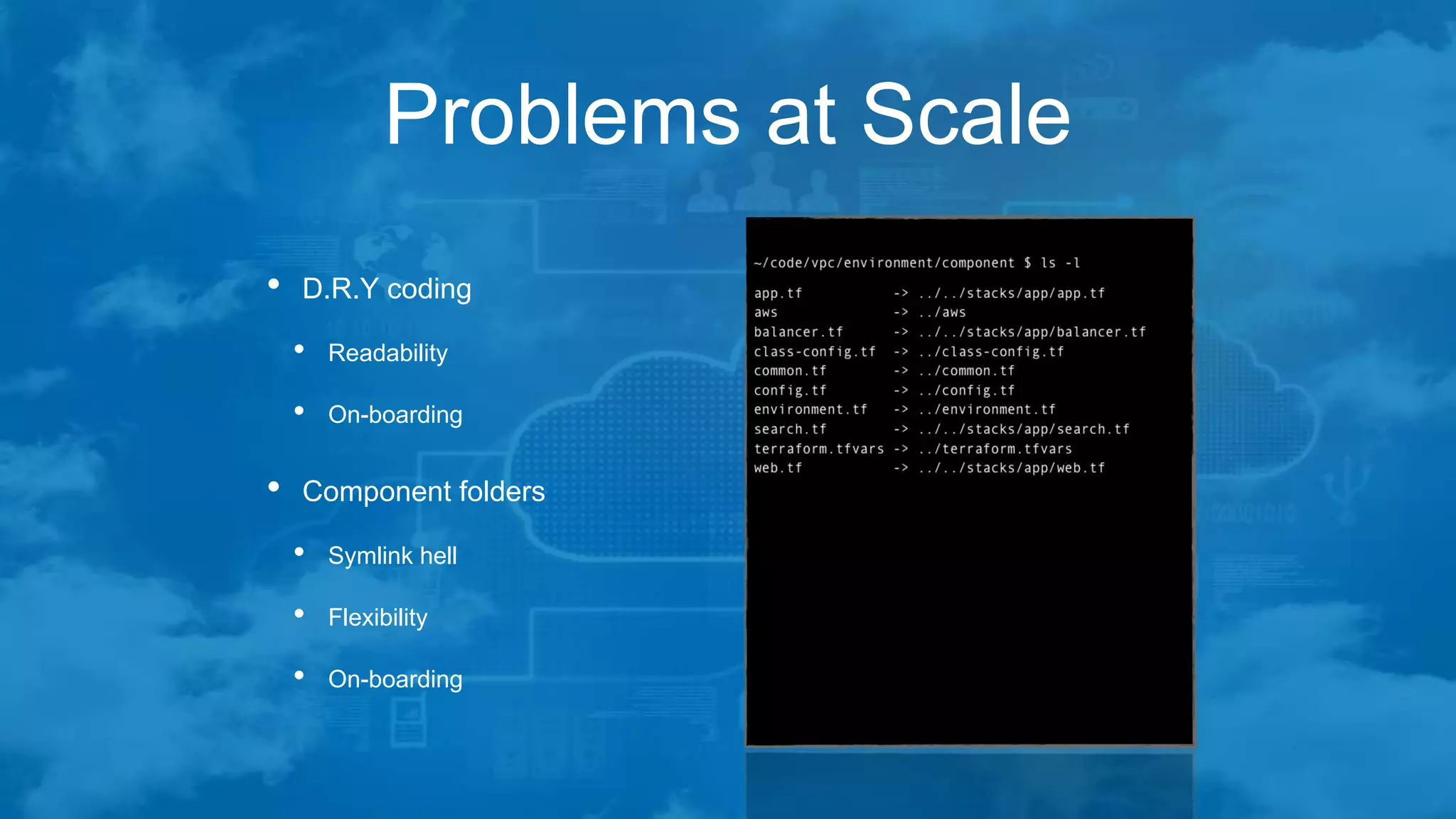

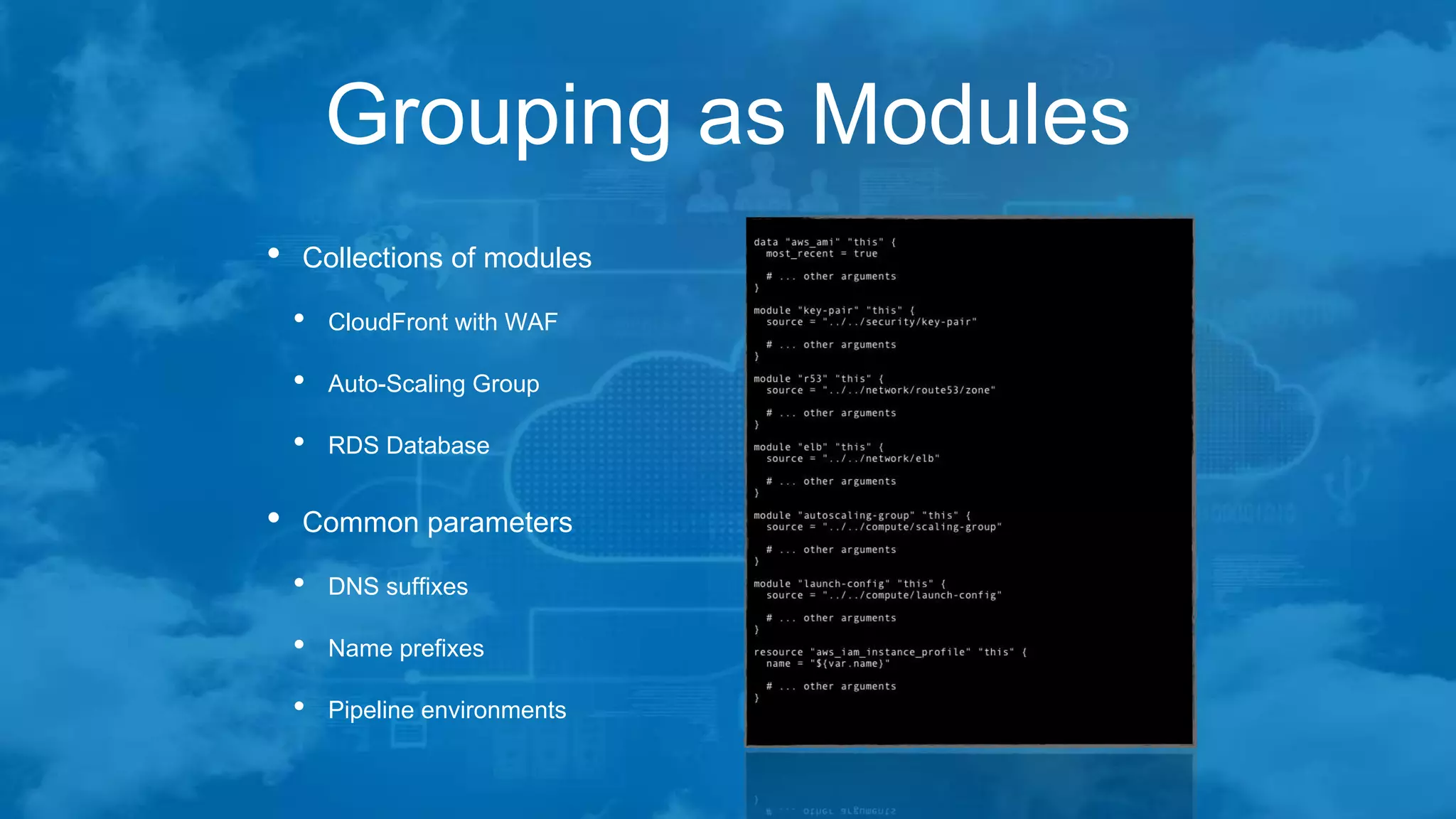

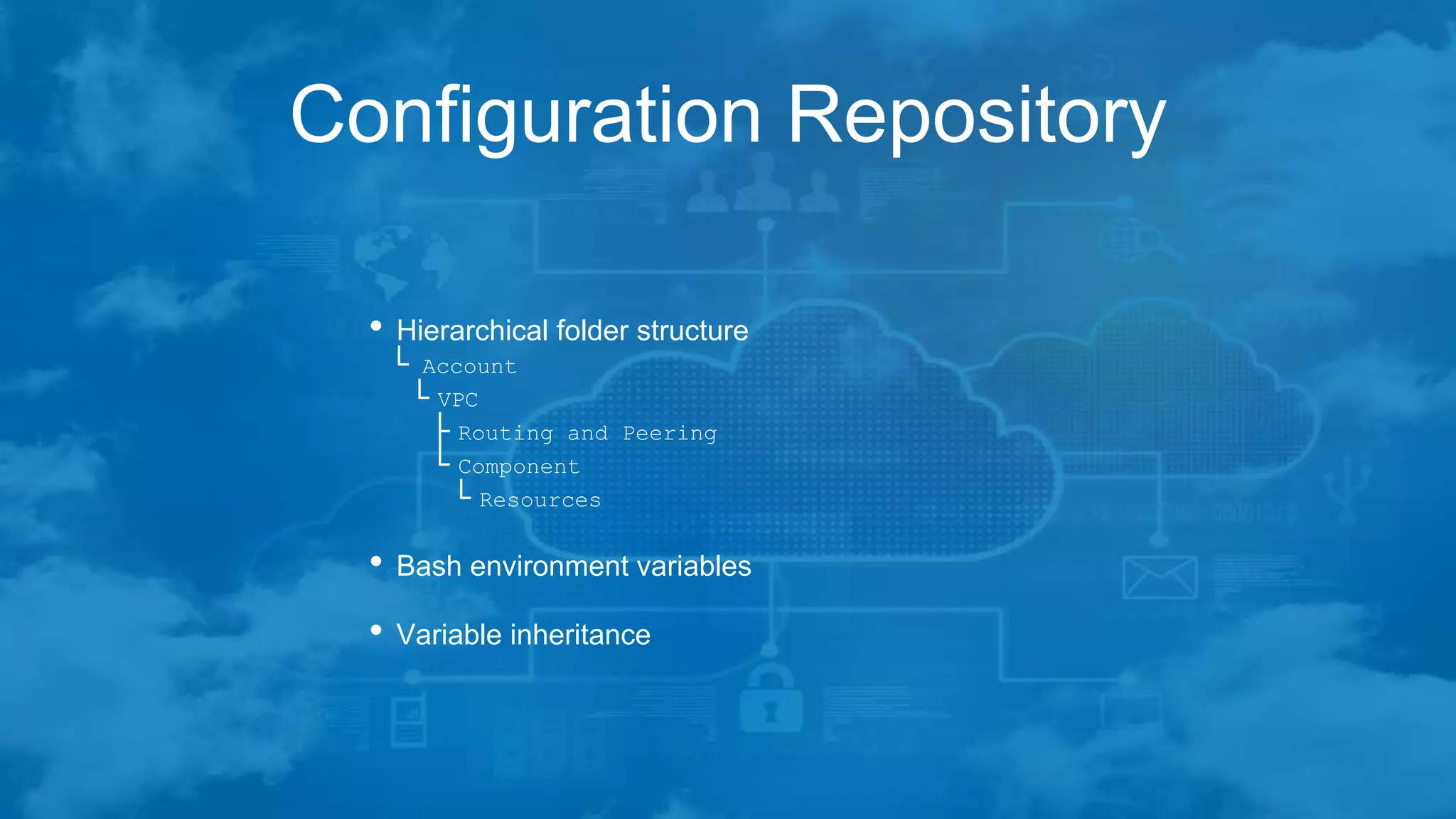

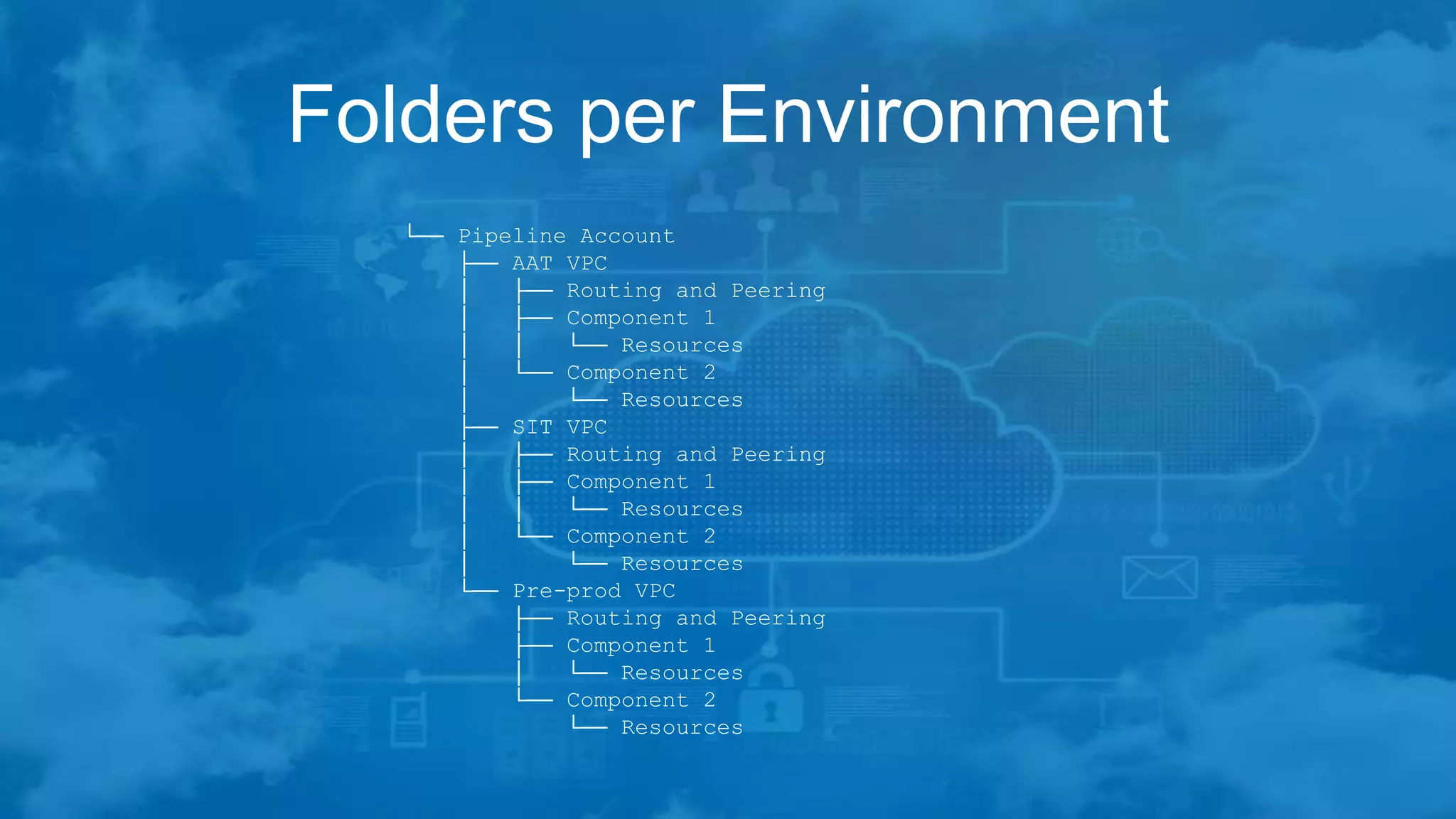

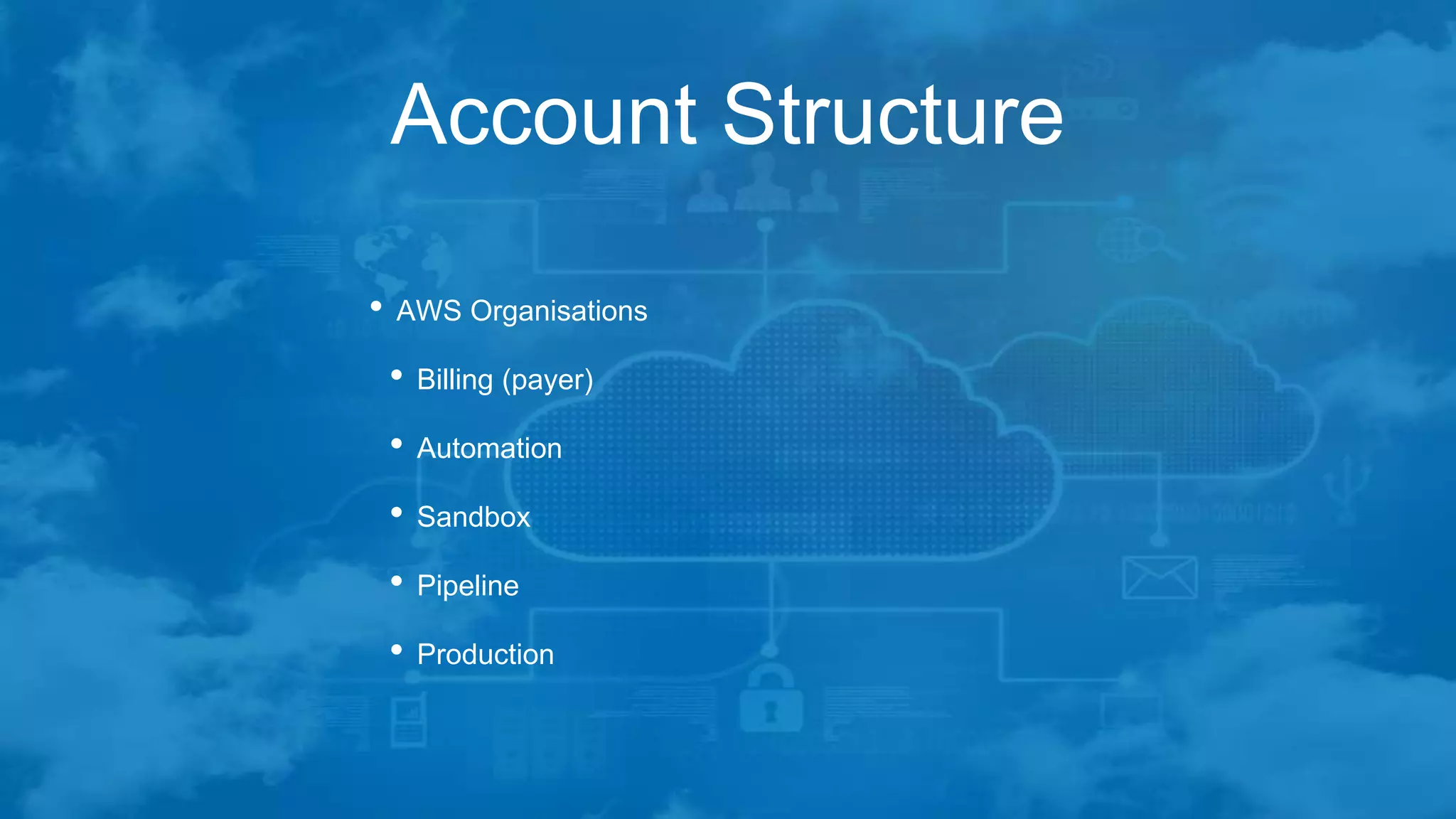

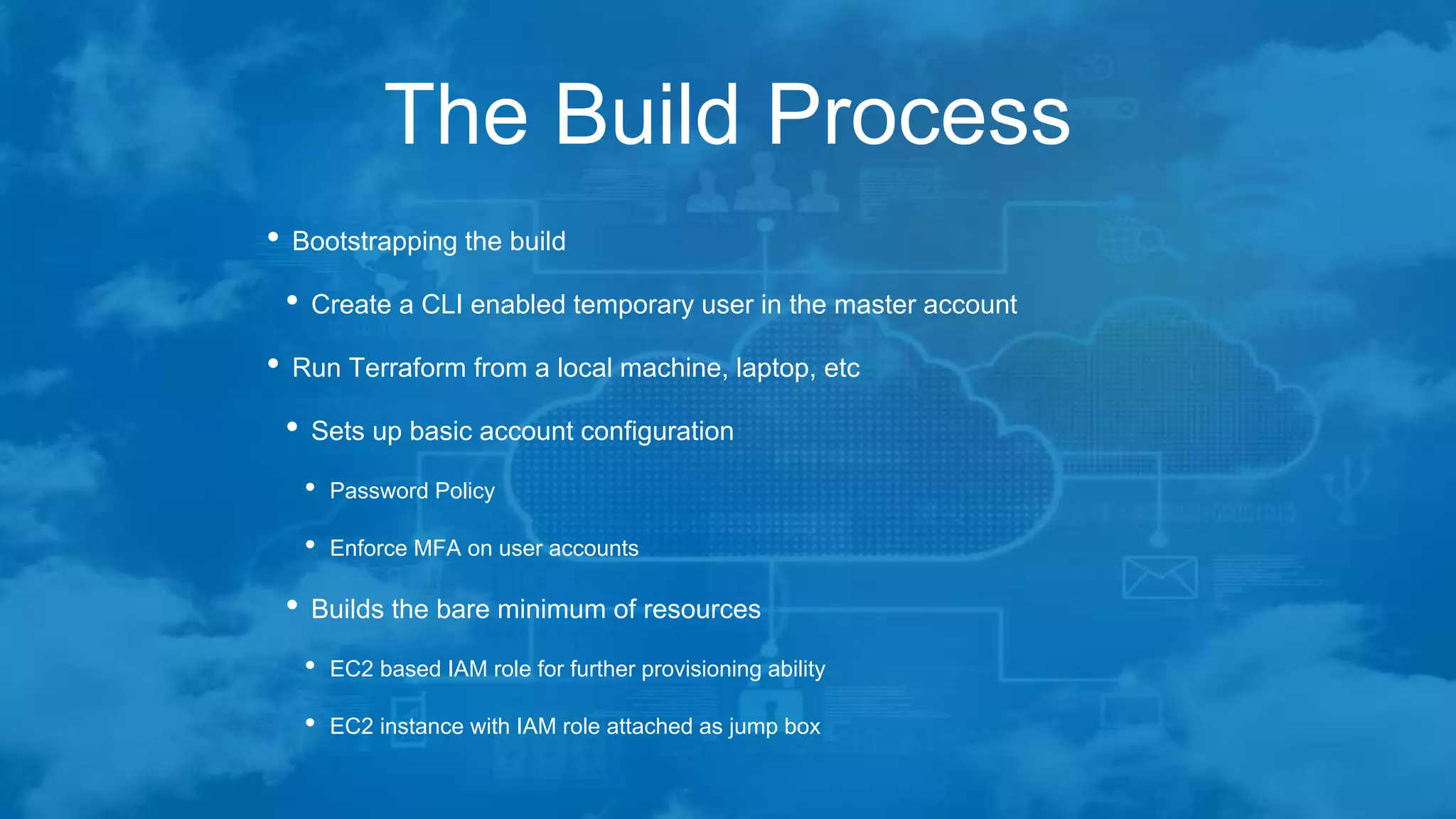

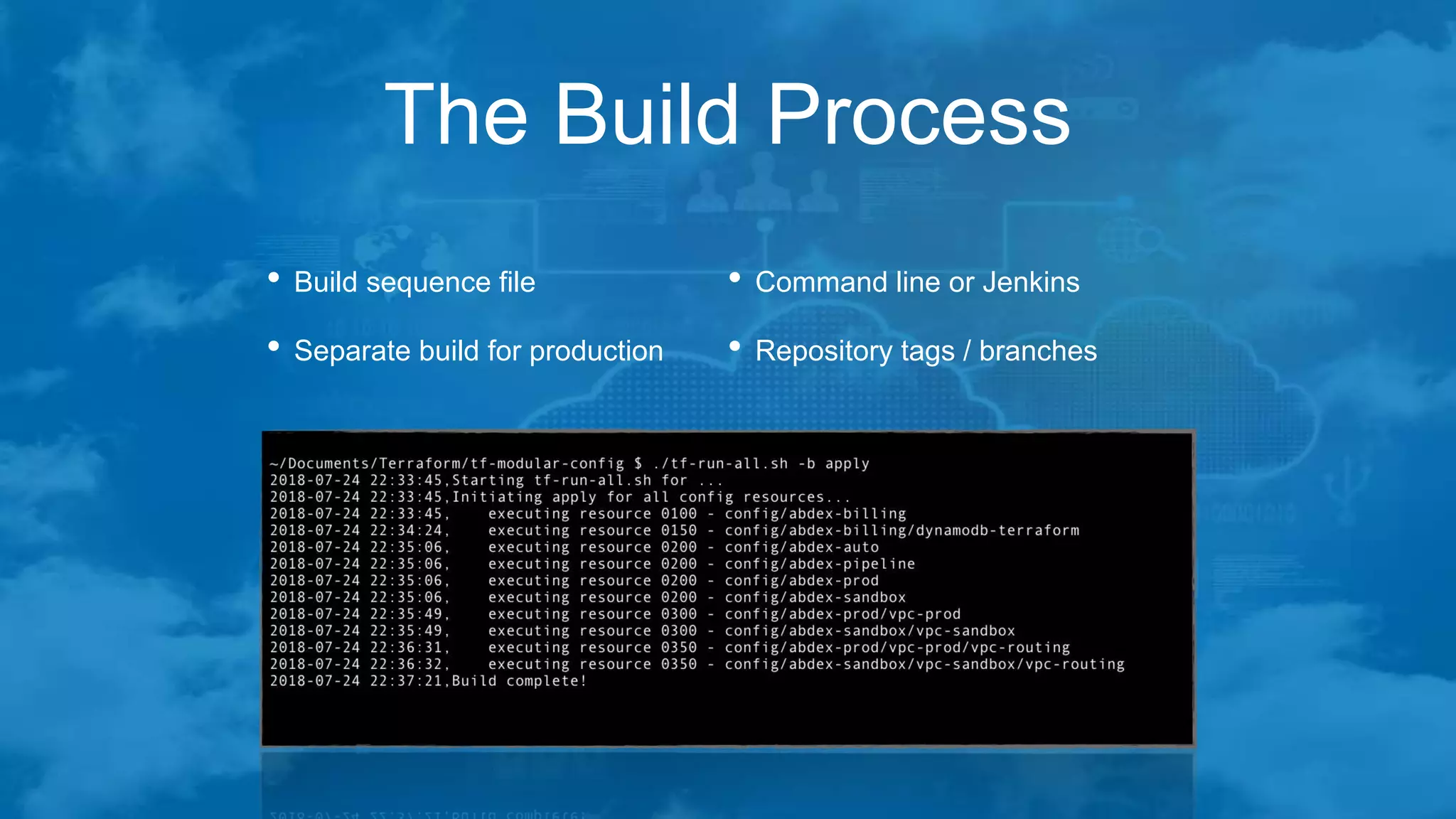

The document outlines best practices for managing Terraform at scale on AWS, addressing three challenges: code complexity, variable duplication, and environment management. It proposes a hierarchical folder structure for organizing Terraform code and emphasizes securing the build process through the use of EC2 jump boxes. It also summarizes the author's extensive IT experience and provides contact information for professional networking.
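
One way a hierarchical folder structure can reduce variable duplication is to define shared values once at a higher level and let deeper folders add or override them. The sketch below is illustrative only and is not taken from the slides: the folder names, the `terraform.tfvars` file name at each level, and the helper functions `collect_var_files` and `plan_command` are all assumptions. It relies only on Terraform's documented `-var-file` option, where values from later files take precedence over earlier ones.

```python
from pathlib import Path


def collect_var_files(root: Path, leaf: Path, name: str = "terraform.tfvars") -> list[Path]:
    """Return every `name` file on the path from root down to leaf, most general first.

    Hypothetical helper: the hierarchy and file name are assumptions.
    """
    root, leaf = root.resolve(), leaf.resolve()
    relative = leaf.relative_to(root)  # raises ValueError if leaf is not under root
    found = []
    current = root
    # Visit root itself first, then each directory level down to the leaf.
    for part in ("", *relative.parts):
        if part:
            current = current / part
        candidate = current / name
        if candidate.is_file():
            found.append(candidate)
    return found


def plan_command(root: Path, leaf: Path) -> list[str]:
    """Build a `terraform plan` argument list with one -var-file per level found."""
    args = ["terraform", "plan"]
    # Later -var-file entries override earlier ones, so deeper levels win.
    args += [f"-var-file={path}" for path in collect_var_files(root, leaf)]
    return args
```

With a hypothetical layout such as `live/terraform.tfvars` and `live/aws/prod/terraform.tfvars`, calling `plan_command(Path("live"), Path("live/aws/prod/vpc"))` yields a command that passes both files, general values first. The command would then be run from the leaf directory that holds the Terraform configuration.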