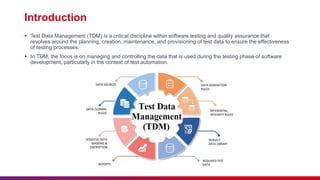

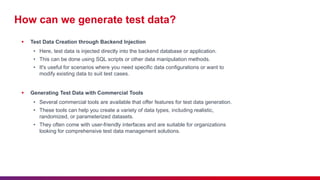

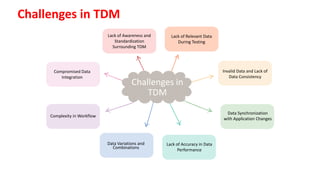

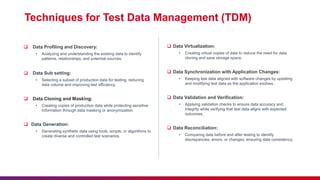

The document presents techniques for effective test data management (TDM) in test automation and explains why the data used during software testing needs to be managed deliberately. It covers the main types of test data, methods for generating test data, and best practices for implementing TDM, such as data masking and up-front planning. It also outlines common TDM challenges and the importance of data security and compliance.
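The summary names two concrete techniques, generating test data and masking it. The slide images do not show the deck's own implementation, so the following is a minimal sketch under stated assumptions, not the author's method. It covers seeded synthetic generation, where a fixed seed makes every test run see the same rows, and masking of production-like records before they reach a test environment. The function names, the field names (`name`, `email`, `card`), the salt value, and the keep-last-four-digits rule are all hypothetical choices made for illustration.

```python
import hashlib
import random
import re

# Hypothetical name pools for synthetic rows; any realistic-looking values would do.
FIRST_NAMES = ["Ana", "Ben", "Chen", "Dara"]
LAST_NAMES = ["Ito", "Kim", "Lopez", "Novak"]


def generate_customers(n: int, seed: int = 1234) -> list[dict]:
    """Build n synthetic customer rows; the same seed always yields the same rows."""
    rng = random.Random(seed)  # private RNG so other code cannot disturb the sequence
    rows = []
    for i in range(1, n + 1):
        rows.append({
            "id": i,
            "name": f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
            "email": f"customer{i}@example.com",  # reserved domain, never a real inbox
            # 16 random digits: card-shaped, but not valid card numbers (no Luhn check)
            "card": "".join(str(rng.randint(0, 9)) for _ in range(16)),
        })
    return rows


def mask_email(email: str, salt: str = "test-salt") -> str:
    """Deterministically pseudonymize an email so equal inputs still match across tables."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.com"


def mask_card(number: str) -> str:
    """Keep only the last four digits and replace the rest with '*'."""
    digits = re.sub(r"\D", "", number)  # drop spaces and dashes
    return "*" * max(len(digits) - 4, 0) + digits[-4:]


def mask_record(record: dict) -> dict:
    """Return a masked copy of a customer record; the input dict is left unchanged."""
    masked = dict(record)
    masked["name"] = "Test User"
    masked["email"] = mask_email(record["email"])
    masked["card"] = mask_card(record["card"])
    return masked


if __name__ == "__main__":
    for row in generate_customers(3):
        print(row)
    prod_like = {"id": 42, "name": "Jane Doe",
                 "email": "jane.doe@corp.com", "card": "4111 1111 1111 1111"}
    print(mask_record(prod_like))
```

Hashing the email, rather than replacing it with random text, is a deliberate choice in this sketch. The same source address always maps to the same masked address, so records that were linked by email in the source data stay linked after masking. The salt keeps the mapping from being trivially reversed by hashing guessed addresses, but in practice it would need to be stored as a secret rather than hard-coded.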