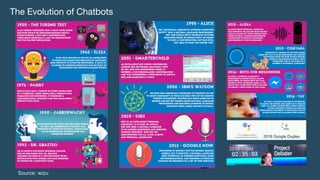

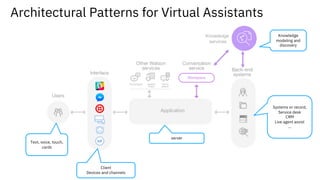

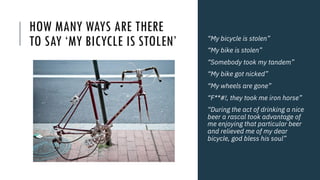

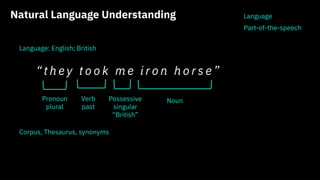

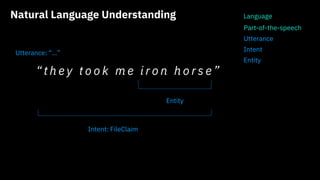

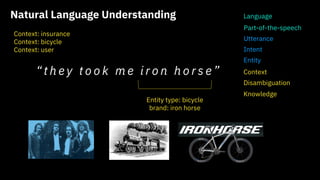

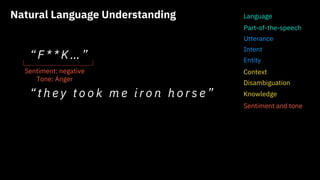

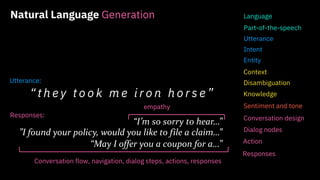

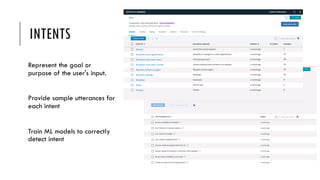

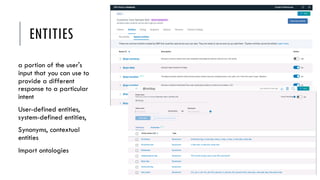

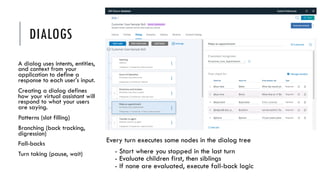

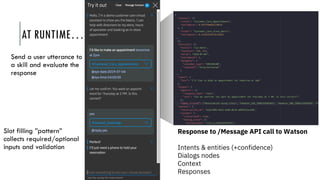

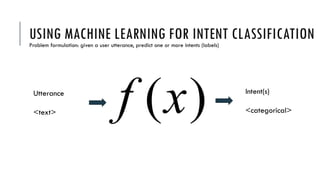

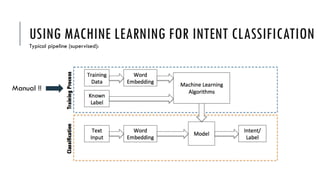

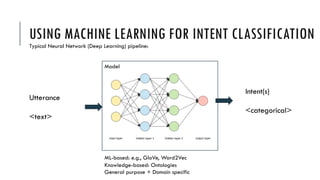

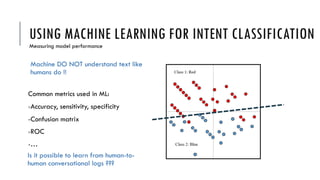

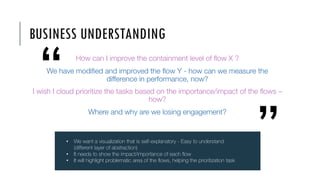

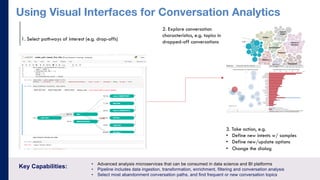

The presentation traces the evolution of chatbots and emphasizes the role of AI and natural language processing in improving customer experiences through conversational interfaces. It highlights the need to train machines to understand human dialogue and intent, and it addresses challenges in dialogue design and personalization. It also covers organizational roles in chatbot development, performance analytics, and the importance of iteratively improving conversation design.