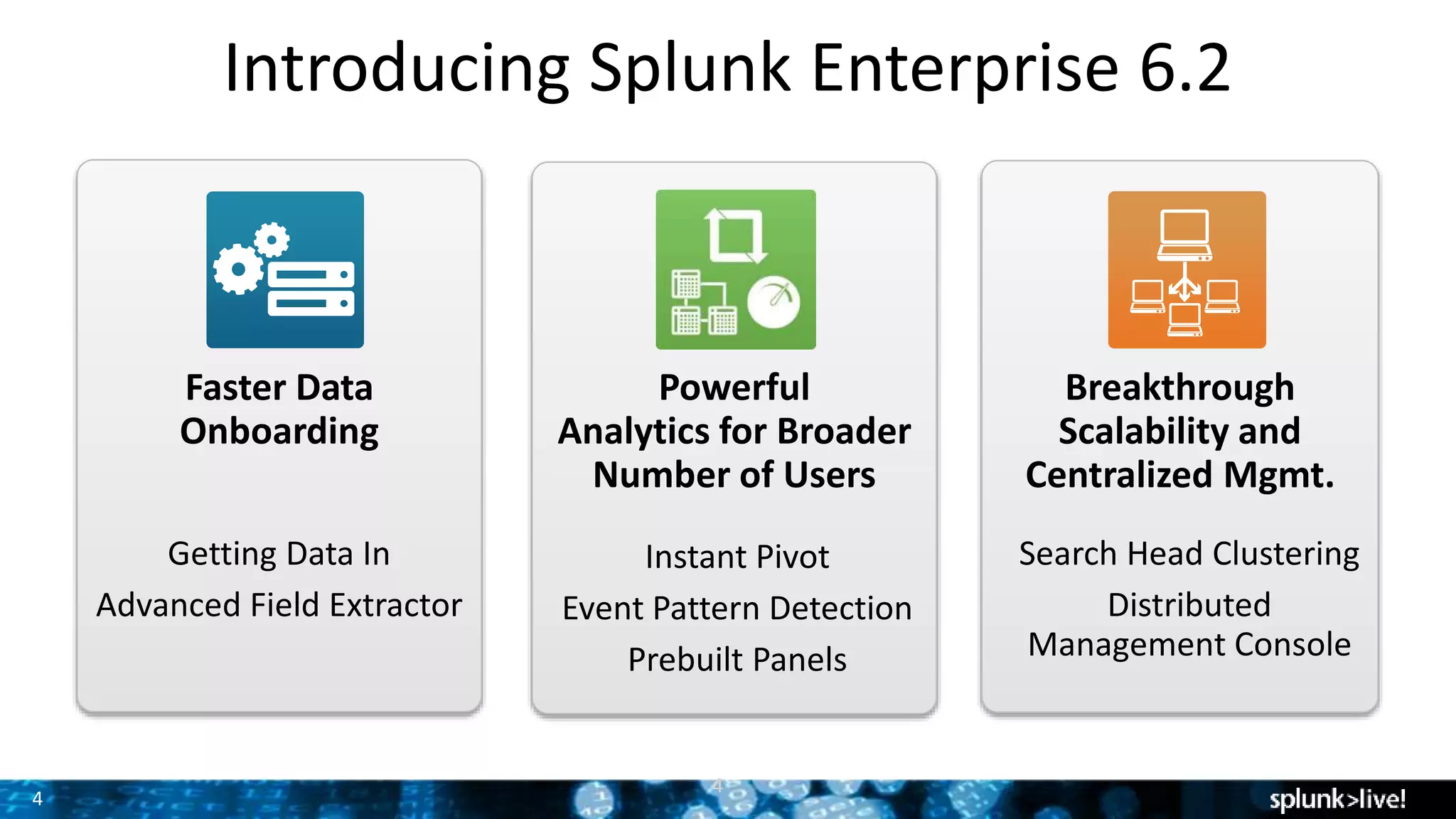

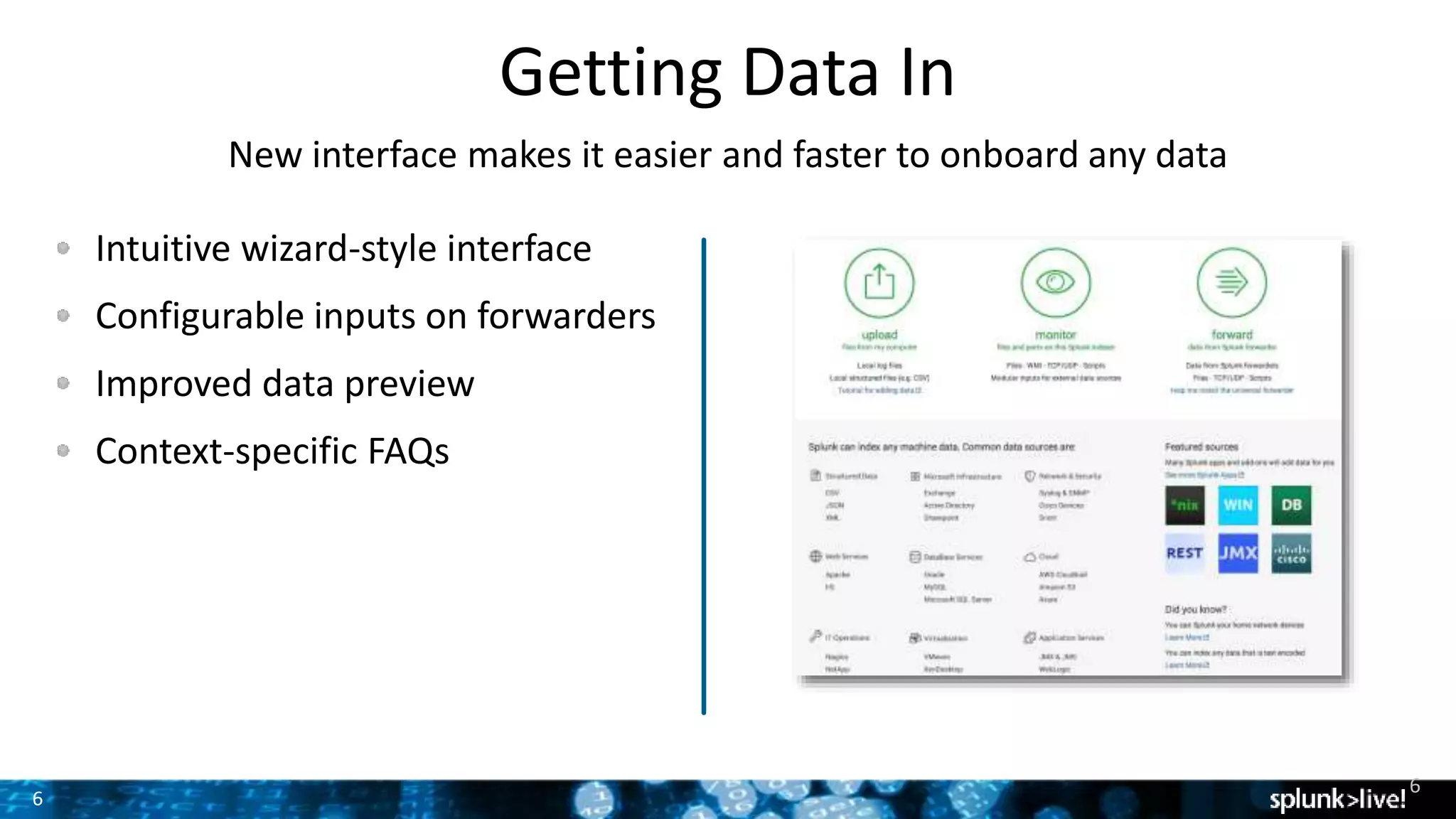

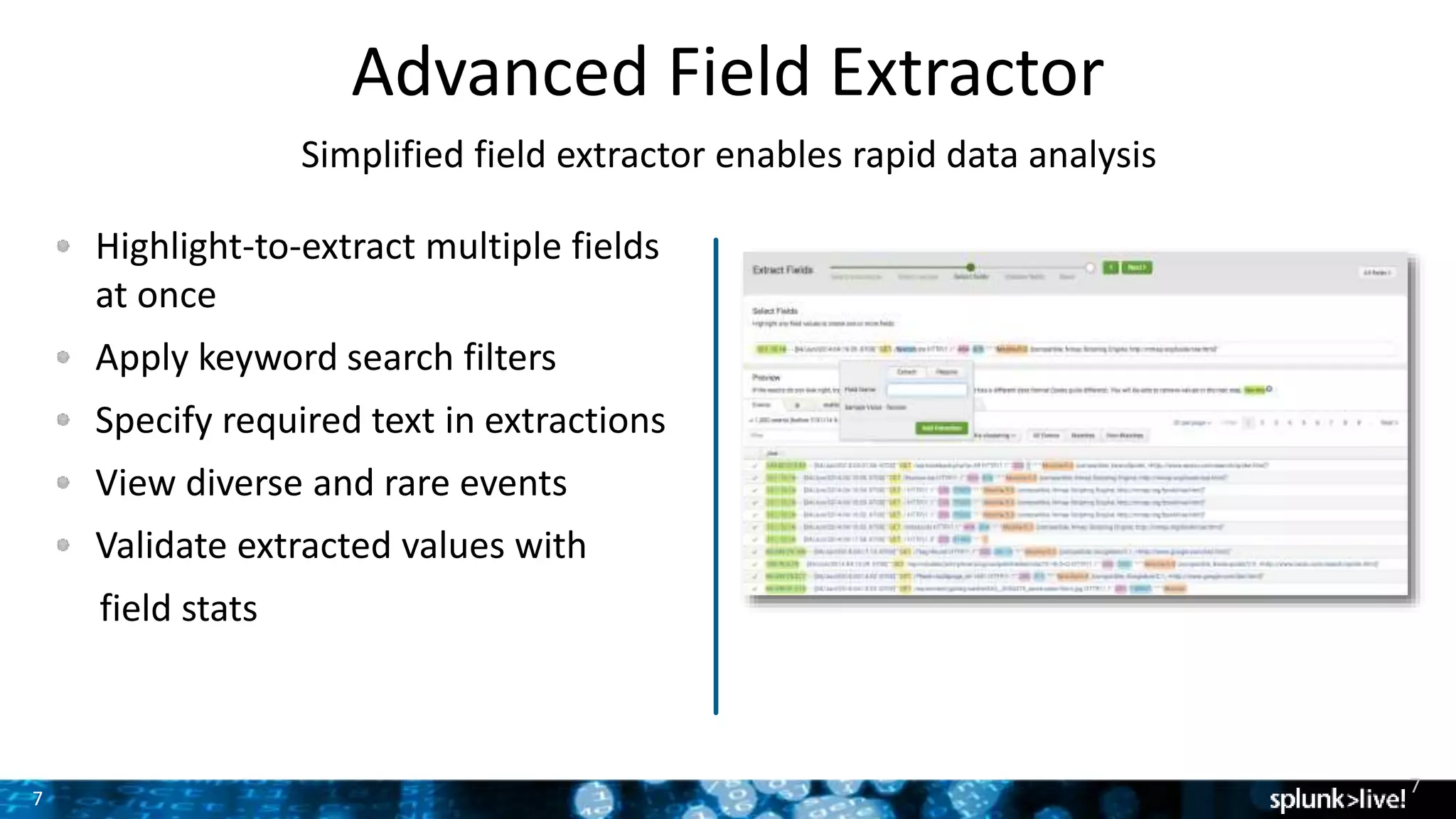

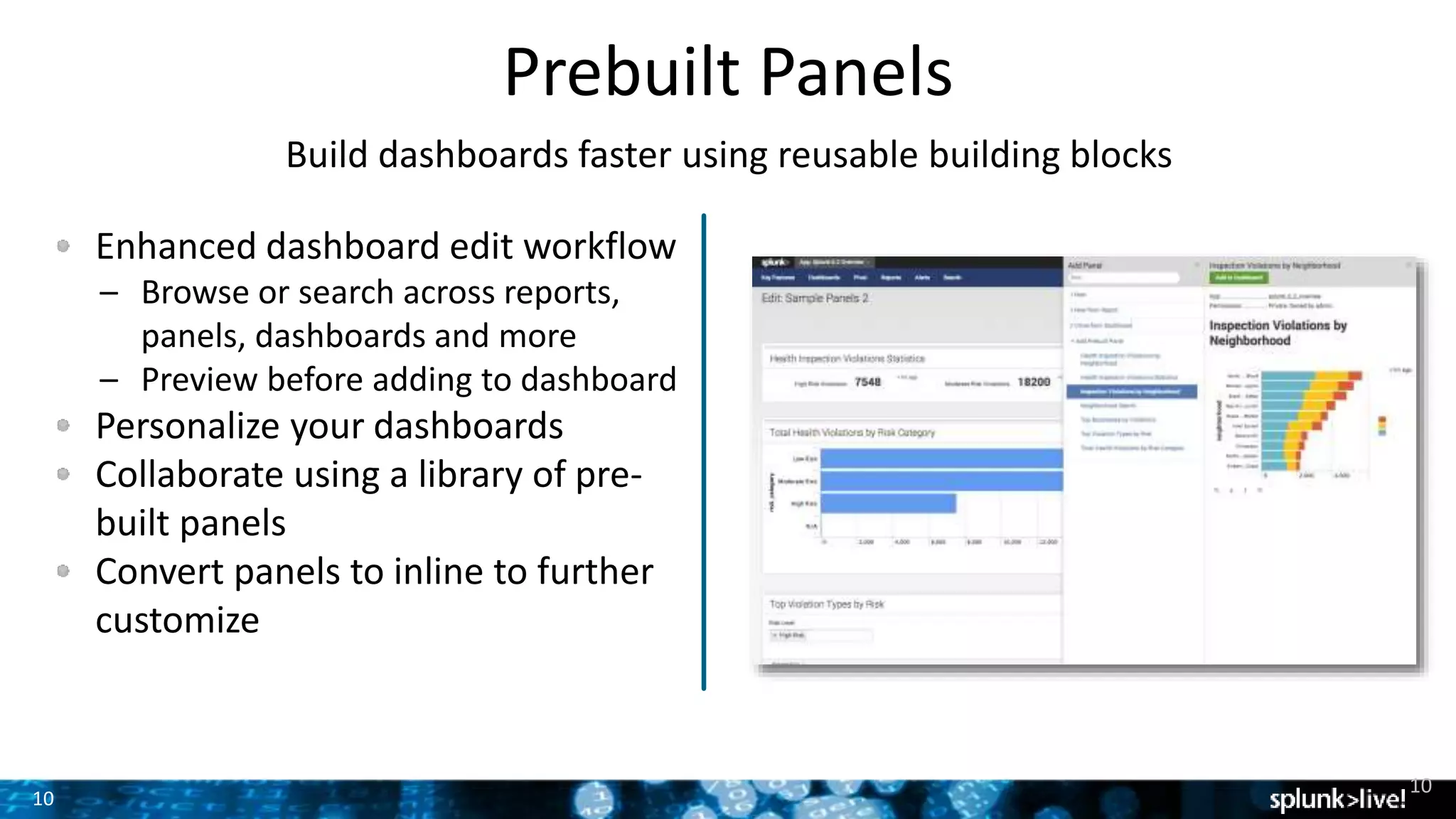

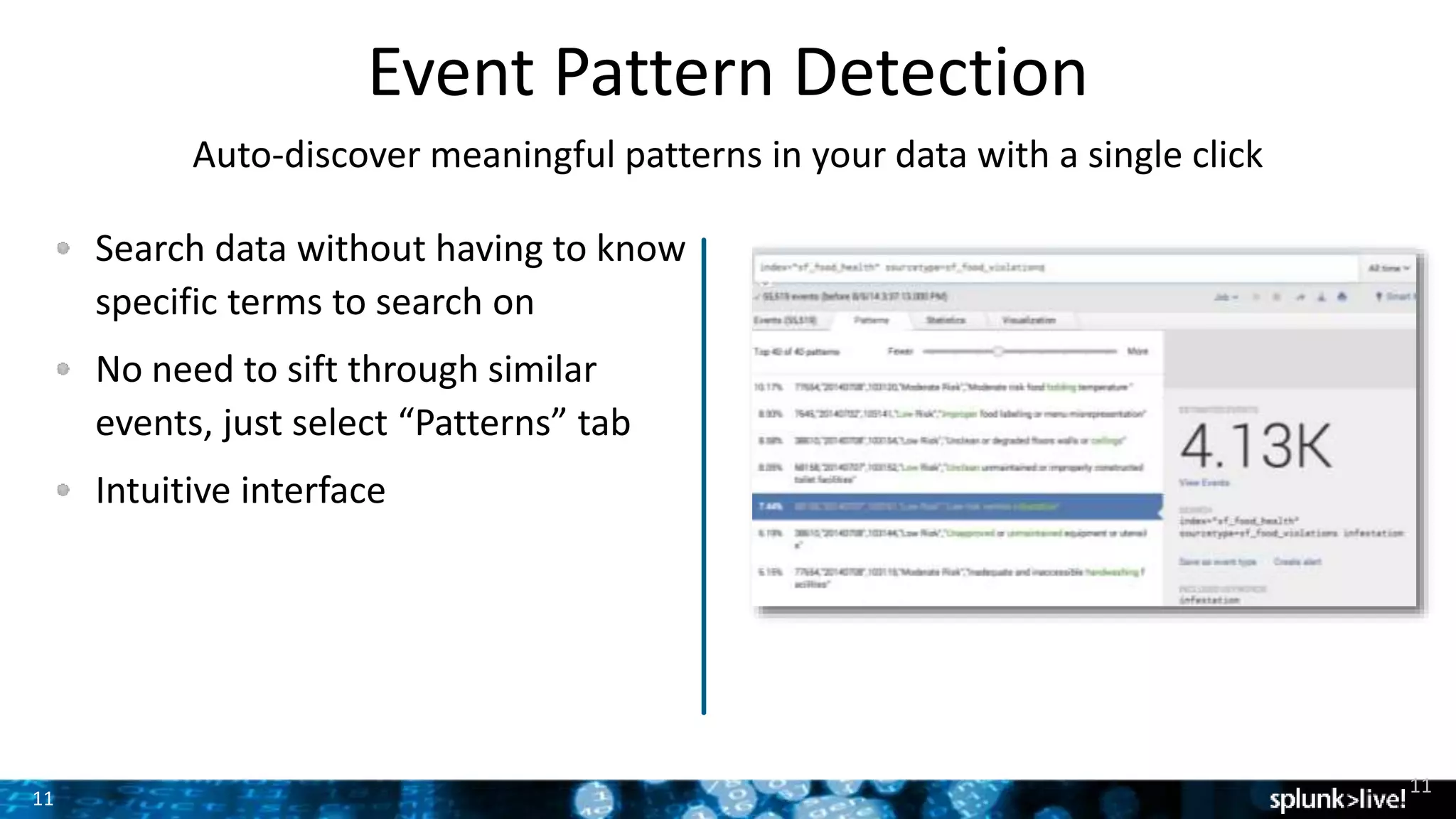

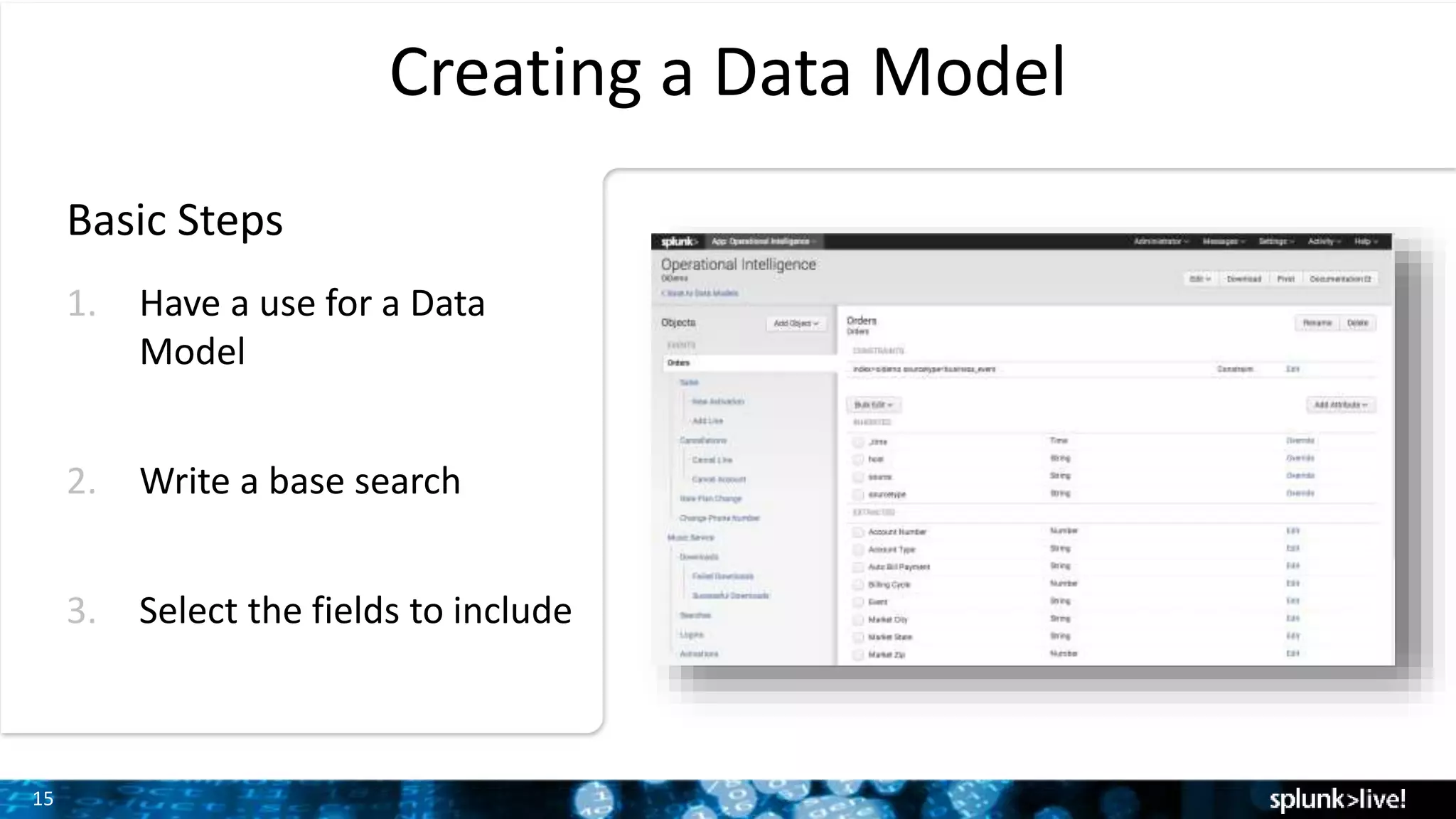

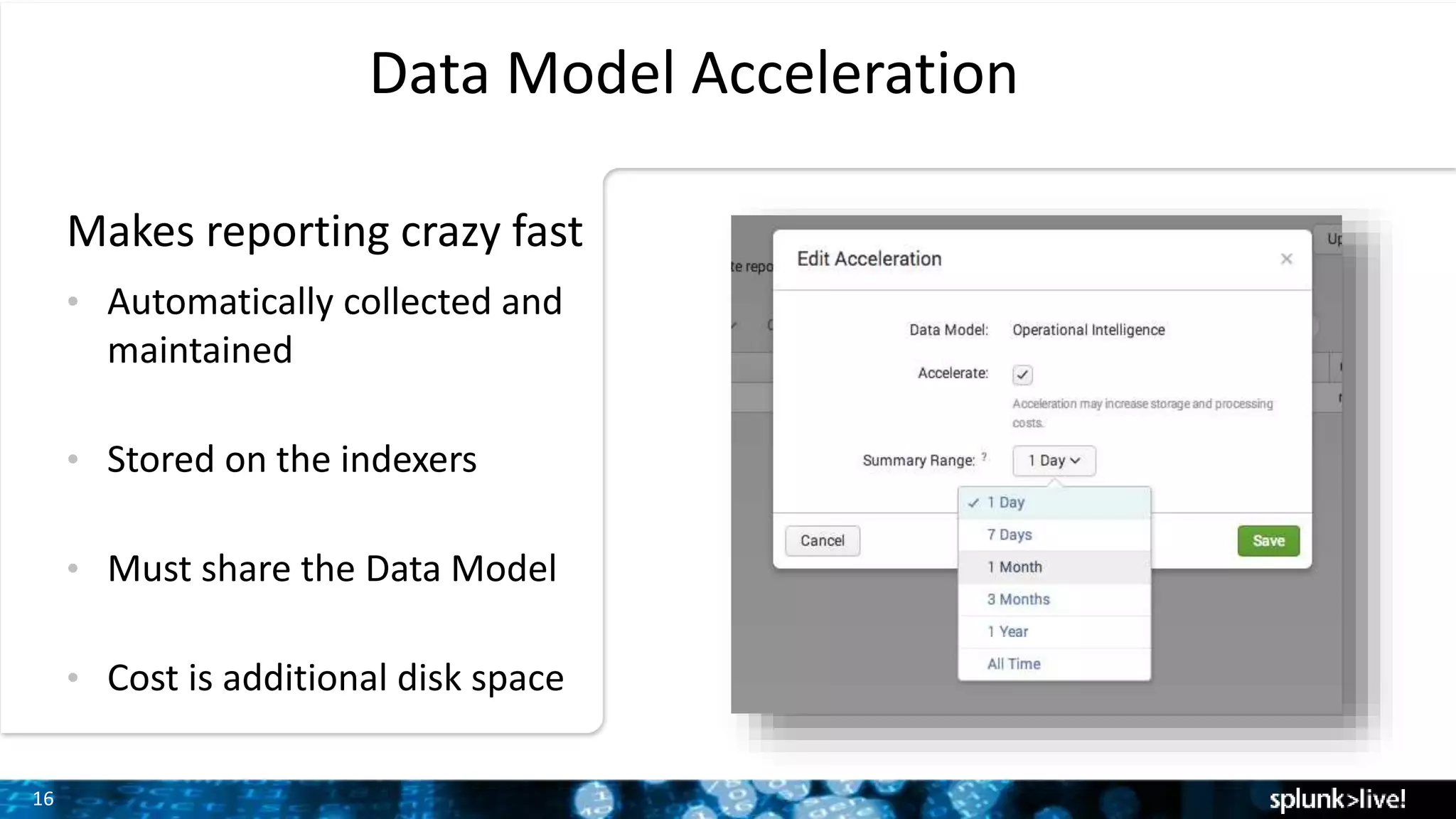

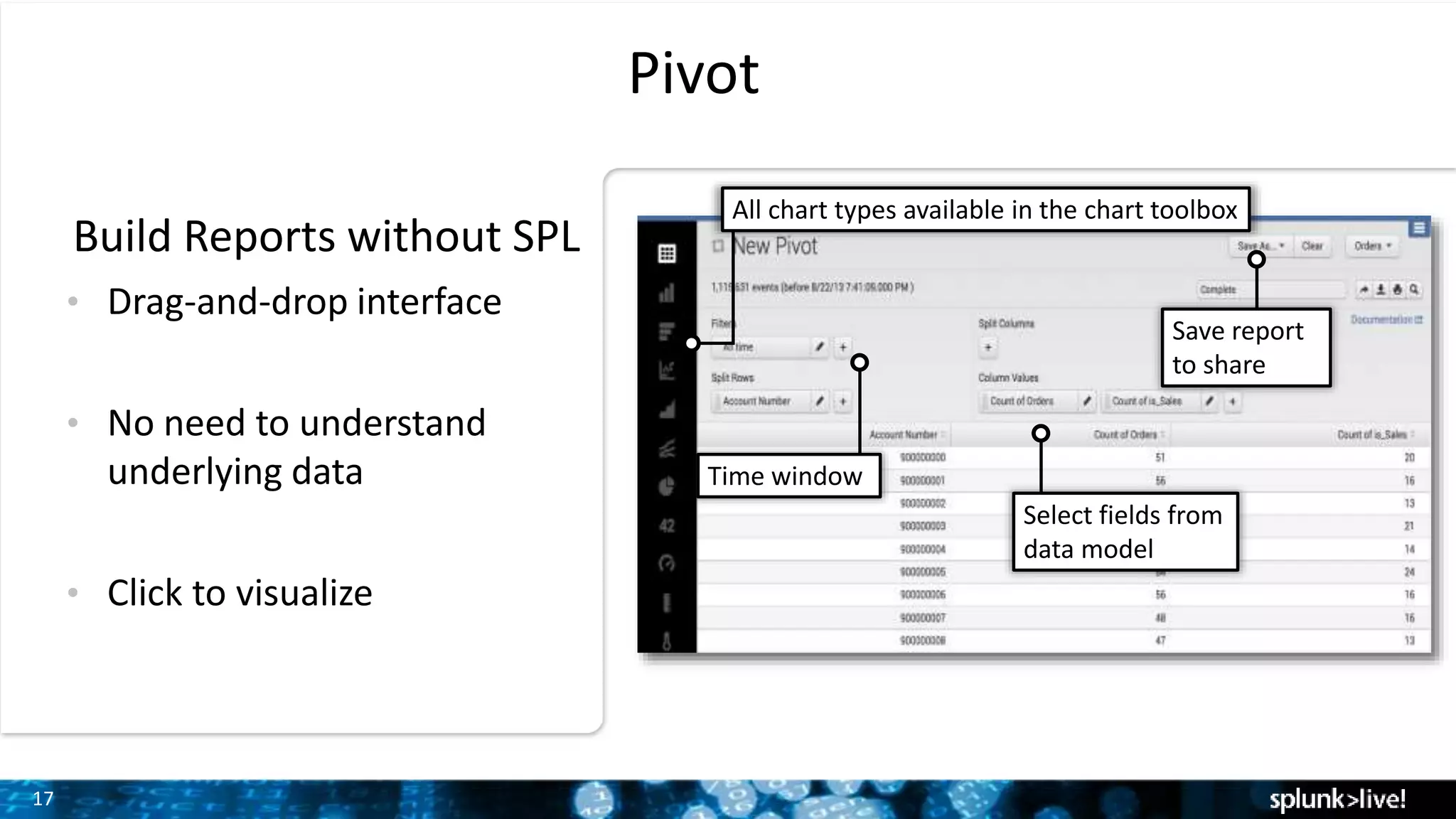

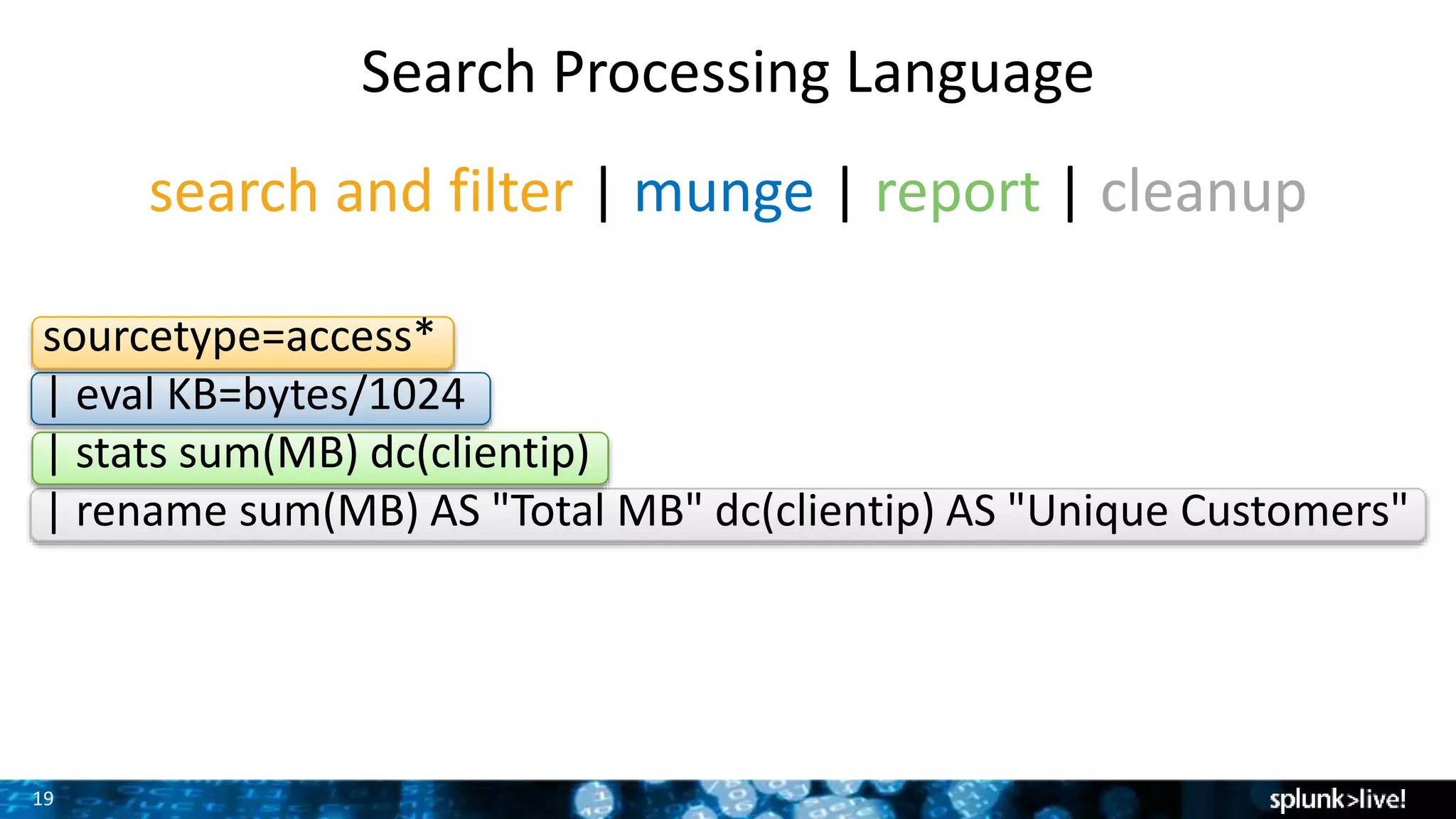

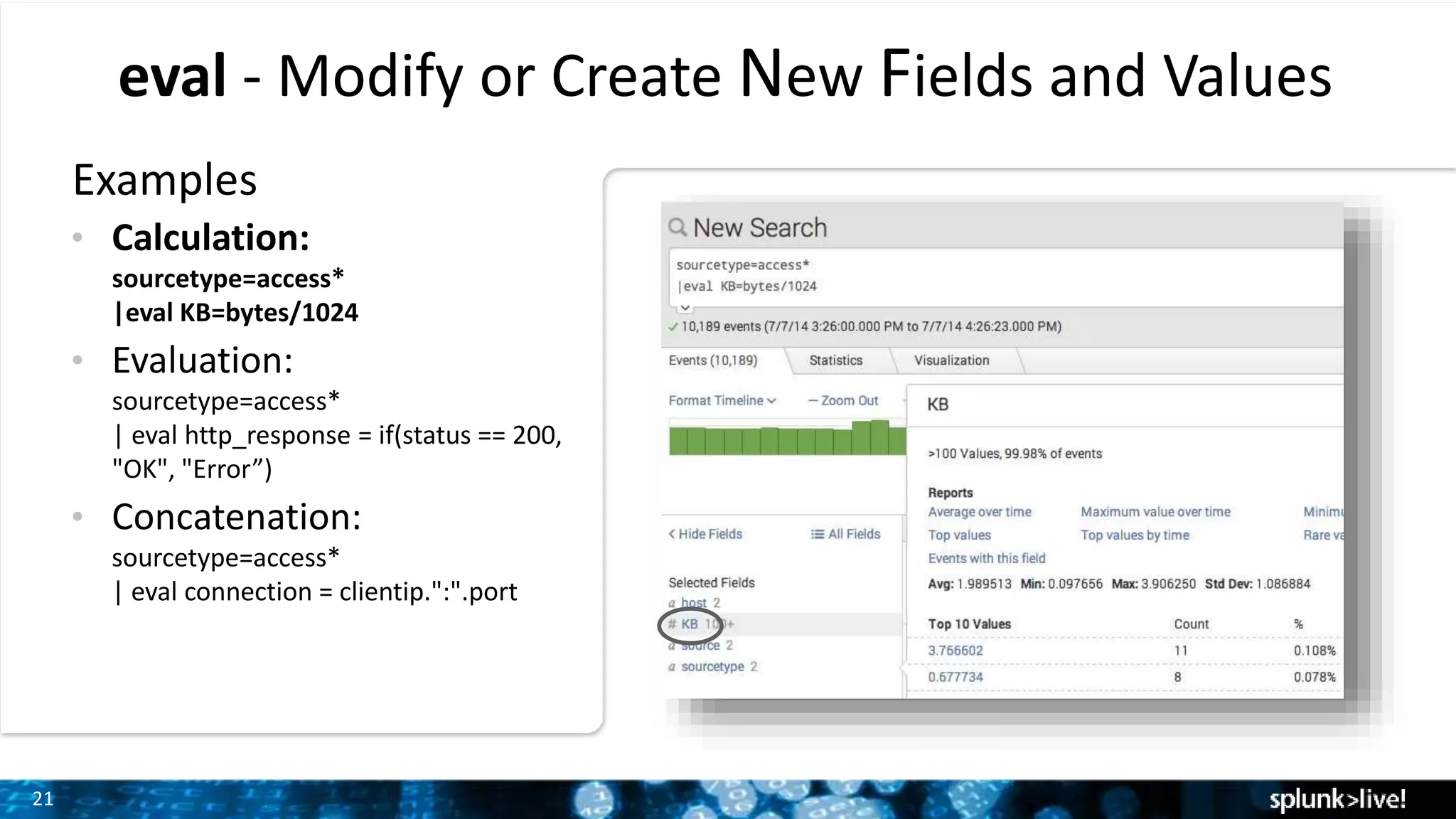

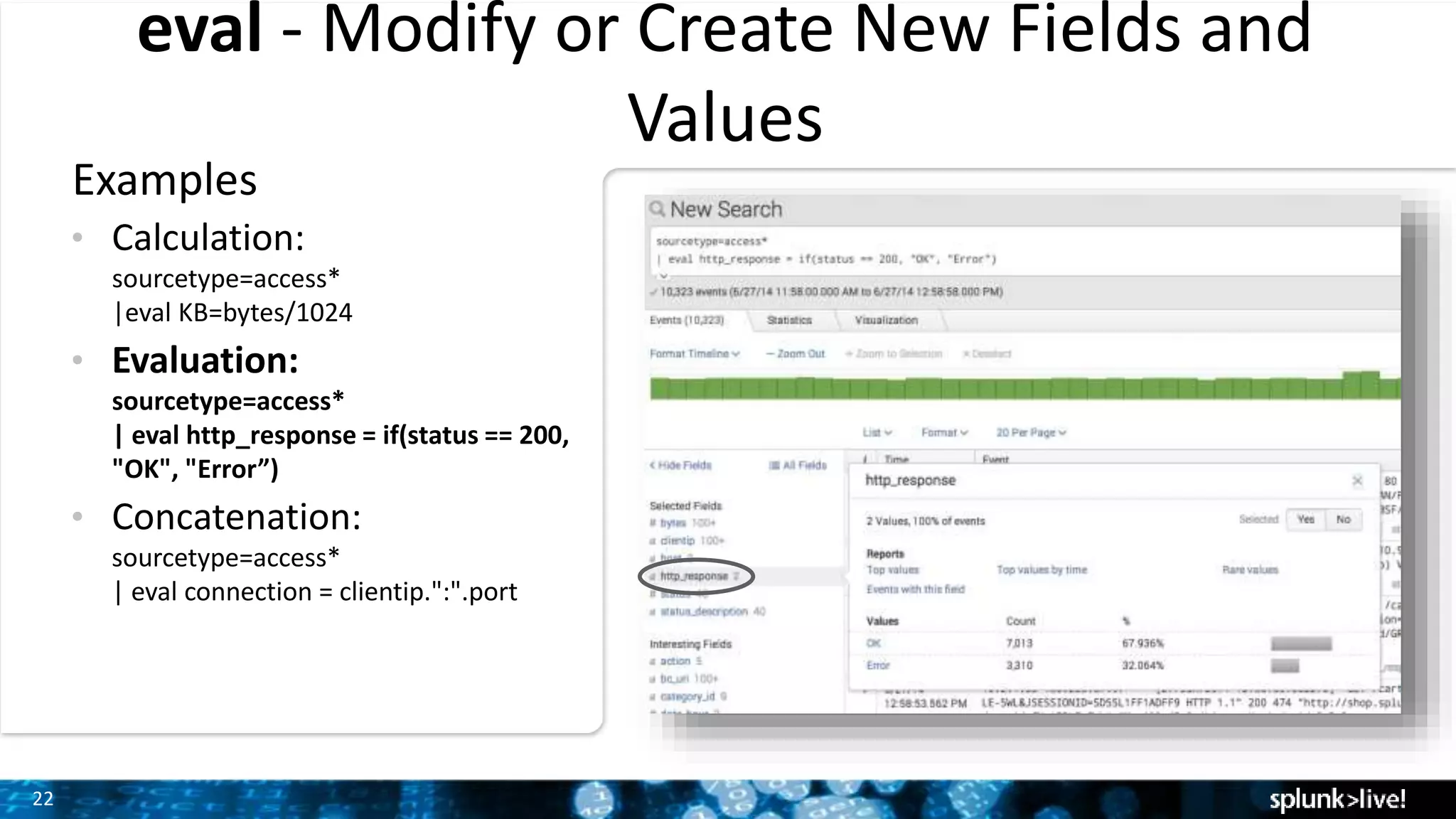

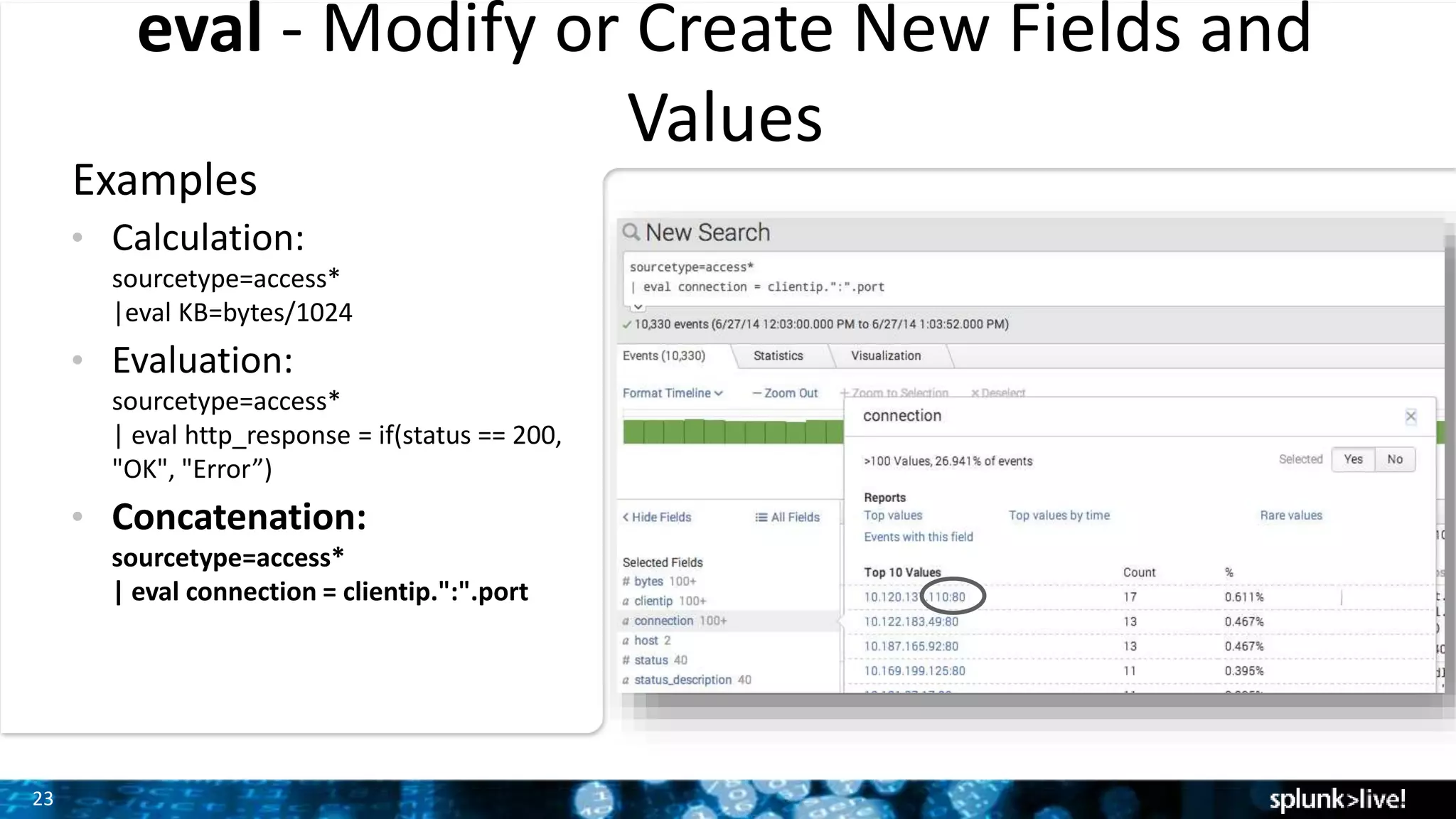

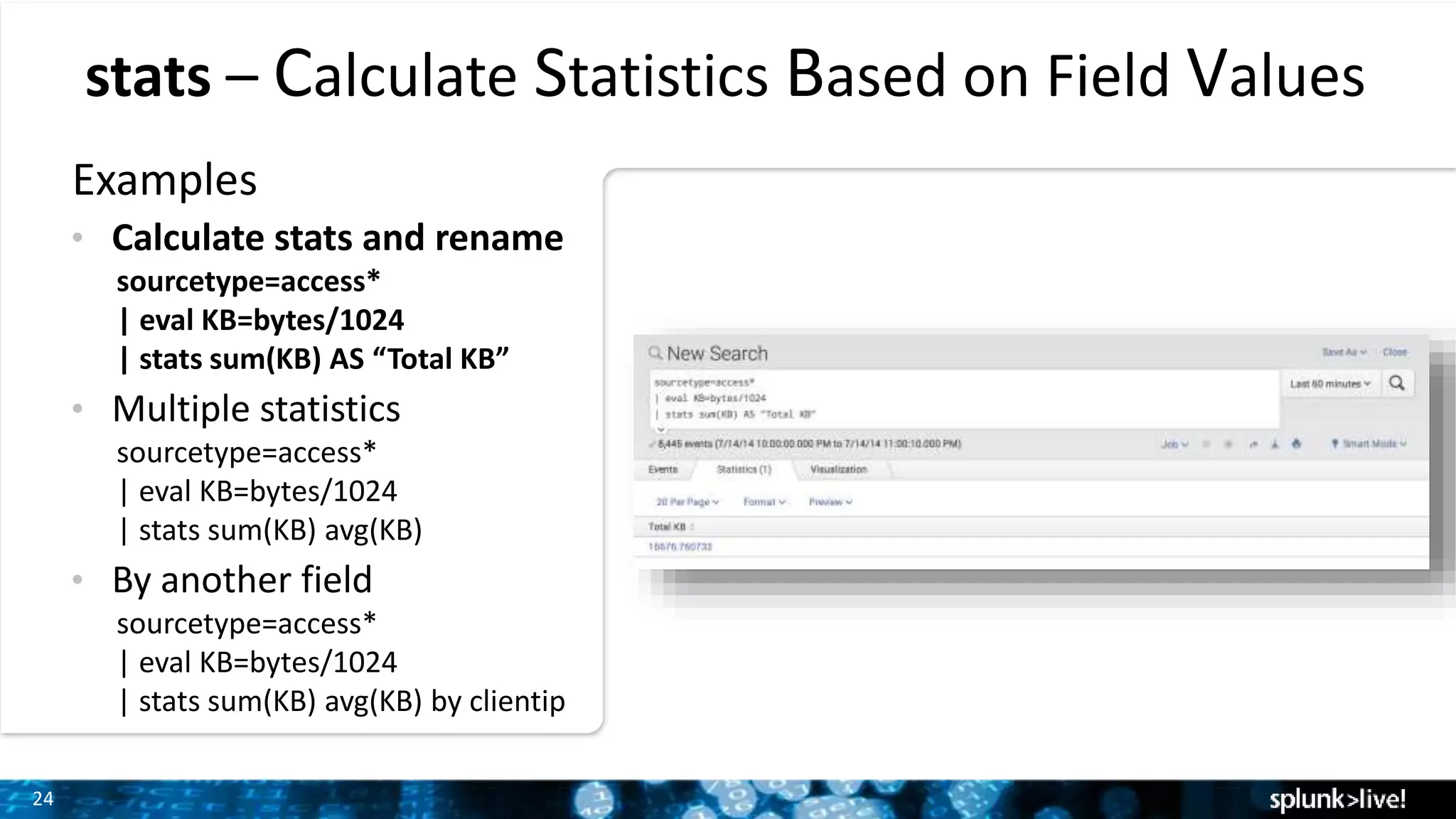

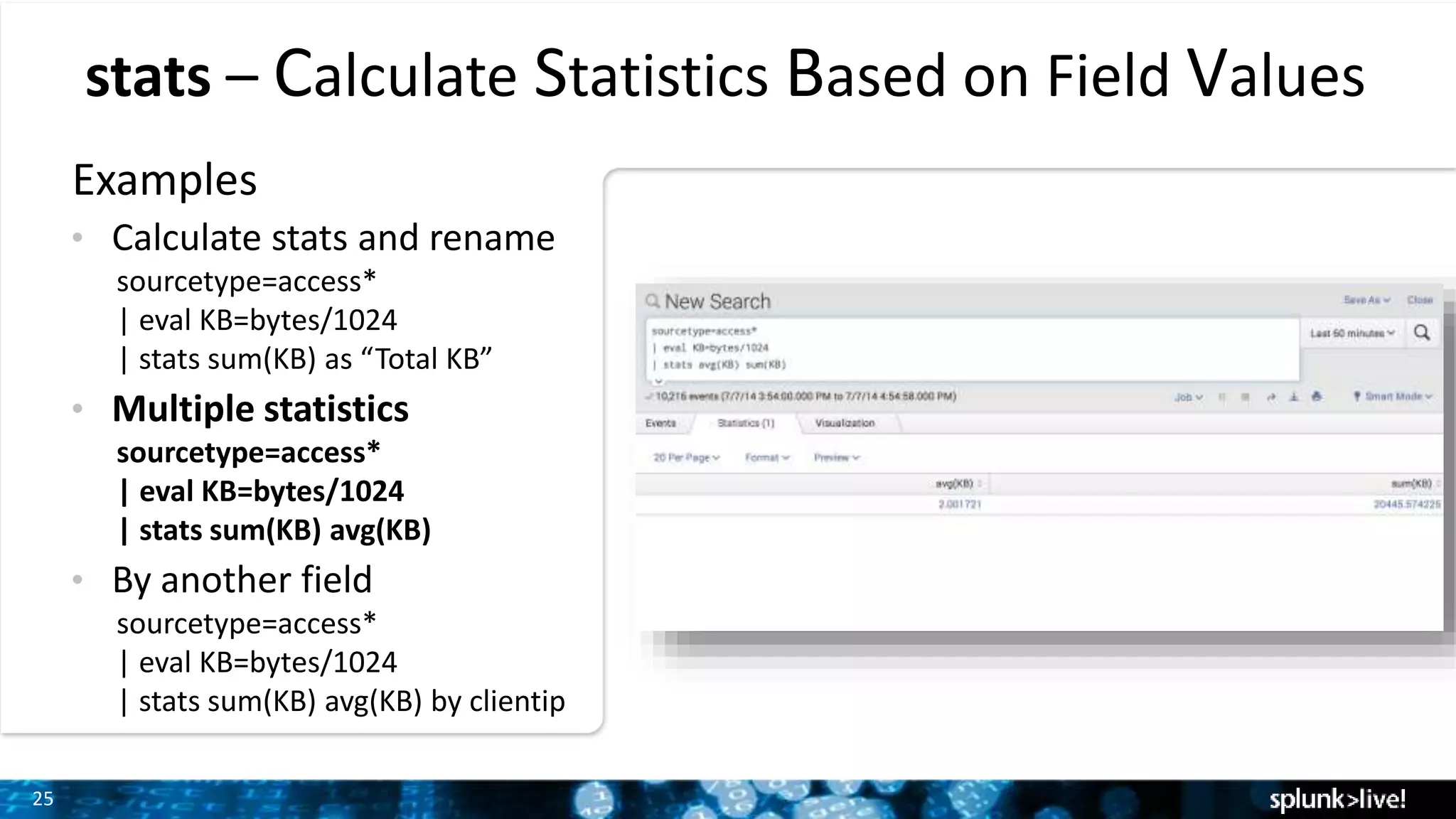

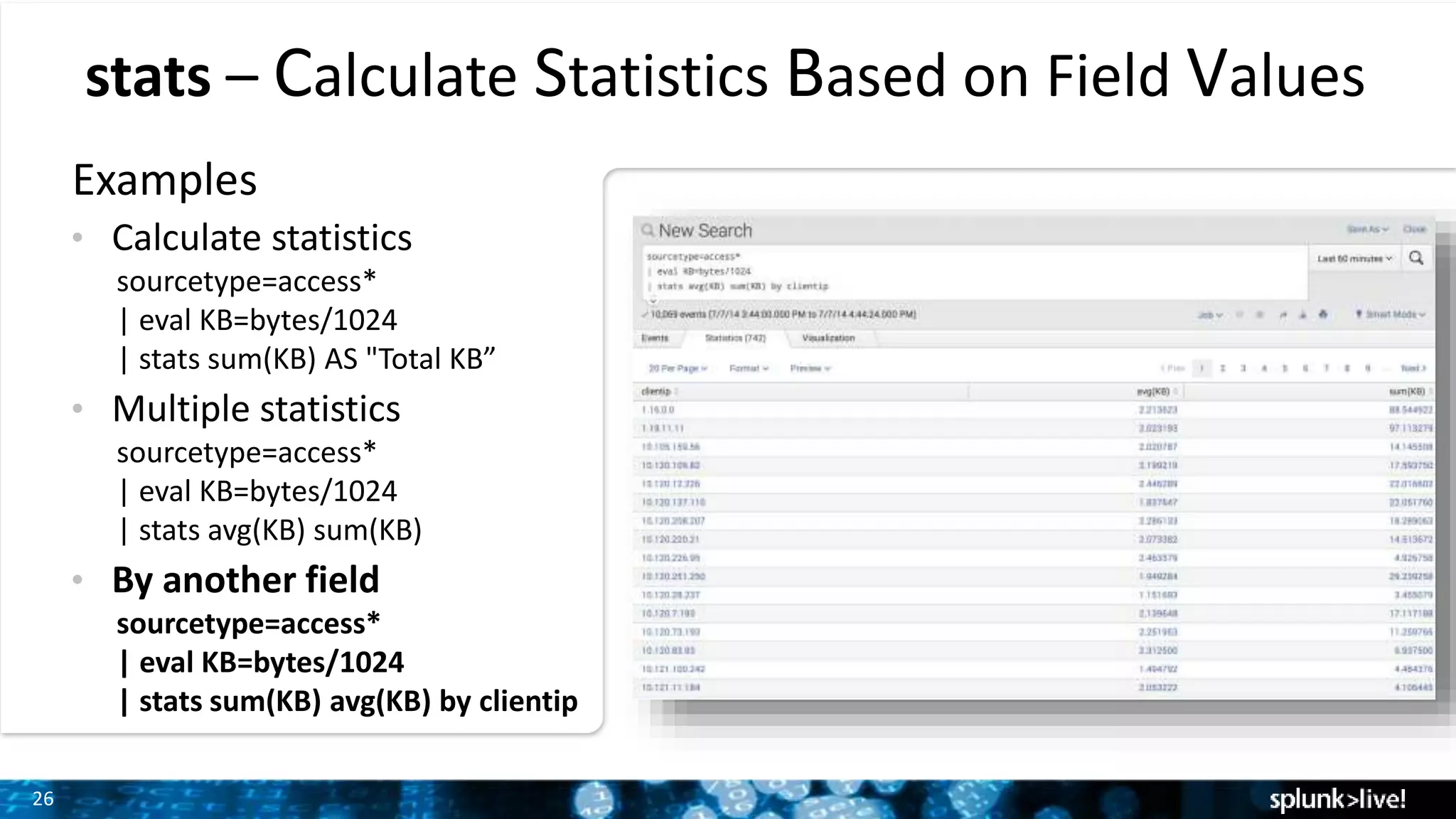

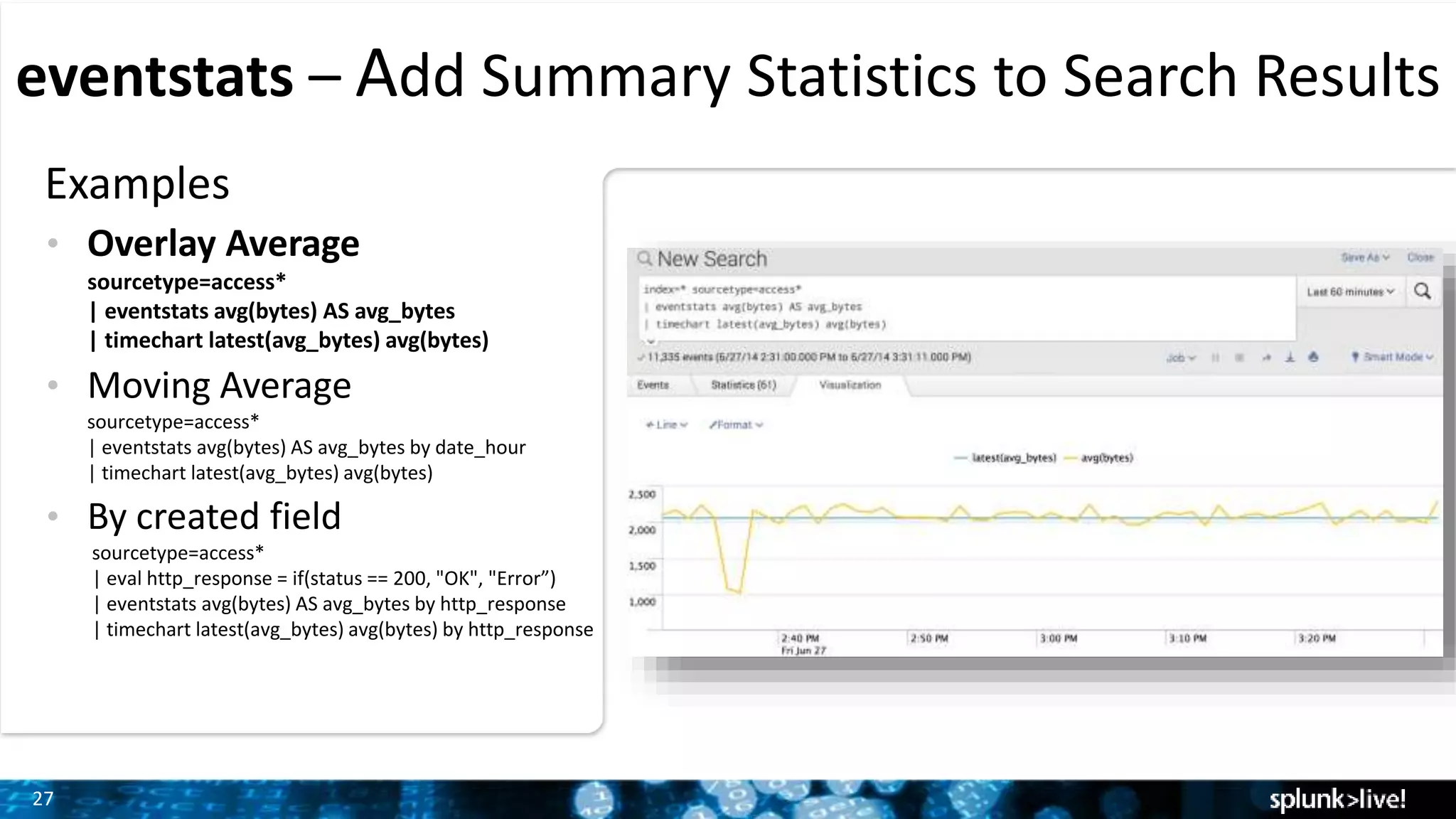

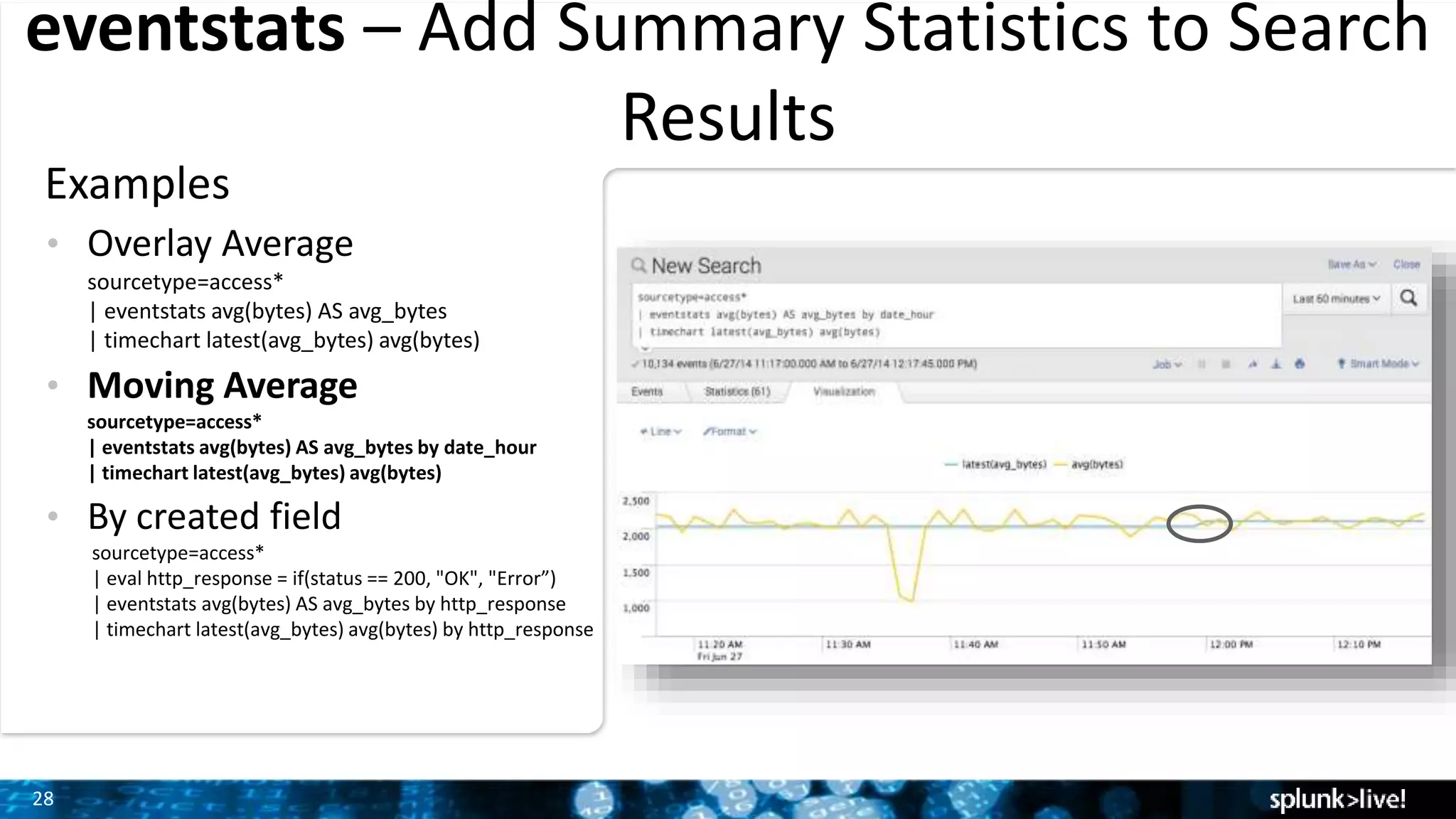

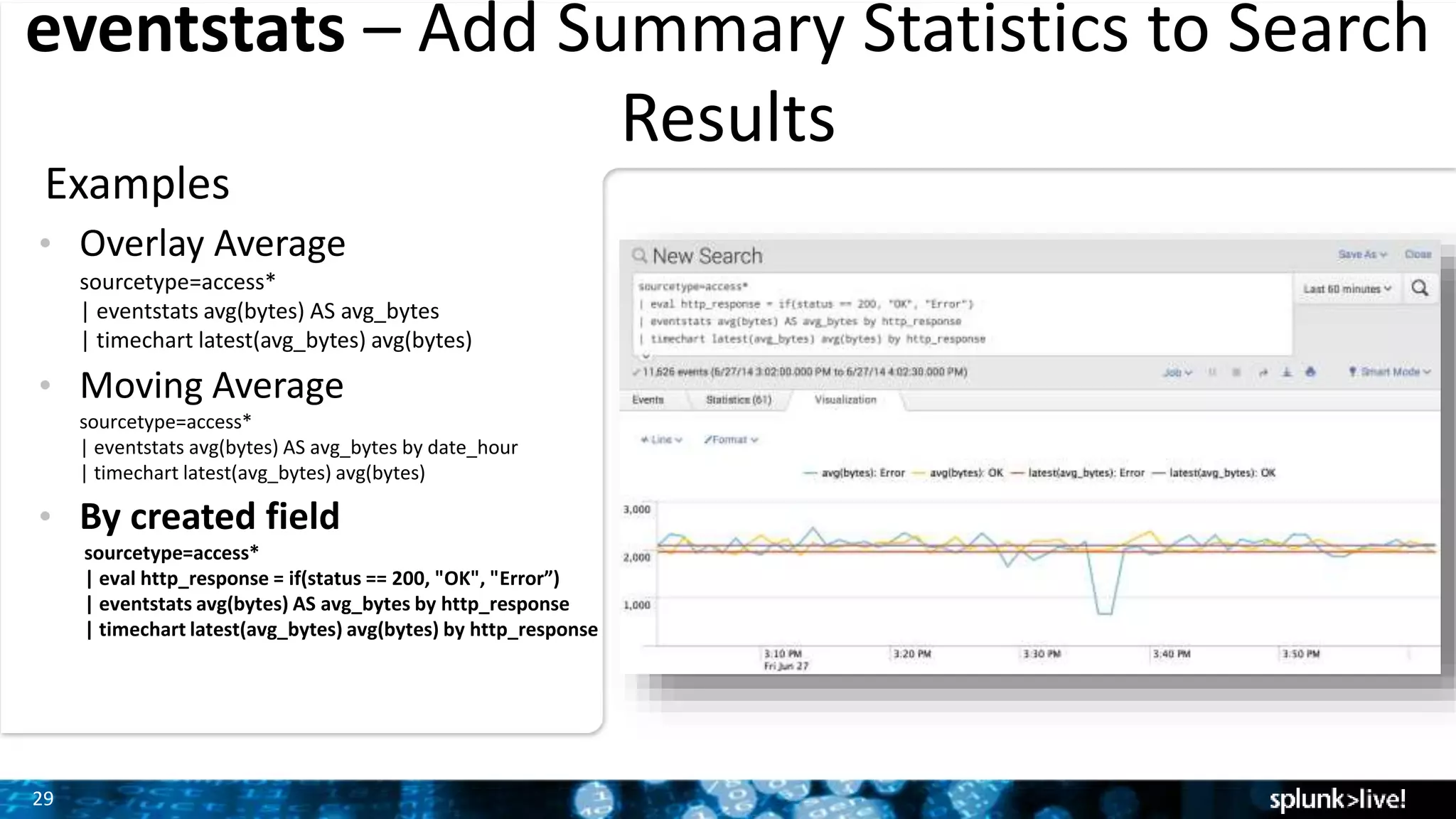

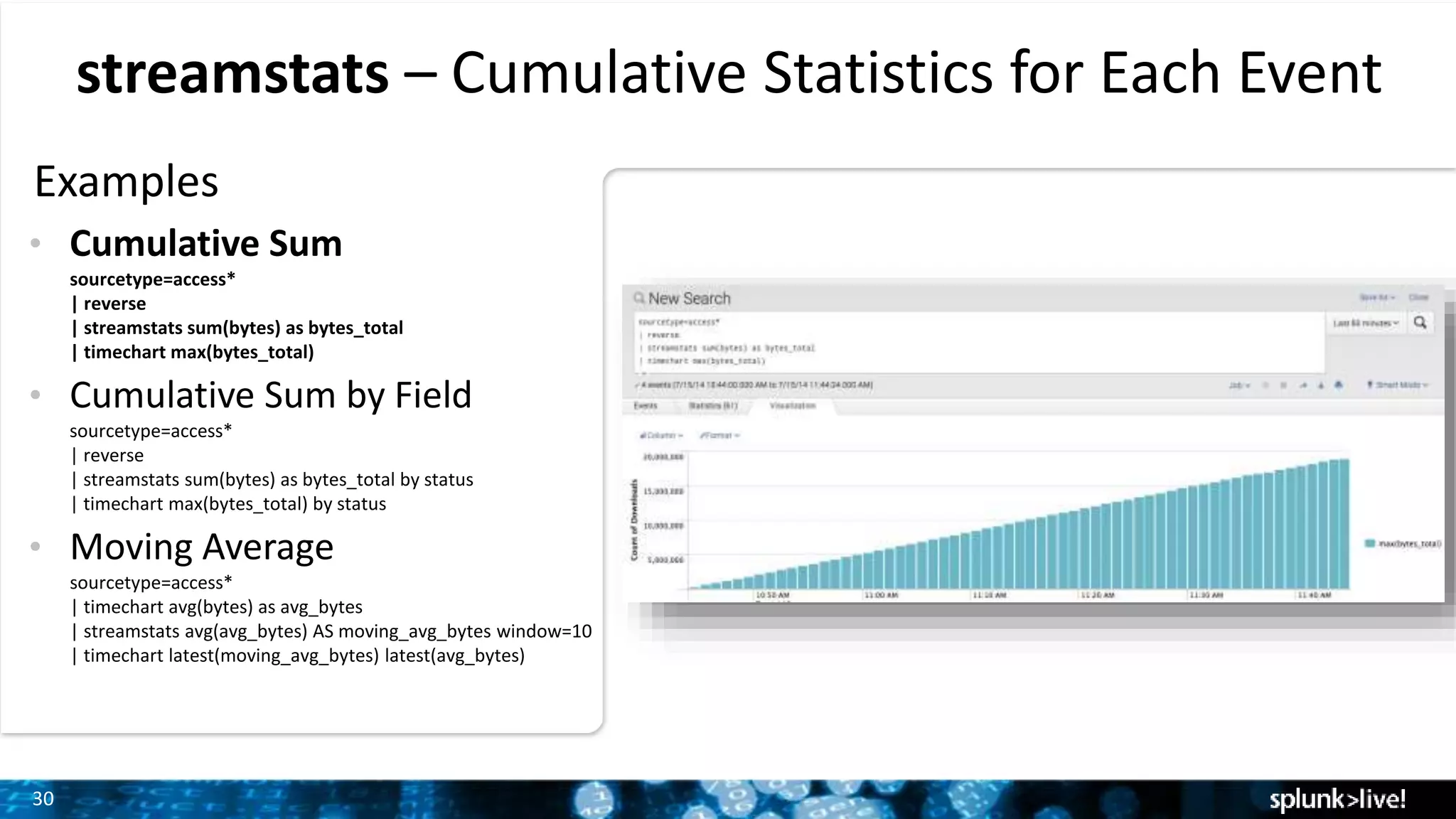

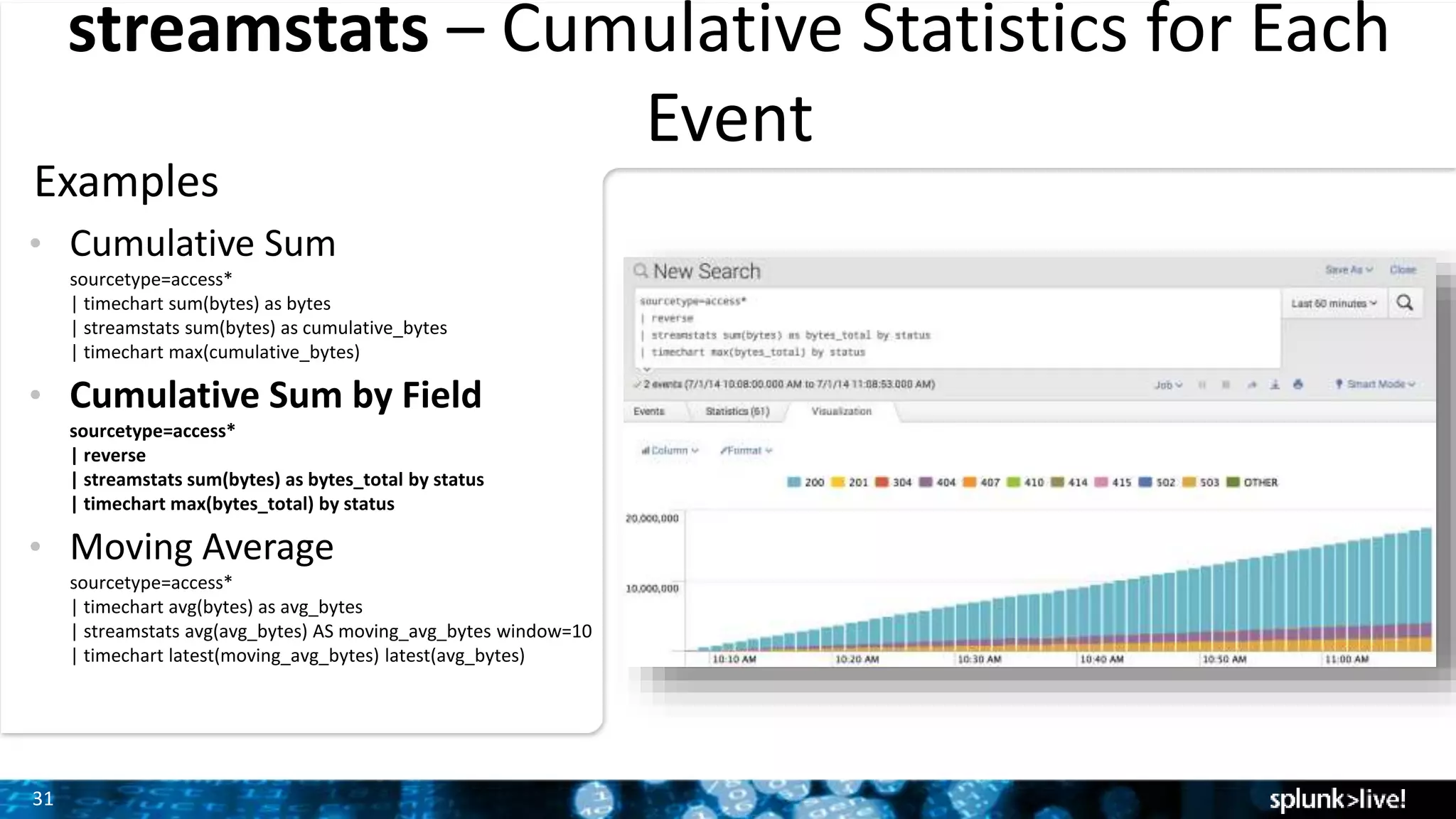

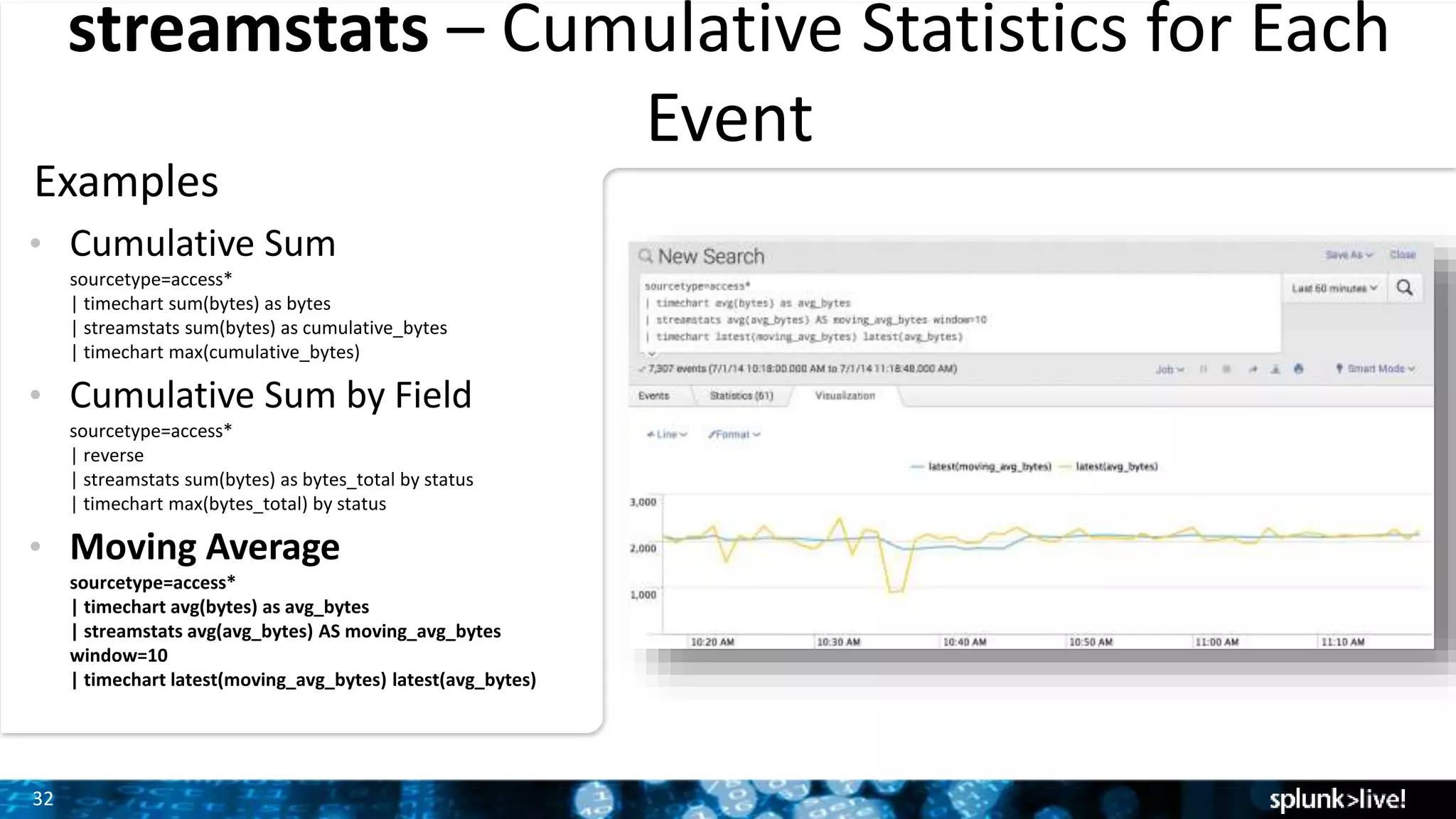

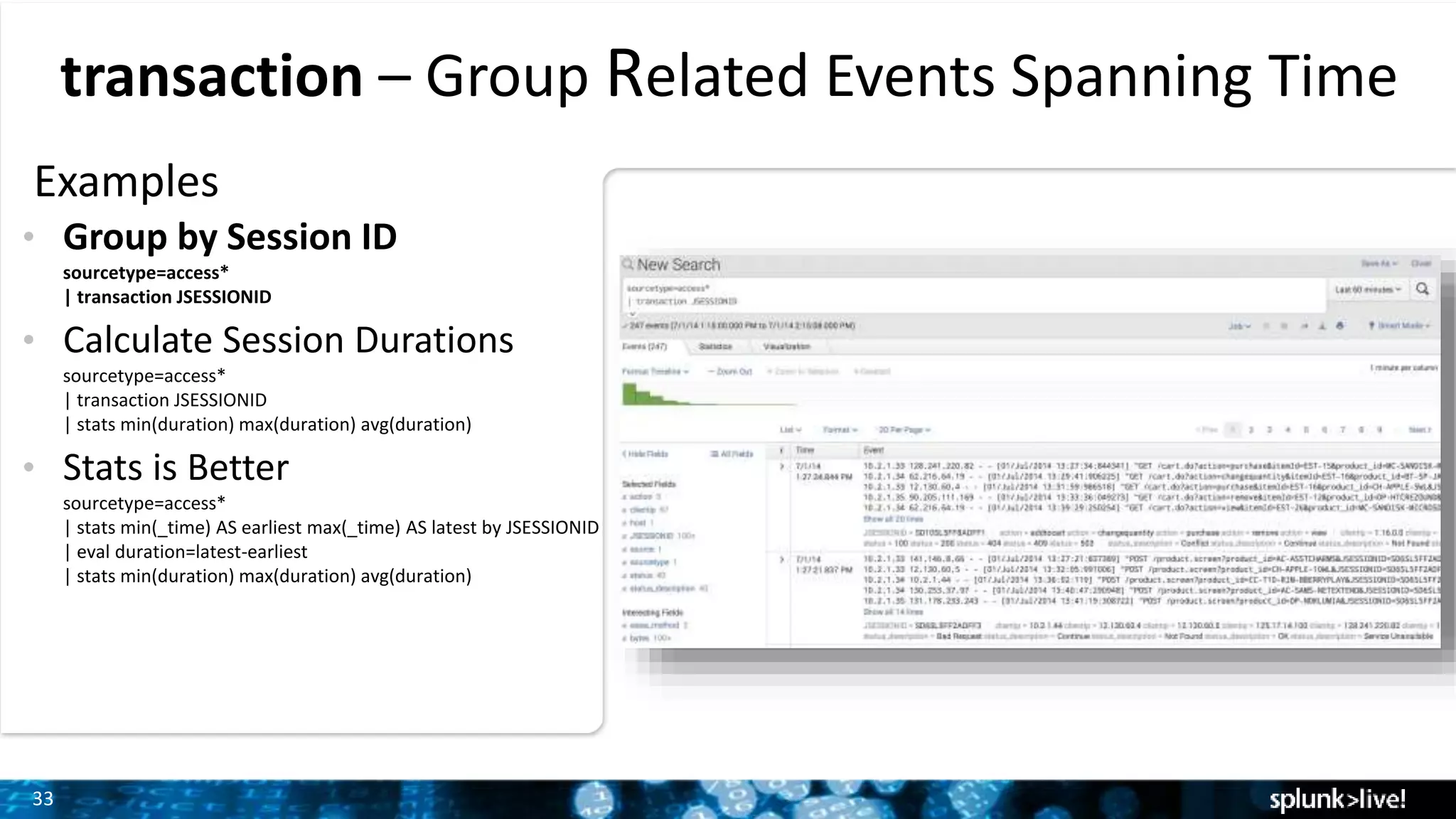

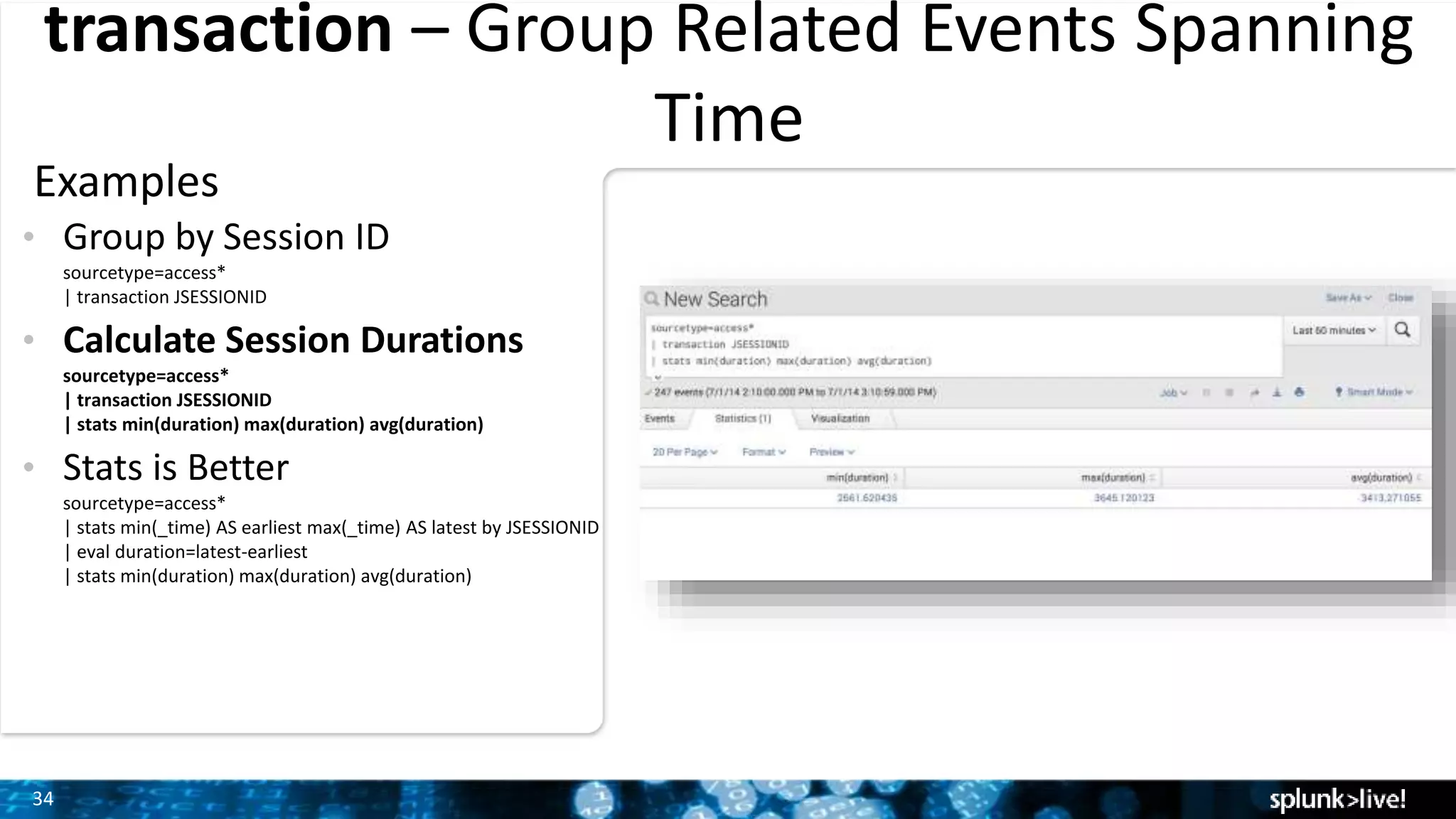

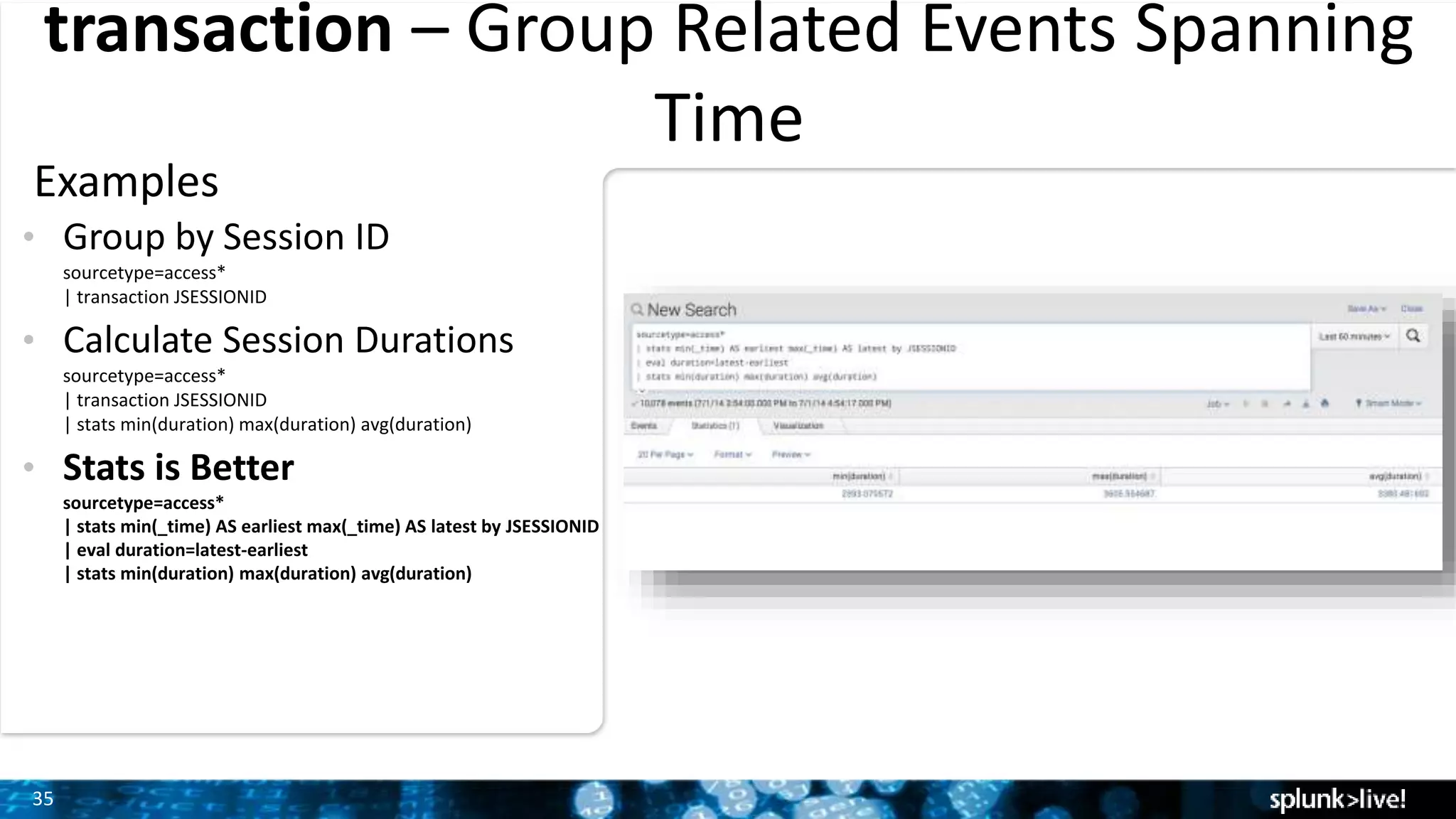

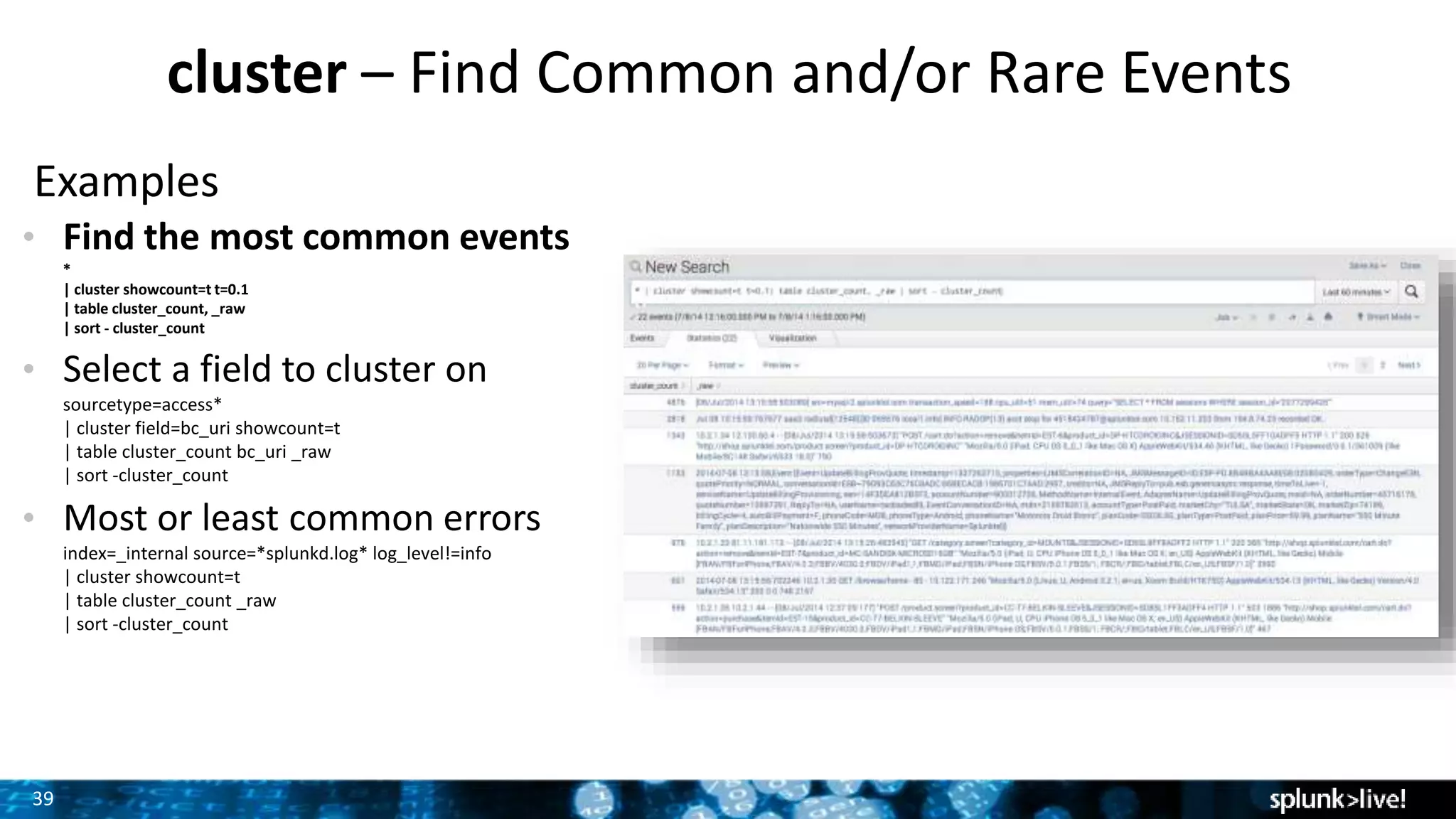

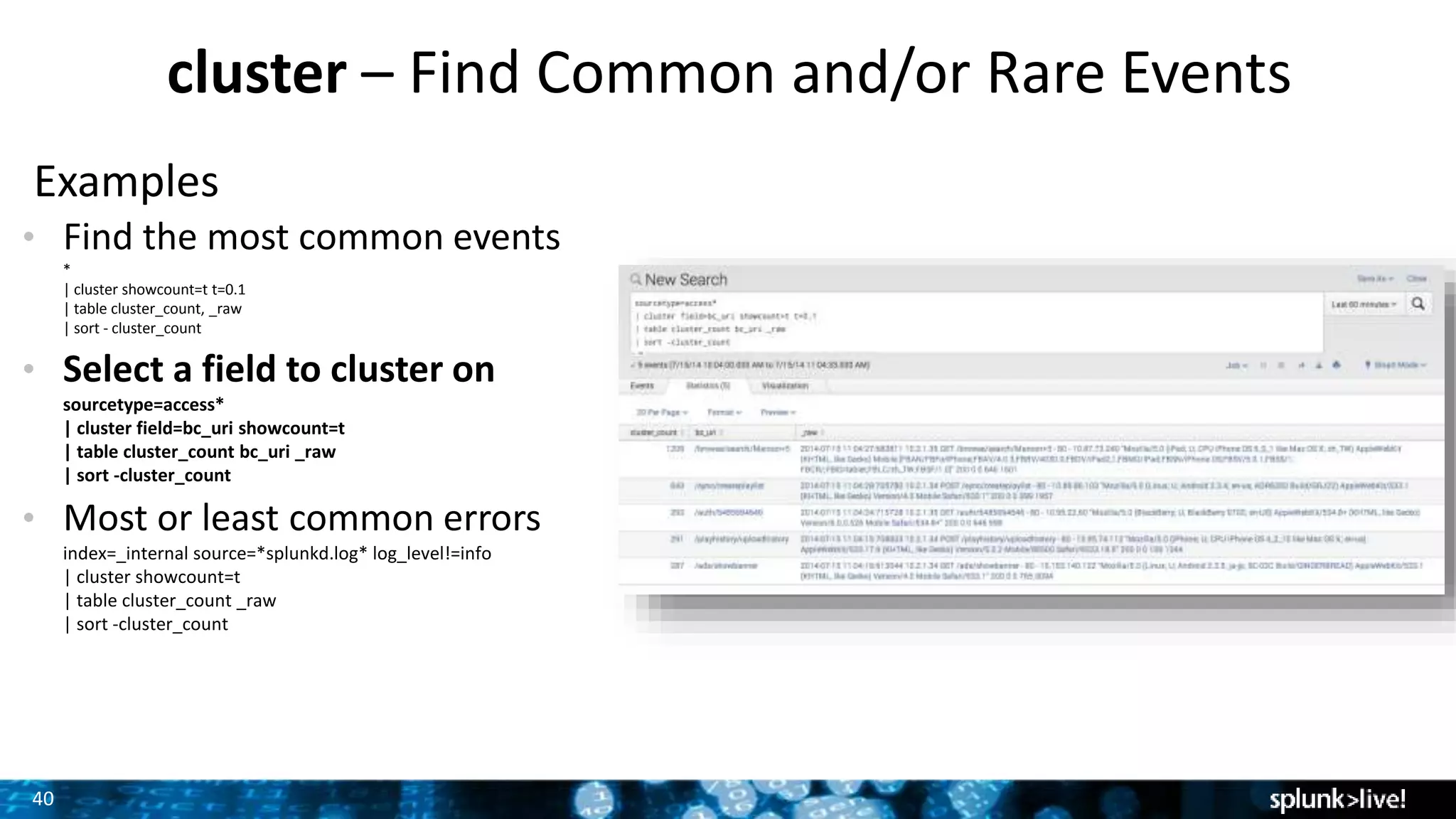

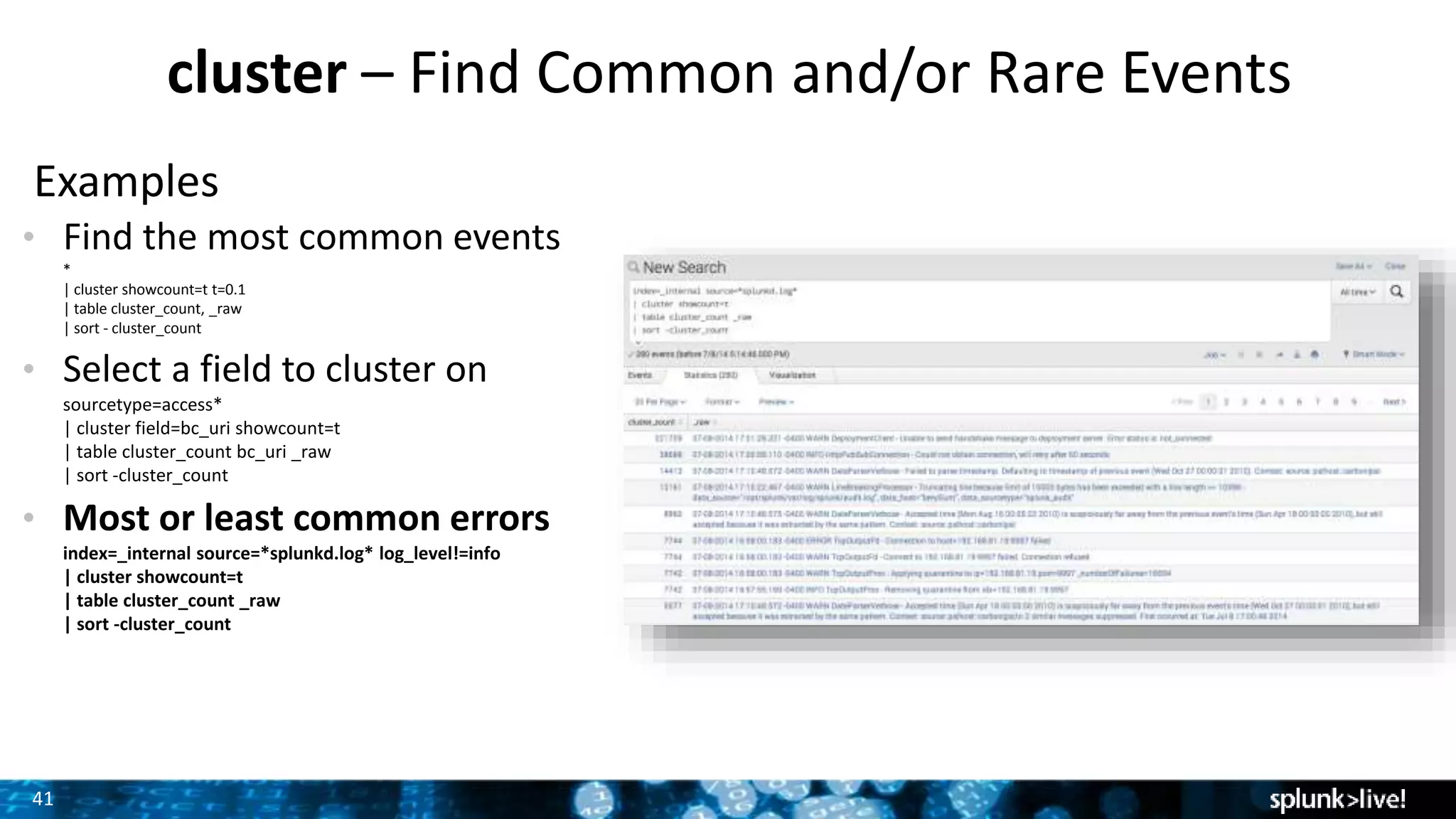

This presentation outlines the new features in Splunk Enterprise 6.2, highlighting improvements in data management, analytics, and the user interface. Key features include the Advanced Field Extractor, Instant Pivot, and data models for streamlined reporting. It also covers essential search commands and tools for data analysis and operational intelligence.