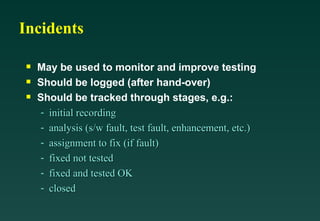

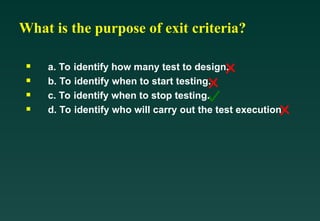

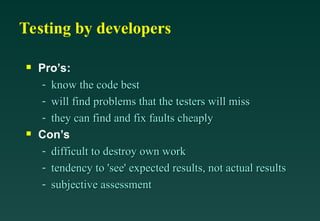

The document outlines key concepts of test management: independence in testing, configuration management, and test estimation and monitoring. It compares organizational structures for testing, with the pros and cons of each, and covers incident management and the role of standards in ensuring quality. It also addresses the factors that influence the selection of test techniques and the role of communication and collaboration in the testing process.

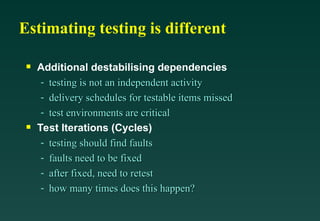

![Estimating iterations](https://image.slidesharecdn.com/wells-fargo-chapter5-250203075611-625c46cc/85/Software-Testing-ISTQB-study-material-ppt-26-320.jpg)

- past history
- number of faults expected
  - can predict from previous test effectiveness and previous faults found (in test, review, inspection)
  - % faults found in each iteration (nested faults)
  - % fixed [in]correctly
- time to report faults
- time waiting for fixes
- how much in each iteration?
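The inputs listed on this slide (faults expected, % of faults found in each iteration, % fixed incorrectly, time to report faults, time waiting for fixes) can be combined into a rough iteration estimate. The sketch below is one possible way to do that, not a method taken from the slides. It assumes that each iteration finds a constant fraction of the faults still present, and that incorrectly fixed faults stay in the product. The function name, the parameters, and the sample figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class IterationEstimate:
    iterations: int          # number of test iterations simulated
    remaining_faults: float  # estimated faults left after the last iteration
    elapsed_days: float      # testing + reporting + waiting, summed over iterations


def estimate_iterations(expected_faults, found_rate, bad_fix_rate,
                        target_remaining, days_testing, days_reporting,
                        days_waiting_for_fixes, max_iterations=50):
    """Rough estimate of how many test iterations are needed.

    Assumed model (illustrative only):
      - each iteration finds `found_rate` of the faults still present
      - `bad_fix_rate` of the found faults are fixed incorrectly and remain
      - every iteration costs the same number of days
    """
    if not 0 < found_rate <= 1:
        raise ValueError("found_rate must be in (0, 1]")
    if not 0 <= bad_fix_rate < 1:
        raise ValueError("bad_fix_rate must be in [0, 1)")

    remaining = float(expected_faults)
    iterations = 0
    # Stop at the target, or at max_iterations as a safety limit.
    while remaining > target_remaining and iterations < max_iterations:
        found = remaining * found_rate
        fixed_correctly = found * (1 - bad_fix_rate)
        remaining -= fixed_correctly
        iterations += 1

    days_per_iteration = days_testing + days_reporting + days_waiting_for_fixes
    return IterationEstimate(iterations, remaining, iterations * days_per_iteration)


if __name__ == "__main__":
    # Hypothetical figures, standing in for values taken from past history.
    estimate = estimate_iterations(
        expected_faults=100, found_rate=0.5, bad_fix_rate=0.2,
        target_remaining=5, days_testing=5, days_reporting=1,
        days_waiting_for_fixes=3,
    )
    print(estimate)
```

With these sample figures, each iteration removes 40% of the remaining faults (50% found, of which 80% are fixed correctly), so the estimate reaches the target of 5 remaining faults after 6 iterations. In practice the rates would come from past history, and they are unlikely to stay constant from one iteration to the next.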

![What actions can you take?](https://image.slidesharecdn.com/wells-fargo-chapter5-250203075611-625c46cc/85/Software-Testing-ISTQB-study-material-ppt-36-320.jpg)

What can you affect?

- resource allocation
- number of test iterations
- tests included in an iteration
- entry / exit criteria applied
- release date

What can you not affect?

- number of faults already there

What can you affect indirectly?

- rework effort
- which faults to be fixed [first]
- quality of fixes (entry criteria to retest)