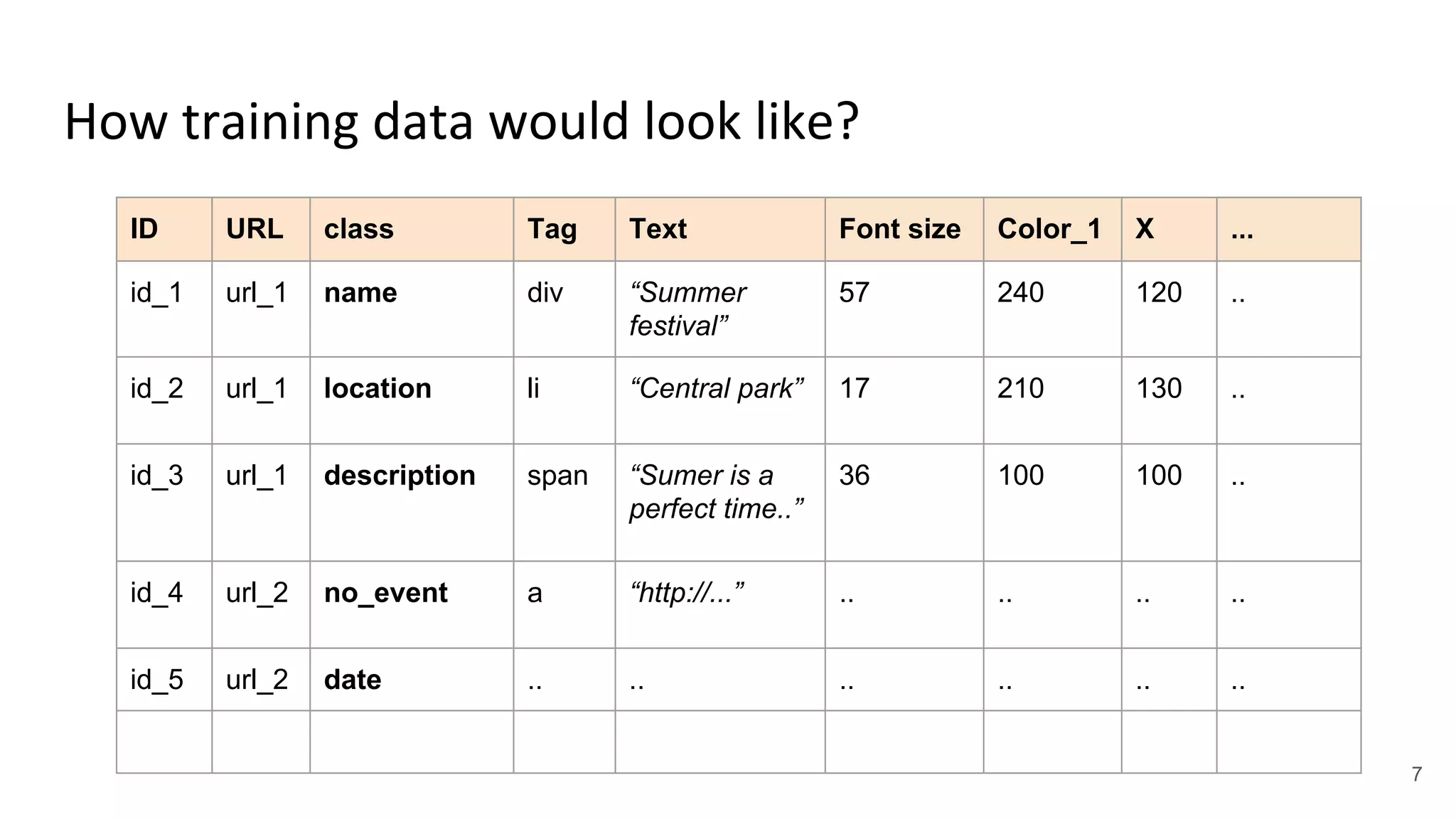

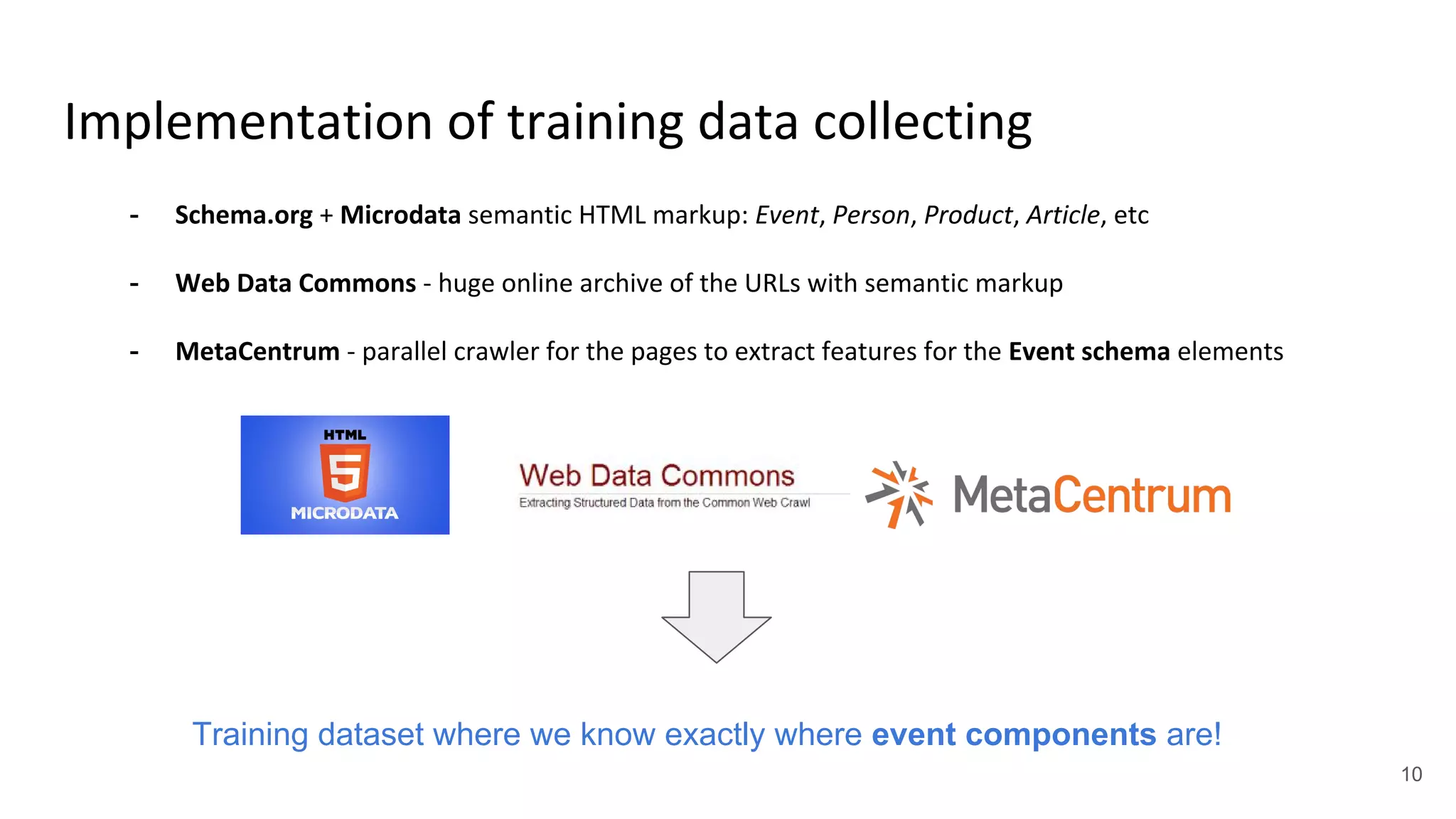

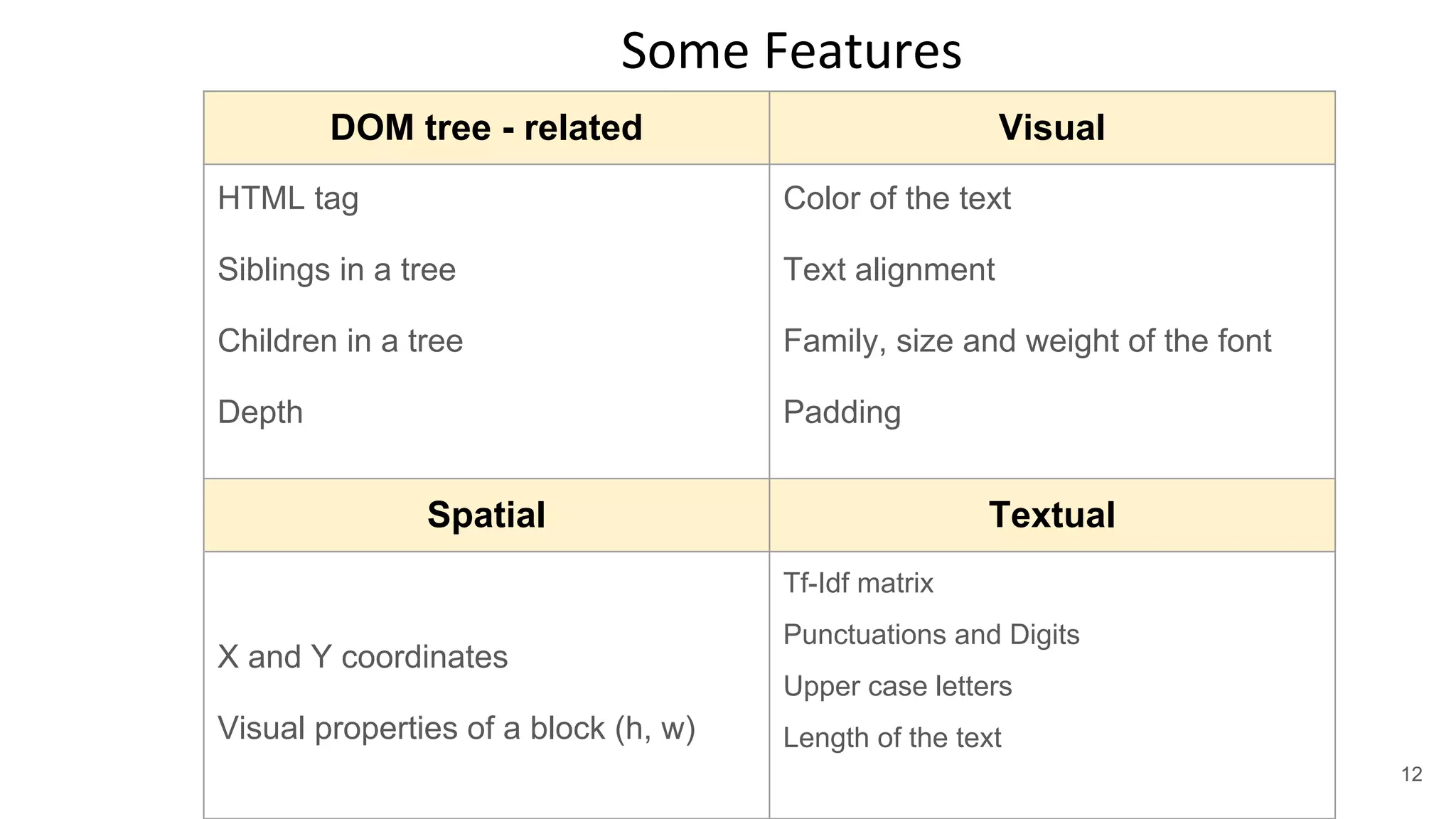

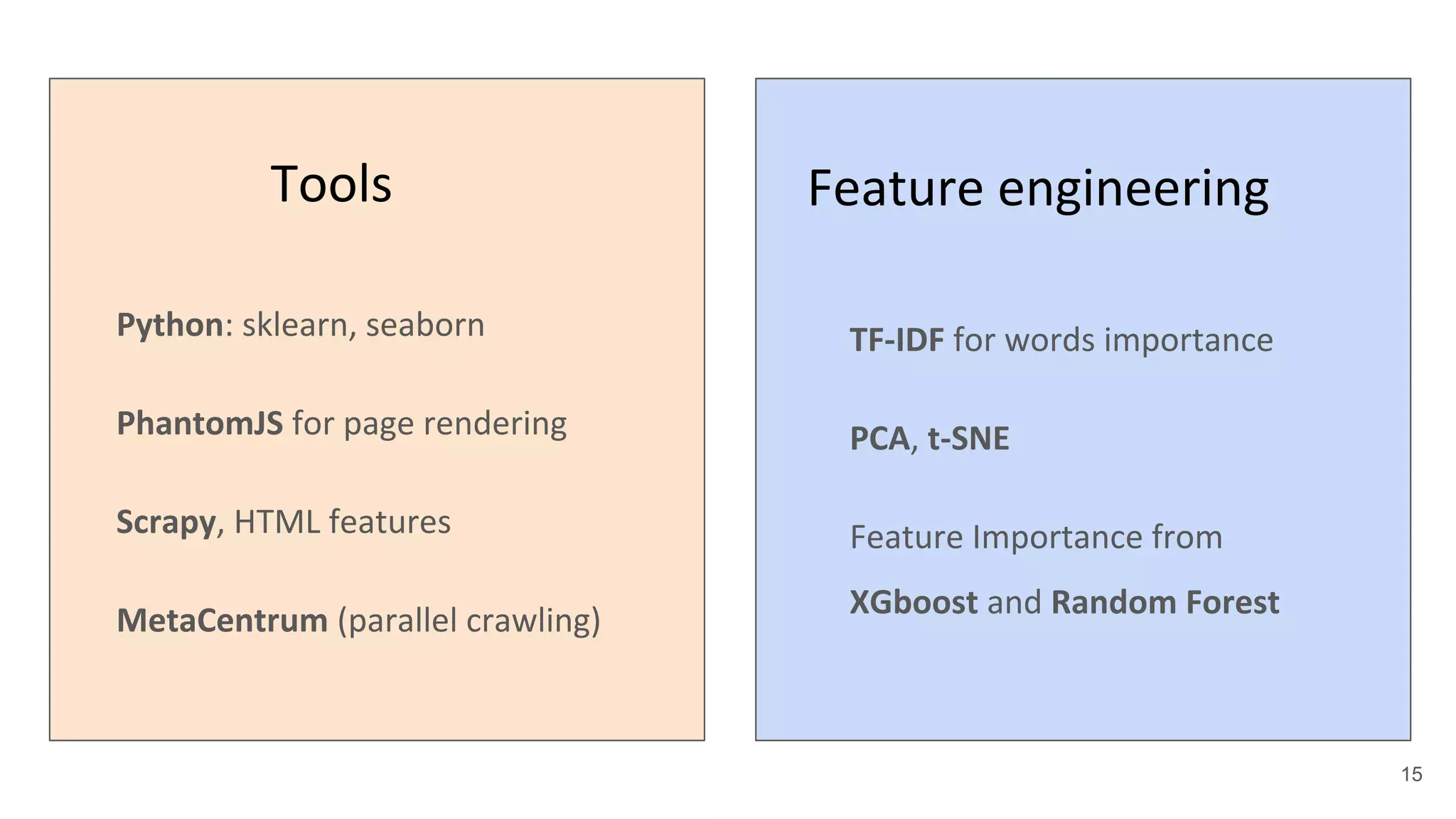

The master's thesis develops an automatic local-event extractor that pulls event-related information (name, date, location, and description) from web pages of any format with high accuracy. It follows a structured approach of data collection, feature extraction, and model evaluation, comparing several classification algorithms to find the best-performing one. The resulting dataset is publicly available and is intended to support scalable event-recognition systems for different cities.
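The summary says several classification algorithms are evaluated to pick the best one. Below is a minimal, dependency-free sketch of that model-selection step. It assumes the labeled web elements have already been turned into numeric feature vectors. k-fold cross-validation scores each candidate, and the one with the highest mean accuracy is selected. The two candidates (a majority-class baseline and 1-nearest-neighbour), the function names, and the toy data are all hypothetical stand-ins, not the algorithms or data used in the thesis.

```python
import random
from collections import Counter
from math import dist
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]
Dataset = List[Tuple[Vector, str]]
# A "trainer" takes training data and returns a predict function.
Trainer = Callable[[Dataset], Callable[[Vector], str]]


def train_majority(train: Dataset) -> Callable[[Vector], str]:
    """Baseline: always predict the most frequent class in the training data."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label


def train_1nn(train: Dataset) -> Callable[[Vector], str]:
    """1-nearest-neighbour: predict the label of the closest training vector."""
    def predict(x: Vector) -> str:
        return min(train, key=lambda item: dist(item[0], x))[1]
    return predict


def cross_val_accuracy(data: Dataset, trainer: Trainer, k: int = 5, seed: int = 0) -> float:
    """Mean accuracy over k folds; the data is shuffled once with a fixed seed."""
    if len(data) < k:
        raise ValueError("need at least k examples")
    rows = data[:]
    random.Random(seed).shuffle(rows)
    folds = [rows[i::k] for i in range(k)]
    scores = []
    for i, test in enumerate(folds):
        # Train on every fold except the i-th, then score on the i-th.
        train = [row for j, fold in enumerate(folds) if j != i for row in fold]
        predict = trainer(train)
        correct = sum(predict(x) == y for x, y in test)
        scores.append(correct / len(test))
    return sum(scores) / len(scores)


def select_best(data: Dataset, trainers: Dict[str, Trainer], k: int = 5) -> Tuple[str, float]:
    """Return the name and cross-validated accuracy of the best candidate."""
    results = {name: cross_val_accuracy(data, t, k) for name, t in trainers.items()}
    best = max(results, key=results.get)
    return best, results[best]


if __name__ == "__main__":
    # Made-up (font size, font weight) vectors, for illustration only.
    toy = [((20.0 + i, 700.0), "Event name") for i in range(5)]
    toy += [((12.0 + 0.1 * i, 400.0), "Other") for i in range(15)]
    print(select_best(toy, {"majority": train_majority, "1-NN": train_1nn}))
```

Passing trainers as plain functions keeps the evaluation loop independent of any particular model, so further candidates can be added to the dictionary without touching `cross_val_accuracy`.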

![Classification problem](https://image.slidesharecdn.com/sociopathpresentation1-170830005852/75/Sociopath-presentation-6-2048.jpg)

Classification problem

Web element:

| Feature | Value |
|---|---|
| id (XPath) | `//a[@id = 'name-id']` |
| Tag | `<div>` |
| Text | "Summer classic concert" |
| Font size | 16 px |
| Font weight | 300 |
| Block height | 35 px |
| Block width | 79 px |
| X, Y coordinates | [155, 230] |
| Number of siblings | 2 |
| … | … |

Class: "Event name"
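The slide frames extraction as classifying each web element from its visual and structural attributes. Below is a minimal sketch of how one such element might be flattened into a numeric feature vector for a classifier, using the slide's example values. The `WebElement` class, the `TAGS` vocabulary, and the three text-derived features (length, word count, presence of digits) are hypothetical choices for illustration. They are not the thesis's actual feature set.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical tag vocabulary for one-hot encoding; a real extractor would
# build it from the collected pages.
TAGS = ["a", "div", "span", "p", "h1", "h2", "time"]


@dataclass
class WebElement:
    """Raw attributes of one DOM element, mirroring the fields on the slide."""
    xpath: str  # kept to locate the element later; not used as a feature here
    tag: str
    text: str
    font_size_px: float
    font_weight: int
    height_px: float
    width_px: float
    x: float
    y: float
    n_siblings: int


def to_features(el: WebElement) -> List[float]:
    """Flatten an element into a numeric vector: 10 numeric values + tag one-hot."""
    numeric = [
        float(el.font_size_px),
        float(el.font_weight),
        float(el.height_px),
        float(el.width_px),
        float(el.x),
        float(el.y),
        float(el.n_siblings),
        float(len(el.text)),                         # text length in characters
        float(len(el.text.split())),                 # word count
        float(any(ch.isdigit() for ch in el.text)),  # digits may hint at dates
    ]
    tag_onehot = [1.0 if el.tag == t else 0.0 for t in TAGS]
    return numeric + tag_onehot


# The example element from the slide, labeled "Event name".
example = WebElement(
    xpath="//a[@id = 'name-id']", tag="div", text="Summer classic concert",
    font_size_px=16, font_weight=300, height_px=35, width_px=79,
    x=155, y=230, n_siblings=2,
)
vector = to_features(example)
label = "Event name"
```

Each `(vector, label)` pair produced this way is one training example. Any classifier that accepts fixed-length numeric vectors can then be trained on the collection.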