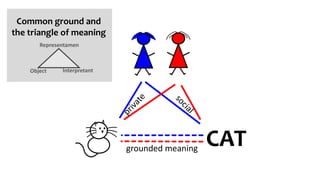

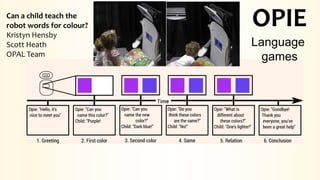

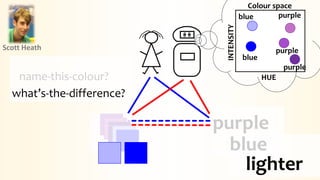

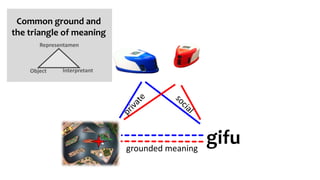

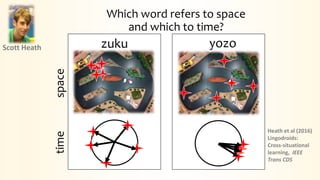

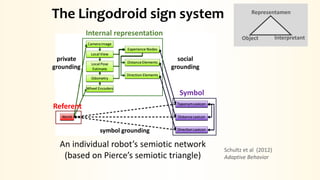

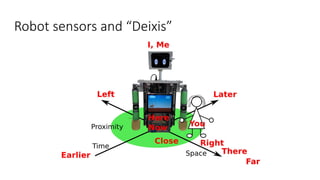

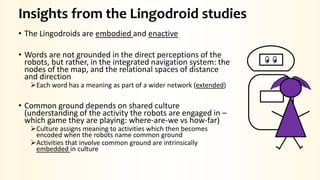

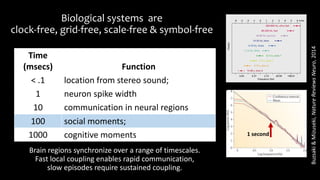

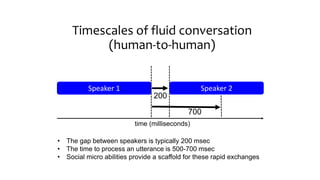

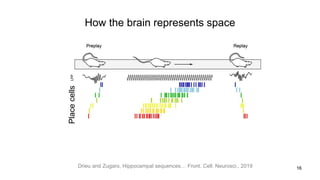

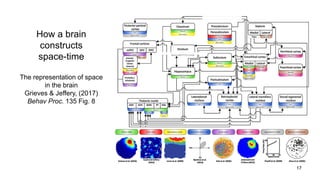

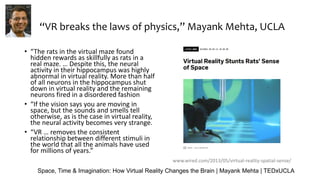

This document summarizes research at the intersection of language, artificial intelligence (AI), and virtual reality (VR). It discusses three key points:

1. How robots learn language and build common ground, drawing on studies of word meanings.
2. The promise of VR and augmented reality (AR) for creating shared understanding and directing attention during language learning.
3. How VR affects the brain's representation of space and time through mismatches between visual and other sensory inputs.

The research aims to advance social technologies through collaboration among computer scientists, roboticists, psychologists, and other experts.