Sanath pabba hadoop resume 1.0

Sanath Pabba has over 5 years of experience working with big data technologies like Hadoop, Spark, Hive, Pig, Kafka and NoSQL databases. He has expertise in data extraction, transformation and loading processes. Some of his responsibilities include writing Sqoop and Spark jobs to load and prepare data, developing automation scripts to monitor cluster utilization, and implementing validation rules for data quality. He has worked on various projects involving data warehousing, reporting, stream processing and analytics using technologies like SQL Server, Hive and Spark.

Recommended

Poorna Hadoop

This document provides a detailed summary of Poorna Chandra Rao Kommana's professional experience and technical skills. It outlines his 8 years of experience in big data technologies including Hadoop, Hive, Pig, Spark, Kafka and AWS services. It details his roles and responsibilities in building scalable big data solutions, developing ETL pipelines, performing data analysis, and optimizing performance. His skills include Java, Python, SQL, Pig Latin, HiveQL, and tools like Eclipse and PyCharm.

sam_resume - updated

Sam has over 8 years of experience in big data technologies including Hadoop, Spark, Hive, Pig, Kafka and Cassandra. He has expertise in data ingestion, processing, ETL and analytics. Some of his responsibilities include developing MapReduce programs, Spark applications, data pipelines, streaming jobs and visualizations. He is proficient in Java, Python, Scala and various big data tools.

Sureh hadoop 3 years t

• Capable of processing large sets of structured, semi-structured, and unstructured data and supporting system architecture.

• Implemented proofs of concept on the Hadoop stack and various big data analytics tools, including migration from different databases to Hadoop.

• Developed multiple MapReduce jobs in Java for data cleaning and pre-processing according to business requirements, and imported and exported data into HDFS and Hive using Sqoop.

• Experienced in writing Hive queries and Pig scripts.

Shiv shakti resume

Shiv Shakti is seeking a position that uses his 4+ years of experience in Hadoop development and automation projects. He has expertise in handling structured and unstructured data with tools such as Python, HiveQL, Sqoop, Oozie, Pig Latin, Impala, and HDFS. His technical skills include Hadoop and its components, shell scripting, Python, SQL, and Java. He has worked on several projects for Accenture involving data ingestion, processing, analysis, and automation using Hadoop technologies.

Madhu

The document provides a professional summary and details for Madhusudhn Reddy.Gujja, including 3 years of experience with big data tools like Hadoop, Hive, Pig, and Spark. He has extensive experience developing Pig Latin scripts, writing MapReduce programs in Java, and loading and transforming large datasets. He is proficient in technologies such as HDFS, HBase, Kafka, Flume, and Impala, and has worked on projects involving data analytics, ETL processes, and Hadoop clustering.

BigData_Krishna Kumar Sharma

The document provides details about the candidate's experience and skills in big data technologies like Hadoop, Hive, Pig, Spark, Sqoop, Flume, and HBase. The candidate has over 1.5 years of experience learning and working with these technologies. He has installed and configured Hadoop clusters across different versions and has used MapR distributions. He has in-depth knowledge of Hadoop architecture and frameworks and has performed various tasks in a Hadoop environment, including configuring Hive, writing Pig scripts, using Sqoop and Flume, and writing Spark programs.

Deepankar Sehdev- Resume2015

The document contains the resume of Deepankar Sehdev, which details his 3.5 years of experience developing big data and data warehouse applications using technologies like Hadoop, Hive, Pig, Sqoop, and AWS Redshift. It lists his roles and responsibilities in 4 past projects involving migration of data from mainframes and databases to Hadoop clusters and data warehouses.

Sunshine consulting mopuru babu cv_java_j2ee_spring_bigdata_scala

Mopuru Babu has over 9 years of experience in software development using Java technologies and 3 years of experience in Hadoop development. He has extensive experience designing, developing, and deploying multi-tier and enterprise-level distributed applications. He has expertise in technologies like Hadoop, Hive, Pig, and Spark, and in frameworks like Spring and Struts. He has worked on both small and large projects for clients in various industries.

hadoop exp

M.V. Rama Kumar has 3 years of experience in application development using Java and big data technologies like Hadoop. He has 1.6 years of experience using Hadoop components such as HDFS, MapReduce, Pig, Hive, Sqoop, HBase and Oozie. He has extensive experience setting up Hadoop clusters and processing large, structured and unstructured data.

a9TD6cbzTZotpJihekdc+w==.docx

The document provides a summary of an individual's professional experience working with big data technologies like Hadoop, Spark, Scala, Java, and AWS. It details over 9 years of experience in areas including data engineering, ETL processes, batch and stream data processing, working with technologies such as HDFS, YARN, Hive, Impala, Kafka, and databases like Oracle, MySQL, and MongoDB. Specific experiences are listed from roles at Morgan Stanley, ECA, and BMO Harris Bank involving data ingestion, transformation, analytics and reporting using Hadoop ecosystems.

Pallavi_Resume

The document provides a summary of Pallavi's professional experience and skills. She has over 8 years of experience working with big data, databases, and web applications. Some of her key skills and experiences include developing ETL processes using tools like Apache Spark, Hive, Pig, Sqoop and Flume; loading and analyzing data in Hadoop clusters; creating dashboards and reports in Tableau; and developing applications using technologies like SQL Server, SSIS, SSRS, Java, and .NET. She has worked on projects involving healthcare, performance metrics, and business intelligence.

Prashanth Kumar_Hadoop_NEW

Prashanth Shankar Kumar has over 8 years of experience in data analytics, Hadoop, Teradata, and mainframes. He currently works as a Hadoop Developer/Tech Lead at Bank of America where he develops Hive queries, Impala queries, MapReduce programs, and Oozie workflows. Previously he worked as a Hadoop Developer at State Farm Insurance where he installed and managed Hadoop clusters and developed solutions using Hive, Pig, Sqoop, and HBase. He has expertise in Teradata, SQL, Java, Linux, and agile methodologies.

PRAFUL_HADOOP

Prafulla Kumar Dash has over 5 years of experience in Hadoop development. He has worked on projects involving loading data from various sources into HDFS, performing ETL using Hive and Pig, and generating reports. Currently he is working on a bank reconciliation project involving matching data between systems using Spark and Scala.

Resume

This document provides a summary of M.V. Rama Kumar's professional experience and qualifications. He has over 3 years of experience in application development using Java and big data technologies like Hadoop, HDFS, MapReduce, Apache Pig, Hive and Sqoop. Some of his key responsibilities have included writing Pig scripts to optimize job execution time, creating Hive tables and queries, and using Sqoop to transfer data between HDFS and relational databases. He is currently working as a Software Engineer with Tata Consultancy Services on projects involving XML analytics using Hadoop and sentiment analysis on customer data in the banking domain.

PRAFUL_HADOOP

Prafulla Kumar Dash has over 6 years of experience in Hadoop development and administration. He has worked on various projects involving data ingestion from multiple sources into Hadoop, building Hive data warehouses, writing Pig and Spark programs, and developing ETL processes. Currently he is working as a Hadoop developer for IDFC Bank, where he has set up Hadoop clusters and develops reconciliation jobs.

Nagesh Hadoop Profile

This document contains the resume of Nagesh Madanala. It summarizes his career objective, professional experience, areas of expertise, Hadoop projects, and academic qualifications. Nagesh has over 2 years of experience as a Hadoop Developer with skills in Pig, Hive, Sqoop, HBase, Flume, SQL, and Java. His projects involve processing large datasets using MapReduce, loading data from databases into HDFS, and sentiment analysis on customer data in the banking domain. He has expertise in the Hadoop ecosystem, programming languages, databases, and tools.

Introduction To Big Data with Hadoop and Spark - For Batch and Real Time Proc...

Agile Testing Alliance

Introduction To Big Data with Hadoop and Spark - For Batch and Real Time Processing by "Sampat Kumar" from "Harman". The presentation was given at the #doppa17 DevOps++ Global Summit 2017. All copyrights are reserved by the author.

Resume - Narasimha Rao B V (TCS)

This document contains the professional summary and experience details of Venkata Narasimha Rao B. He has over 10 years of experience as a Big Data professional and is currently working as a Hadoop Administrator at Tata Consultancy Services. Some of his key qualifications and skills include expertise in Hadoop administration, installation and maintenance of Hadoop clusters, data ingestion, ETL processes, and automating workflows. He has strong technical skills and experience working with Hadoop, HDFS, MapReduce, Hive, Pig and other Big Data tools. He has administered large-scale Hadoop deployments for clients such as American Express, Electronic Arts and Sedgwick CMS.

R and Big Data using Revolution R Enterprise with Hadoop

Find out how Revolution Analytics is making it easier to work with Hadoop frameworks with Revolution R Enterprise.

spark_v1_2

Spark is an open-source cluster computing framework that can run analytics applications much faster than Hadoop by keeping data in memory rather than on disk. While Spark can access Hadoop's HDFS storage system and is often used as a replacement for Hadoop's MapReduce, Hadoop remains useful for batch processing and Spark is not expected to fully replace it. Spark provides speed, ease of use, and integration of SQL, streaming, and machine learning through its APIs in multiple languages.

Big Data Simplified - Is all about Ab'strakSHeN

This document discusses designing a new big data platform to replace an existing complex and outdated one. It analyzes challenges with the current platform, including inability to keep up with business needs. The proposed new platform called Dredge would use abstraction layers to integrate big data tools in a loosely coupled and scalable way. This would simplify development and maintenance while supporting business goals. Key aspects of Dredge include declarative configuration, logical workflows, and plug-and-play integration of tools like HDFS, Hive, HBase, Kafka and Spark in a reusable and event-driven manner. The new platform aims to improve scalability, reduce costs and better support analytics needs over time.

Big dataarchitecturesandecosystem+nosql

This document provides an overview of big data architecture, the Hadoop ecosystem, and NoSQL databases. It discusses common big data use cases, characteristics, and tools. It describes the typical 3-tier traditional architecture compared to the big data architecture using Hadoop. Key components of Hadoop like HDFS, MapReduce, Hive, Pig, Avro/Thrift, HBase are explained. The document also discusses stream processing tools like Storm, Spark and real-time query with Impala. It notes how NoSQL databases can integrate with Hadoop/MapReduce for both batch and real-time processing.

Predictive Analytics with Hadoop

This document discusses predictive analytics using Hadoop. It provides examples of recommendation and classification using big data. It describes obtaining large training datasets through crowdsourcing and implicit feedback. It also discusses operational considerations for predictive models, including snapshotting data, leveraging NFS for ingestion, and ensuring high availability. The document concludes with a question and answer section.

Big data processing with apache spark

Workshop

December 9, 2015

LBS College of Engineering

www.sarithdivakar.info | www.csegyan.org

http://sarithdivakar.info/2015/12/09/wordcount-program-in-python-using-apache-spark-for-data-stored-in-hadoop-hdfs/

Mukul-Resume

Mukul Upadhyay is seeking a position in Big Data technology with an IT company. He has over 5 years of experience developing Hadoop applications and working with technologies like MapReduce, Hive, HBase, HDFS, and Sqoop. Some of his responsibilities include architecting Big Data platforms, developing custom MapReduce jobs, importing and exporting data between HDFS and relational databases, and tuning and monitoring Hadoop clusters. He has worked on projects for clients in the USA and India involving building Hadoop-based analytics platforms and processing terabytes of device log data.

Srikanth hadoop 3.6yrs_hyd

Srikanth K - Hadoop Developer - Jawaharlal Nehru Technological University (JNTU), 2012, Hadoop Developer, ADP india Pvt Ltd from Alwasi Software Pvt Ltd, 3.6 yrs, Hyderabad

Real World Use Cases: Hadoop and NoSQL in Production

"Real World Use Cases: Hadoop and NoSQL in Production" by Tugdual Grall.

What’s important about a technology is what you can use it to do. I’ve looked at what a number of groups are doing with Apache Hadoop and NoSQL in production, and I will relay what worked well for them and what did not. Drawing from real world use cases, I show how people who understand these new approaches can employ them well in conjunction with traditional approaches and existing applications. Thread Detection, Datawarehouse Optimization, Marketing Efficiency, and Biometric Database are some of the examples presented.

Resume_VipinKP

This document contains the resume of Vipin KP, who has over 5 years of experience as a Big Data Hadoop Developer. He has extensive experience developing Hadoop applications for clients such as EMC, Apple, Dun & Bradstreet, Nielsen, Commonwealth Bank of Australia, and Nokia Siemens Networks. He has expertise in technologies such as Hadoop, Hive, Pig, Sqoop, Oozie, and Spark, and has developed ETL processes, data pipelines, and analytics solutions on Hadoop clusters. He holds a Master's degree in Computer Science and is Cloudera certified in Hadoop development.

Monika_Raghuvanshi

Monika Raghuvanshi is seeking a position as a Hadoop Administrator where she can apply her 7 years of experience in Hadoop and Unix administration. She has expertise in installing, configuring, and maintaining Hadoop clusters as well as ensuring security through Kerberos and SSL. She is proficient in Linux, networking, programming languages, and databases. Her experience includes projects with Barclays, GE Healthcare, Ontario Ministry of Transportation, and Nortel where she administered Hadoop and Unix systems.

Anil_BigData Resume

This document contains Anil Kumar's resume. It summarizes his contact information, professional experience working with Hadoop and related technologies like MapReduce, Pig, and Hive. It also lists his technical skills and qualifications, including being a MapR certified Hadoop Professional. His work experience includes developing MapReduce algorithms, installing and configuring MapR Hadoop clusters, and working on projects for clients like Pfizer and American Express involving data analytics using Hadoop, Spark, and Hive.

Similar to Sanath pabba hadoop resume 1.0

Rajeev kumar apache_spark & scala developer

Rajeev Kumar is an experienced Apache Spark and Scala developer based in Amsterdam, NL. He has over 8 years of experience working with big data technologies like Apache Spark, Scala, Java, Hadoop, and data integration tools. He is proficient in processing large structured and unstructured datasets to identify patterns and gain insights. His experience includes designing and developing Spark applications using Scala, ETL processes, data warehousing, and working with technologies like Hive, HDFS, MapReduce, Sqoop, Kafka and more.

resumePdf

Amit Kumar is a technical professional with 3+ years of experience in Spark, Scala, Java, Hadoop and AWS. He has experience developing data ingestion frameworks using these technologies. His current project involves ingesting data from multiple sources into AWS S3 and creating a golden record for each customer. He is responsible for data quality checks, creating jobs to ingest and process the data, and automating the workflow using AWS Lambda and EMR. Previously he has worked on projects involving data migration from Teradata to Hadoop, converting graphs to XML/Java code to replicate workflows, and developing software for aircraft cabin systems.

Arindam Sengupta _ Resume

Arindam Sengupta has over 17 years of experience in architecting, developing, implementing, and customizing client-server and web-based applications. He has extensive experience with technologies like Hadoop, HDFS, MapReduce, Pig, Hive, HBase, Spark, Java, Oracle, SQL Server, and IBM DB2. Some of his recent projects involve designing Hadoop-based solutions for data ingestion, analytics, and visualization using technologies like Flume, Sqoop, HBase, MapReduce, Spark, and REST services.

sudipto_resume

The candidate has over 2 years of experience in big data technologies like Hadoop, Hive, and Spark, and has worked on projects involving data ingestion, analytics, and building recommendation engines. They are seeking a position in big data or data science that provides opportunities for learning, growth, and career advancement.

Sidharth_CV

This document contains the resume of Sidharth Kumar which summarizes his professional experience working as a Hadoop Engineer at HCL Technologies Ltd. since 2014. It outlines his responsibilities maintaining Hadoop clusters and developing MapReduce applications to automate tasks. Sidharth has experience with technologies like Java, HBase, Solr, MapReduce, Spark and Flume. He also has skills in Linux, shell scripting, Oracle, MySQL and web technologies.

Sandish3Certs

The document provides a summary of a senior big data consultant with over 4 years of experience working with technologies such as Apache Spark, Hadoop, Hive, Pig, Kafka and databases including HBase, Cassandra. The consultant has strong skills in building real-time streaming solutions, data pipelines, and implementing Hadoop-based data warehouses. Areas of expertise include Spark, Scala, Java, machine learning, and cloud platforms like AWS.

HariKrishna4+_cv

This document provides a summary of R.HariKrishna's professional experience and skills. He has over 4 years of experience developing software using technologies like Java, Scala, Hadoop and NoSQL databases. Some of his key projects involved developing real-time analytics platforms using Spark Streaming, Kafka and Cassandra to analyze sensor data, and using Hadoop, Hive and Pig to perform predictive analytics on server logs and calculate production credit reports by analyzing banking transactions. He is proficient in MapReduce, Pig, Hive, HDFS and has skills in machine learning technologies like Mahout.

Started with-apache-spark

In the past, emerging technologies took years to mature. In the case of big data, effective tools are still emerging while analytics requirements change rapidly, forcing businesses to either keep up or be left behind.

Vijay

Vijay Muralidharan has over 5 years of experience as a Big Data Engineer working with Hadoop, Spark, Hive, Pig and other big data tools. He has a Master's in Cloud Computing and is a certified Hadoop Administrator. The document provides details of his skills, work experience implementing and managing Hadoop clusters, and education background working on projects related to performance evaluation of distributed file systems.

RESUME_N

The document contains details about Nageswara Rao Dasari including his contact information, career objective, professional summary, technical summary, educational summary, and assignments. It outlines his 4+ years of experience as a Software Engineer working with technologies like Hadoop, Java, SQL, and tools like Eclipse. It provides details on 3 projects he worked on involving building platforms for banking customer data, retail customer data processing, and a web application.

Sunshine consulting Mopuru Babu CV_Java_J2ee_Spring_Bigdata_Scala_Spark

This document provides a summary of Mopuru Babu's experience and skills. He has over 9 years of experience in software development using Java technologies and 2 years of experience in Hadoop development. He has expert knowledge of technologies like Hadoop, Hive, Pig, Spark, and databases like HBase and SQL. He has worked on projects in data analytics, ETL, and building applications on big data platforms. He is proficient in Java, Scala, SQL, Pig Latin, HiveQL and has strong skills in distributed systems, data modeling, and Agile methodologies.

Sunshine consulting mopuru babu cv_java_j2_ee_spring_bigdata_scala_Spark

This document provides a summary of Mopuru Babu's experience and skills. He has over 9 years of experience in software development using Java technologies and 2 years of experience in Hadoop development. He has expert knowledge of technologies like Hadoop, Hive, Pig, Spark, and databases like HBase and SQL. He has worked on projects for clients in various industries involving designing, developing, and deploying distributed applications that process and analyze large datasets.

Vishnu_HadoopDeveloper

Vishnu has over 5 years of experience in application development using Java and big data technologies like Hadoop. He has worked on projects involving web application development, data analytics using Hadoop components like HDFS, MapReduce, Pig and Hive. His skills include Java, J2EE, databases, version control and he has experience developing applications for both web and mobile. He is currently working as a Hadoop developer at Capgemini.

Manikyam_Hadoop_5+Years

M. Manikyam is a software engineer with over 5 years of experience developing solutions for big data problems using Apache Spark, Hadoop, and related technologies. He has extensive hands-on experience building real-time data streaming pipelines and analytics applications on large datasets. Some of his responsibilities have included developing Spark Streaming applications, integrating Hive and HBase, and administering Hadoop clusters. He is looking for new opportunities to innovate and improve software products.

Resume (1)

This document contains the resume summary of Nageswara Rao Dasari. It outlines his 3.1 years of experience as a Software Engineer working on BIG DATA Technologies like Hadoop, HDFS, MapReduce, Hive and Pig. It also mentions his 1.4 years of experience in core Java and lists his technical skills like Java, SQL, JavaScript, CSS, Oracle, MySQL. It summarizes his most recent roles on projects for Barclays Bank and Target, where he performed tasks like data loading, writing MapReduce programs and Hive queries, and resolving JIRA tickets.

Prasanna Resume

This document contains a summary and details for Prasanna Kumar, including his contact information, work experience, education, technical skills, and professional projects. He has 4 years of experience in application and Hadoop development with expertise in the Hadoop ecosystem, Hive, Impala, Pig, Phoenix, HBase, Spark, and Scala. His work experience includes projects involving building reports from sales data stored in HDFS using Hive, Pig, and Sqoop, and developing insurance management applications using Oracle ADF.

Enterprise Data Workflows with Cascading and Windows Azure HDInsight

SF Bay Area Azure Developers meetup at Microsoft, SF on 2013-06-11

http://www.meetup.com/bayazure/events/120889902/

Big data with java

Big Data Applications with Java discusses various big data technologies including Apache Hadoop, Apache Spark, Apache Kafka, and Apache Cassandra. It defines big data as huge volumes of data that cannot be processed using traditional approaches due to constraints on storage and processing time. The document then covers characteristics of big data like volume, velocity, variety, veracity, variability, and value. It provides overviews of Apache Hadoop and its ecosystem including HDFS and MapReduce. Apache Spark is introduced as an enhancement to MapReduce that processes data faster in memory. Apache Kafka and Cassandra are also summarized as distributed streaming and database platforms respectively. The document concludes by comparing Hadoop and Spark, outlining their relative performance, costs, processing capabilities,

Recently uploaded

Telemetry Solution for Gaming (AWS Summit'24)

Discover the cutting-edge telemetry solution implemented for Alan Wake 2 by Remedy Entertainment in collaboration with AWS. This comprehensive presentation dives into our objectives, detailing how we utilized advanced analytics to drive gameplay improvements and player engagement.

Key highlights include:

Primary Goals: Implementing gameplay and technical telemetry to capture detailed player behavior and game performance data, fostering data-driven decision-making.

Tech Stack: Leveraging AWS services such as EKS for hosting, WAF for security, Karpenter for instance optimization, S3 for data storage, and OpenTelemetry Collector for data collection. EventBridge and Lambda were used for data compression, while Glue ETL and Athena facilitated data transformation and preparation.

Data Utilization: Transforming raw data into actionable insights with technologies like Glue ETL (PySpark scripts), Glue Crawler, and Athena, culminating in detailed visualizations with Tableau.

Achievements: Successfully managing 700 million to 1 billion events per month at a cost-effective rate, with significant savings compared to commercial solutions. This approach has enabled simplified scaling and substantial improvements in game design, reducing player churn through targeted adjustments.

Community Engagement: Enhanced ability to engage with player communities by leveraging precise data insights, despite having a small community management team.

This presentation is an invaluable resource for professionals in game development, data analytics, and cloud computing, offering insights into how telemetry and analytics can revolutionize player experience and game performance optimization.

[VCOSA] Monthly Report - Cotton & Yarn Statistics May 2024

We are pleased to share with you the latest VCOSA statistical report on the cotton and yarn industry for the month of May 2024.

Starting from January 2024, the full weekly and monthly reports will only be available for free to VCOSA members. To access the complete weekly report with figures, charts, and detailed analysis of the cotton fiber market in the past week, interested parties are kindly requested to contact VCOSA to subscribe to the newsletter.

Senior Engineering Sample EM DOE - Sheet1.pdf

Sample Engineering Profiles from Product Companies DOE EM etc

06-18-2024-Princeton Meetup-Introduction to Milvus

tim.spann@zilliz.com

https://www.linkedin.com/in/timothyspann/

https://x.com/paasdev

https://github.com/tspannhw

https://github.com/milvus-io/milvus

Get Milvused!

https://milvus.io/

Read my Newsletter every week!

https://github.com/tspannhw/FLiPStackWeekly/blob/main/142-17June2024.md

For more cool Unstructured Data, AI and Vector Database videos check out the Milvus vector database videos here

https://www.youtube.com/@MilvusVectorDatabase/videos

Unstructured Data Meetups -

https://www.meetup.com/unstructured-data-meetup-new-york/

https://lu.ma/calendar/manage/cal-VNT79trvj0jS8S7

https://www.meetup.com/pro/unstructureddata/

https://zilliz.com/community/unstructured-data-meetup

https://zilliz.com/event

Twitter/X: https://x.com/milvusio https://x.com/paasdev

LinkedIn: https://www.linkedin.com/company/zilliz/ https://www.linkedin.com/in/timothyspann/

GitHub: https://github.com/milvus-io/milvus https://github.com/tspannhw

Invitation to join Discord: https://discord.com/invite/FjCMmaJng6

Blogs: https://milvusio.medium.com/ https://www.opensourcevectordb.cloud/ https://medium.com/@tspann

Expand LLMs' knowledge by incorporating external data sources into LLMs and your AI applications.

[VCOSA] Monthly Report - Cotton & Yarn Statistics March 2024

We are pleased to share with you the latest VCOSA statistical report on the cotton and yarn industry for the month of March 2024.

Starting from January 2024, the full weekly and monthly reports will only be available for free to VCOSA members. To access the complete weekly report with figures, charts, and detailed analysis of the cotton fiber market in the past week, interested parties are kindly requested to contact VCOSA to subscribe to the newsletter.

06-20-2024-AI Camp Meetup-Unstructured Data and Vector Databases

Tech Talk: Unstructured Data and Vector Databases

Speaker: Tim Spann (Zilliz)

Abstract: In this session, I will discuss unstructured data and the world of vector databases, and we will see how they differ from traditional databases, in which cases you need one and in which you probably don’t. I will also go over Similarity Search, where you get vectors from, and an example of a Vector Database Architecture, wrapping up with an overview of Milvus.

Introduction

Unstructured data, vector databases, traditional databases, similarity search

Vectors

Where, What, How, Why Vectors? We’ll cover a Vector Database Architecture

Introducing Milvus

What drives Milvus' Emergence as the most widely adopted vector database

Hi Unstructured Data Friends!

I hope this video had all the unstructured data processing, AI and Vector Database demo you needed for now. If not, there’s a ton more linked below.

My source code is available here

https://github.com/tspannhw/

Let me know in the comments if you liked what you saw, how I can improve and what should I show next? Thanks, hope to see you soon at a Meetup in Princeton, Philadelphia, New York City or here in the Youtube Matrix.

Get Milvused!

https://milvus.io/

Read my Newsletter every week!

https://github.com/tspannhw/FLiPStackWeekly/blob/main/141-10June2024.md

For more cool Unstructured Data, AI and Vector Database videos check out the Milvus vector database videos here

https://www.youtube.com/@MilvusVectorDatabase/videos

Unstructured Data Meetups -

https://www.meetup.com/unstructured-data-meetup-new-york/

https://lu.ma/calendar/manage/cal-VNT79trvj0jS8S7

https://www.meetup.com/pro/unstructureddata/

https://zilliz.com/community/unstructured-data-meetup

https://zilliz.com/event

Twitter/X: https://x.com/milvusio https://x.com/paasdev

LinkedIn: https://www.linkedin.com/company/zilliz/ https://www.linkedin.com/in/timothyspann/

GitHub: https://github.com/milvus-io/milvus https://github.com/tspannhw

Invitation to join Discord: https://discord.com/invite/FjCMmaJng6

Blogs: https://milvusio.medium.com/ https://www.opensourcevectordb.cloud/ https://medium.com/@tspann

https://www.meetup.com/unstructured-data-meetup-new-york/events/301383476/?slug=unstructured-data-meetup-new-york&eventId=301383476

https://www.aicamp.ai/event/eventdetails/W2024062014

Sid Sigma educational and problem solving power point- Six Sigma.ppt

Sid Sigma educational and problem solving power point

reading_sample_sap_press_operational_data_provisioning_with_sap_bw4hana (1).pdf

Great book. I recommend it.

Discovering Digital Process Twins for What-if Analysis: a Process Mining Appr...

This webinar discusses the limitations of traditional approaches to business process simulation based on hand-crafted models with restrictive assumptions. It shows how process mining techniques can be assembled to discover high-fidelity digital twins of end-to-end processes from event data.

Sanath pabba hadoop resume 1.0

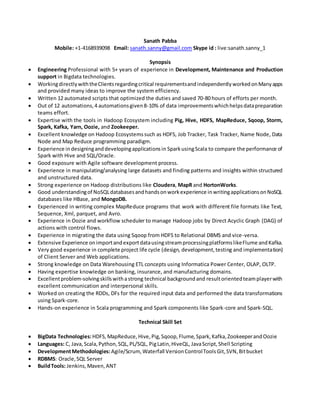

- 1. Sanath Pabba
  Mobile: +1-4168939098 Email: sanath.sanny@gmail.com Skype id: live:sanath.sanny_1

  Synopsis

  - Engineering professional with 5+ years of experience in development, maintenance and production support in Big Data technologies.
  - Worked directly with clients on critical requirements, worked independently on many applications, and contributed many ideas to improve system efficiency.
  - Wrote 12 automation scripts that streamlined routine duties and saved 70-80 hours of effort per month.
  - Four of the 12 automations yielded 8-10% data improvements, which eased the data preparation team's effort.
  - Expertise with tools in the Hadoop ecosystem, including Pig, Hive, HDFS, MapReduce, Sqoop, Storm, Spark, Kafka, YARN, Oozie, and ZooKeeper.
  - Excellent knowledge of Hadoop components such as HDFS, Job Tracker, Task Tracker, Name Node and Data Node, and of the MapReduce programming paradigm.
  - Experience in designing and developing applications in Spark using Scala to compare the performance of Spark with Hive and SQL/Oracle.
  - Good exposure to the Agile software development process.
  - Experience in manipulating and analysing large datasets and finding patterns and insights within structured and unstructured data.
  - Strong experience with Hadoop distributions such as Cloudera, MapR and Hortonworks.
  - Good understanding of NoSQL databases and hands-on experience writing applications on NoSQL databases such as HBase and MongoDB.
  - Experienced in writing complex MapReduce programs that work with different file formats such as Text, Sequence, XML, Parquet, and Avro.
  - Experience with Oozie as a workflow scheduler to manage Hadoop jobs as a Directed Acyclic Graph (DAG) of actions with control flows.
  - Experience in migrating data using Sqoop from HDFS to relational DBMS and vice versa.
  - Extensive experience importing and exporting data using stream processing platforms such as Flume and Kafka.
  - Very good experience in the complete project life cycle (design, development, testing and implementation) of client-server and web applications.
  - Strong knowledge of data warehousing ETL concepts using Informatica PowerCenter, OLAP, OLTP.
  - Domain expertise in banking, insurance, and manufacturing.
  - Excellent problem-solving skills with a strong technical background; result-oriented team player with excellent communication and interpersonal skills.
  - Created RDDs and DataFrames for the required input data and performed data transformations using Spark Core.
  - Hands-on experience in Scala programming and Spark components such as Spark Core and Spark SQL.

  Technical Skill Set

  - Big Data Technologies: HDFS, MapReduce, Hive, Pig, Sqoop, Flume, Spark, Kafka, ZooKeeper and Oozie
  - Languages: C, Java, Scala, Python, SQL, PL/SQL, Pig Latin, HiveQL, JavaScript, Shell Scripting
  - Development Methodologies: Agile/Scrum, Waterfall
  - Version Control Tools: Git, SVN, Bitbucket
  - RDBMS: Oracle, SQL Server
  - Build Tools: Jenkins, Maven, ANT

- 2. BusinessIntelligence Tools:Tableau,Splunk,QlikView,Alteryx Tools: IntelliJIDE CloudEnvironment:AWS Scripting: Unix shell scripting,Pythonscripting Scheduling:Maestro Career Highlights ProficiencyForte Extensivelyworkedondataextraction,Transformationandloadingdatafromvarioussourceslike DB2, Oracle and Flat files. StrongskillsinData RequirementAnalysisandDataMappingfor ETL processes. Well versedindevelopingthe SQLqueries,unions,andmultiple table joins. Well versedwithUNIXCommands&able towrite shellscriptsanddevelopedfew scriptsto reduce the manual interventionaspartof JobMonitoringAutomationProcess. Work experience Sep2019 till currentdate in WalmartCanada as CustomerExperienceSpecialist(Part-time). Jan 2018 to Mar 2019 in Infosys Limitedas SeniorSystemEngineer. Apr 2015 to Jan2018 in NTT Data Global DeliveryServices asApplicationsoftware dev.Consultant. Project Details Company : InfosysLimited. Project : Enterprise businesssolution(Metlife Inc.) Environment : Hadoop, Spark,Spark SQL, Scala,SQL Server, shell scripting. Scope: EBS is a project where Informatica pulls data from SFDC and sends to Big Data at RDZ. Big Data kicksitsprocesswhenthe triggerfile,control fileanddatafilesare received.Allthe filescheckvalidations. Afterall the transformationsare done,the data is storedin hive,pointingtoHDFS locations.The data is synced to bigsql and down streaming process is done by QlikView team. Roles& Responsibilities: Writing Sqoop Jobs that loads data from DBMS to Hadoop environments. Preparedcode thatinvokessparkscriptsinScalacode thatinvolvesinDataloads,pre-validations,data preparation and post validations. Prepared automation scripts using shell scripting that fetches the data utilizations across the cluster and notifies admins for every hour that helps admin team to avoid regular monitoring checks. Company : InfosysLimited. Project : BluePrism(MetlifeInc.) Environment : Spark,Spark SQL, Scala,Sqoop,SQL Server,shell scripting. 
Scope: BluePrism is a source application with SQL Server as its database. Big Data extracts the data from the BluePrism environments, merges two sources into one and loads the data into the Hive database. Big Data also archives the data into the corresponding history tables on either a monthly or an ad-hoc basis, based on the trigger file received from BluePrism. This is a weekly extract from SQL Server using Sqoop, after which the data is loaded through Scala. Jobs have been scheduled in Maestro.
Roles & Responsibilities:
Prepared data loading scripts using shell scripting which invoke Sqoop jobs.
Implemented data merging functionality which pulls data from various environments.
Developed scripts that back up data using the AVRO technique.

Company: Infosys Limited.
Project: Gross Processing Margin reports (Massmutual)
Environment: Spark, Spark SQL, Scala, Sqoop, SQL Server, shell scripting.
Scope: In GPM Reports, we receive the input.csv from the business. Based on the client request, we need to generate 6 reports. We receive the trigger file and data file for each report. Using a shell script, we validate the trigger files, the input file and the corresponding paths in Linux and HDFS; if every validation succeeds, we invoke the Hive script to generate the output file and place it in Linux. We append the data to Hive tables based on the output file. We are migrating the Pig scripts to Spark scripts in Scala; report generation will then be done by Spark, with the output stored in a Linux directory.
Roles & Responsibilities:
Based on the business need, prepared the data using HiveQL and Spark RDDs.
Using Hadoop, we load the data, prepare the data, implement filters to remove unwanted and uncertain fields, and merge all 6 reports from various teams.
Implemented 8 pre-validation rules and 7 post-validation rules covering data count, required fields and needful changes; in post-validation we move the data to the HDFS archive path.

Company: NTT Data Global Delivery Services.
Project: Compliance Apps (National Life Group)
Environment: Spark, Spark SQL, Scala, SQL Server, shell scripting, Pig.
Scope: Compliance app is a group of nine admin systems (Annuity Host, ERL, MRPS, CDI, Smartapp, WMA, VRPS, PMACS, SBR). The process is to load the data files into HDFS based on the trigger files we receive for the nine admin systems. There are three types of load: 1. Base load 2. Full load 3. Delta load. The task is to build the workflow that loads the data files into HDFS locations and into Hive tables. We need to create Hive tables in an optimized compressed format and load the data into them. We write a Hive script for the full load and a shell script to create the workflow.
We use Pig/Spark for the delta loads and a shell script to invoke Hive for the full load/history processing. We then schedule the jobs in Maestro for the daily run. Initially, we used Pig scripts for the delta load.

Company: NTT Data Global Delivery Services.
Project: Manufacturing Cost Walk Analysis (Honeywell)
Environment: Sqoop, Shell scripting, Hive.
Scope: The Manufacturing Cost Walk application is used to store information about the products manufactured by Honeywell. They used to store the data in SharePoint lists on a weekly basis, but it was very difficult for them to handle the data in SharePoint because of the long processing time. So we proposed a solution for them with Hive and Sqoop. Their source files are generated from CSV and XLS files, so we started importing the data into Hive and processing it based on their requirements.

Academia
Completed post-graduation from Loyalist College in Project Management (Sep 19 - May 20)
Completed diploma from Indian Institute of Information Technology (Hyderabad) in Artificial Intelligence and Data Visualization (April 2019 – Aug 2019)
Completed graduation under JNTU-HYD in Electronics and Communications (Aug 11 – May 14)