The document describes a proposed method for detecting copy-move image forgery using salient keypoint selection. Keypoints are extracted from images using SIFT and KAZE features. Salient keypoints are selected to reduce the number of keypoints and computation time for matching. Bounding boxes are generated around objects using selective search to match keypoints between boxes and detect duplicated regions, eliminating the need for outlier filtering. The method is evaluated on benchmark datasets and outperforms state-of-the-art techniques under geometric transformations and post-processing operations.

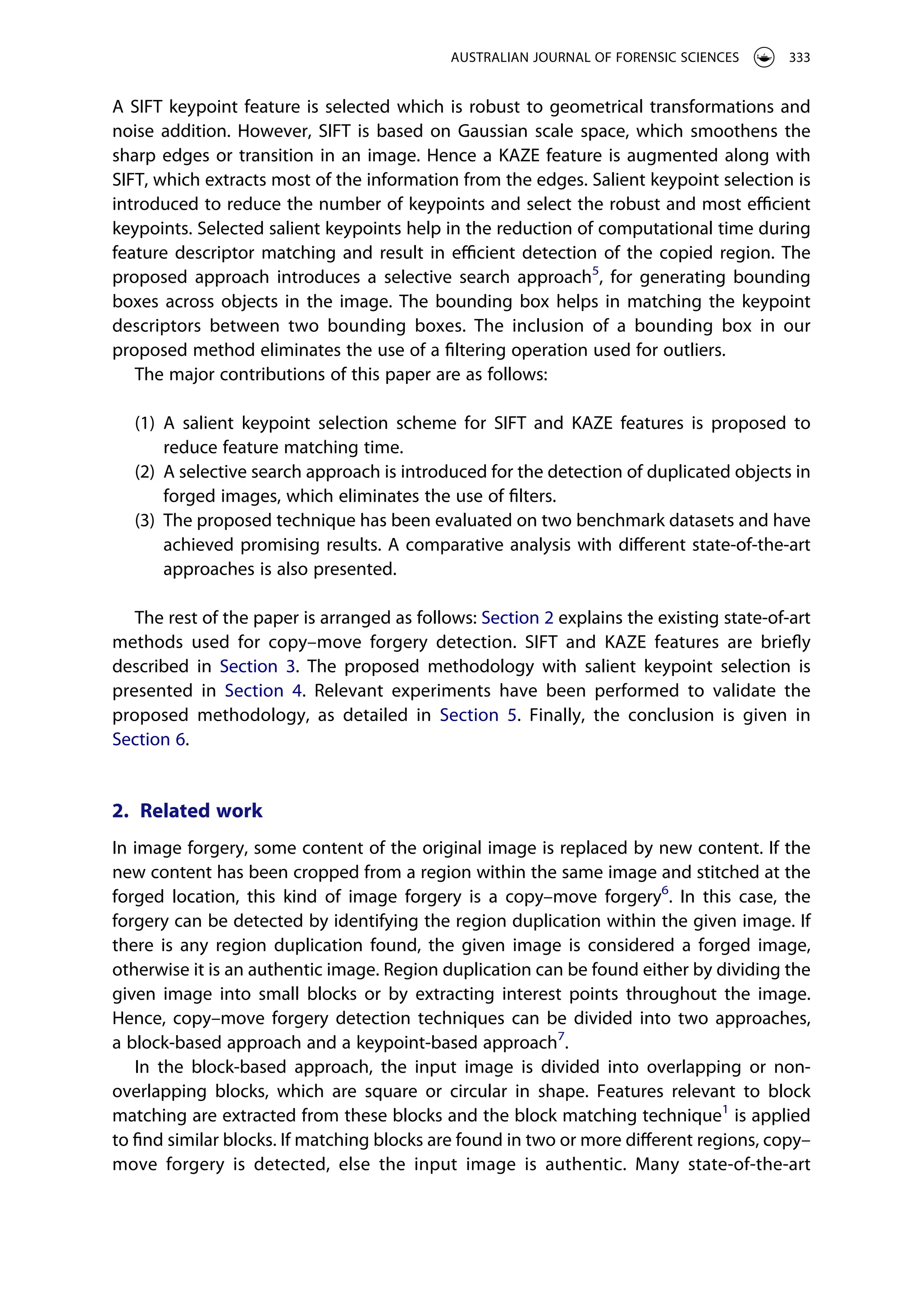

![where $(u_i, v_i)$ is the location of the $i$th keypoint, $s_i$ is the strength of the corresponding keypoint and $n$ is the total number of keypoints. The saliency score for each keypoint can be calculated as:

$$S(P_i) = \mathrm{Di}(P_i) + R(P_i) + D(P_i) \tag{10}$$

where, in equation (10), $\mathrm{Di}(P_i)$ is the distinctiveness, $R(P_i)$ is the repeatability and $D(P_i)$ is the detectability of the $i$th keypoint.

(1) Distinctiveness: It is defined as the diversity of a keypoint descriptor from the other keypoints in an image. This can be measured by finding the summation of the Euclidean distance ($L$) of each pair of keypoint descriptors in an image, as given in equation (11).

$$\mathrm{Di}(P_i) = \frac{1}{n-1} \sum_{(u_i, v_i) \in P_i,\; i \neq j} L(d_i, d_j) \tag{11}$$

(2) Repeatability: It is an estimate of the invariance of a keypoint descriptor across various transformations. This can be measured by averaging the Euclidean distance between the keypoint descriptor in the input image and the corresponding keypoint descriptor in the transformed image. In equation (12), $t$ represents the number of geometric transformations.

$$R(P_i) = \frac{1}{t} \sum_{(i,j) \in 1}^{n} L(d_i, d_j^{p}) \tag{12}$$

(3) Detectability: It is defined as the ability of a keypoint to be detected under different lighting conditions or viewpoints. The detectability of a keypoint is calculated as the average strength ($s$) of each keypoint and its corresponding transform, as given in equation (13).

$$D(P_i) = \frac{1}{t} \sum_{i \in 1}^{n} s_i \tag{13}$$

The saliency score of each keypoint is calculated using equation (10) after normalizing the scores $\mathrm{Di}$, $R$ and $D$ to the range [0, 1], and the mean saliency score ($\mu_S$) is calculated as given in equation (14). Salient keypoints $P_S$ for SIFT and KAZE are selected as per equation (15). Here, $\mu_S$ serves as the saliency score threshold: keypoints whose saliency score $S(P_i)$ falls below $\mu_S$ are discarded, and the remaining keypoints are kept as salient keypoints. The procedure for selecting salient keypoints for SIFT and KAZE is given in the proposed Algorithm 1.

$$\mu_S = \frac{1}{n} \sum_{1}^{n} S(P_i) \tag{14}$$

$$P_S = P_i, \quad \text{when } S(P_i) \geq \mu_S, \quad i = 1, \ldots, n \tag{15}$$

AUSTRALIAN JOURNAL OF FORENSIC SCIENCES 339](https://image.slidesharecdn.com/salientkeypoint-basedcopymoveimageforgerydetection-240306170303-16f304e6/75/Salient-keypoint-based-copy-move-image-forgery-detection-pdf-10-2048.jpg)
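The scoring in equations (10) to (15) can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the list-based data layout (`desc`, `desc_t`, `strength_t`) and the min-max form of the normalization are assumptions made for illustration. Repeatability is added exactly as equation (12) is written, and a score equal to the mean is assumed to pass the threshold.

```python
import math

def euclidean(a, b):
    """Euclidean distance L between two descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def min_max(values):
    """Rescale values to [0, 1] (assumed normalization; constant input maps to 0)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def saliency_scores(desc, desc_t, strength_t):
    """desc[i]: descriptor of keypoint i in the input image.
    desc_t[k][i]: descriptor of the same keypoint under transformation k.
    strength_t[k][i]: strength of keypoint i under transformation k."""
    n, t = len(desc), len(desc_t)
    if n < 2 or t < 1:
        raise ValueError("need at least two keypoints and one transformation")
    # Equation (11): mean distance to every other descriptor.
    di = [sum(euclidean(desc[i], desc[j]) for j in range(n) if j != i) / (n - 1)
          for i in range(n)]
    # Equation (12): mean distance to the transformed counterparts.
    r = [sum(euclidean(desc[i], desc_t[k][i]) for k in range(t)) / t
         for i in range(n)]
    # Equation (13): mean strength over the transformations.
    d = [sum(strength_t[k][i] for k in range(t)) / t for i in range(n)]
    # Equation (10) on the normalized terms.
    return [a + b + c for a, b, c in zip(min_max(di), min_max(r), min_max(d))]

def select_salient(scores):
    """Equations (14) and (15): keep indices whose score reaches the mean."""
    mu = sum(scores) / len(scores)
    return [i for i, s in enumerate(scores) if s >= mu], mu

# Toy data (hypothetical): three 2-D descriptors and one identity transformation.
desc = [[0.0, 0.0], [1.0, 0.0], [10.0, 0.0]]
scores = saliency_scores(desc, [desc], [[1.0, 2.0, 3.0]])
salient, mu = select_salient(scores)
print(salient, round(mu, 3))
```

In this toy case only the isolated, strongest keypoint survives the threshold, which mirrors the intended effect of reducing the keypoint set before matching.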

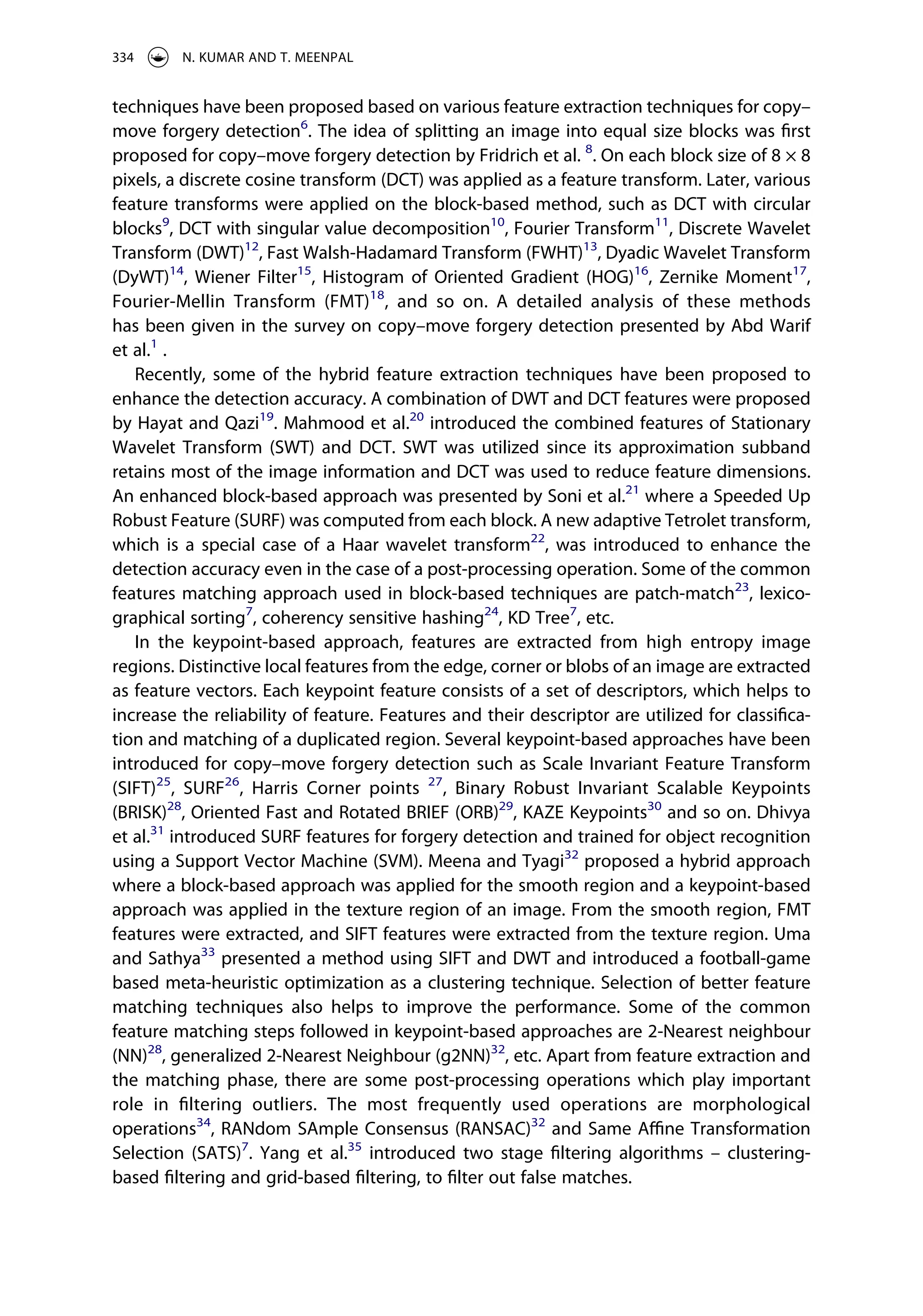

![Algorithm 1: Selection of salient SIFT and KAZE keypoints from a given image

Input: input image ($I$) and transformed image ($I^p$)

Output: $P_S$

Compute SIFT and KAZE keypoints ($P$) from $I$ and $I^p$; let $n$ be the number of SIFT or KAZE keypoints.

$P' = [\,]$

for $P_i$, $i \leftarrow 1$ to $n$ do

Select matching keypoints ($P'$) between $I$ and $I^p$.

if $P(I) = P(I^p)$ then

$P'_j \leftarrow P_i$, where $i = 1, \ldots, n$

end if

end for

$S = [\,]$

for $P'_j$, $j \leftarrow 1$ to $m$ do

$S(P'_j) = \mathrm{Di}(P'_j) + R(P'_j) + D(P'_j)$, using equations (11)–(13)

end for

$P_S = [\,]$

for $P'_j$, $j \leftarrow 1$ to $m$ do

if $S(P'_j) \geq \mu_S$ then

$P_S \leftarrow P_S \cup \{P'_j\}$

end if

end for

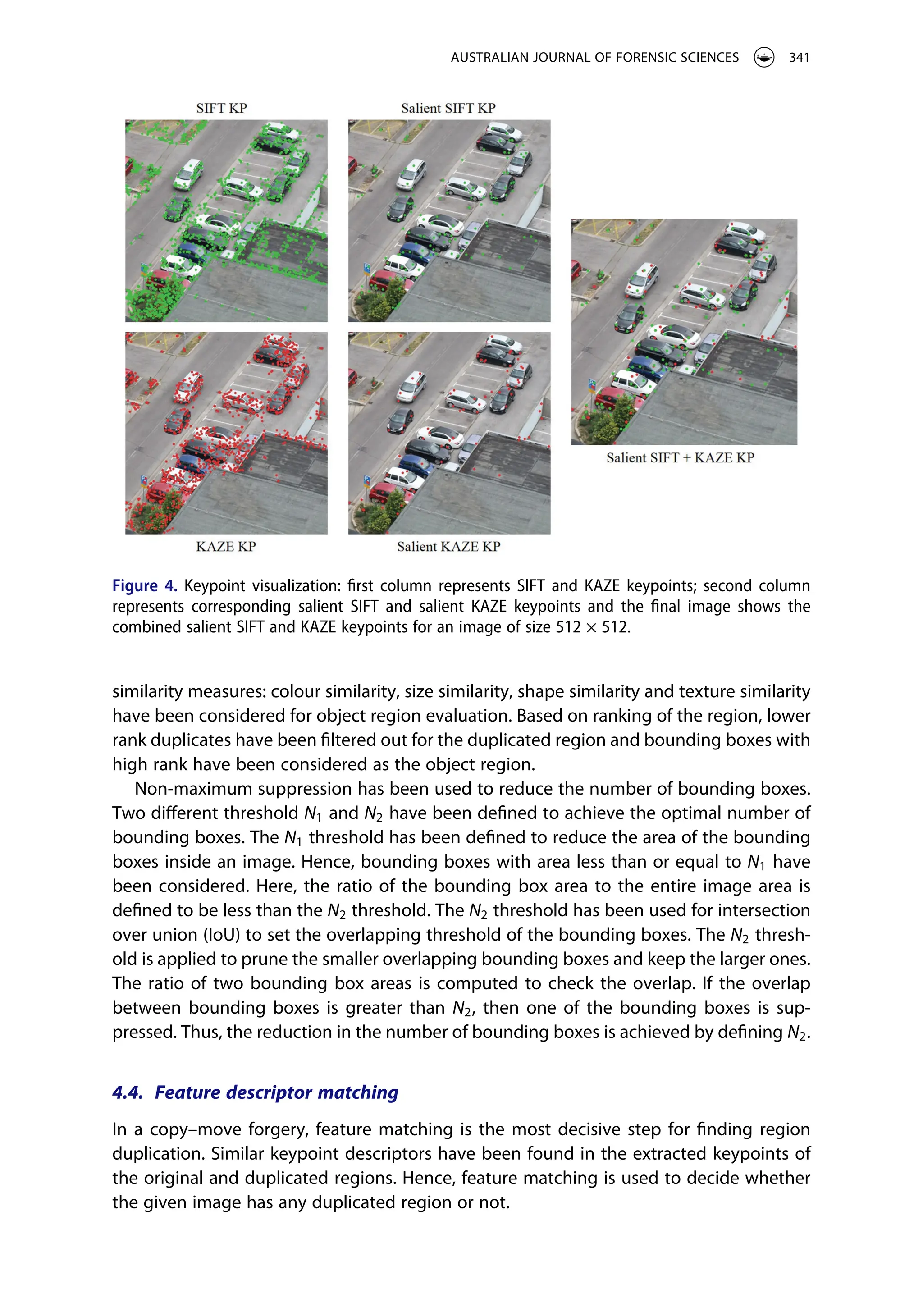

SIFT and KAZE keypoints and their corresponding selected salient keypoints are visualized in Figure 4. Salient SIFT and KAZE keypoints are plotted together in the third column of the figure, where a considerable reduction in the number of keypoints can be observed. This reduction in $n$ is the motivation for applying salient keypoint selection: it reduces the time required for feature matching, which is the decisive factor in copy–move forgery detection.
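To see why a smaller n matters for matching time, consider a short worked example. It assumes exhaustive matching, in which every unordered pair of keypoints is compared once; the keypoint counts are hypothetical and are not taken from the paper.

```python
def pair_count(n):
    """Number of unordered keypoint pairs compared by exhaustive matching."""
    return n * (n - 1) // 2

# Hypothetical keypoint counts before and after salient keypoint selection.
before, after = 2000, 800

print(pair_count(before))  # 1999000 comparisons
print(pair_count(after))   # 319600 comparisons
```

Because the number of comparisons grows roughly with the square of n, keeping 40% of the keypoints in this example leaves about 16% of the comparisons.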

4.3. Region proposal using selective search

In copy–move forgery, the duplicated regions are mostly objects. To improve detection accuracy, the different objects present in the image should therefore be detected first and then checked for duplication. Motivated by this idea, a selective search-based region proposal is used for detecting objects in the image, as discussed in Uijlings et al.⁵ In an image, a region proposal finds prospective objects using segmentation. A region proposal is executed by combining pixels into smaller segments. The region proposals generated are of different scales and varying aspect ratios, as explained in Verma et al.⁴⁰ The region proposal approach used here is much faster and more efficient than the sliding window approach for object detection. Selective search uses segmentation based on image structure to generate class-independent object locations. Four different image

340 N. KUMAR AND T. MEENPAL](https://image.slidesharecdn.com/salientkeypoint-basedcopymoveimageforgerydetection-240306170303-16f304e6/75/Salient-keypoint-based-copy-move-image-forgery-detection-pdf-11-2048.jpg)
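The grouping idea behind the region proposal step can be illustrated with a toy Python sketch. It is a deliberate simplification, not the selective search algorithm itself: regions are described only by a bounding box and a mean intensity, similarity is the absolute intensity difference, and all names (`propose_regions`, `touching`, `merge_boxes`) are hypothetical. The sketch repeatedly merges the most similar touching pair of regions and records every box created, which yields proposals at several scales.

```python
def merge_boxes(a, b):
    """Smallest box (x0, y0, x1, y1) enclosing both a and b."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))

def touching(a, b):
    """True if two boxes overlap or share an edge."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def propose_regions(segments):
    """segments: list of (box, mean_intensity) for the initial small segments.
    Returns every box seen while greedily merging similar touching regions."""
    regions = list(segments)
    proposals = [box for box, _ in regions]
    while len(regions) > 1:
        best = None
        for i in range(len(regions)):
            for j in range(i + 1, len(regions)):
                (bi, ci), (bj, cj) = regions[i], regions[j]
                if touching(bi, bj):
                    diff = abs(ci - cj)  # toy similarity: intensity difference
                    if best is None or diff < best[0]:
                        best = (diff, i, j)
        if best is None:
            break  # no touching regions are left to merge
        _, i, j = best
        (bi, ci), (bj, cj) = regions[i], regions[j]
        merged = (merge_boxes(bi, bj), (ci + cj) / 2)  # unweighted mean, for brevity
        regions = [r for k, r in enumerate(regions) if k not in (i, j)] + [merged]
        proposals.append(merged[0])
    return proposals

# Two adjacent segments of similar intensity and one isolated bright segment.
segments = [((0, 0, 1, 1), 10), ((1, 0, 2, 1), 12), ((5, 5, 6, 6), 200)]
for box in propose_regions(segments):
    print(box)
```

The two adjacent segments are merged into one larger box, while the isolated segment stays a separate proposal; keypoints could then be matched between such boxes rather than across the whole image.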

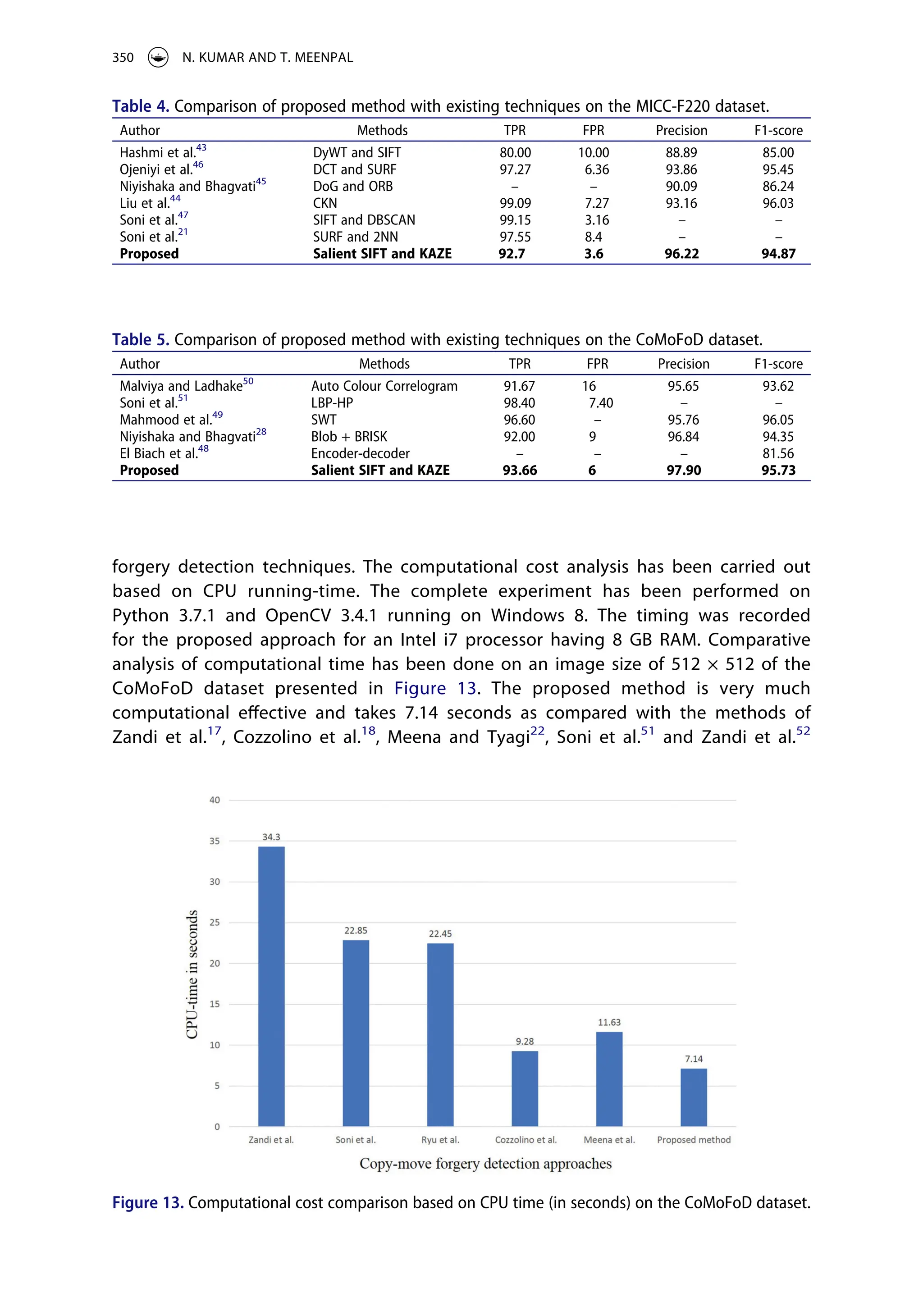

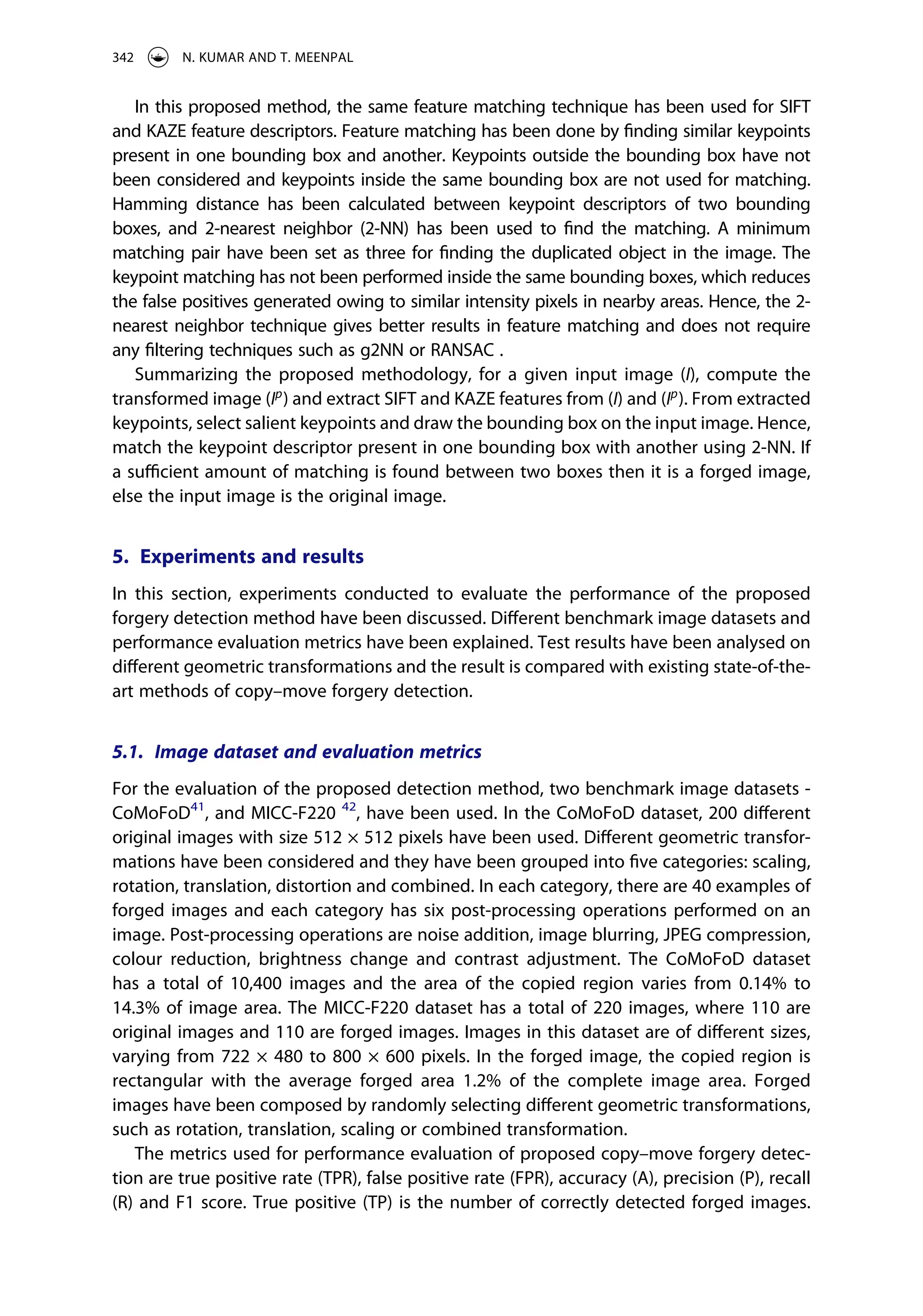

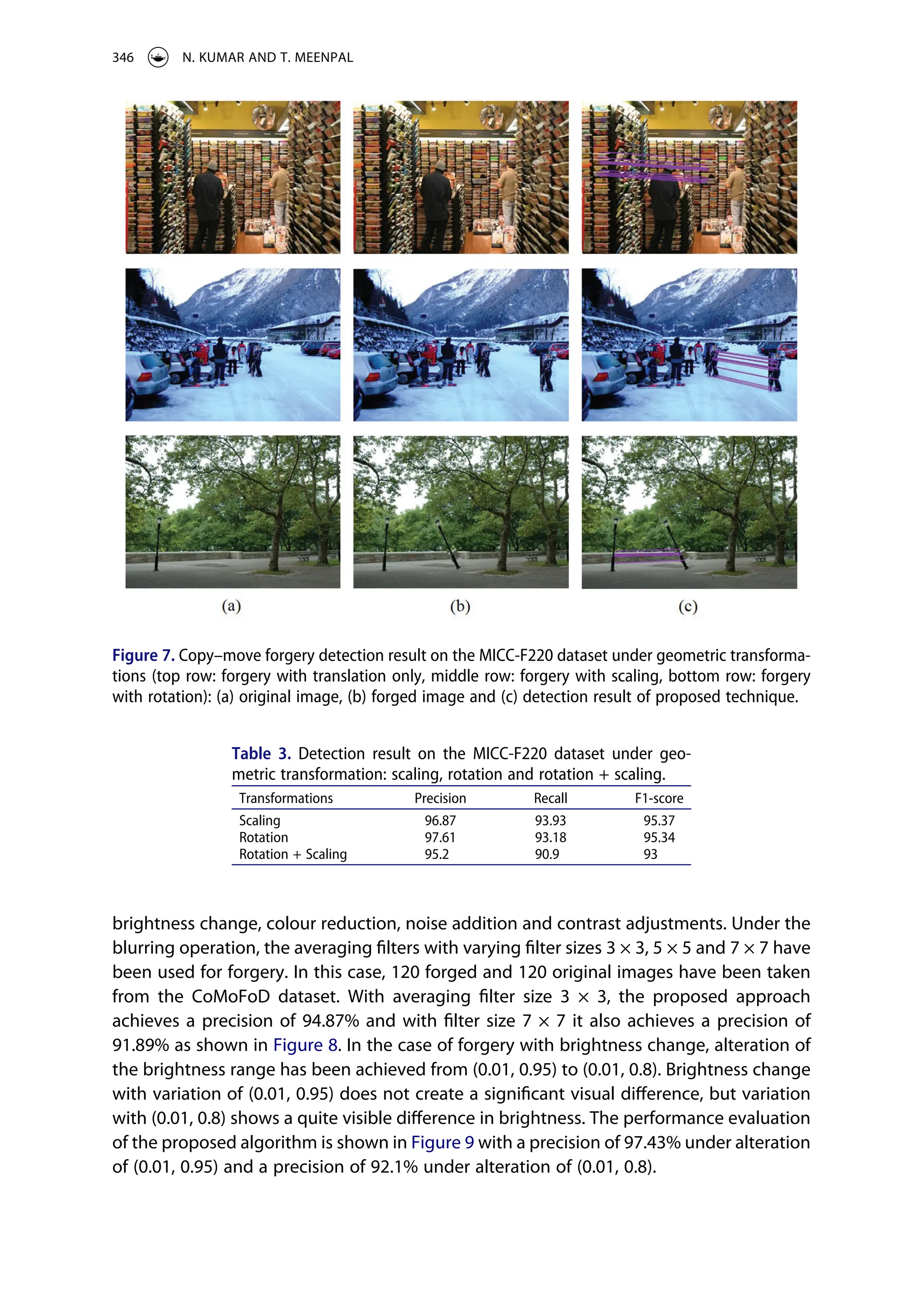

![An experimental analysis is also performed to assess the robustness of the proposed method under noise addition. In this case, detection is performed on 120 forged images corrupted with zero-mean white Gaussian noise with variance ($\sigma^2$) values of [0.0005, 0.005, 0.009]. The detection result under noise addition is illustrated in Figure 10, which shows that the proposed approach is not much affected by noise addition. Another experiment is performed under colour reduction with a varying number of colours per channel: [128, 64, 32]. The detection result under colour reduction is illustrated in Figure 11, which shows that the proposed approach performs well even after the number of colours per channel is changed. Contrast adjustment is another post-processing operation on which the robustness of the proposed method is tested. The detection result for different contrast ranges is shown in Figure 12, which shows the effectiveness of the proposed method under different contrast changes. Overall, the evaluation shows that the proposed approach is also not much affected by blurring or brightness change operations, which demonstrates its robustness against post-processing operations.
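The two post-processing operations with explicit parameters above can be reproduced on test data with a sketch like the following. It makes several assumptions that the text does not state: intensities are normalized to [0, 1] before noise is added, noisy values are clipped back to that range, and colour reduction is uniform quantization of 8-bit channel values. The function names are hypothetical.

```python
import random

def add_gaussian_noise(pixels, variance, seed=0):
    """pixels: intensities in [0, 1]. Adds zero-mean Gaussian noise, then clips."""
    rng = random.Random(seed)  # fixed seed so the experiment is repeatable
    sigma = variance ** 0.5
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]

def reduce_colours(values, levels):
    """values: 8-bit channel values (0..255). Quantizes to `levels` levels."""
    step = 256 // levels
    return [(v // step) * step for v in values]

row = [0.10, 0.50, 0.90]        # hypothetical normalized pixel row
channel = [0, 7, 8, 130, 255]   # hypothetical 8-bit channel values

for variance in (0.0005, 0.005, 0.009):
    print(variance, add_gaussian_noise(row, variance))

for levels in (128, 64, 32):
    print(levels, reduce_colours(channel, levels))
```

Each distorted copy of a forged image would then be passed to the detector, and the detection rate compared across parameter values.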

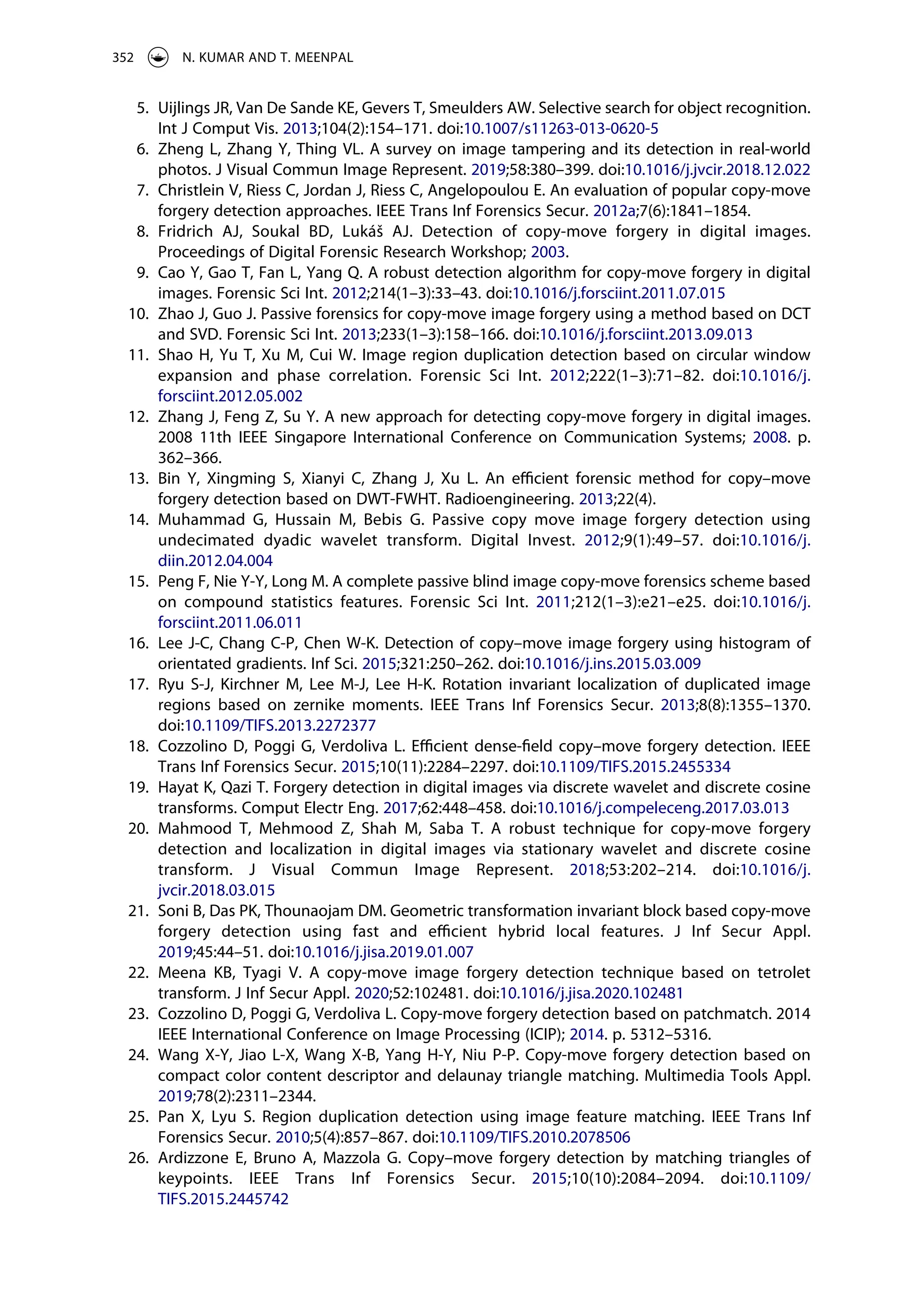

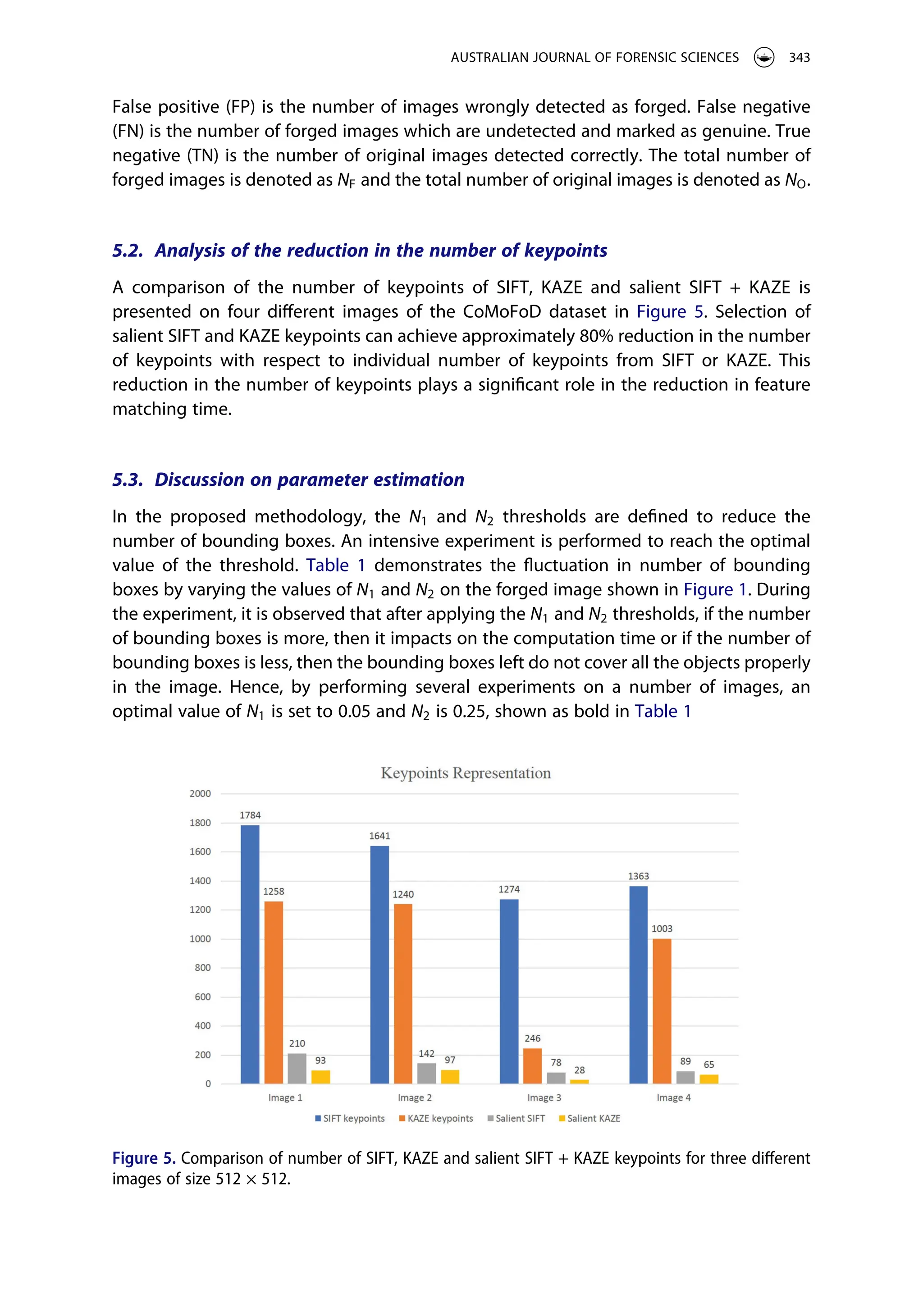

5.7. Comparative analysis of the proposed approach with existing detection approaches

The experimental results above show that the proposed approach efficiently detects copy–move forgery under different geometric transformations and post-processing operations. A comparative analysis based on TPR, FPR, precision and F1-score has been performed against existing detection approaches²¹,⁴³–⁴⁷ on the MICC-F220 dataset, and the performance of the proposed method is highlighted in bold in Table 4. It is observed that the proposed method performs better than all other methods except⁴⁷

Figure 8. Detection results under the blurring operation with different averaging filter sizes.

](https://image.slidesharecdn.com/salientkeypoint-basedcopymoveimageforgerydetection-240306170303-16f304e6/75/Salient-keypoint-based-copy-move-image-forgery-detection-pdf-18-2048.jpg)
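For reference, the four measures used in the comparison follow from image-level confusion counts by their standard definitions. The sketch below assumes a forged image counts as a positive; the counts in the usage example are hypothetical and are not results from the paper.

```python
def detection_metrics(tp, fp, tn, fn):
    """Standard definitions; assumes every denominator is nonzero.
    tp: forged images detected as forged, fn: forged images missed,
    fp: original images flagged as forged, tn: original images passed."""
    tpr = tp / (tp + fn)        # true positive rate (recall)
    fpr = fp / (fp + tn)        # false positive rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * tpr / (precision + tpr)
    return {"TPR": tpr, "FPR": fpr, "precision": precision, "F1": f1}

# Hypothetical counts for illustration only.
for name, value in detection_metrics(tp=90, fp=10, tn=100, fn=20).items():
    print(f"{name}: {value:.4f}")
```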