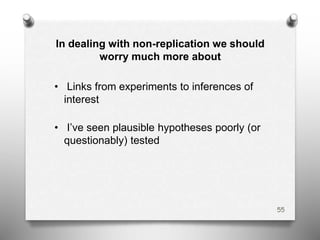

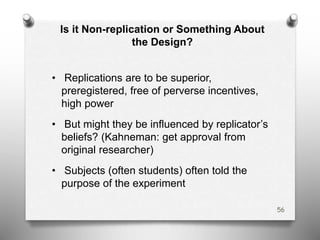

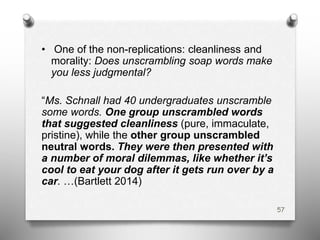

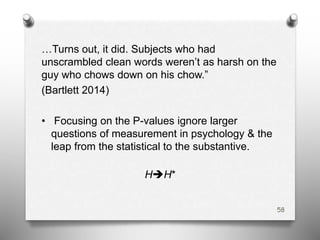

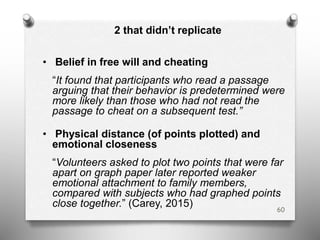

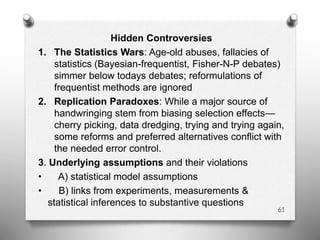

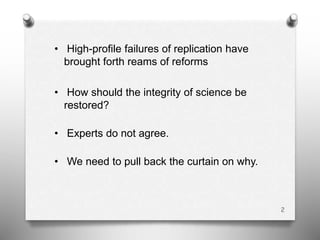

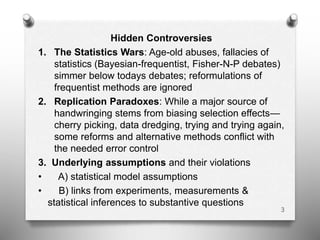

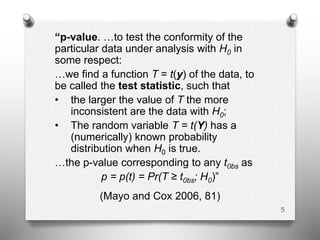

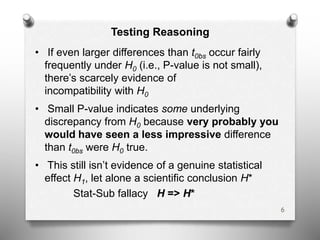

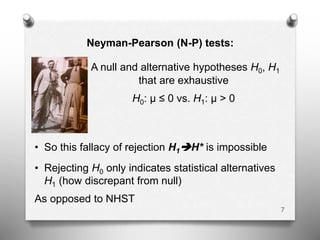

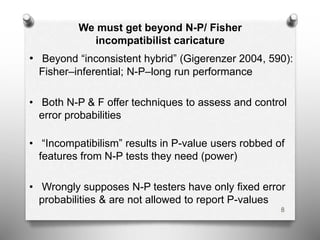

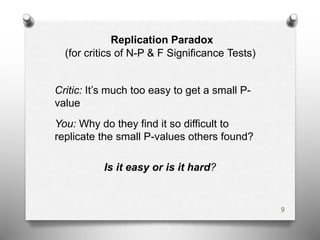

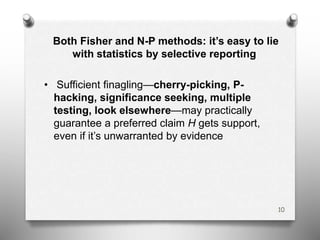

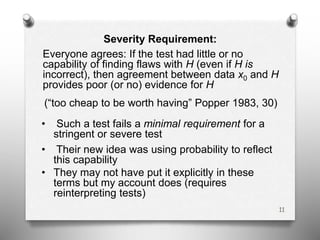

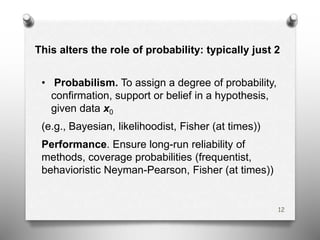

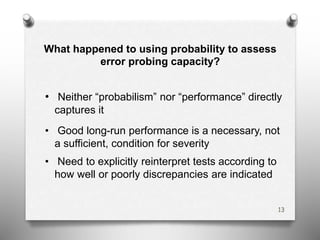

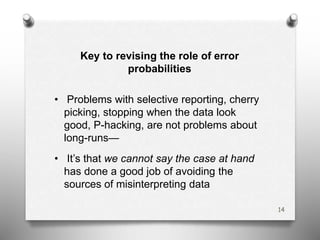

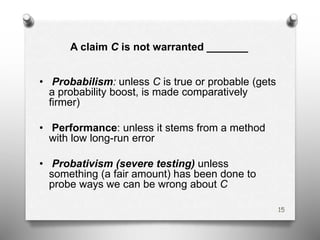

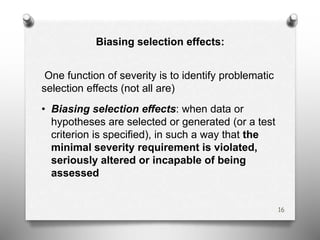

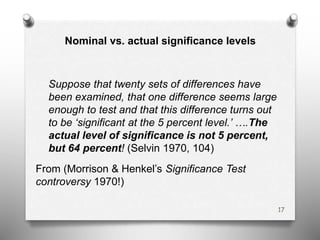

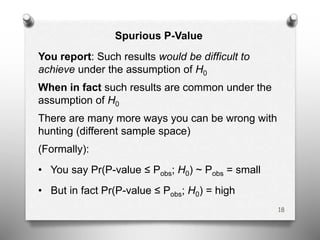

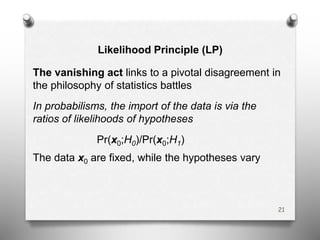

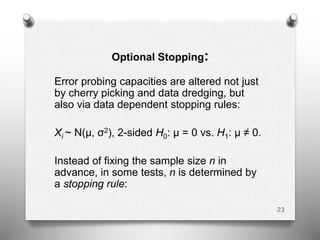

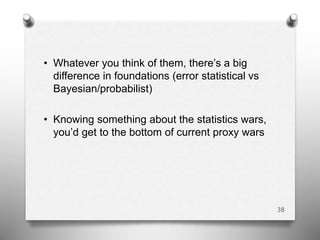

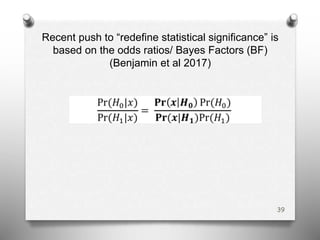

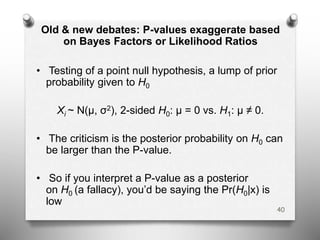

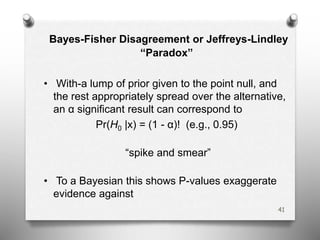

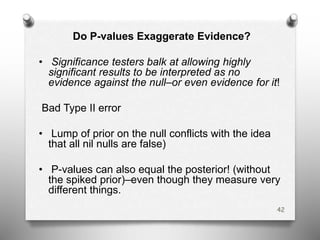

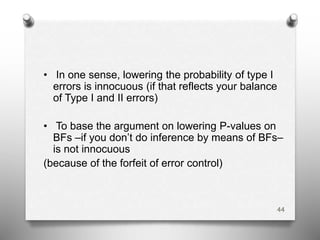

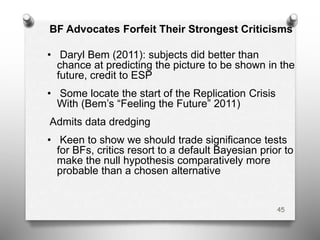

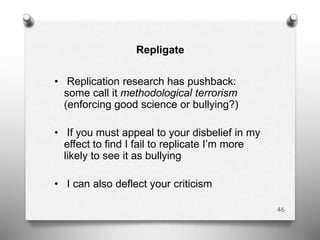

The document examines the replication crisis in psychology, discussing the controversies surrounding statistical methods and the integrity of scientific research. It highlights issues such as p-hacking, selective reporting, and the limitations of traditional significance tests. The author calls for a re-evaluation of the role of probability in testing and urges improved error control to enhance the reliability of scientific findings.

![Significance Tests
• The most used methods are most criticized
• Statistical significance tests are a small part of a rich set of: “techniques for systematically appraising and bounding the probabilities … of seriously misleading interpretations of data [bounding error probabilities]” (Birnbaum 1970, 1033)
• These I call error statistical methods (or sampling theory).
4](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-4-320.jpg)

![All error probabilities violate the LP (even without selection effects):
Sampling distributions, significance levels, power, all depend on something more [than the likelihood function]–something that is irrelevant in Bayesian inference–namely the sample space (Lindley 1971, 436)
The LP implies…the irrelevance of predesignation, of whether a hypothesis was thought of beforehand or was introduced to explain known effects (Rosenkrantz 1977, 122)
22](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-22-320.jpg)
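The dependence on the sample space that Lindley describes can be made concrete with a standard textbook illustration. The data (9 successes, 3 failures), the null value θ = 0.5, and the function names below are assumptions chosen for this sketch and do not come from the slides. Both sampling plans give a likelihood proportional to θ⁹(1 − θ)³, yet the one-sided p-values differ because the sample spaces differ.

```python
from math import comb

def binomial_p_value(successes, n, theta0=0.5):
    """P(X >= successes) when the number of trials n is fixed in advance."""
    return sum(
        comb(n, k) * theta0**k * (1 - theta0) ** (n - k)
        for k in range(successes, n + 1)
    )

def negative_binomial_p_value(successes, failures, theta0=0.5):
    """P(at least `successes` successes before the `failures`-th failure).

    Equivalent to seeing at most failures - 1 failures in the first
    successes + failures - 1 trials.
    """
    m = successes + failures - 1
    return sum(
        comb(m, j) * (1 - theta0) ** j * theta0 ** (m - j)
        for j in range(failures)
    )

# Hypothetical data: 9 successes and 3 failures, testing theta = 0.5 vs theta > 0.5.
p_fixed_n = binomial_p_value(9, 12)           # plan A: stop after 12 trials
p_stop_rule = negative_binomial_p_value(9, 3)  # plan B: stop at the 3rd failure

print(f"fixed n = 12:        p = {p_fixed_n:.4f}")
print(f"stop at 3rd failure: p = {p_stop_rule:.4f}")
```

Under these assumptions the fixed-n p-value is 299/4096 (about 0.073) and the stop-at-third-failure p-value is 67/2048 (about 0.033), so the two plans fall on opposite sides of 0.05. An analysis obeying the LP would treat the two data sets as evidentially equivalent.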

![28
What Counts as Cheating?
“[I]f the sampling plan is ignored, the researcher is able to always reject the null hypothesis, even if it is true. This example is sometimes used to argue that any statistical framework should somehow take the sampling plan into account. Some people feel that ‘optional stopping’ amounts to cheating…. This feeling is, however, contradicted by a mathematical analysis.” (Eric-Jan Wagenmakers, 2007, 785)
But the “proof” assumes the likelihood principle (LP) by which error probabilities drop out. (Edwards, Lindman, and Savage 1963)](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-28-320.jpg)
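The effect of ignoring the sampling plan can be checked with a small simulation. This is a minimal sketch under stated assumptions, none of which come from the slides: standard normal data with the null true, a two-sided z-test at the nominal 0.05 level, a cap of 100 observations, 2000 simulated studies, and hypothetical function names. One researcher tests once at n = 100. The other tests after every observation and stops at the first nominally significant result.

```python
import random
from math import sqrt

def rejects_with_fixed_n(rng, n, z_crit=1.96):
    """Single two-sided z-test after exactly n observations (null is true)."""
    total = sum(rng.gauss(0.0, 1.0) for _ in range(n))
    return abs(total / sqrt(n)) >= z_crit

def rejects_with_optional_stopping(rng, n_max, z_crit=1.96):
    """Test after every observation; stop as soon as |z| reaches z_crit."""
    total = 0.0
    for n in range(1, n_max + 1):
        total += rng.gauss(0.0, 1.0)
        if abs(total / sqrt(n)) >= z_crit:
            return True
    return False

def rejection_rate(test, n, trials=2000, seed=1):
    """Share of simulated studies in which the true null is rejected."""
    rng = random.Random(seed)
    return sum(test(rng, n) for _ in range(trials)) / trials

fixed_rate = rejection_rate(rejects_with_fixed_n, 100)
optional_rate = rejection_rate(rejects_with_optional_stopping, 100)

print(f"fixed n = 100:     {fixed_rate:.3f}")
print(f"optional stopping: {optional_rate:.3f}")
```

The fixed-n rate should sit near the nominal 0.05, while the optional-stopping rate comes out several times larger and keeps growing as the cap on n is raised. That inflation is what an error statistical account registers and what drops out once the LP is assumed.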

![30
“This [optional stopping] example is cited as a counter-example by both Bayesians and frequentists! If we statisticians can't agree which theory this example is counter to, what is a clinician to make of this debate?” (Little 2006, 215).
Underlies today’s disputes](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-30-320.jpg)

![• “[T]he reason that Bayesians can regard P-values as overstating the evidence against the null is simply a reflection of the fact that Bayesians can disagree sharply with each other” (Stephen Senn 2002, 2442)
• “In fact it is not the case that P-values are too small, but rather that Bayes point null posterior probabilities are much too big!…. Our concern should not be to analyze these misspecified problems, but to educate the user so that the hypotheses are properly formulated,” (Casella and Berger 1987b, 334, 335).
• “concentrating mass on the point null hypothesis is biasing the prior in favor of H0 as much as possible” (ibid. 1987a, 111) whether in 1 or 2-sided tests
43](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-43-320.jpg)

![Bem’s Response

Whenever the null hypothesis is sharply defined but

the prior distribution on the alternative hypothesis is

diffused over a wide range of values, as it is [here] it

boosts the probability that any observed data will be

higher under the null hypothesis than under the

alternative. This is known as the Lindley-Jeffreys

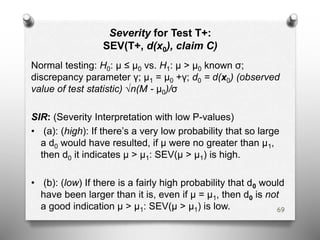

paradox: A frequentist analysis that yields strong

evidence in support of the experimental hypothesis

can be contradicted by a misguided Bayesian analysis

that concludes that the same data are more likely

under the null. (Bem et al., 717)

47](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-47-320.jpg)
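The Lindley-Jeffreys paradox can be worked through numerically. The sketch below assumes a setup that is not in the slides: a normal mean with known σ, a point null μ = 0, and a N(0, τ²) prior on μ under the alternative. Under those assumptions the Bayes factor in favor of the null is BF01 = sqrt(1 + nτ²/σ²) · exp(−½ z² · nτ² / (σ² + nτ²)), where z is the usual test statistic. The function names and the chosen values of n and τ are illustrative.

```python
from math import erfc, exp, sqrt

def two_sided_p_value(z):
    """Two-sided p-value of a standard normal test statistic."""
    return erfc(abs(z) / sqrt(2))

def bf01(z, n, tau=1.0, sigma=1.0):
    """Bayes factor for H0: mu = 0 against H1: mu ~ N(0, tau^2).

    Assumes n normal observations with known sigma and z = sqrt(n) * mean / sigma.
    """
    ratio = n * tau**2 / sigma**2
    return sqrt(1 + ratio) * exp(-0.5 * z**2 * ratio / (1 + ratio))

z = 1.96  # the same "just significant" result in every row
print(f"p-value = {two_sided_p_value(z):.4f}")
for n in (10, 100, 10_000):
    b = bf01(z, n)
    # Posterior probability of H0 under an assumed 50/50 prior on H0 vs H1.
    print(f"n = {n:>6}: BF01 = {b:6.2f}, P(H0 | data) = {b / (1 + b):.3f}")

# A more diffuse prior on the alternative (larger tau) favors the null further.
bf_narrow = bf01(z, 100, tau=1.0)
bf_diffuse = bf01(z, 100, tau=10.0)
print(f"n = 100: BF01 = {bf_narrow:.2f} (tau = 1) vs {bf_diffuse:.2f} (tau = 10)")
```

With z held at 1.96, the p-value stays near 0.05 while BF01 moves from below 1 at n = 10 to well above 10 at n = 10,000. Widening the prior on the alternative pushes it the same way, which is the boost Bem describes.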

![• tells us: an α-significant difference indicates less of a discrepancy from the null if it results from a larger (n1) rather than a smaller (n2) sample size (n1 > n2)
• What’s more indicative of a large effect (fire), a fire alarm that goes off with burnt toast or one that doesn’t go off unless the house is fully ablaze?
• [The larger sample size is like the one that goes off with burnt toast]
50](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-50-320.jpg)
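The fire-alarm point can be put in numbers. This is a minimal sketch assuming a one-sided z-test of μ ≤ 0 with known σ = 1 and α = 0.025. The test setup, the sample sizes, and the function names are assumptions for illustration and are not taken from the slides. It computes the observed mean that just reaches significance at each sample size, together with an upper confidence bound on μ for that result.

```python
from math import sqrt
from statistics import NormalDist

def just_significant_mean(n, sigma=1.0, alpha=0.025):
    """Smallest observed mean that is alpha-significant in a one-sided z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    return z_alpha * sigma / sqrt(n)

def upper_bound(x_bar, n, sigma=1.0, alpha=0.025):
    """One-sided (1 - alpha) upper confidence bound for mu given mean x_bar."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    return x_bar + z_alpha * sigma / sqrt(n)

results = {}
for n in (100, 10_000):
    x_bar = just_significant_mean(n)
    results[n] = (x_bar, upper_bound(x_bar, n))
    print(f"n = {n:>6}: just-significant mean = {x_bar:.4f}, "
          f"upper bound on mu = {results[n][1]:.4f}")
```

At n = 100 the alarm needs an observed mean of about 0.196 to go off, while at n = 10,000 it goes off at about 0.0196. The same α-significant verdict is therefore compatible only with much smaller discrepancies from the null when the sample is larger.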

![Some Bayesians Care About Model Testing
• “[C]rucial parts of Bayesian data analysis, such as model checking, can be understood as ‘error probes’ in Mayo’s sense” “which might be seen as using modern statistics to implement the Popperian criteria of severe tests.” (Andrew Gelman and Cosma Shalizi 2013, 10).
54](https://image.slidesharecdn.com/june14ucld-180615132318/85/Replication-Crises-and-the-Statistics-Wars-Hidden-Controversies-54-320.jpg)