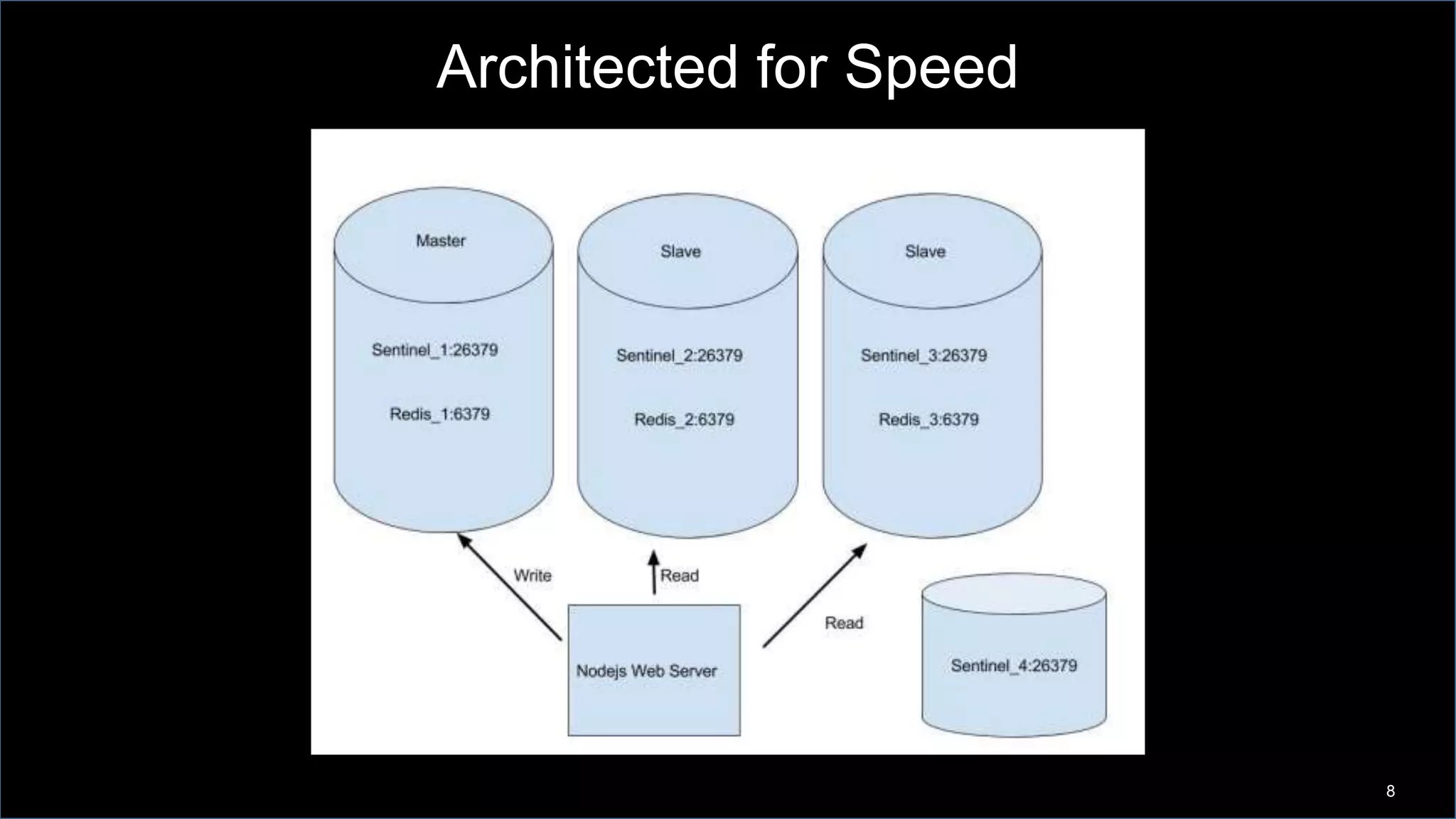

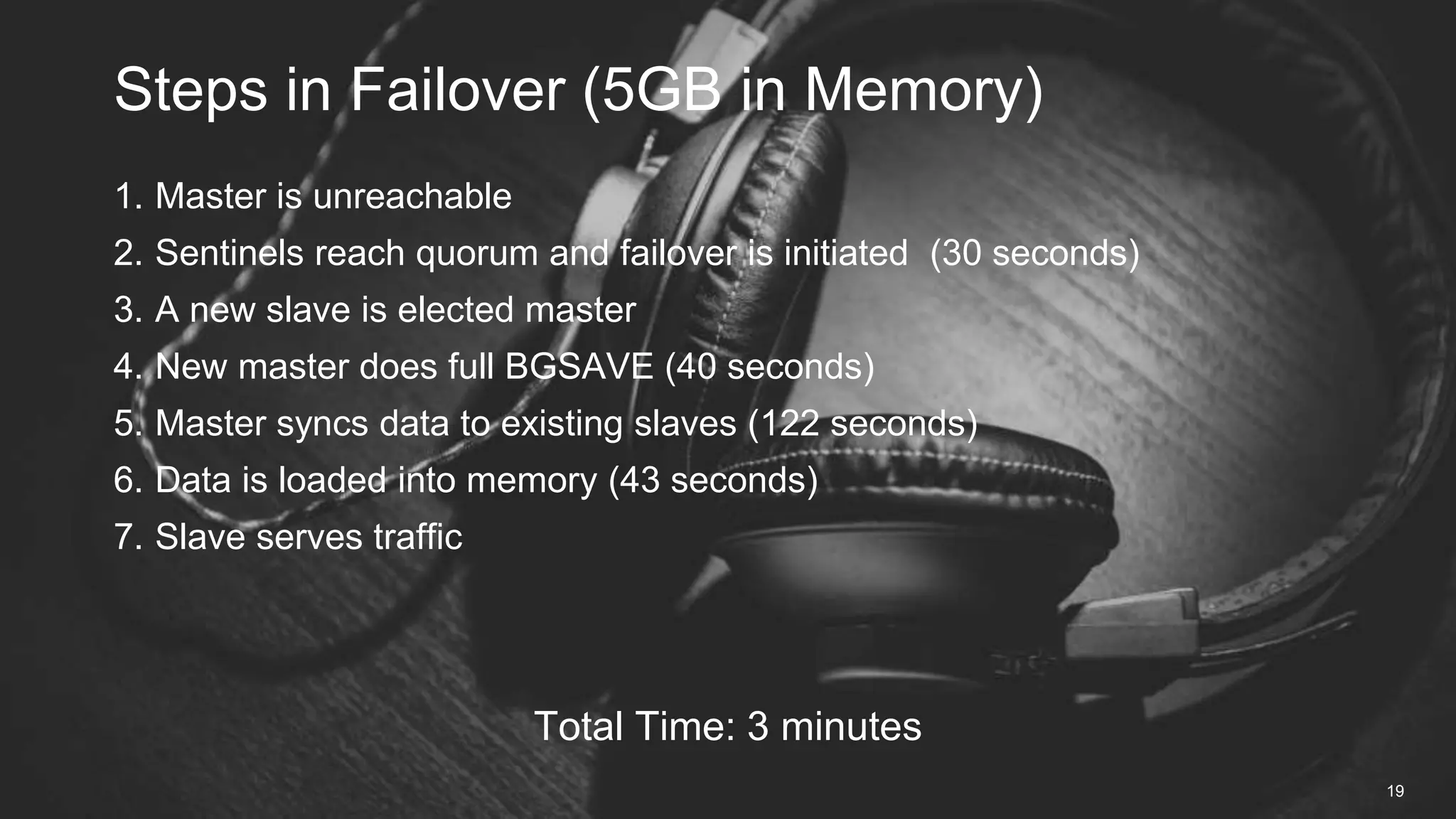

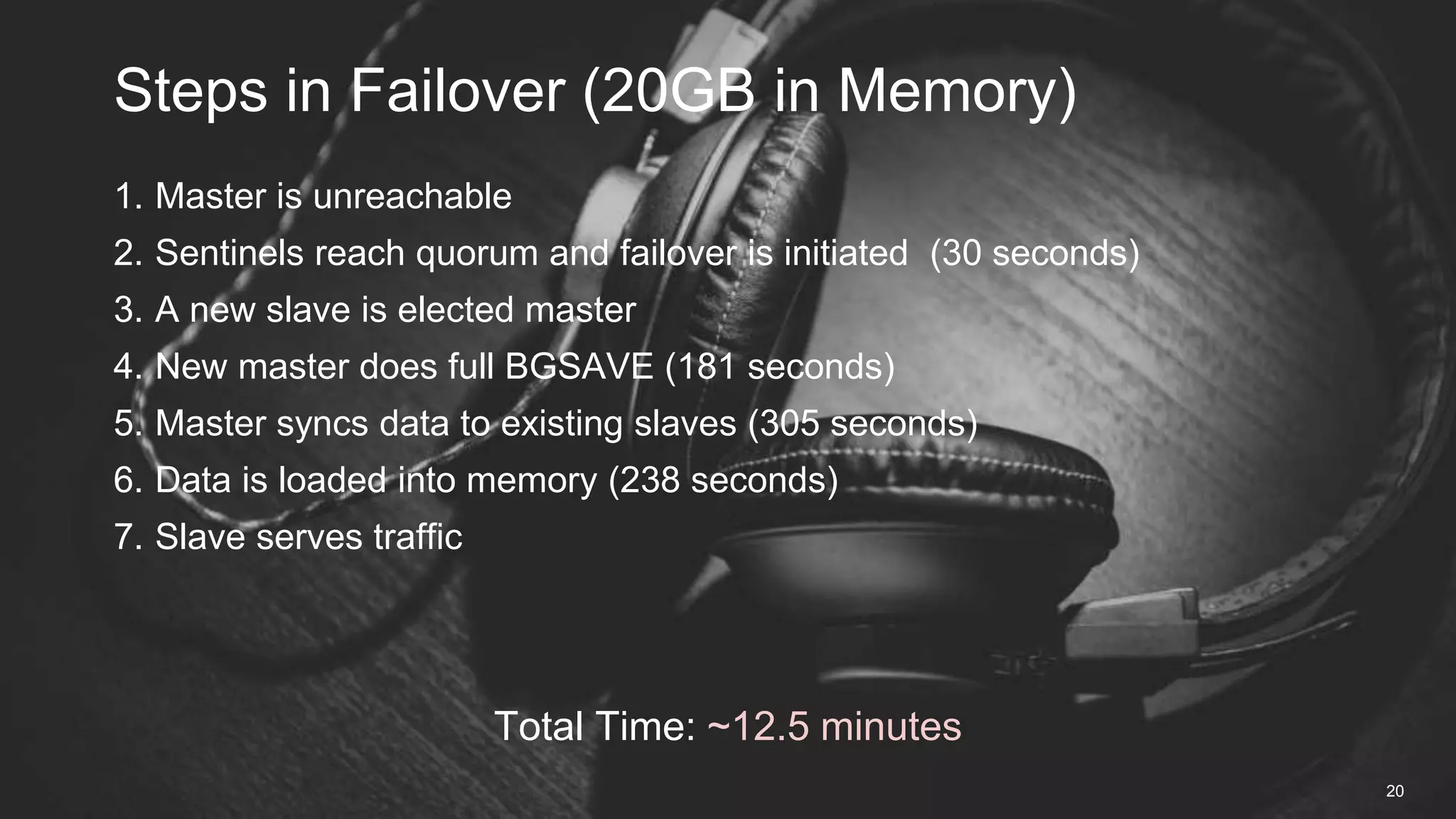

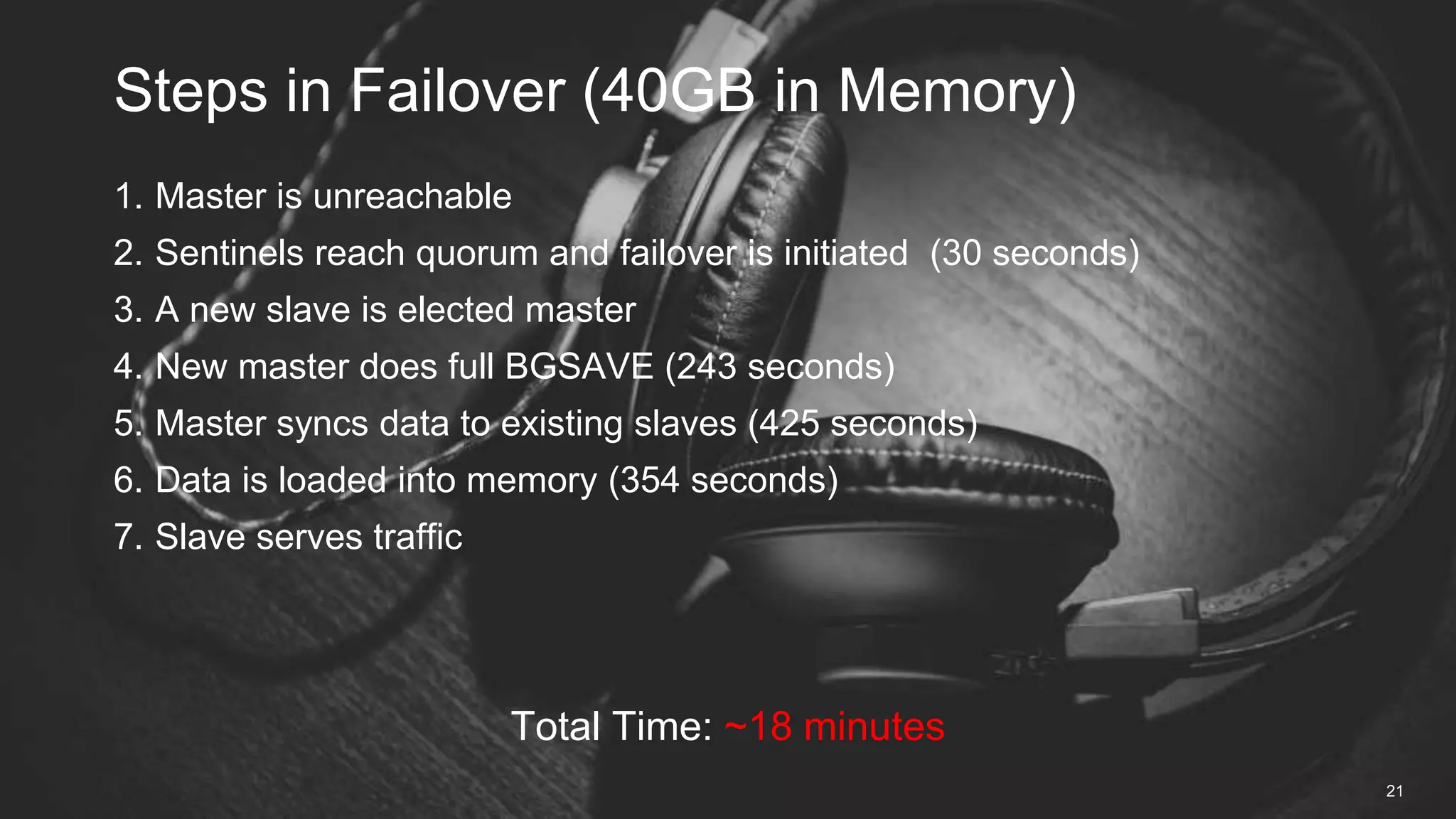

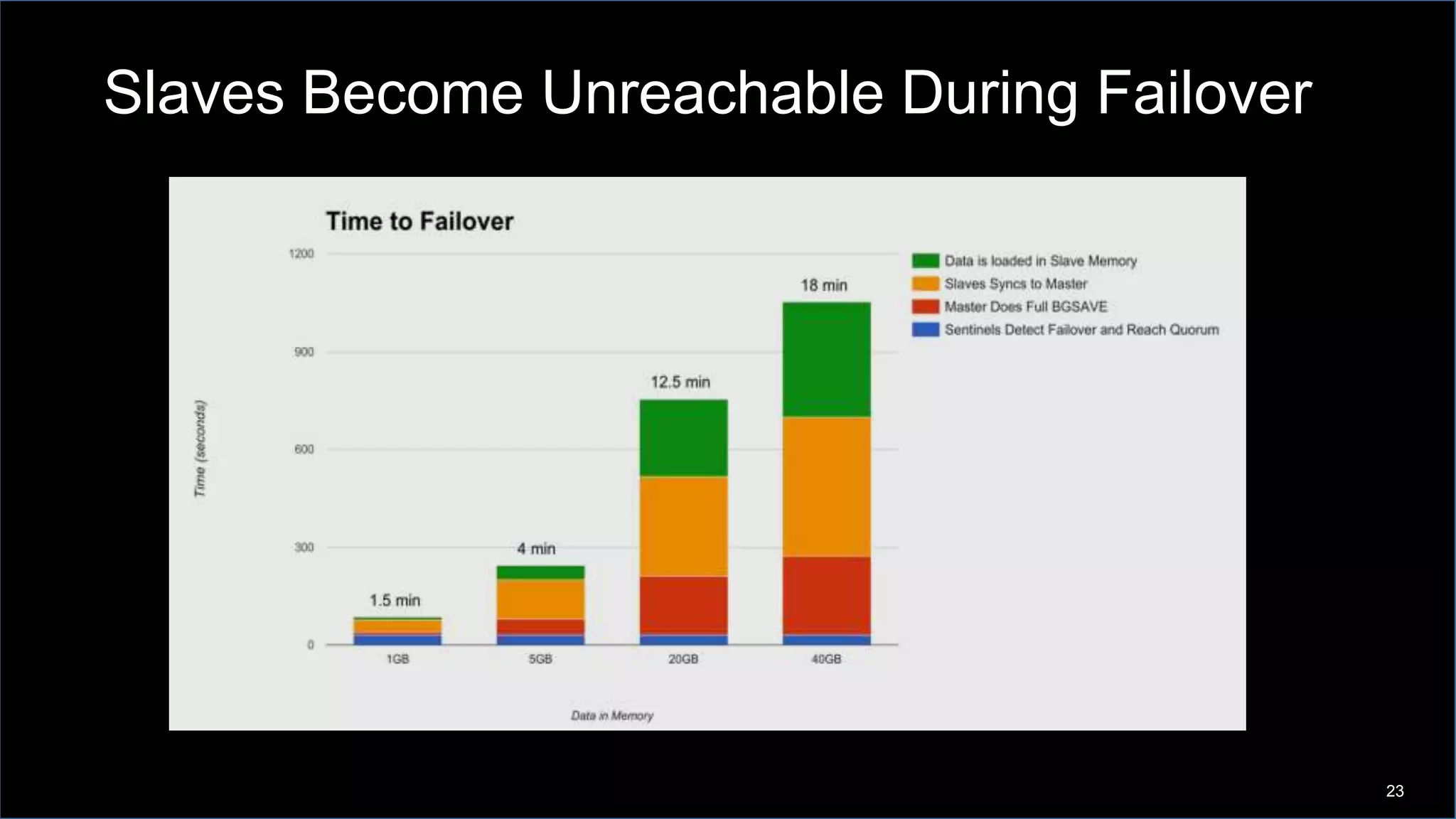

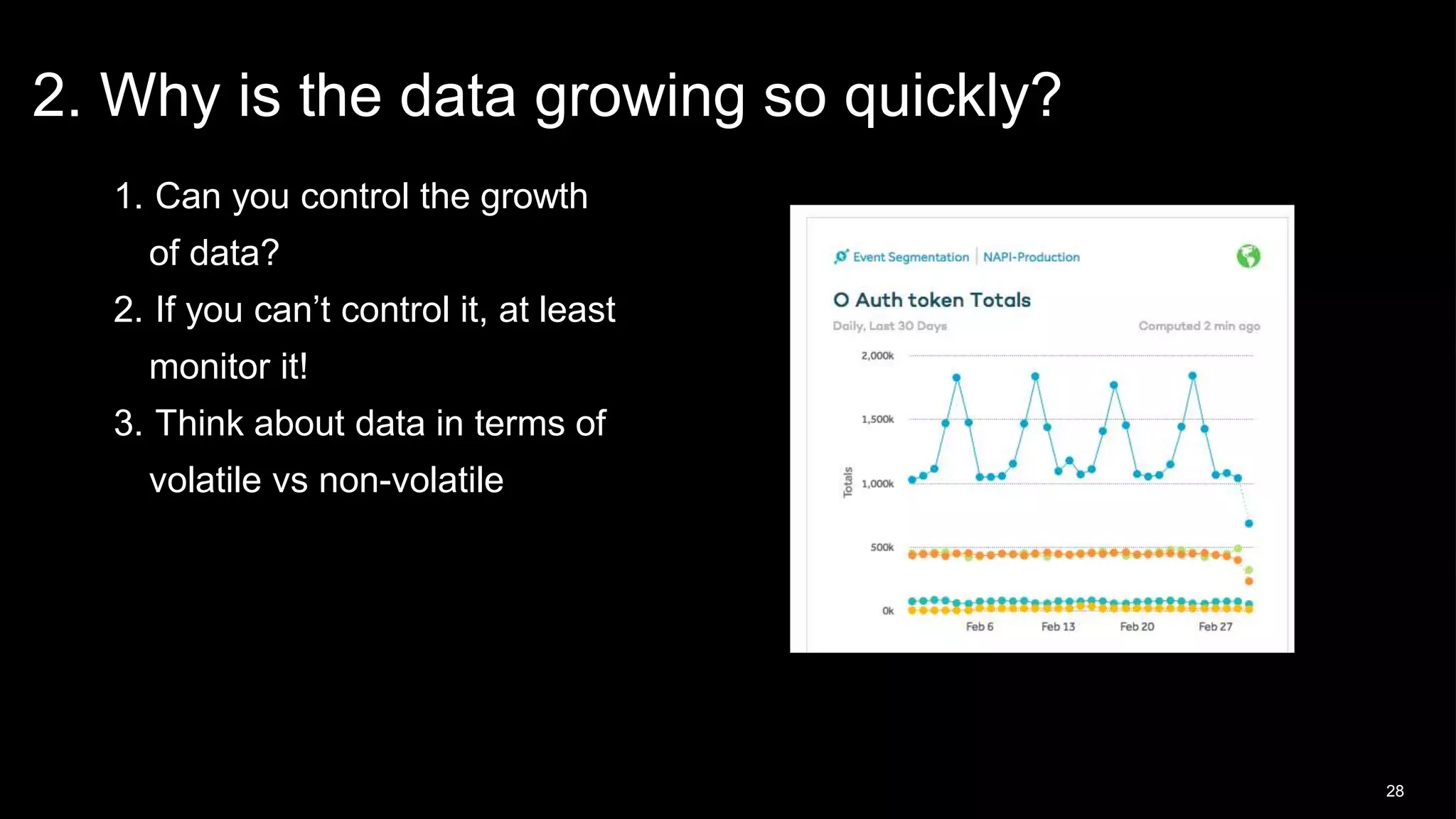

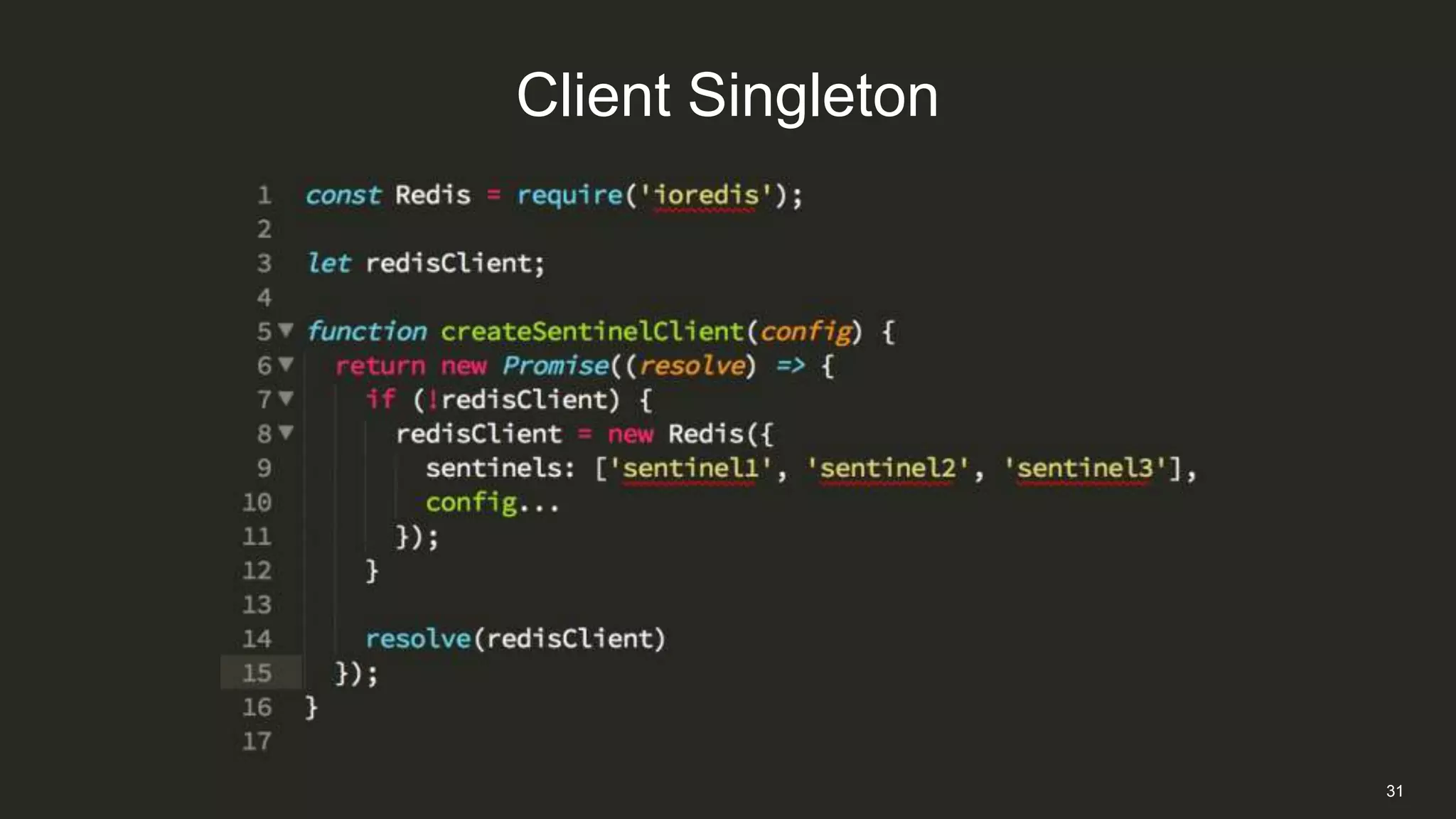

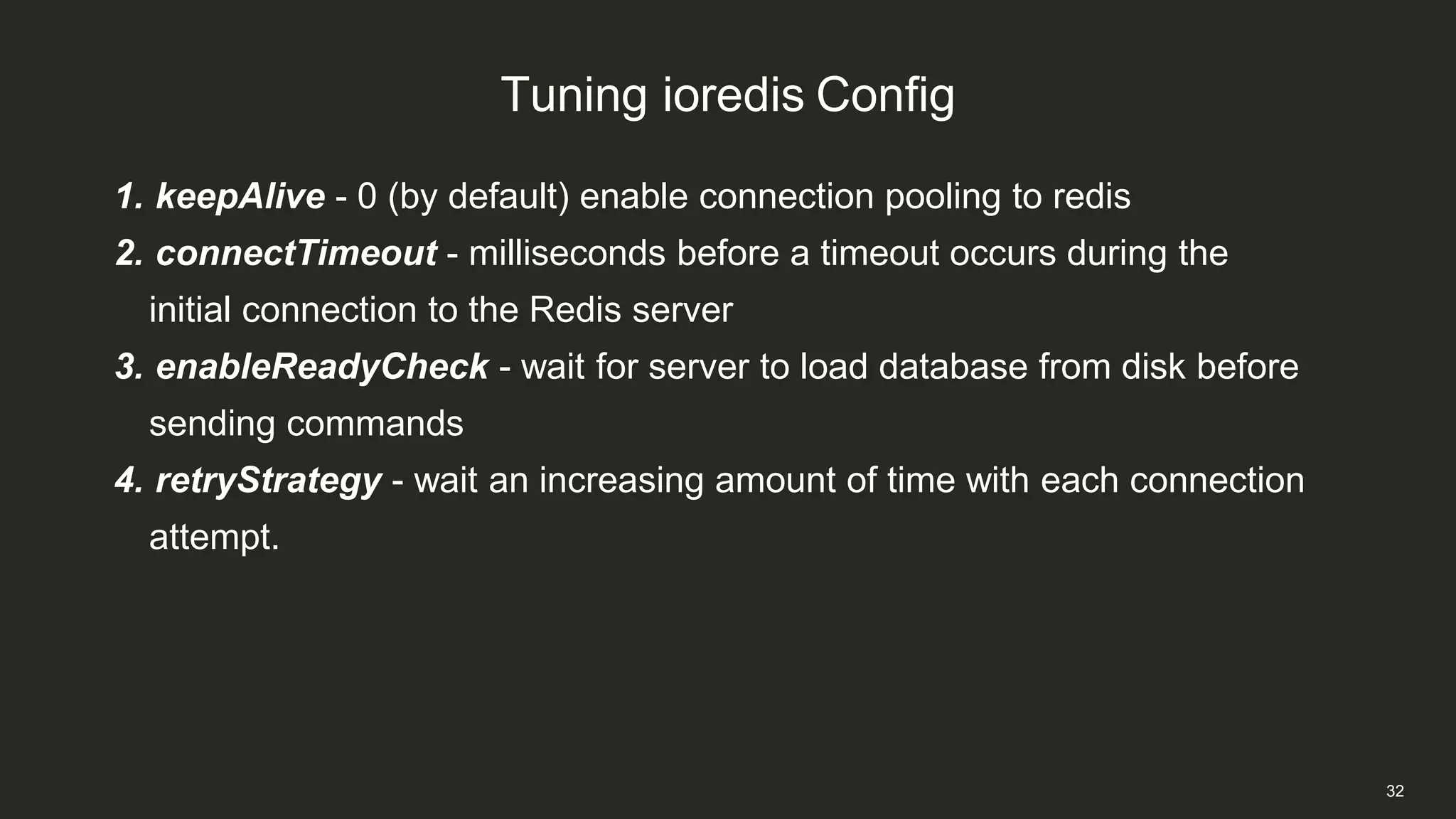

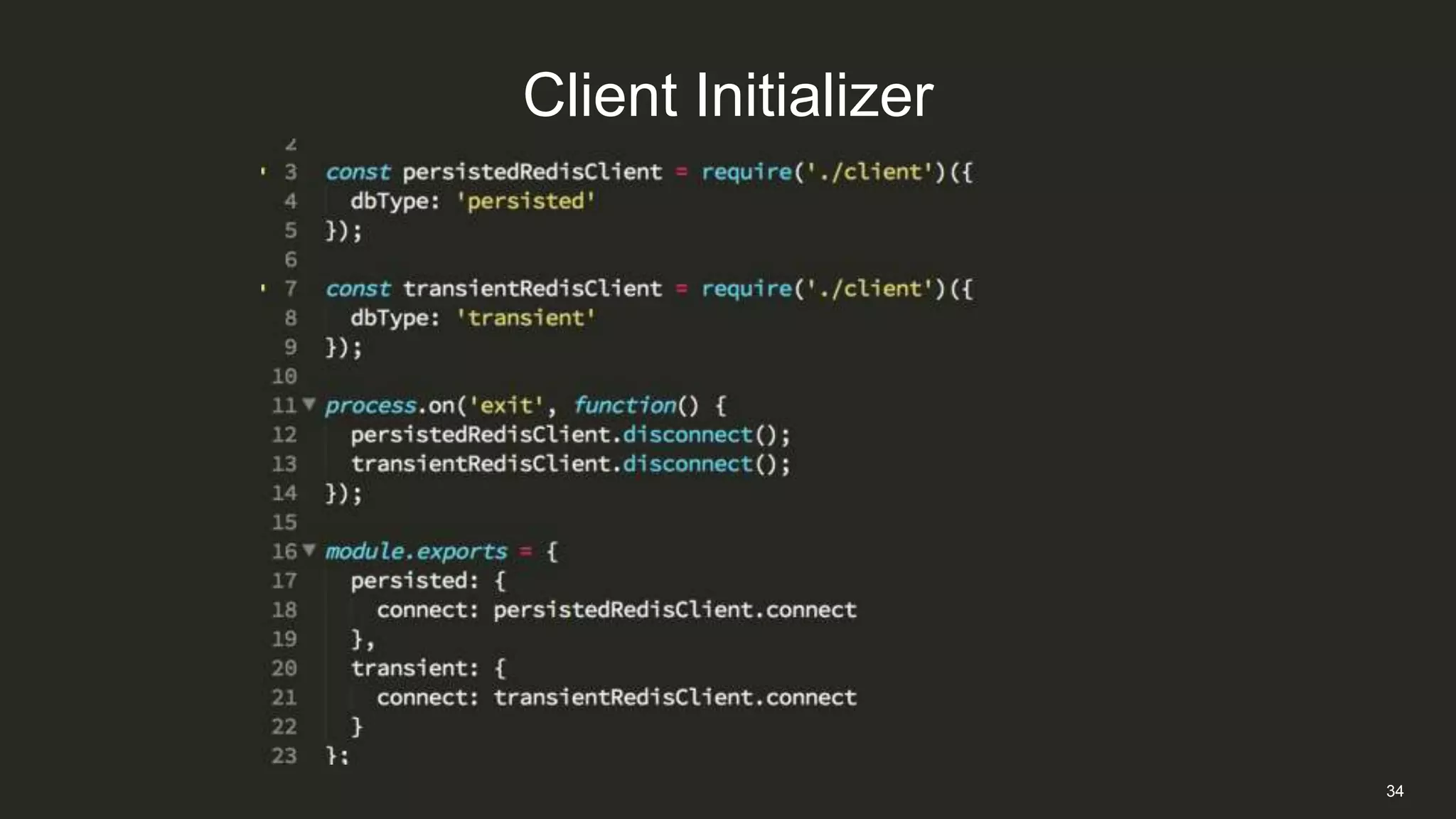

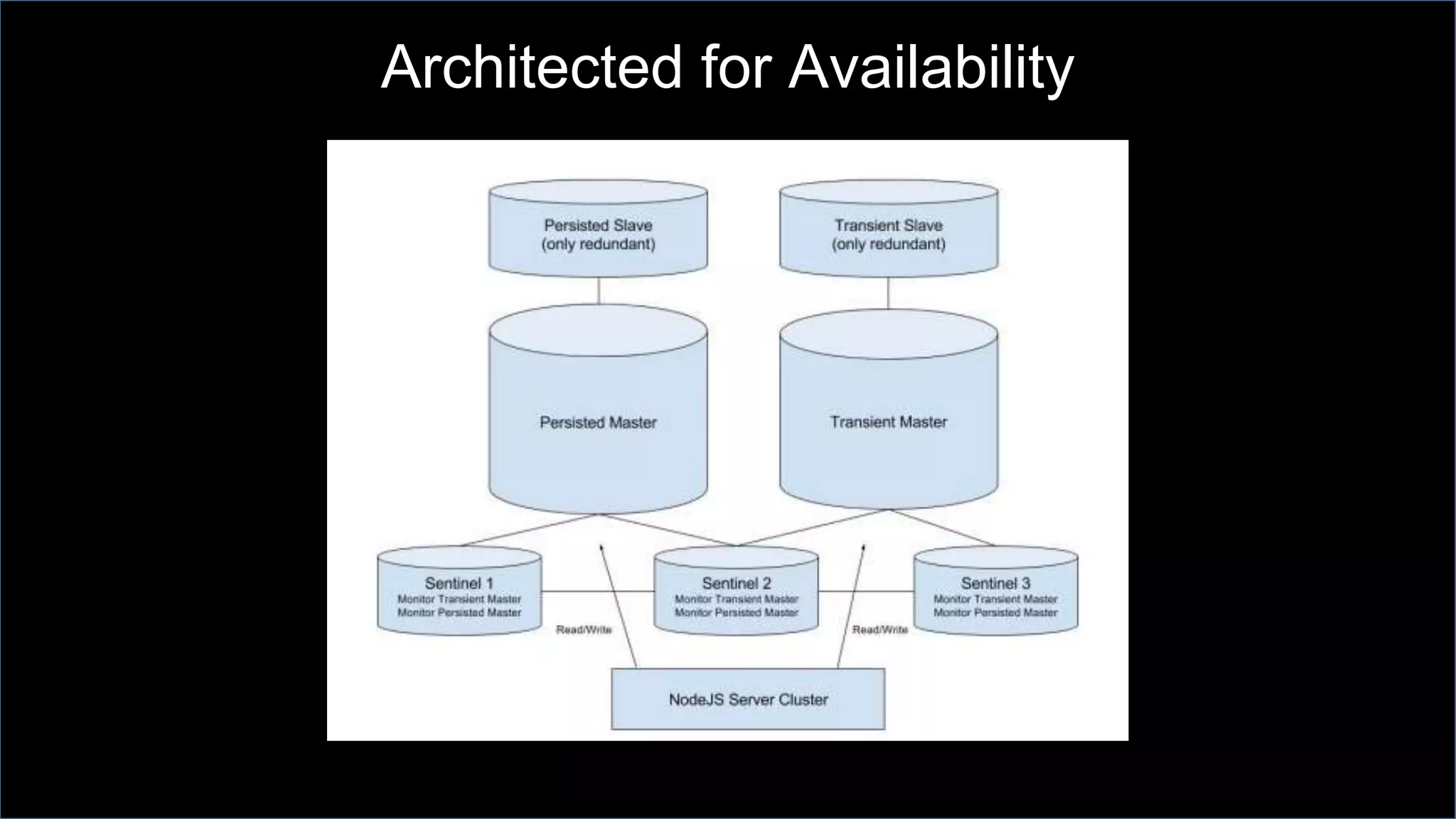

This document discusses Napster's use of Redis for caching and the problems they encountered with Redis failover at scale. As Napster's Redis data grew to tens of gigabytes, failover times increased to over 15 minutes, disrupting their API services. Napster addressed this by implementing connection pooling with ioredis to better handle connections during failovers, configuring Redis for availability rather than consistency, and architecting their systems to gracefully handle temporary Redis outages.
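The summary does not show Napster's code, so the following is only a minimal sketch of one common way to make a service tolerate a temporary Redis outage: a cache-aside read that treats any cache error or slow response as a cache miss and falls back to the source of truth. Every name here (`CacheClient`, `withTimeout`, `readThrough`, the 50 ms default timeout) is hypothetical. In a real deployment `CacheClient` might wrap an ioredis instance. ioredis's `enableOfflineQueue` and `maxRetriesPerRequest` options govern whether commands queue up or fail while the client is disconnected, and without a bound like the timeout below, queued reads could stall for the length of a failover.

```typescript
// Hypothetical minimal cache interface; a production version might wrap ioredis.
interface CacheClient {
  get(key: string): Promise<string | null>;
  set(key: string, value: string): Promise<void>;
}

// Reject if `p` does not settle within `ms` milliseconds.
function withTimeout<T>(p: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`cache timeout after ${ms}ms`)), ms);
  });
  return Promise.race([p, timeout]).finally(() => clearTimeout(timer));
}

// Cache-aside read that treats any cache failure as a miss, so a Redis
// failover raises latency and load on the source instead of failing requests.
async function readThrough(
  cache: CacheClient,
  key: string,
  loadFromSource: () => Promise<string>,
  timeoutMs = 50,
): Promise<string> {
  try {
    const cached = await withTimeout(cache.get(key), timeoutMs);
    if (cached !== null) return cached;
  } catch {
    // Cache unavailable or too slow (e.g. mid-failover): use the source of truth.
  }
  const value = await loadFromSource();
  // Best-effort write-back; failures are swallowed so they never reach the caller.
  withTimeout(cache.set(key, value), timeoutMs).catch(() => {});
  return value;
}
```

The write-back is deliberately fire-and-forget: during an outage the goal is to keep serving correct (if slower) responses rather than to keep the cache warm. The timeout would need tuning against normal Redis latency, and the fallback path shifts load onto the backing store, which must be able to absorb it for the duration of a failover.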