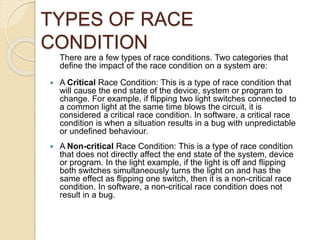

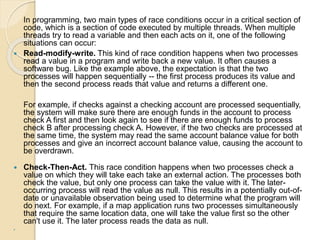

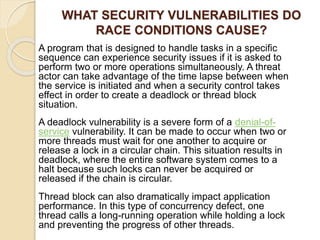

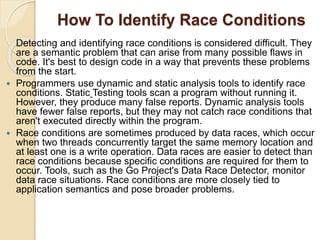

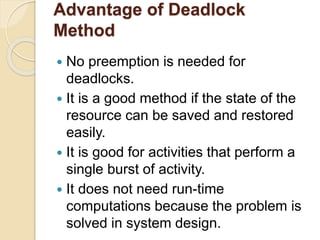

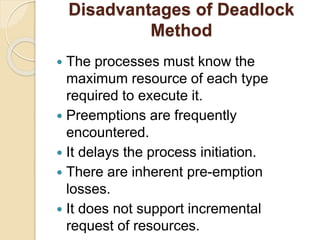

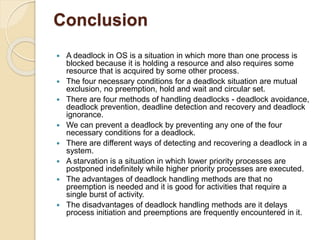

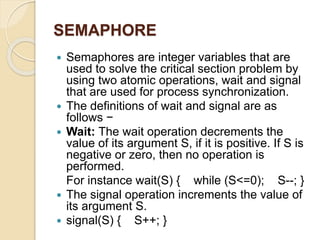

The document discusses race conditions, deadlocks, and semaphores in computing. It defines a race condition as an undesirable situation in which multiple processes access a shared resource concurrently and the result can be incorrect, then describes the types of race conditions and techniques for preventing them. It also covers deadlocks, the conditions necessary for them to occur, and methods for handling them, and it describes semaphores, which are used for process synchronization.
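As a rough illustration of the synchronization idea summarized above, the following minimal sketch uses a binary semaphore to protect a shared counter. It is not taken from the slides: the names `run_counter`, `worker`, and `guard` are hypothetical, and it uses threads within one process (Python's standard `threading` module) as a stand-in for the concurrent processes the document describes.

```python
import threading


def run_counter(num_threads: int = 4, increments: int = 10_000) -> int:
    """Increment a shared counter from several threads, guarded by a semaphore."""
    counter = 0
    # A semaphore initialised to 1 admits one thread at a time (binary semaphore).
    guard = threading.Semaphore(1)

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            # Acquire on entry, release on exit: the read-modify-write below
            # is the critical section and cannot interleave with other threads.
            with guard:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(run_counter())
```

With the semaphore in place, the returned total should always equal `num_threads * increments`. Without the `with guard:` line, the increment is an unprotected read-modify-write on shared state, which is the kind of concurrent access the summary describes as a race condition; whether updates are actually lost in a given run depends on the interpreter and on timing.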