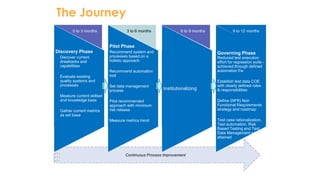

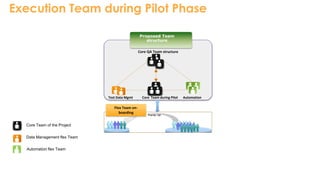

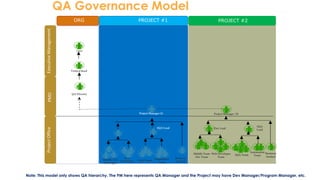

The document recommends how to set up a quality assurance organization, including establishing standard processes, metrics, automation frameworks, and governance. It outlines the challenges of the current model and the advantages of the proposed one, such as streamlined processes, a governance model, fewer defects, and defined metrics. The proposed model proceeds through three phases (discovery, piloting the recommendations, and institutionalizing the changes) and includes an iterative testing methodology, a team structure, and a governance model.