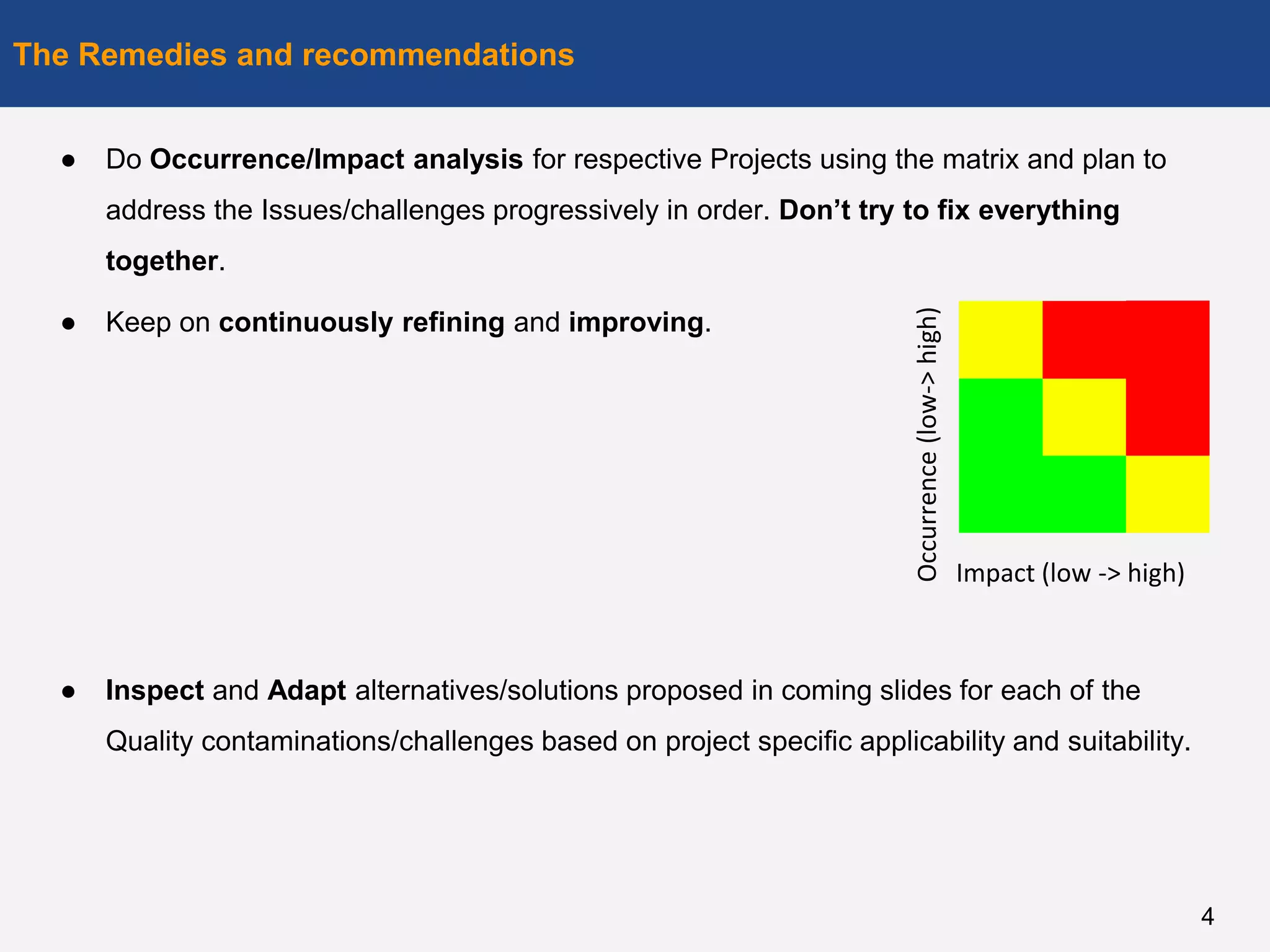

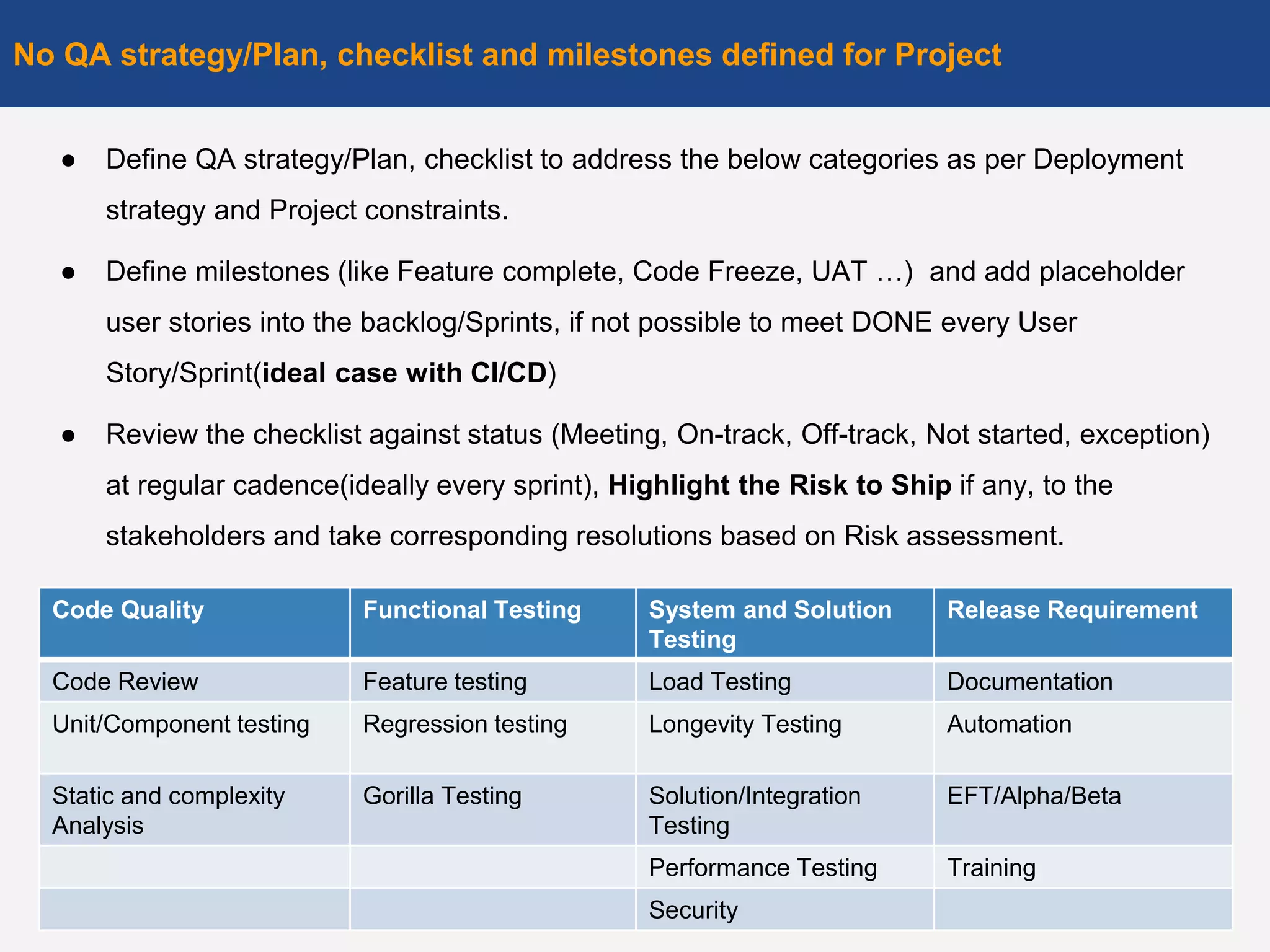

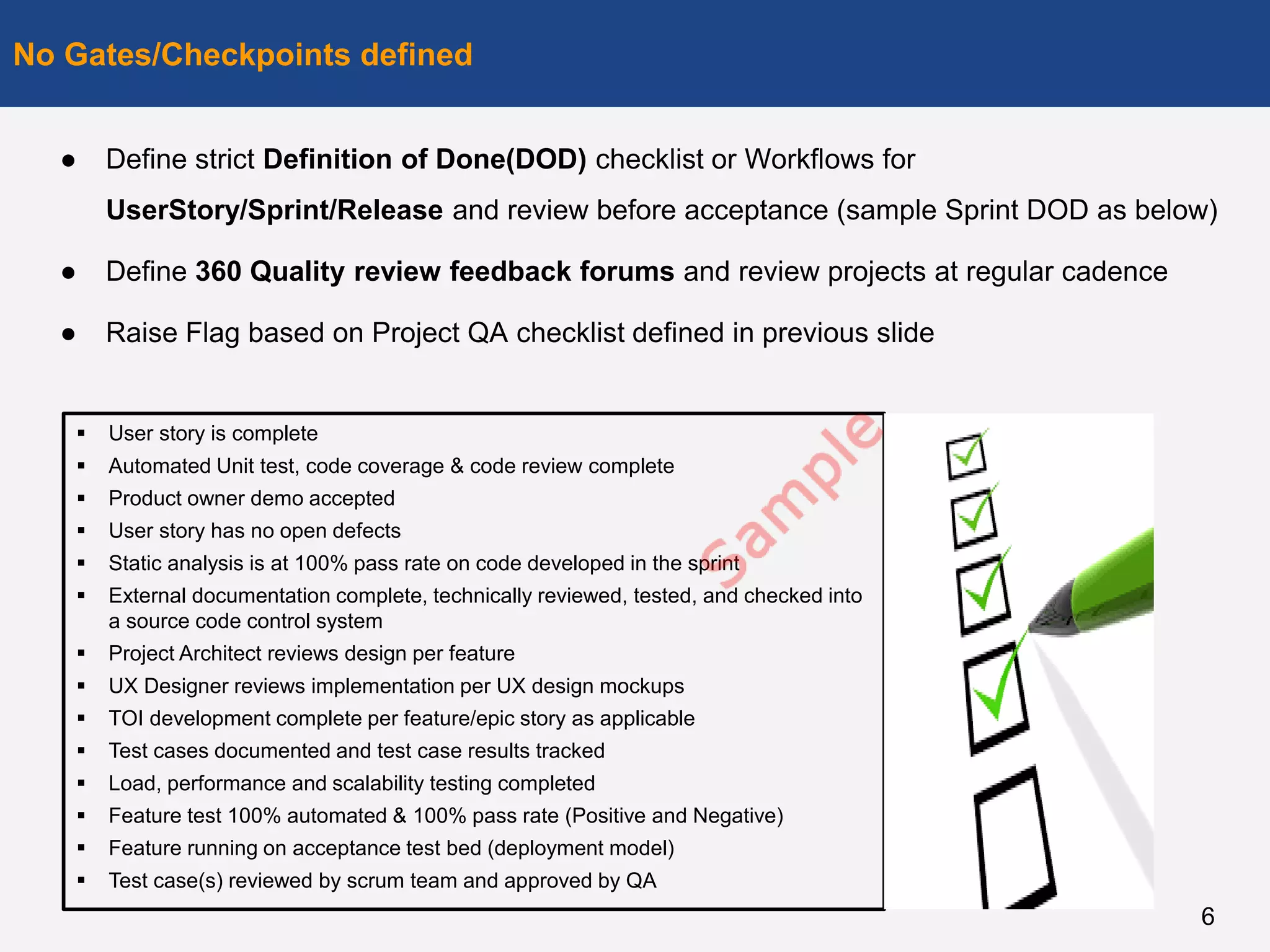

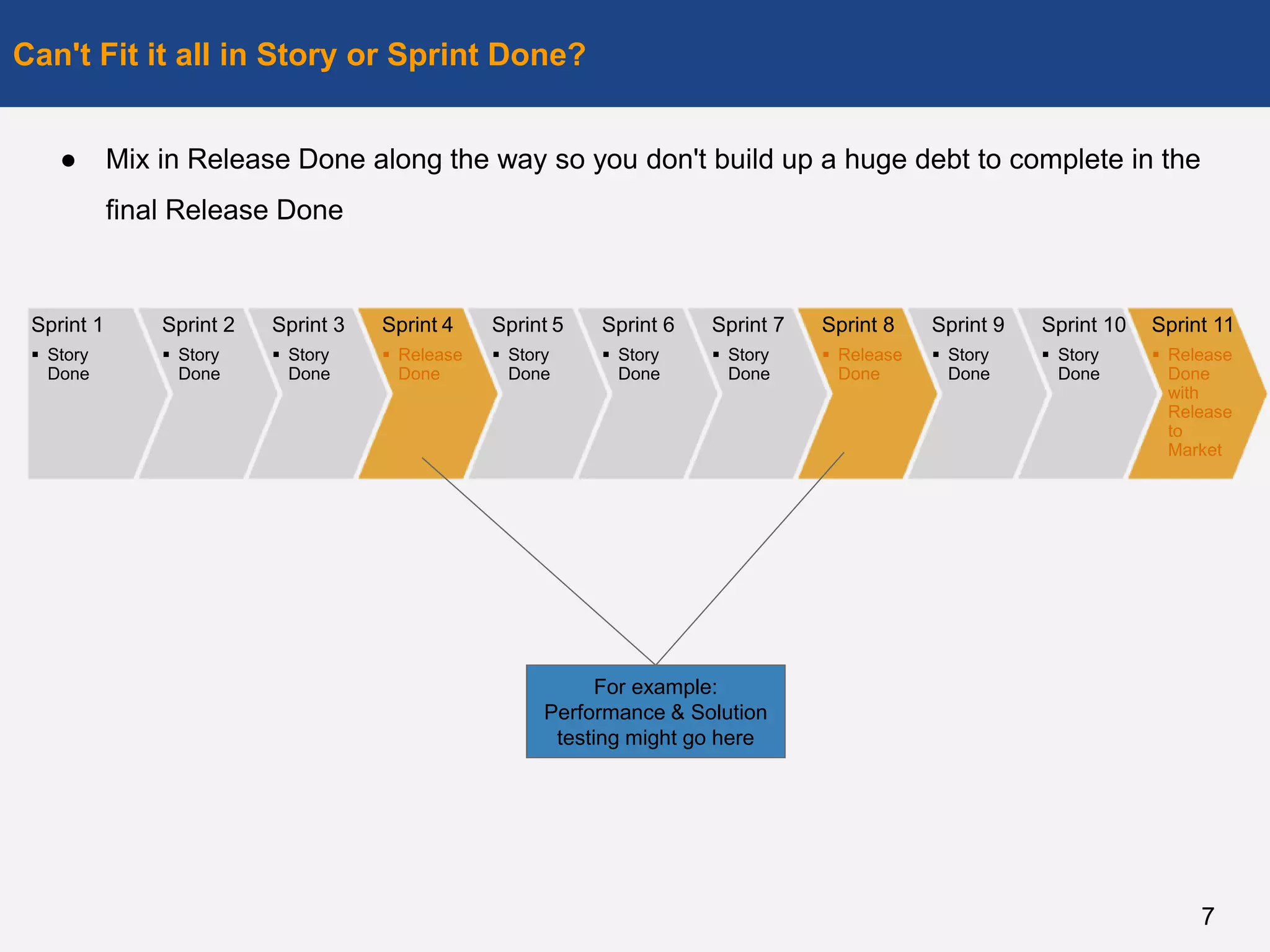

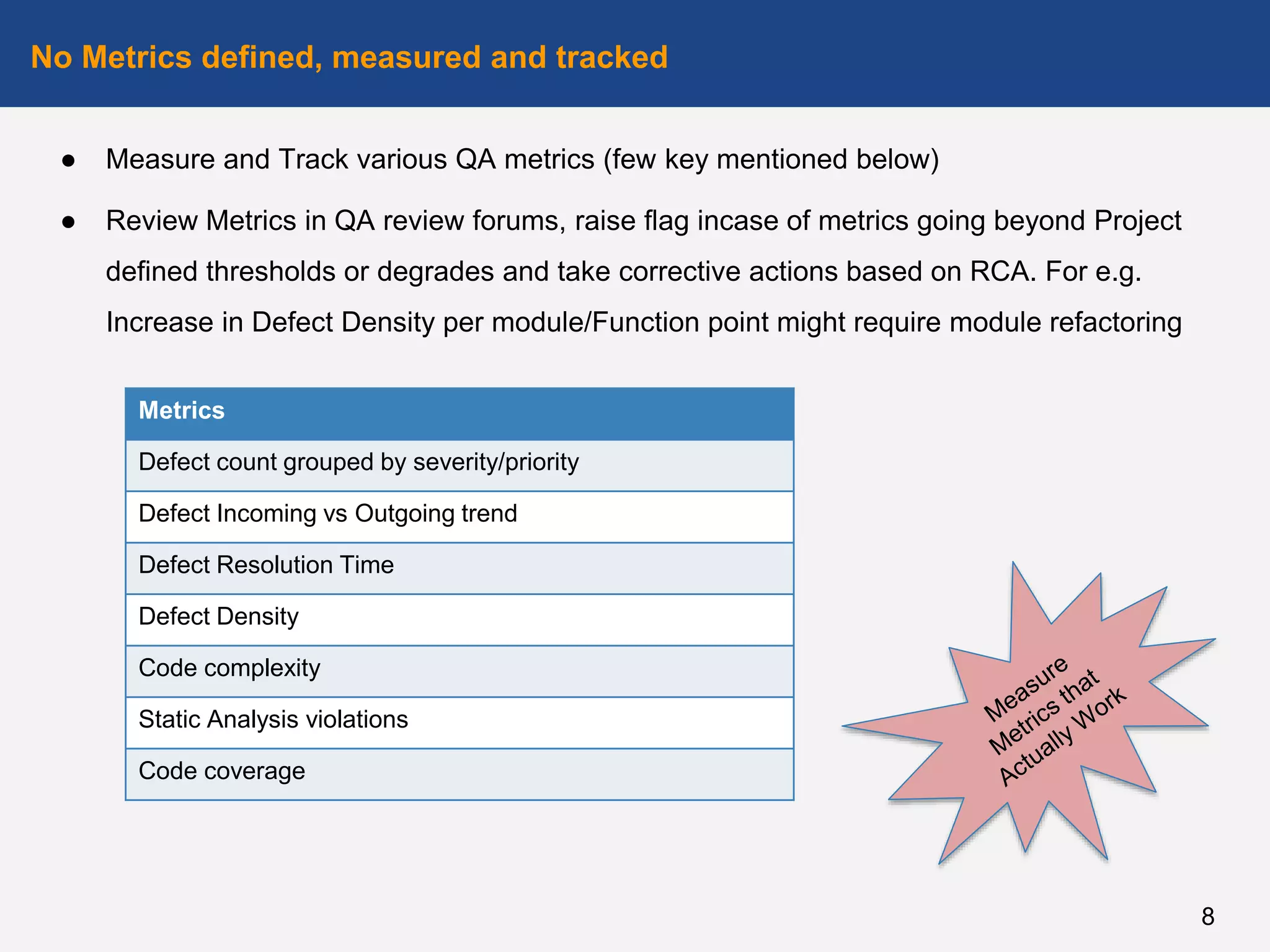

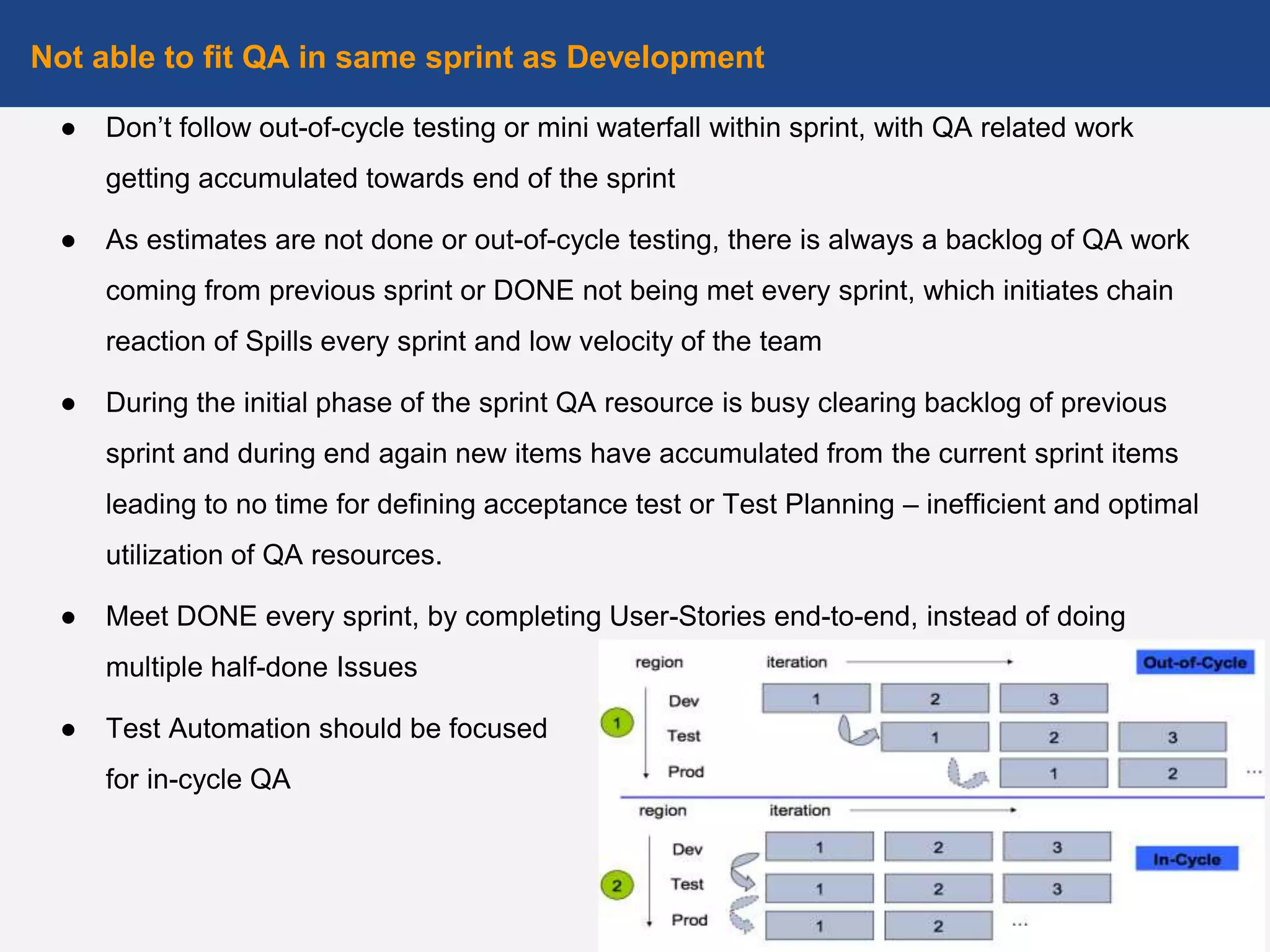

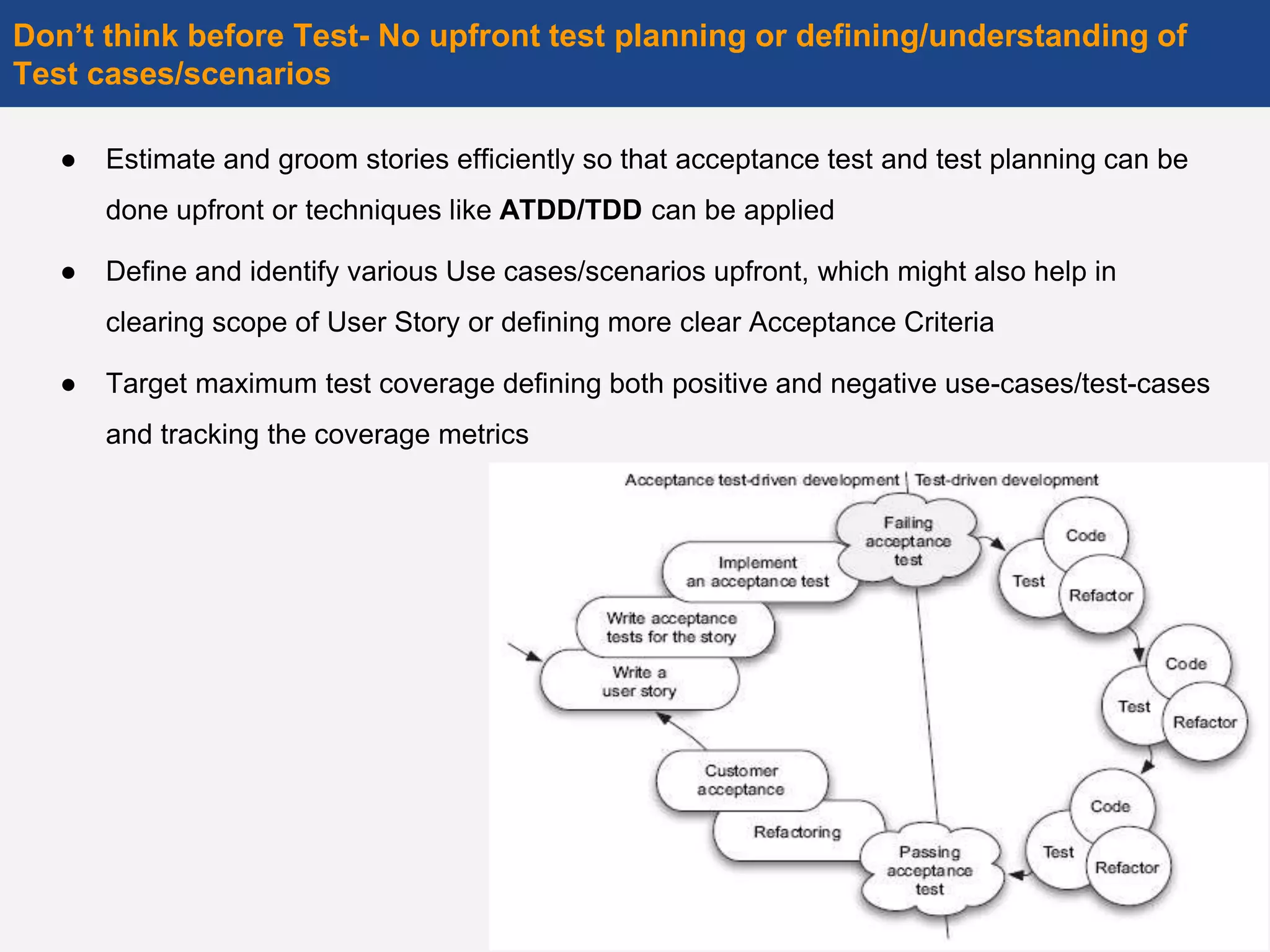

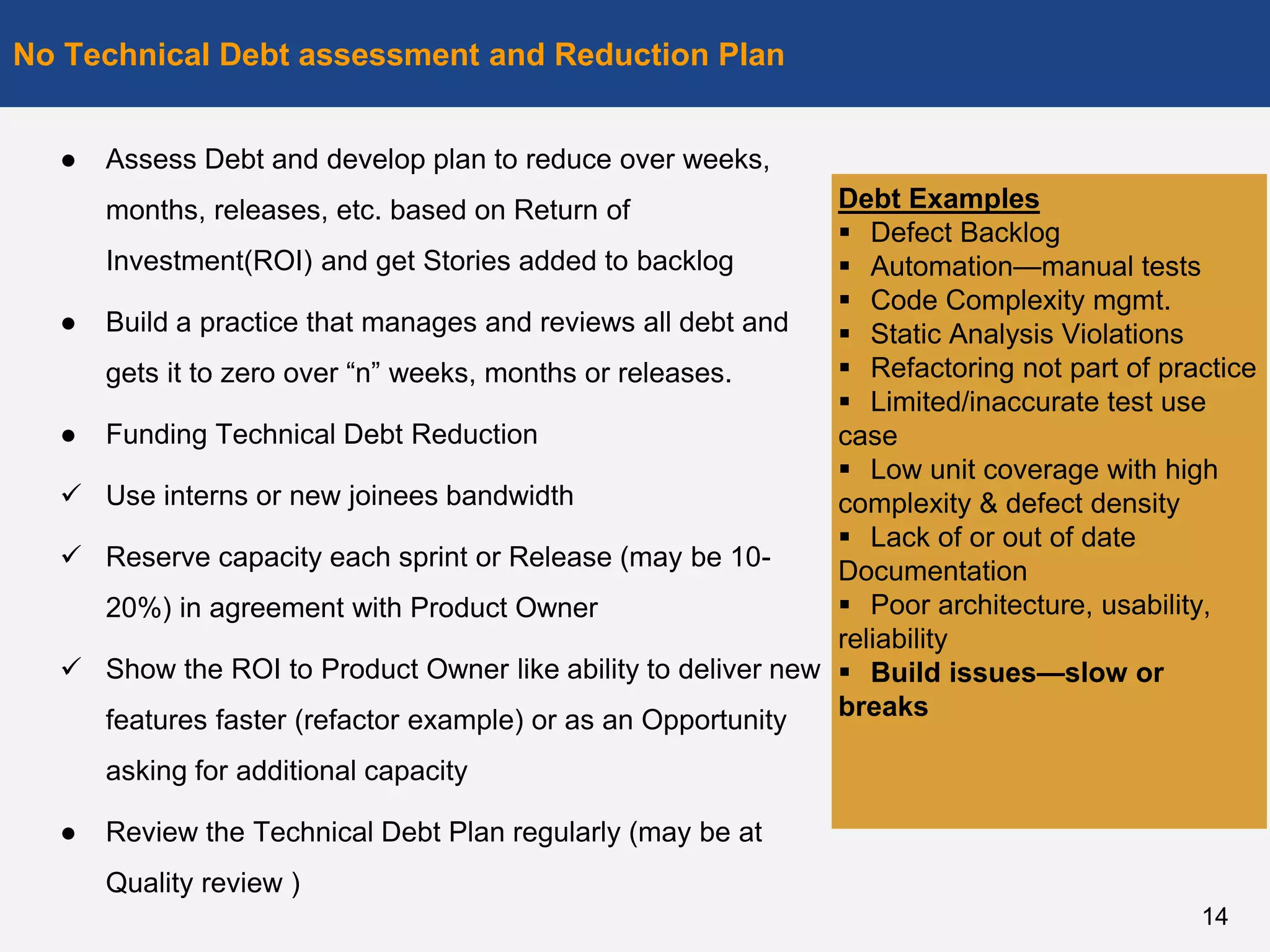

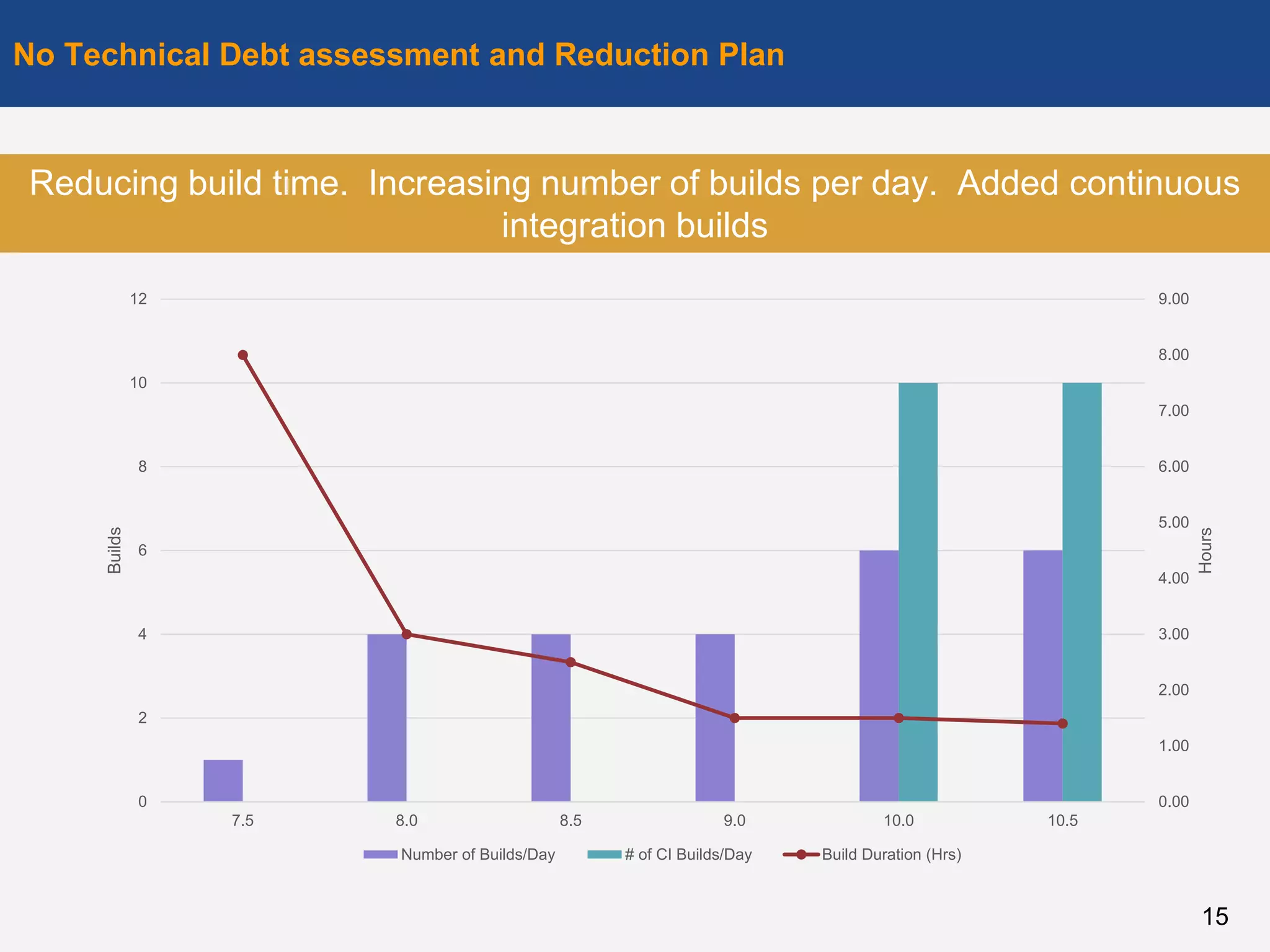

The document discusses quality contamination issues in Agile Scrum teams, highlighting three gaps: missing QA strategies, missing guidelines for defect management, and no metrics tracking. It proposes remedies to improve QA practices, such as defining comprehensive QA plans, conducting regular reviews, and integrating QA efforts within development teams. Key suggestions include thorough test planning, involving QA in story development, and continuous improvement to minimize technical debt.