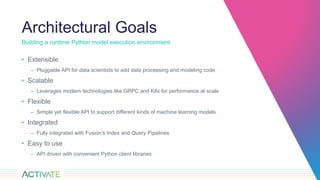

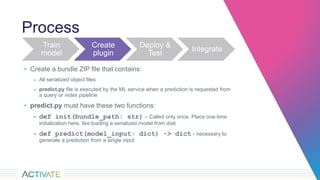

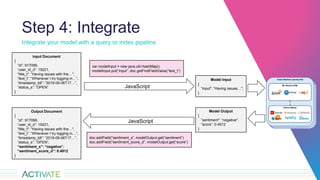

The document outlines the introduction of native Python model serving in Fusion 5.0, enabling data scientists to integrate their custom-trained ML models with Fusion's index and query pipelines. It describes a microservices architecture running in Kubernetes that supports real-time model serving, ease of use through a client library, and provides a detailed process for deploying and testing models. Additionally, it emphasizes the flexibility, extensibility, and scalability of the architecture, allowing for effective data processing and machine learning model execution.