This document discusses stacked convolutional neural networks as a model for imagined speech in EEG data. It outlines several challenges in studying imagined speech, including the difficulty of obtaining clean EEG data without external stimuli interfering, and the brain's complex and diffuse representation of imagination. The document argues that progress requires a robust language model that accounts for the brain's organizational structure, and that understanding imagined speech could have applications like restoring communication for paralyzed patients.

![Stacked Convolutional Neural Networks as a Language Model of Imagined

Speech in EEG Data

Barak Oshri

Stanford University

boshri@stanford.edu

Manu Chopra

Stanford University

mchopra@stanford.edu

Nishith Khandwala

Stanford University

nishith@stanford.edu

1. Introduction

1.1. Imagined Speech Faculty

Imagined speech refers to thinking in the form of sound,

often in a person’s own spoken language, without moving

any muscles. It happens consciously and subconsciously,

when people use it to imagine their vocalization or sub-

consciously as a form of thinking, whether during reading,

reciting, or silently talking to oneself.

This phenomenon includes an imaginative component and a

vocal component, with the brain calling on parts of the men-

tal circuit responsible for speech production, falling short of

producing the signals for moving the muscles of the vocal

cords. Instead, the language signals are embedded and pro-

cessed in the buffer that leads into the imaginative space of

the mind.

Despite a limited understanding of what this mental cir-

cuit looks like, we can reason necessary and sufficient con-

ditions about what the silent language formation pipeline

must entail, for example that ”sound representation deeply

informs... linguistic expressions at a much higher level than

previously thought,” indicating that speech production must

be tightly subsumed within language formation, instead of

the circuits running in parallel with some auxiliary connec-

tions [3].

1.2. Brain Representations of

Imagined Speech

What makes imagined speech research promising and opti-

mistic is that the rich linguistic features present in language

is what the brain anchors to as it develops its language fa-

cilities. This means that the structures present in the brain

for speech formation must be ordered at least in part in the

same way that language is structured abstractly. Hence to

succeed in imagined speech, we need only learn how these

speech patterns interface with imagination in a determinis-

tic way. Once we do, was can apply many of the same tech-

niques used in traditional speech recognition such as hidden

markov models, language tree models, and deep learning

approaches.

So whether this is possible is not just a question of how lan-

guage is formed in the brain, but how it is represented in

the high-level faculties such as imagination and conscious-

ness. Even if language models are deterministically present

in language formation, we still do not have any understand-

ing of how they are manifested in imaginative capacities;

imagination could just as well noise the lucid language rep-

resentations evoked from fundamental language structures

such as the Broca’s and Wernicke’s areas.

And what understanding we have of how imagination works

merely points to how complex and unconfined it is, being

unlocalized to any specific structure in the brain and be-

ing spread out in a highly diffuse manner. Just alone the

fact that mental states severely affect its functionality via

changing neural oscillation frequencies depending on levels

of waking attention shows how the output from electroen-

cephalography (EEG) appears indeterminate and chaotic.

That is, assuming such a ”layered understanding” of the

brain, we don’t know how close imagination is from the

act of producing the language.

Therefore, imagined speech research is a neuroscientific en-

deavor as much as it is a computational one. Any successes

and failures in performance are indications on how well the

model fits to the data, which allows us to then make extrap-

olating claims about how the brain is organized.

1.3. The Trouble with Data

Attaining well-formed data for imagined speech research is

the largest challenge in this field. Most studies have used

EEG imaging for its high temporal resolution. The trouble

with this data is that each channel only captures an ”av-

erage” electric signal across an axis relative to some root

(often the top of the spin at the back of the head).

Experimentation with imagined speech is exceedingly chal-

lenging. The human brain is incredibly sensitive to external

stimuli, anything of which could confound the controlled

1](https://image.slidesharecdn.com/6b0b738c-6dbe-4b01-ba71-41f65be4cf7a-151214091001/75/Oshri-Khandwala-Chopra199-1-2048.jpg)

![lab environment of the experiment. It is difficult to make

a setup that determines exactly when a word is thought. If

a pitch is used to cue the subject, then the brain response

to the pitch affects the immediate signal. This is a serious

danger to the integrity of the experiment as a classifier could

inadvertently classify the variable responses to the different

level pitches.

And even when data is well-formed and meets the task de-

scriptions, there is no guarantee that the resolution of the

technology has captured the relevant information. EEG

has high noise-to-signal ratio, and noise reduction methods

are effective but not complete. Technological advances in

brain-imaging in the future will increasingly help research

in this field.

1.4. The Brain as a Personal Computer

To summarize the challenge of this task in an analogy, con-

sider a modern personal computer. Such a PC computes a

myriad of tasks at any given moment: resting, active, back-

ground, etc. We would like to identify the current state of

exactly one of these processes. We have faint ideas of where

this process appears within the entire system, but we are not

sure what its form is, or how loud or prominent it is in com-

parison with other processes. In fact, it is not a single pro-

cess, but a parallel one, merged, influenced, and blockaded

by all the other processes happening in the computer. Also

consider that our apparatus for measuring this brain, call it

our voltmeter, does not get to measure the state of every

transistor, but only some start and end points.

To find this elusive process, we start with the assumption

that our voltmeter is omnipotent and knows the states of

all transistors. Given the entire state of the computer, we

exploit any understanding we have of the structure of the

process, such as expectations for how some of the wires

should be arranged in the process, allowing us to ”pattern

match” with the data. Without understanding of the struc-

ture of this process, any algorithm could only blindly tra-

verse the data as it doesn’t know what to expect from this

process. Therefore the success of generalizing this process

to new situations depends on the correctness of our exter-

nal ”fitting”. It seems like pattern recognition, even without

hand-engineered features, is doomed to needing some level

of assumption about the organization of the data, or at least

how it could be learnt.

This analogy is important in explaining why we need a ro-

bust language model suitable for the task to be able to com-

prehensively solve imagined speech. Keeping the challenge

of this field in mind, any research done in the present times

will aid efforts in the future when the technology and neuro-

science catch up with the immensity of the task. Therefore,

it is important not to lose aspiration to solve this problem so

that the methodology and foundations are ready and an op-

timistic tone has been set for when science and technology

allow us to converge upon a solution.

1.5. Uses of Imagined Speech

These challenges should be viewed against the many bene-

fits and opportunities opened by commercial, medical, and

research aspects of imagined speech. Thousands of severely

disabled patients are unable to communicate due to paraly-

sis, locked-in syndrome, Lou Gehrigs disease, or other neu-

rological diseases. Restoring communication in these pa-

tients has proven to be a major challenge. Prosthetic de-

vices that are operated by electrical signals measured by

sensors implanted in the brain are being developed in an

effort to solve this problem. Researchers at U.C Berkeley

have worked on developing models to generate speech, in-

cluding arbitrary words and sentences, using brain record-

ings from the human cortex. [4] Success in understand-

ing imagined speech will enable these patients to talk as

thoughts can be directly synthesized into sound. Imagined

speech may finally restore the lost speech functions to thou-

sands, if not millions, of patients.

Furthermore, the capability to comprehend imagined

speech has the clear potential for a variety of other uses,

such as silent communication when visual or oral commu-

nication is undesirable. As fluency and dependence on tech-

nology raises the demands for faster, cleaner, and more pro-

ductive interfaces, the pathways such an accomplishment

would pave in the scope of our communicative abilities

would lead to a revolution in our natural and digital interac-

tion with the world.

Humans have created a world of messages, expressions, and

meanings central to our living individually and in commu-

nities, and this is true in the mind just as much as it is in our

writings and other artistic mediums.

Tapping into the sheer vastness and wealth of color exhib-

ited by the mind is sure to revolutionize the dimensionality

of human expression, its data, and our relationship with the

digital world. The ability to understand imagined speech

will fundamentally change the way we interact with our de-

vices, as digital technology shows trends of connecting with

our bodies and activities increasingly more, from eye-wear

to watches. While such research is controversial in the least,

invasive at worst, these fears should not inhibit attempts at

studying how much information could be mined from the

brain.

Tapping into imagined speech data is the holy grail of hu-

man communication interfacing. There are fewer problems

that reach so closely to human demands and intentions, as

imagined speech happens involuntarily and irrepressibly.

2](https://image.slidesharecdn.com/6b0b738c-6dbe-4b01-ba71-41f65be4cf7a-151214091001/75/Oshri-Khandwala-Chopra199-2-2048.jpg)

![This makes imagined speech an underestimated advance

in our ability to make use of human abilities in our activ-

ities.

1.6. The State of Imagined Speech

Progress in imagined speech has been slow, lacking, and

misguided. Studies in this field make simple and blanketing

studies unfavorable to progressive growth and experimenta-

tion. Most of these studies perform classification tasks of

words, syllables, or phonemes such as classifying between

several thousand samples of syllables ba and ku using an

EEG or classifying between yes or no using an Emotiv. The

first of these can be given recognition as showing that pho-

netically disparate syllables can be strongly differentiated

[2], and the second of these that even given the small num-

ber of channels an Emotiv can differentiate between two

words.

Neither of these studies address the larger challenges posed

by general imagined speech progress or advise for a robust

approach to experimentation with imagined speech by ex-

ploiting domain-specific knowledge. Neither of the studies

can claim to have classified patterns that activate on those

syllables or words when the class space is grown. That is,

each symbol studied is classified dependently on the other

symbols classified which makes anything learnt in the final

model completely restricted to the task-definition.

The only major sponsored effort for imagined speech re-

search was by DARPA in 2008 in a $4 million grant to UC

Irvine to conduct experiments that will allow user-to-user

communication on the battlefield without the use of vocal-

ized speech through neural signals analysis” on the basis

that ”the brain generates word-specific signals prior to send-

ing electrical impulses to the vocal cords.” While promis-

ing, this research has not produced a foundation for general

imagined speech research, instead focusing on military as-

pects and small tasks that benefit battlefield communication

in the short-term.

There is thus a theoretical need to direct this field into an ex-

perimental understanding of how decoding speech from the

brain could work. In this way, research in this field should

not focus on results or data evaluation, but on approaches

and methodologies, especially given that data produced is

heavily dependent on specific context, technology, and sub-

jects.

1.7. Learning Linguistic Features

In a previous study of ours, we showed that multi-class

classification of four syllables is possible and effective with

neural networks to an accuracy of greater than 80%. These

results, however, are not as promising as the performance

indicates. There is no reason to believe that the model is

classifying the syllables for their linguistic features; any

other patterns such as associative memory activations could

be the differentiating factors. So what we repeatedly see

in the examples discussed is that the tasks used to research

imagined speech are inherently ill-posed. Therefore, for any

model to succeed, we need to be able to assure ourselves

that the classifier is classifying for the right reasons, in this

case linguistic features, which are invariable and context-

independent.

We guarantee that the model is learning linguistic features

by teaching it to classify the same linguistic features in dif-

ferent words and expressions. That is, for robustness the

model must not only generalize to new samples, but to new

language situations. By defining a learning model that is

built around the foundations of general language under-

standing, we can at least guarantee that the parameter space

includes solutions that capture the dependencies inherent in

the language so that the differentiating factors include the

constituents of the language.

2. A Language Model using Convolutional

Neural Networks

Having discussed the main foundations of imagined speech,

we now devote the rest of the paper to suggesting the re-

search questions and methods that need to be used to make

progress in imagined speech research, as well as some pre-

liminary results using these approaches.

2.1. Segmentation and Composition

The first of these questions is related to proving that clas-

sification is a valid approach to solving imagined speech.

Before we can assume that language features are static sym-

bols in imagined speech, and thus before we can prove that

classification is suited to this problem, we must verify that

the linguistic features ”add up” in EEG data in such a way

that they appropriately and convincingly capture the pat-

terns of higher level features. That is, the language ob-

served in the imagination is not necessarily correspondent

and tainted by associative caches of different words that

obscure the layered foundation of language models as ob-

served in the imagination.

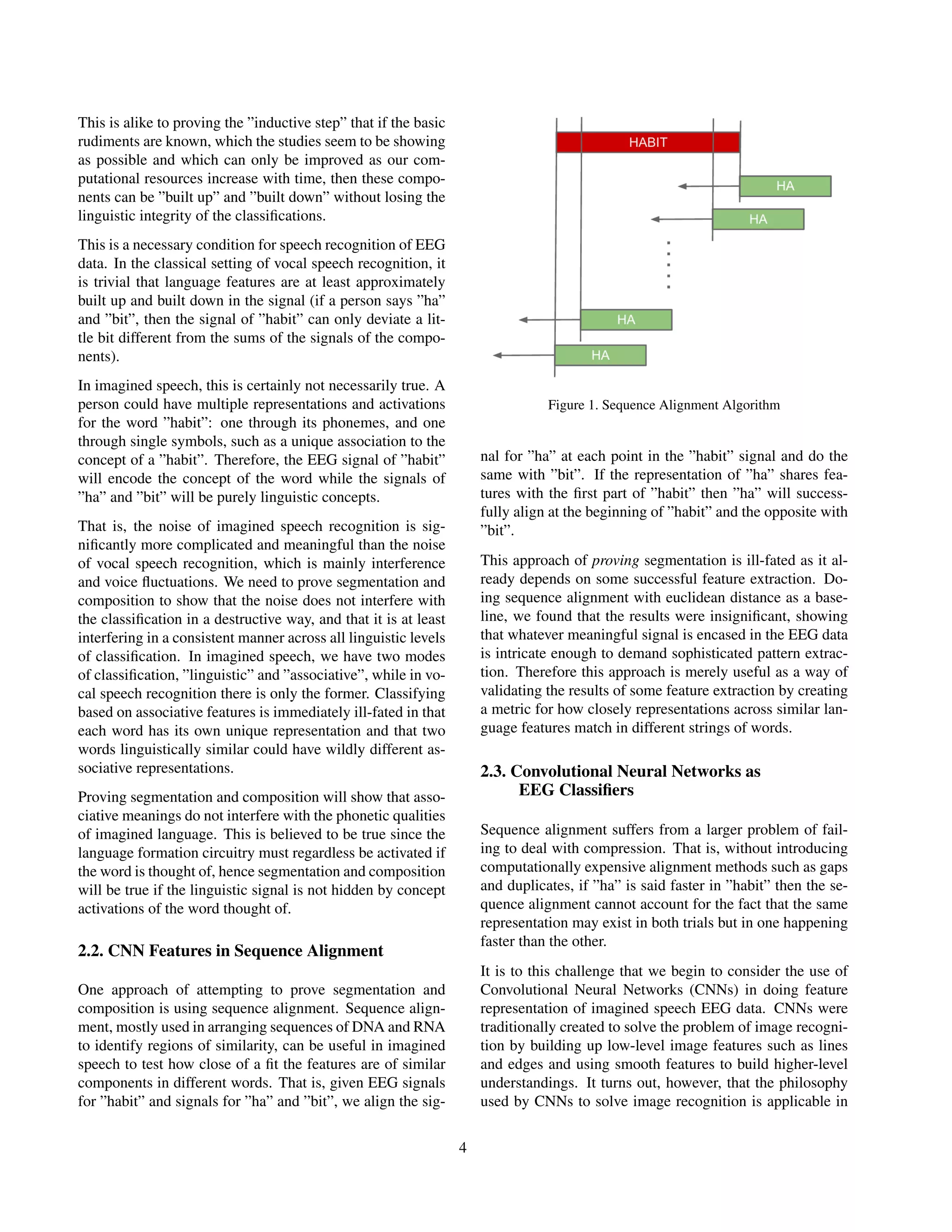

We can answer this question by proving that classifications

of components in imagined speech can be segmented into

correct classifications of the sub-components, and that the

converse is also true, namely that two language symbols

composed next to each other have the same classifications

as if the two symbols were featured as one symbol.

3](https://image.slidesharecdn.com/6b0b738c-6dbe-4b01-ba71-41f65be4cf7a-151214091001/75/Oshri-Khandwala-Chopra199-3-2048.jpg)

![subject as the tones. After a short break, the experiment

was repeated with the other pair of symbols. We then per-

formed the first pair again in another round and the second

pair in the next. In total, 200 readings of each symbol were

collected.

The length of the queuing sound lasted for 0.2 seconds,

enough to perceive the pitch but not too long that response

to the tone interferes with thinking. The subject was given

2.3 seconds and asked to utter the correct syllable once, af-

ter which he was asked to rest his mind until the next beep

was heard.

A time line for a single trial for a symbol pair is shown

below:

3.2. Labelling

The labels were given to the trials based on the construc-

tion of the stacked CNN. That is, for each level, there were

as many classes as there are linguistic features on that level

trained on. For example suppose a component was roughly

the size of a single letter, and the second level took three

components of the layer before. Then the word ”signal”

would have 6 classes in its first level for each letter, and 2

classes in its second level for ”sig” and ”nal”. Note that in

the first level we have 6 different classes because each word

is different. Classes are assigned based on the unique lin-

guistic features that are matched on that level. If the compo-

nent is sufficiently small that no relevant linguistic feature

can be matched to its size, then the classes are just assigned

in order. As an example, if we did letter classifications of

the words ”had” and ”hat”, then the samples on the first

level would have labels of [0, 1, 2] and [0, 1, 3] respec-

tively.

3.3. Preprocessing

For the purposes of this project, we used the Neuroscan soft-

ware to interact with the experiment environment, record

EEG signals in their raw form and storing them in the CNT

format. EEG recordings, in the pure form, have a very

low signal-to-noise ratio and hence need to undergo heavy

preprocessing before being operated upon. In order to do

so, we used Brainstorm, a MATLAB toolkit, to refine our

dataset. This module facilitated the process of removing eye

blinks and other involuntary muscle movements recorded

by the EEG. It also offers a functionality to detect blatantly

corrupt samples and outliers. After the data cleansing step,

the toolkit stores them as MATLAB structures, mat files.

As a final step, we bridge the gap between our coding lan-

guage and the dataset format so that our Python scripts are

able to successfully interface with the data. Python’s Scien-

tific Python (SciPy) module hosts a few input-ouput (IO)

functions - the most important one in this context being

the loadmat function which reads in a MATLAB file as

a numpy array. We stack each instance of a symbol over

each other to obtain the dataset in the right form.

4. Results and Evaluation

We trained and tested our data using a three level stacked

CNN with two layers at each level. The levels above the first

tend to always perform better than the first because the class

size is smaller, so we will focus our analysis on training the

first level. We found that the CNN would severely over-

fit, with making tuneups to encourage generalization having

very little desired effect.

Our tests were run against our samples of ”sig”, ”nal”, and

”signal”. We decided to split each of the syllables into ten

classes, making 20 classes in total in the first layer. There

were 200 samples of each word, and with 10 components

per syllable and 20 for ”signal”, with 90% of the trials taken

for training, we had a training set size of 7200 samples.

Since we ran our experiments on a limited resource com-

puter, we advise future experiments to perform a data aug-

mentation of averaging different combinations of samples

to produce artificially new trials.

We use an SVM loss function to capture the notion that the

symbolic components should be as distinct and dissimilar

as possible. Our two best models have a training accuracy

of 94% with a validation accuracy of 10%, and the second

has a training accuracy of 45% with a validation accuracy

of 29%. The performance of the second was rare and not

reproducible, affected by weight initializations.

In every training case, RMSProp unfailingly solved the op-

timization problem, reducing the loss function in a strongly

exponential manner. Strangely, many times the loss fell

without improvements in the training or validation rate.

This must indicate that the optimization problem does not

sufficiently represent the situation and that a stronger pat-

tern recognition model should be used.

Using dropout, increasing the regularization rate, and in-

creasing the number of samples trained on had little ef-

7](https://image.slidesharecdn.com/6b0b738c-6dbe-4b01-ba71-41f65be4cf7a-151214091001/75/Oshri-Khandwala-Chopra199-7-2048.jpg)

![fect on the validation rate. Increasing the number of fil-

ters had a consistent positive increase on the validation rate.

The innefectiveness of dropout and the regularization rate

likely indicate that the patterns observed are highly intri-

cate with weights that are best not biased to be smooth or

averaged.

The low validation rate should not eclipse the fact that train-

ing produced extremely high results, suggesting that even

with one convolutional layer and one affine layer strongly

distinguishing features were found. This suggests that with

a significantly stronger model, one that matches the ex-

tent of the problem discussed in the beginning of the pa-

per, trained using GPUs and other advanced methods, more

global patterns may be found.

5. Future Work

In conclusion, we found in our limited experiment that

small-layered CNNs offer modest performance accuracies

in basic imagined speech tasks but likely nowhere near to

fully reaching or testing the validity of a stacked language

model. Further experiments in this field must increase the

size of the dataset, improve the breadth of the data, and train

on much larger CNNs to saturate their effectiveness on the

data.

We encourage further experimentation with pattern recogni-

tion methods that fit well into the stacked model of language

understanding. For example, this paper does not mention

the use of Recurrent Neural Networks and their widely ob-

served success in vocal speech recognition [1], which could

also prove effective in imagined speech recognition.

6. Acknowledgements

We would like to acknowledge the efforts of the follow-

ing people: Prof. Fei Fei Li for overseeing this project,

Dave Deriso for his inspiring support, Andrej Karpathy

and CS231N TAs for instruction in the art of CNNs, Prof.

Takako Fujioka for lending her time and effort in using her

lab, and Rafael Cosman for helping fuel some of the ideas

presented in this paper.

References

[1] Awni Hannun, Carl Case, Jared Casper, Bryan Catan-

zaro, Greg Diamos, Erich Elsen, Ryan Prenger, San-

jeev Satheesh, Shubho Sengupta, Adam Coates, and

Andrew Y. Ng. Deep speech: Scaling up end-to-end

speech recognition. ArXiv, 2014.

[2] B.V.K Kumar Vijaya and K Brigham. Imagined

speech classification with eeg signals for silent com-

munication: A preliminary investigation into synthetic

telepathy. Bioinformatics and Biomedical Engineering

(iCBBE), 2010.

[3] Lorenzo Magrassi, Giuseppe Aromataris, Alessandro

Cabrini, Valerio Annovazzi-Lodi, and Andrea Moro.

Sound representation in higher language areas dur-

ing language generation. Proceedings of the National

Academy of Science of the United States of America,

2014.

[4] Marc Oettinger. Decoding heard speech and imagined

speech from human brain signals. Office of Intellectual

Property Industry Research Alliances, 2012.

8](https://image.slidesharecdn.com/6b0b738c-6dbe-4b01-ba71-41f65be4cf7a-151214091001/75/Oshri-Khandwala-Chopra199-8-2048.jpg)