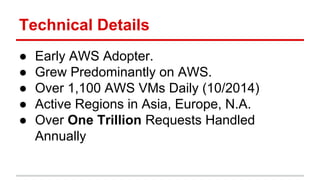

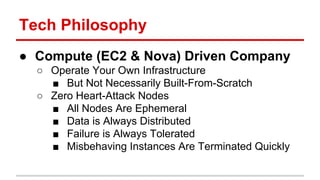

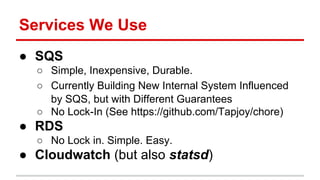

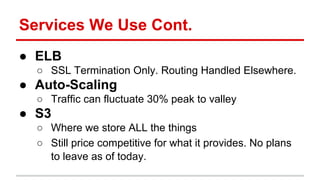

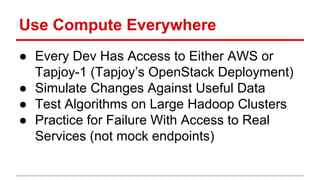

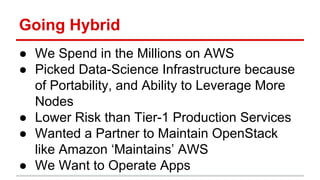

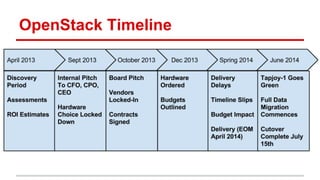

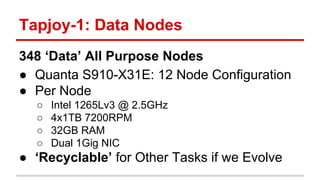

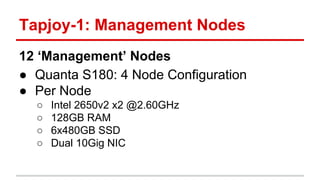

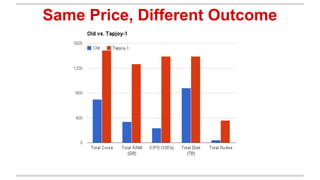

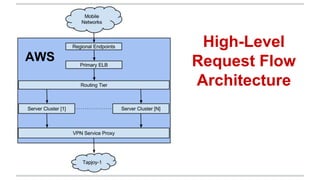

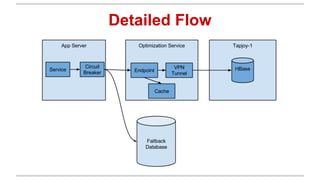

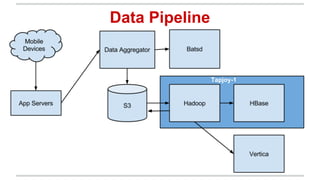

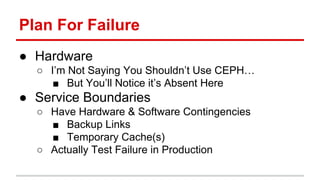

The document discusses Tapjoy's use of OpenStack and AWS. Tapjoy is a global app-tech startup that powers monetization, analytics, user acquisition, and retention for mobile developers. The company was an early AWS adopter, but after growing to more than 1,100 AWS VMs running daily, it decided to build its own OpenStack deployment (Tapjoy-1) for additional compute capacity and flexibility. Key points include partnerships with Metacloud and Equinix to deploy and manage Tapjoy-1, challenges around hardware delays and negotiations, and plans to use both AWS and Tapjoy-1 flexibly based on application needs.