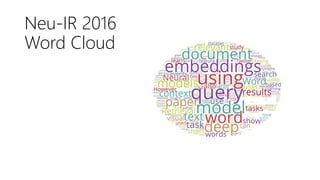

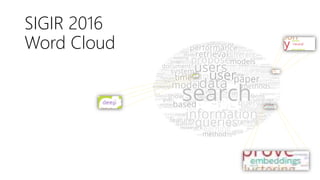

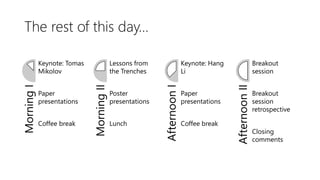

This document summarizes the First SIGIR Workshop on Neural Information Retrieval (Neu-IR 2016). It reports the number of registrations (121), submissions (27), accepted papers (19), countries represented (9), and reviewers (14). It also lists the program committee members and describes the workshop's program, which included keynote speeches, paper and poster presentations, and breakout sessions.