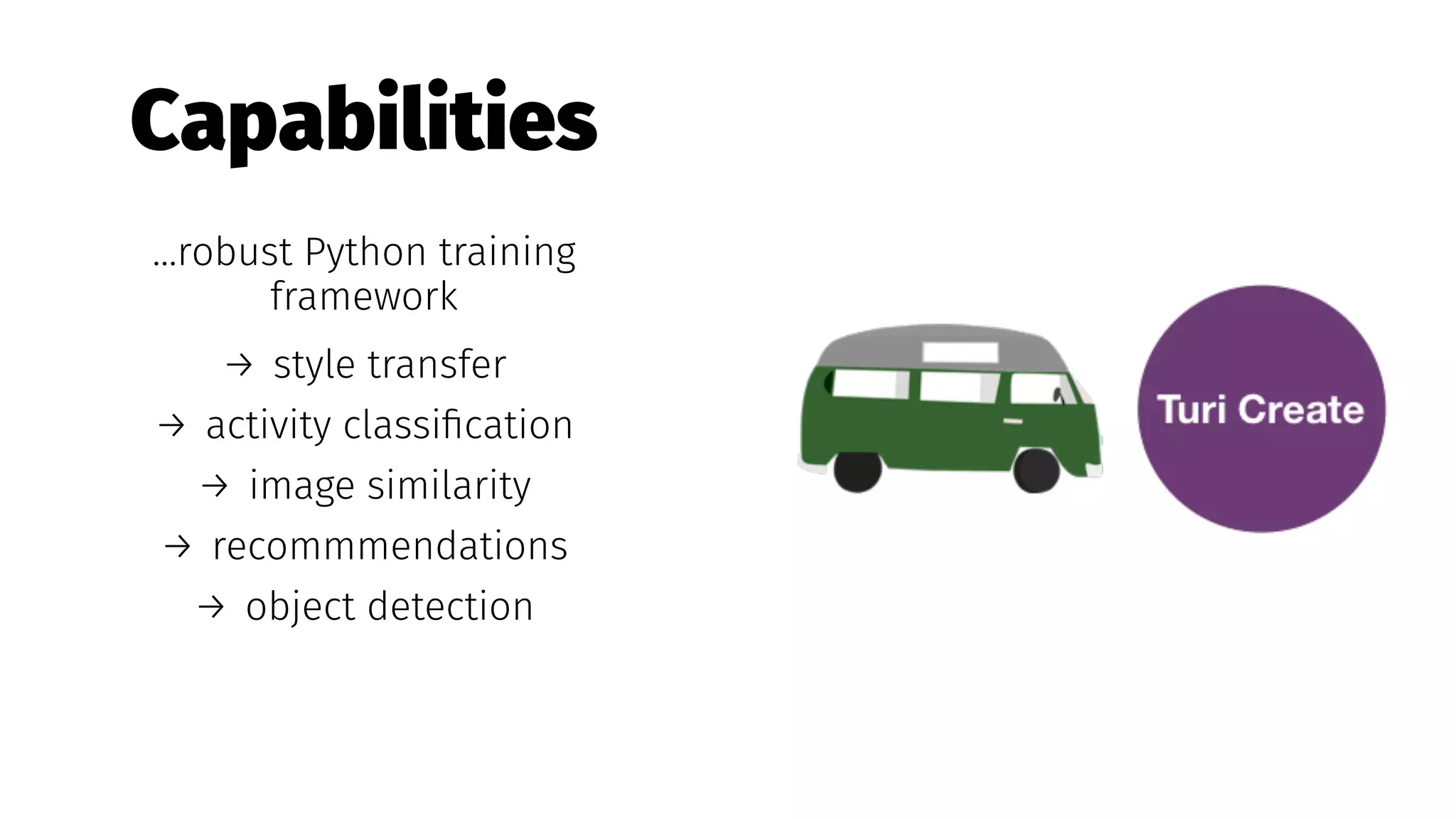

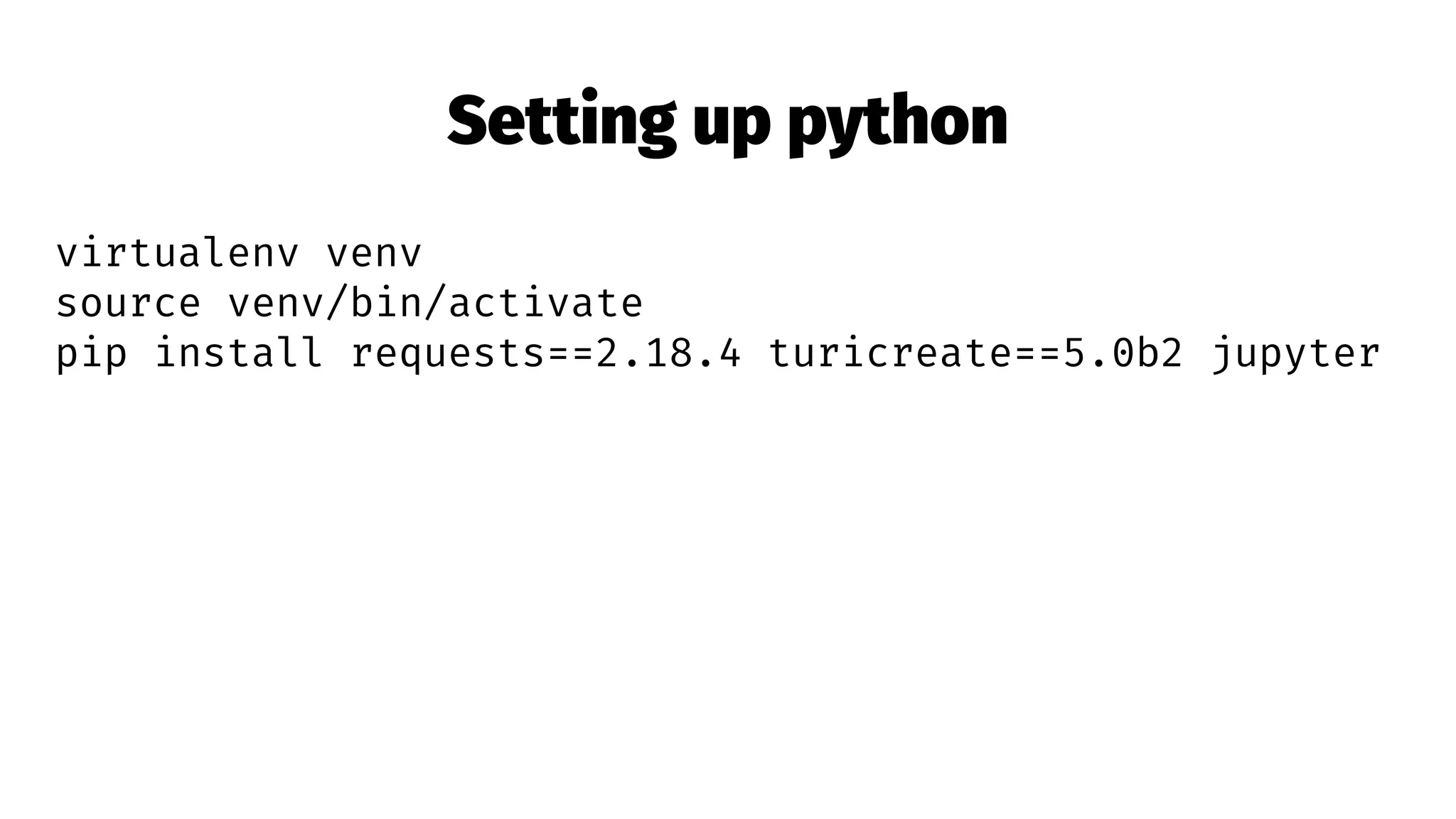

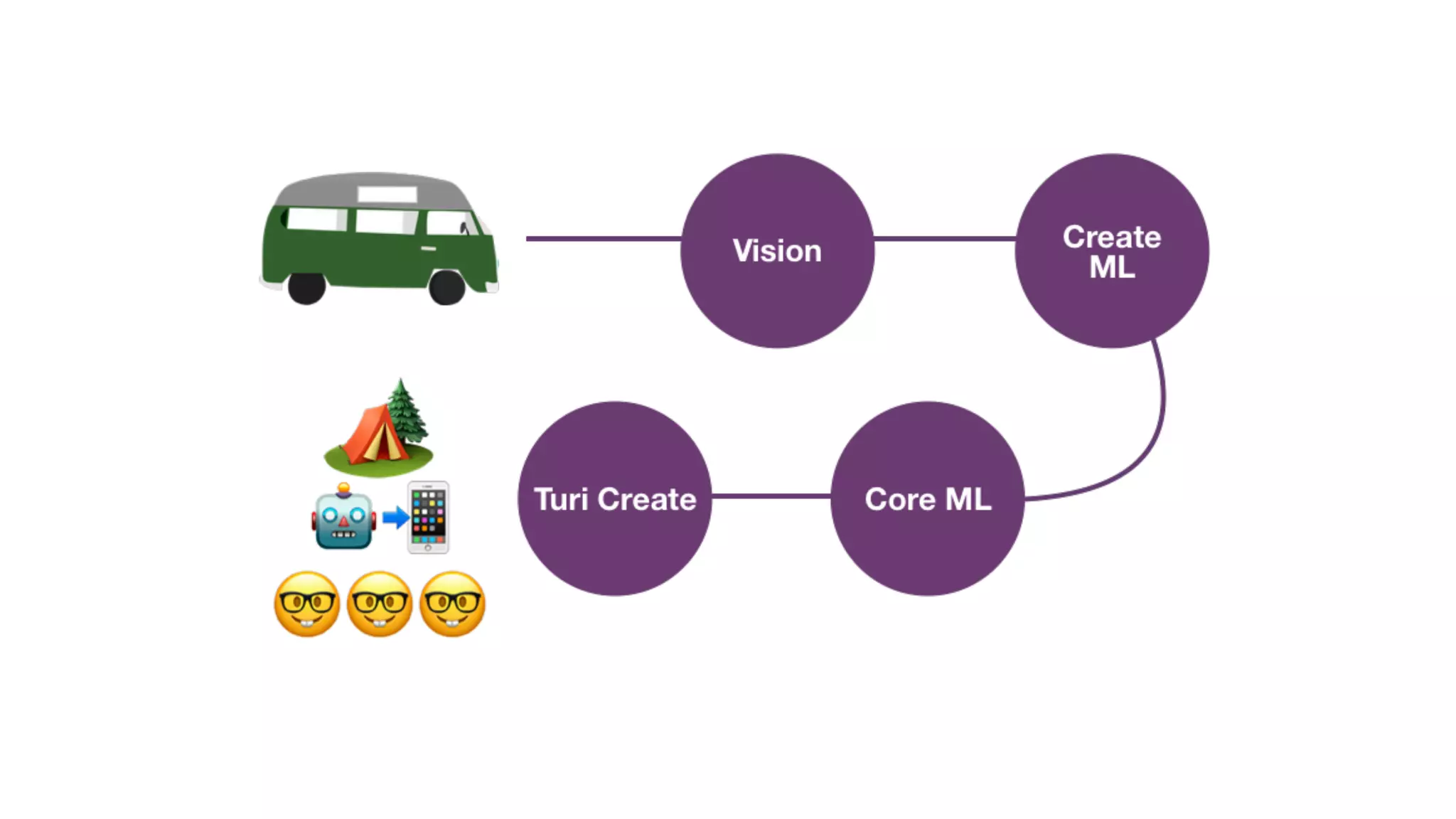

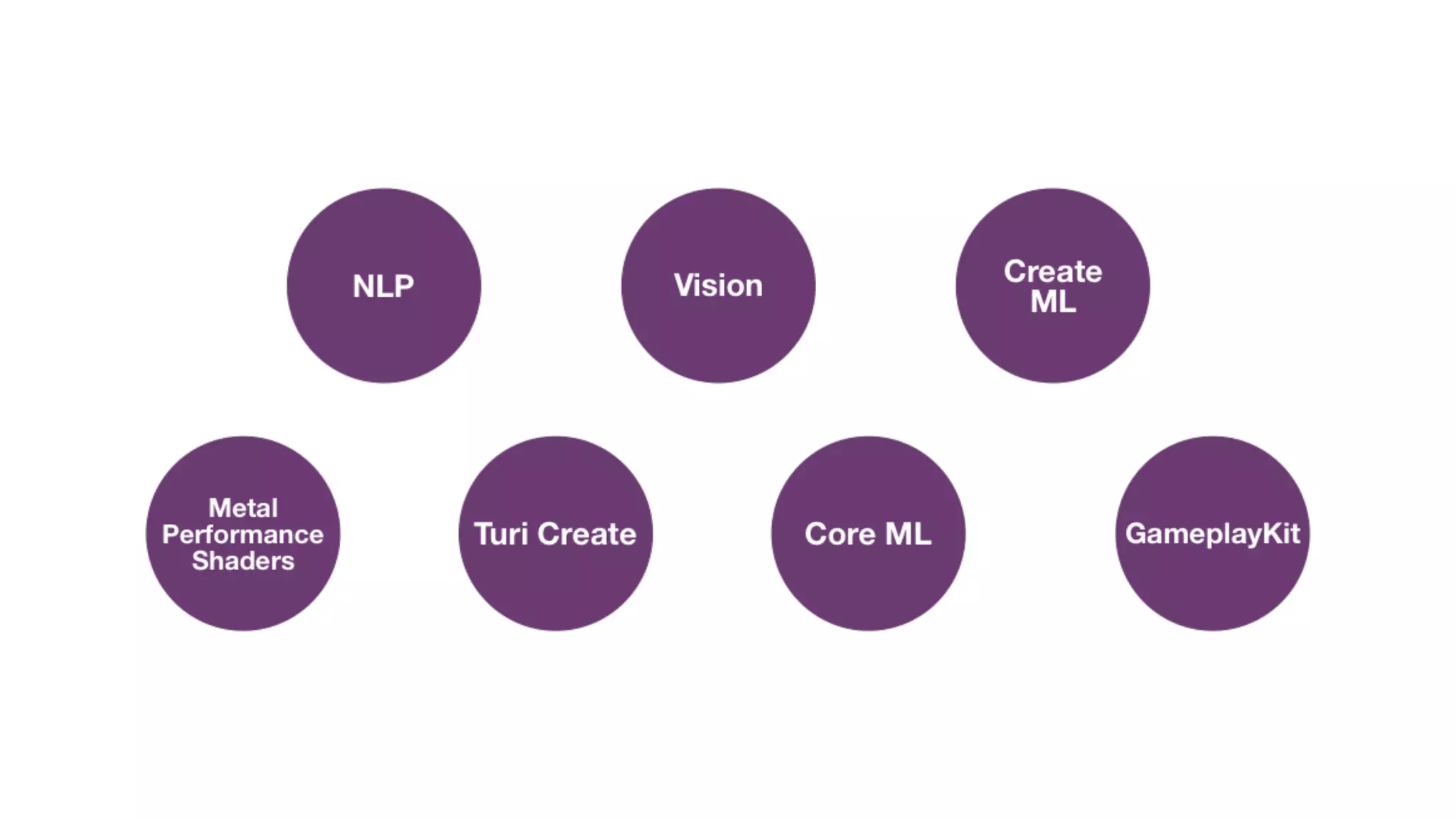

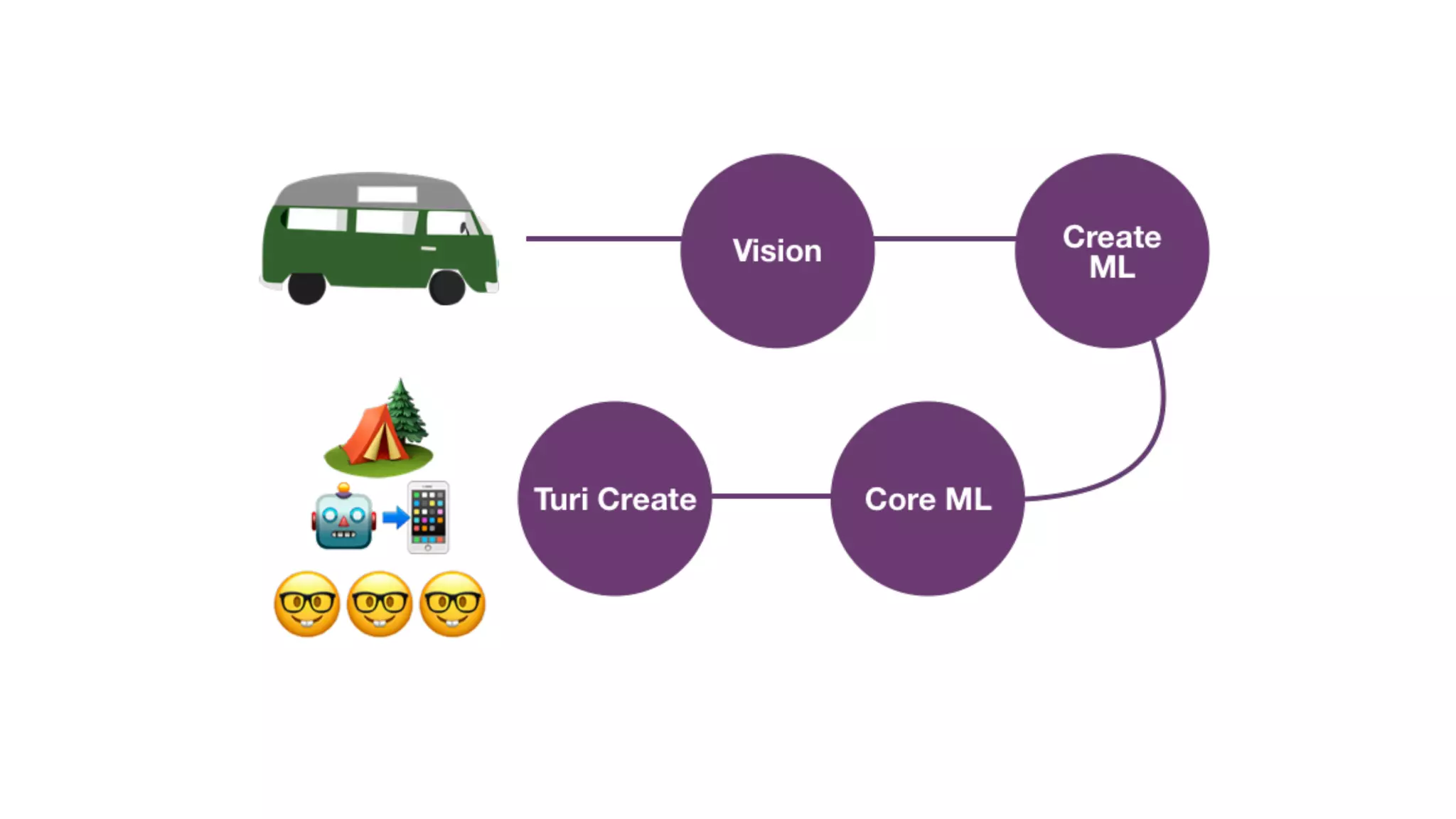

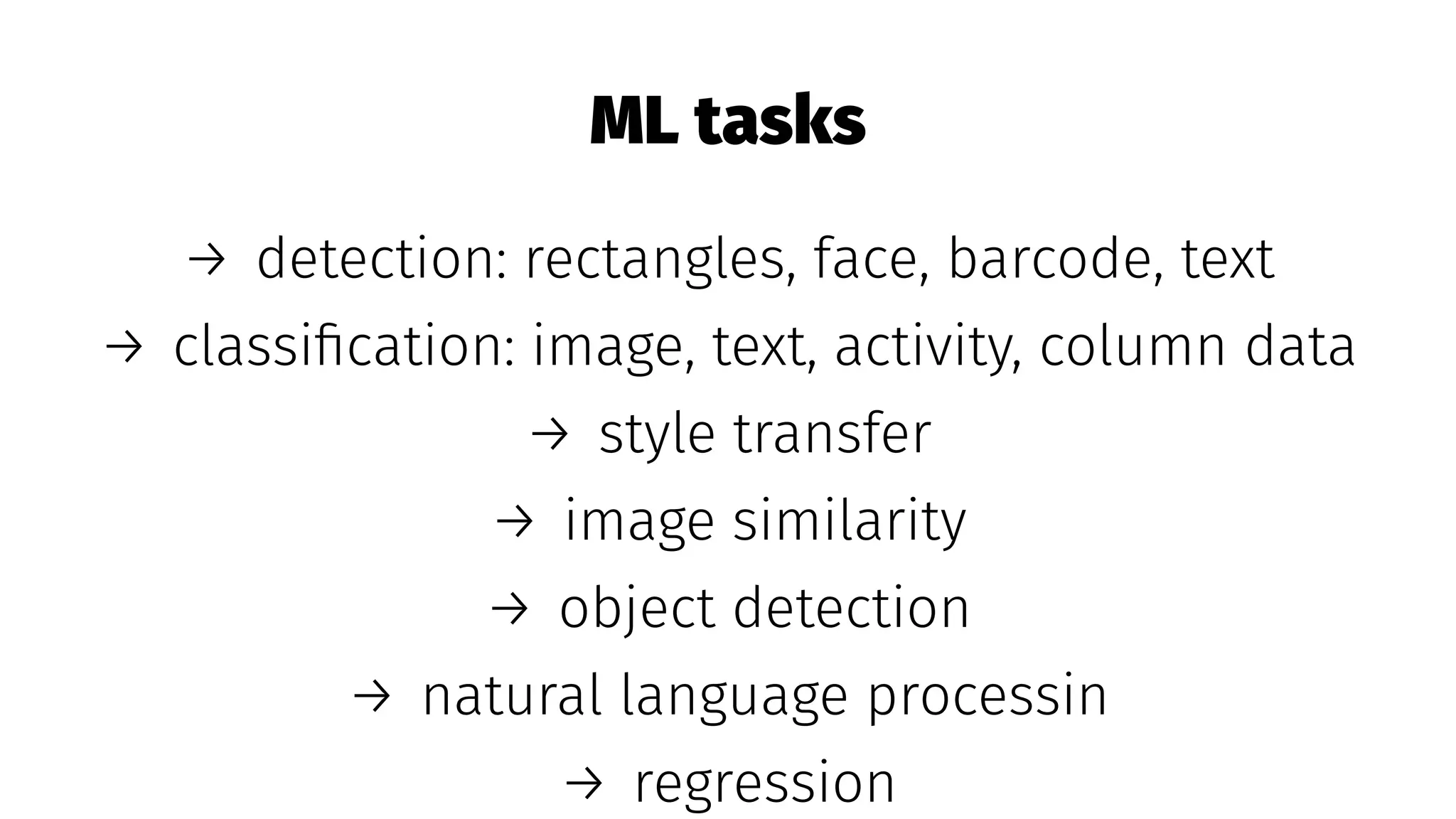

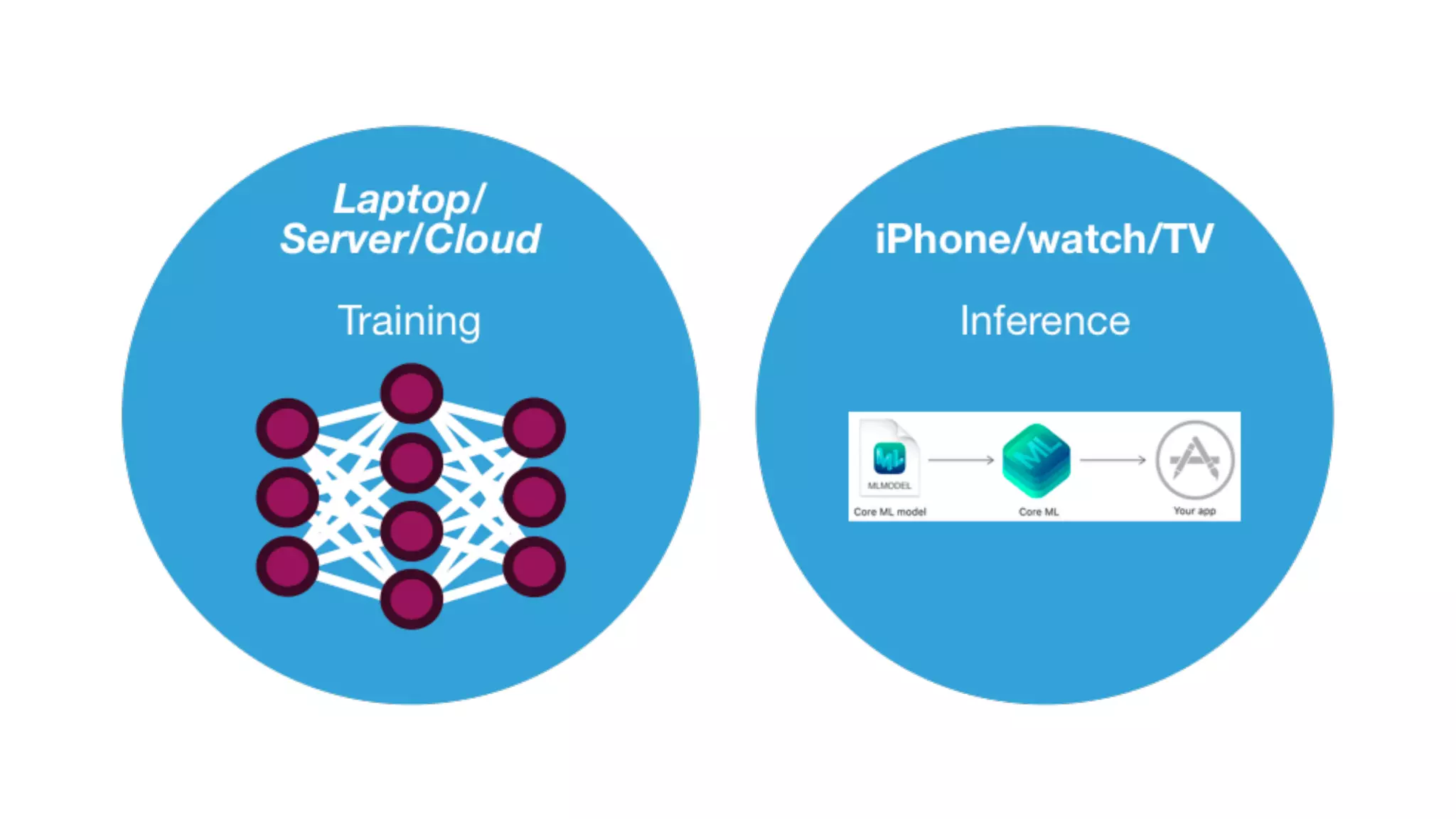

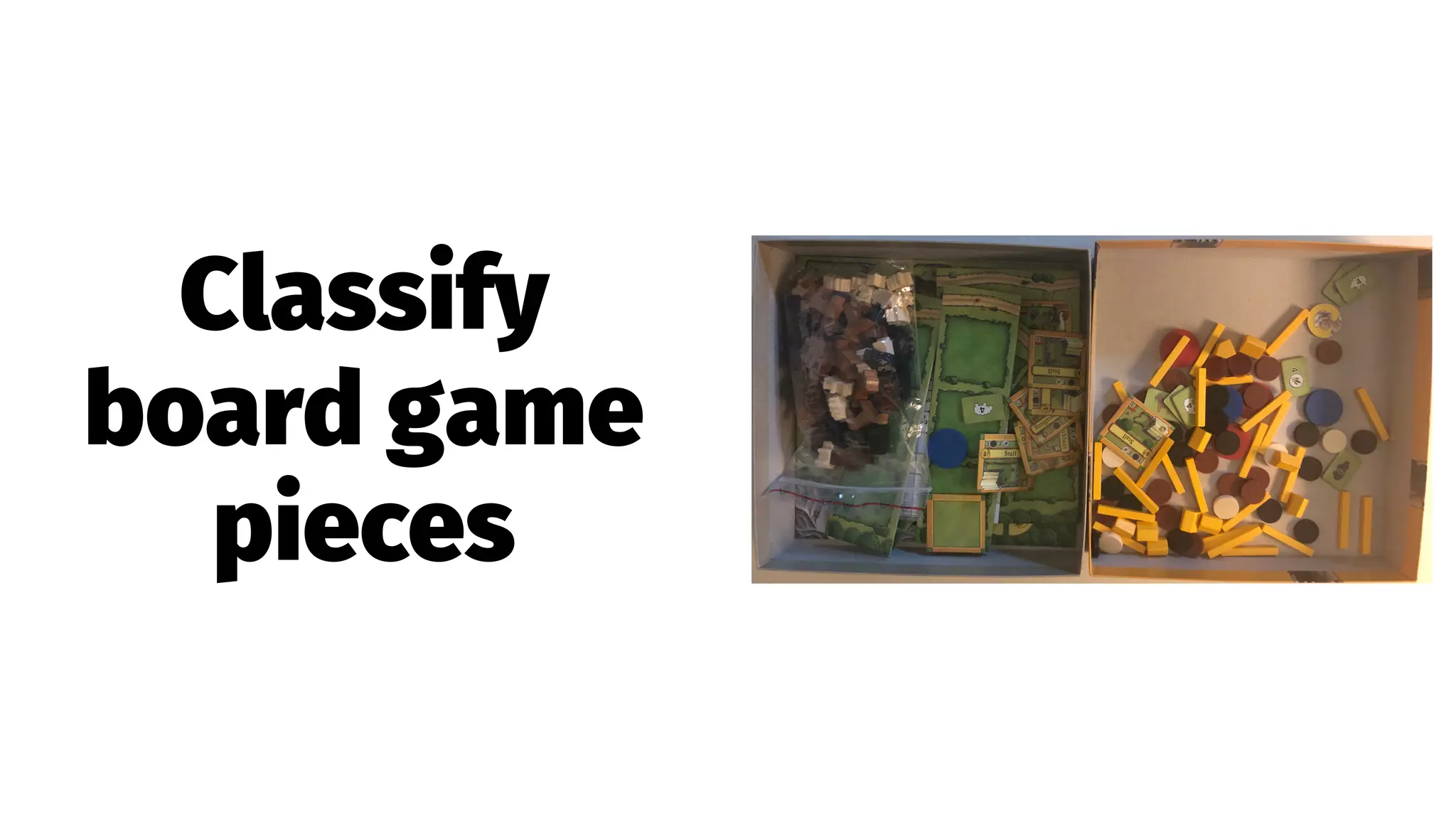

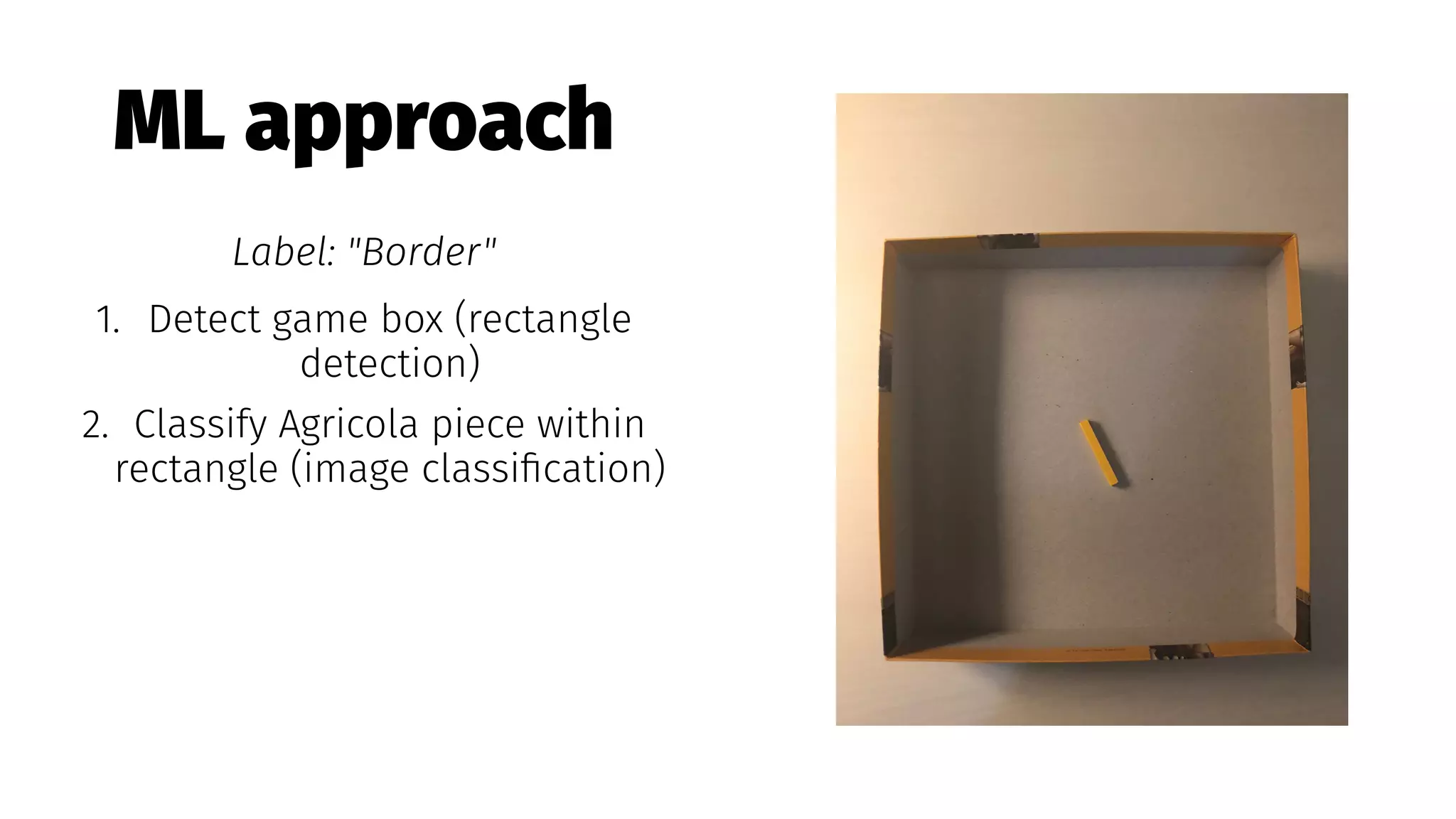

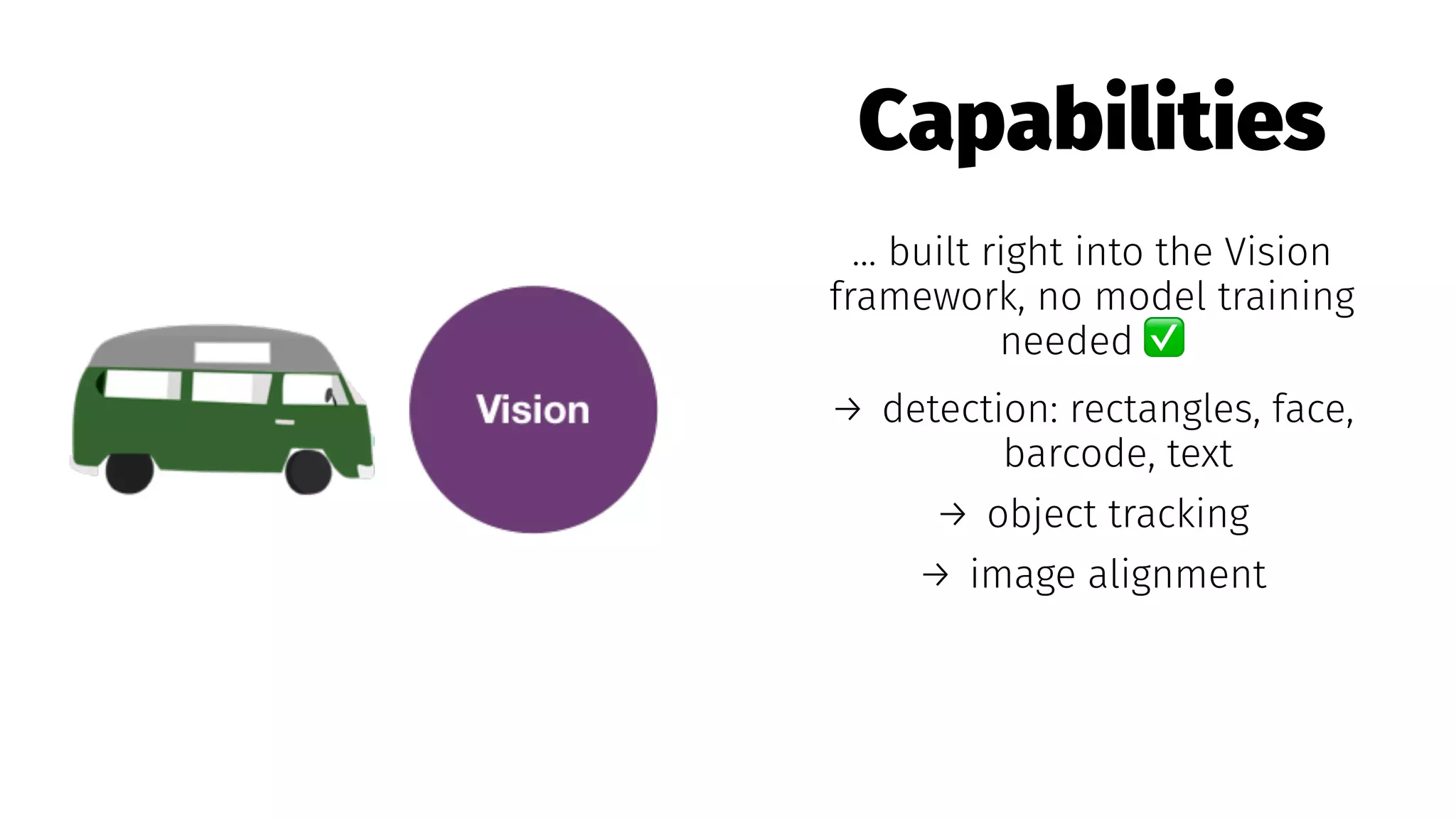

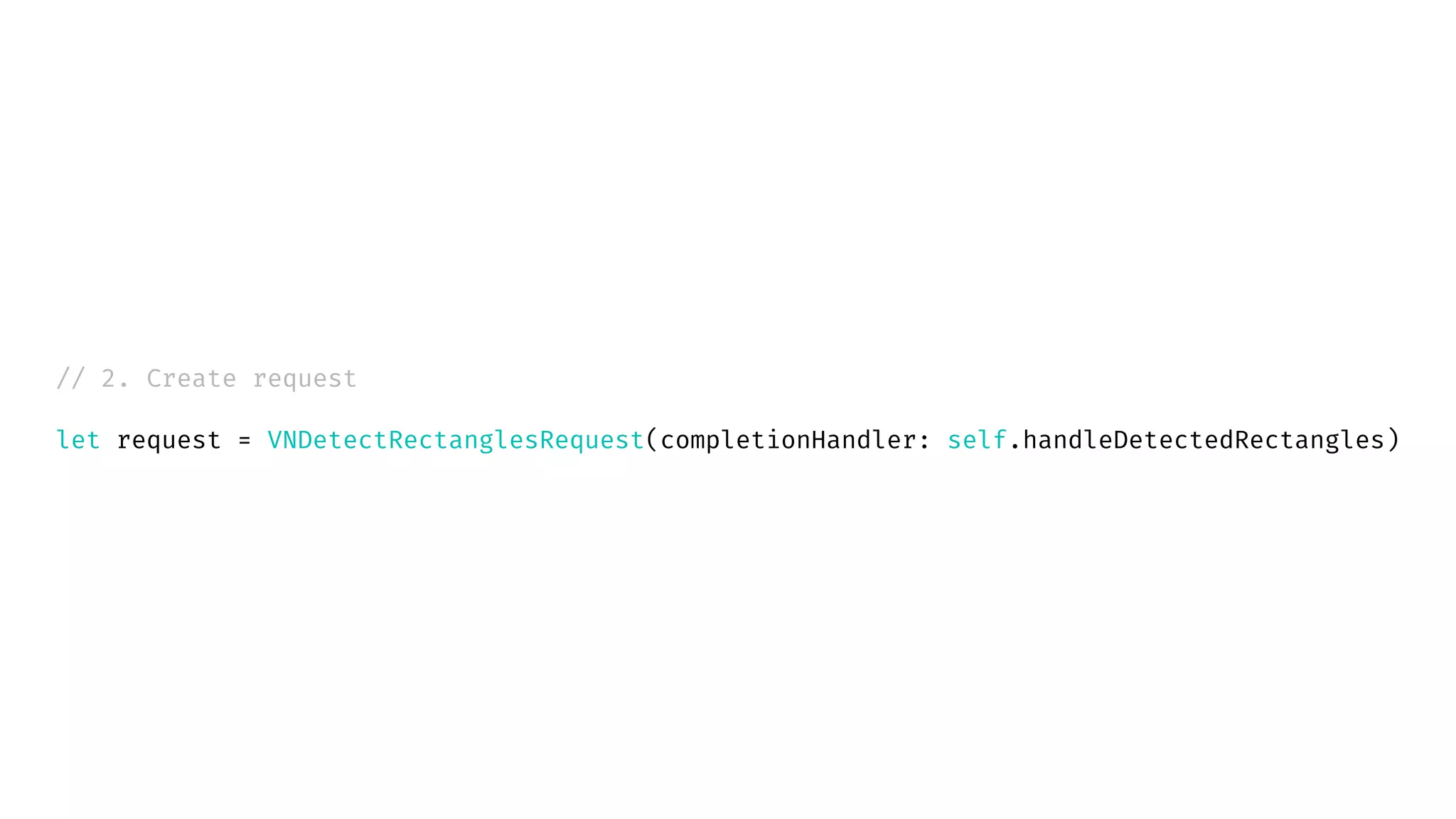

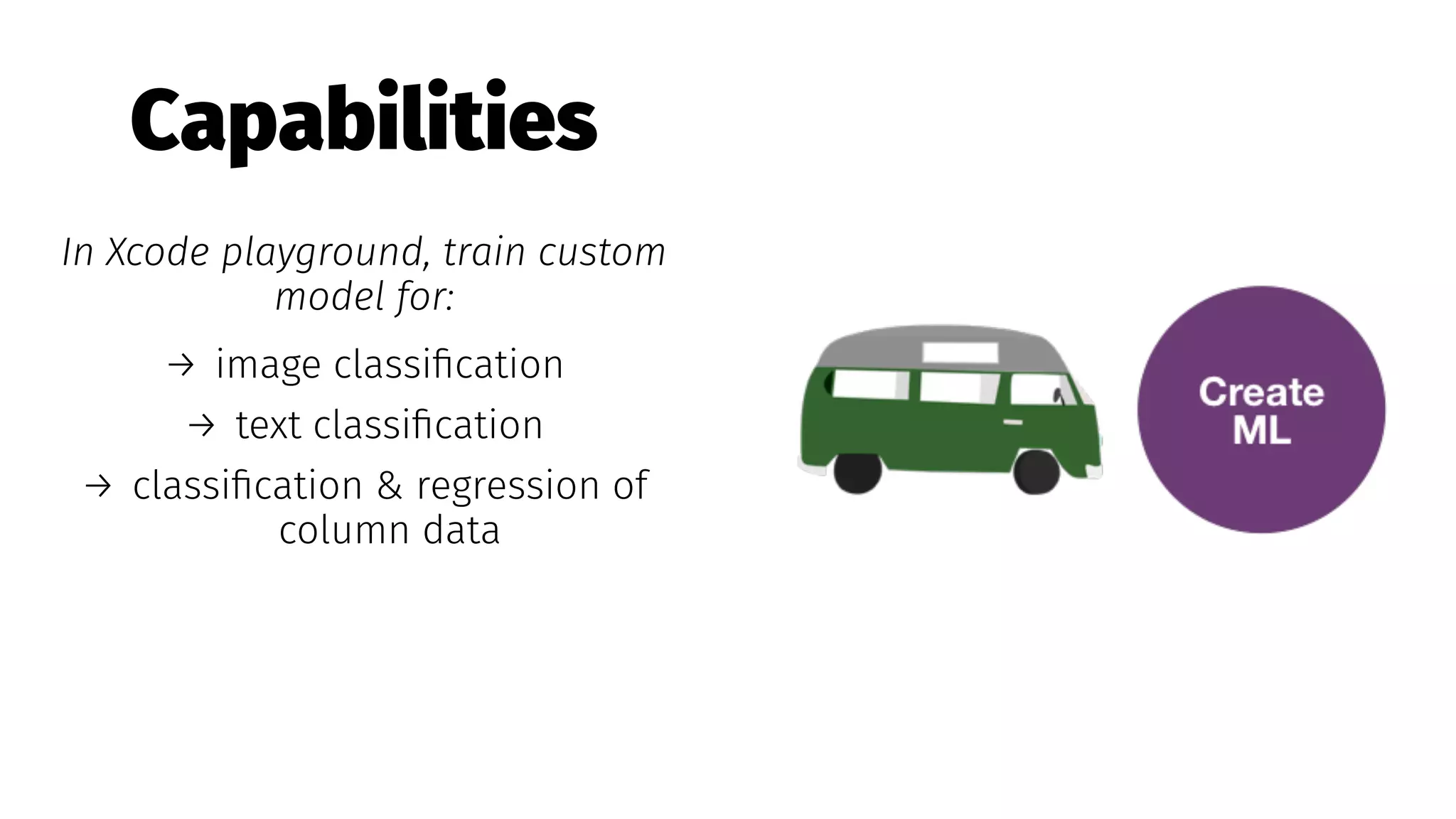

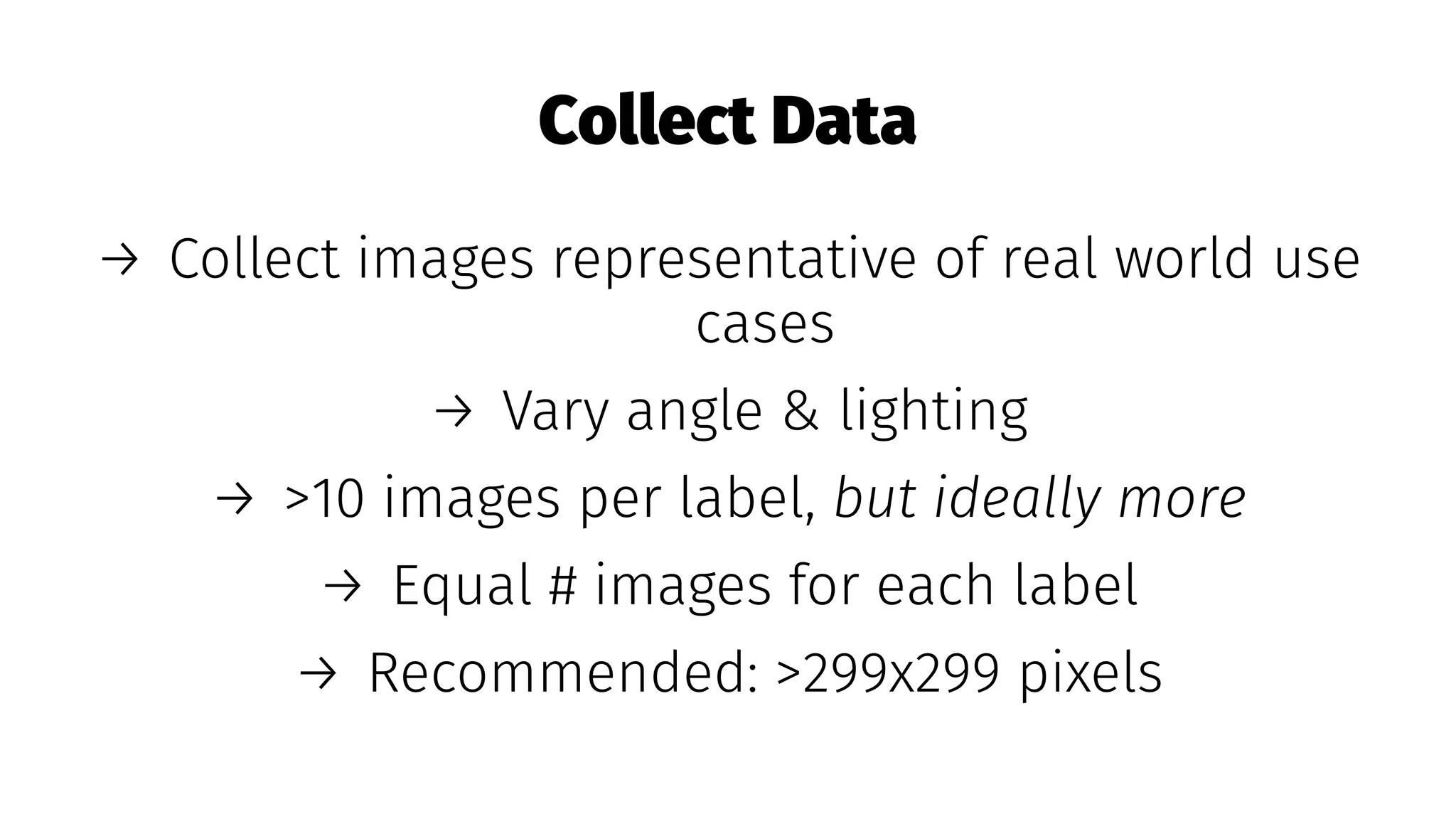

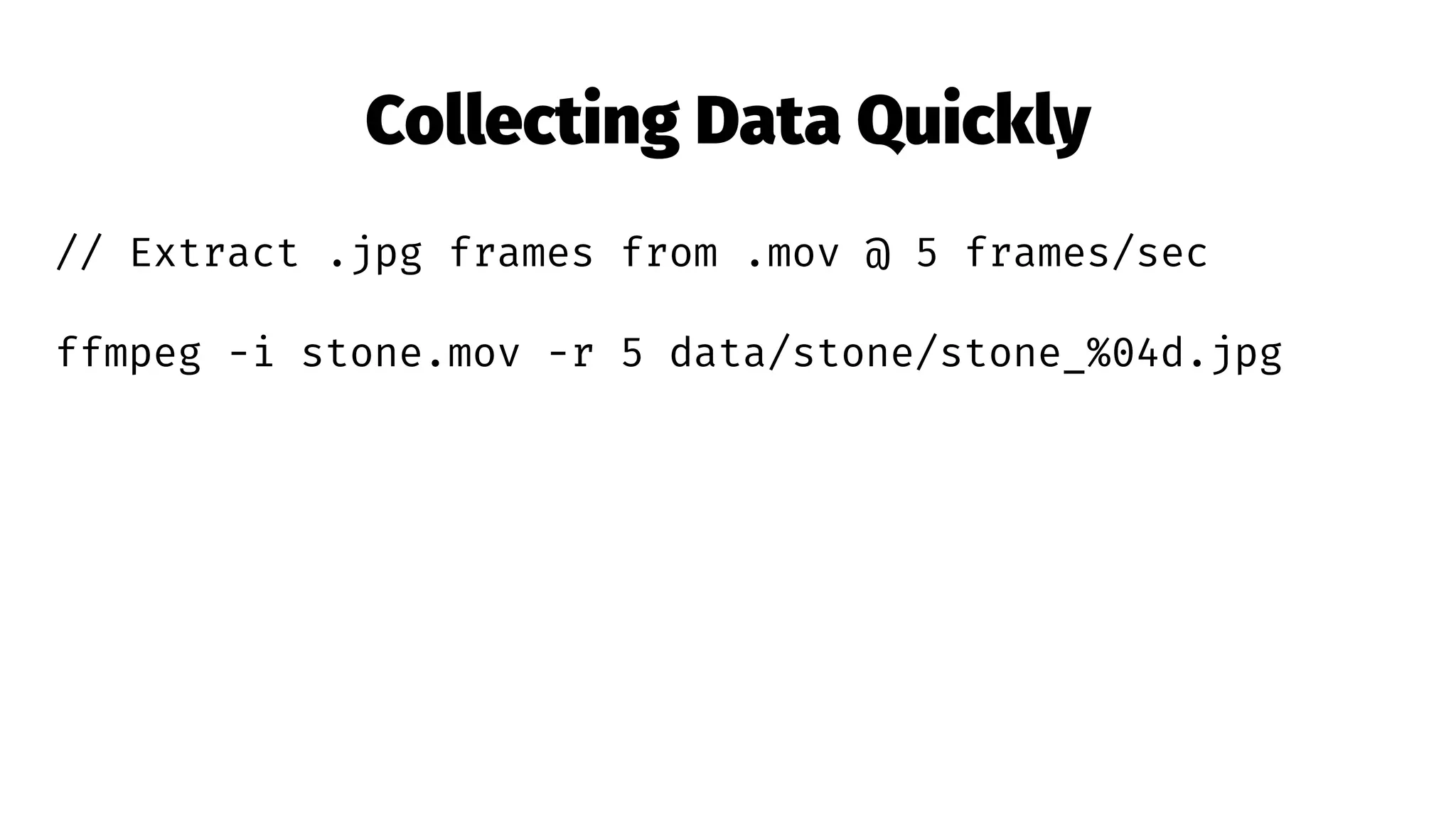

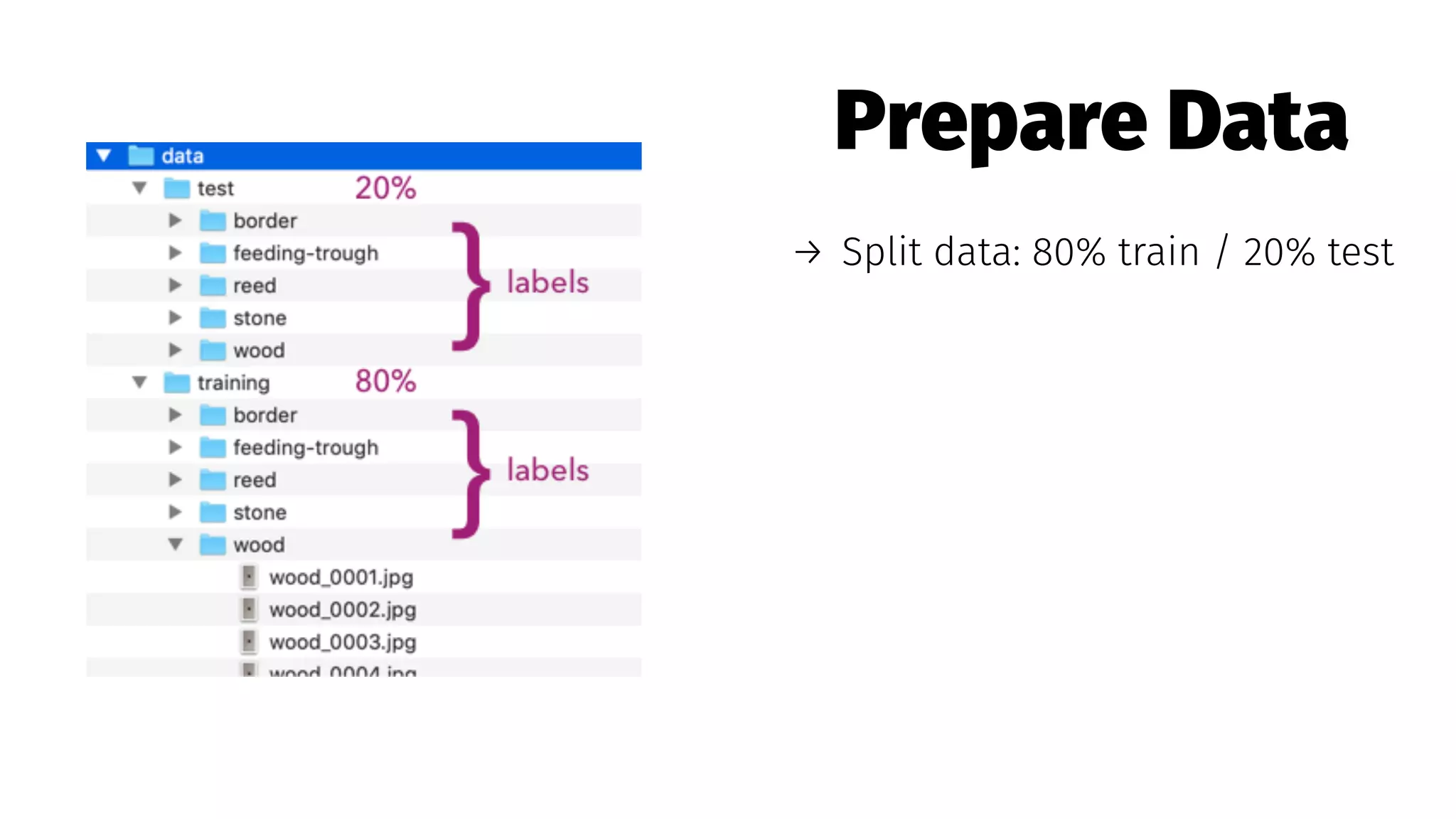

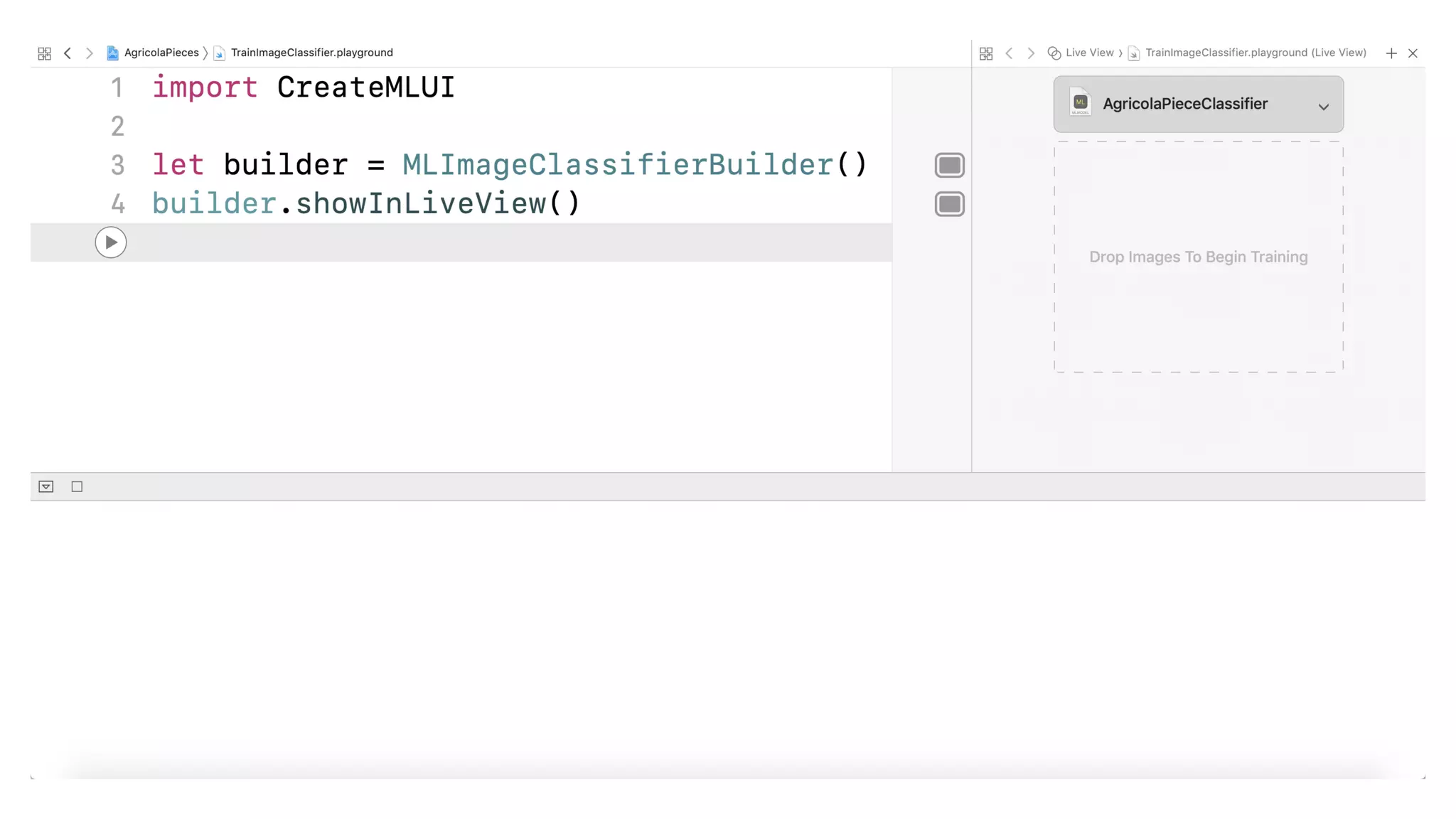

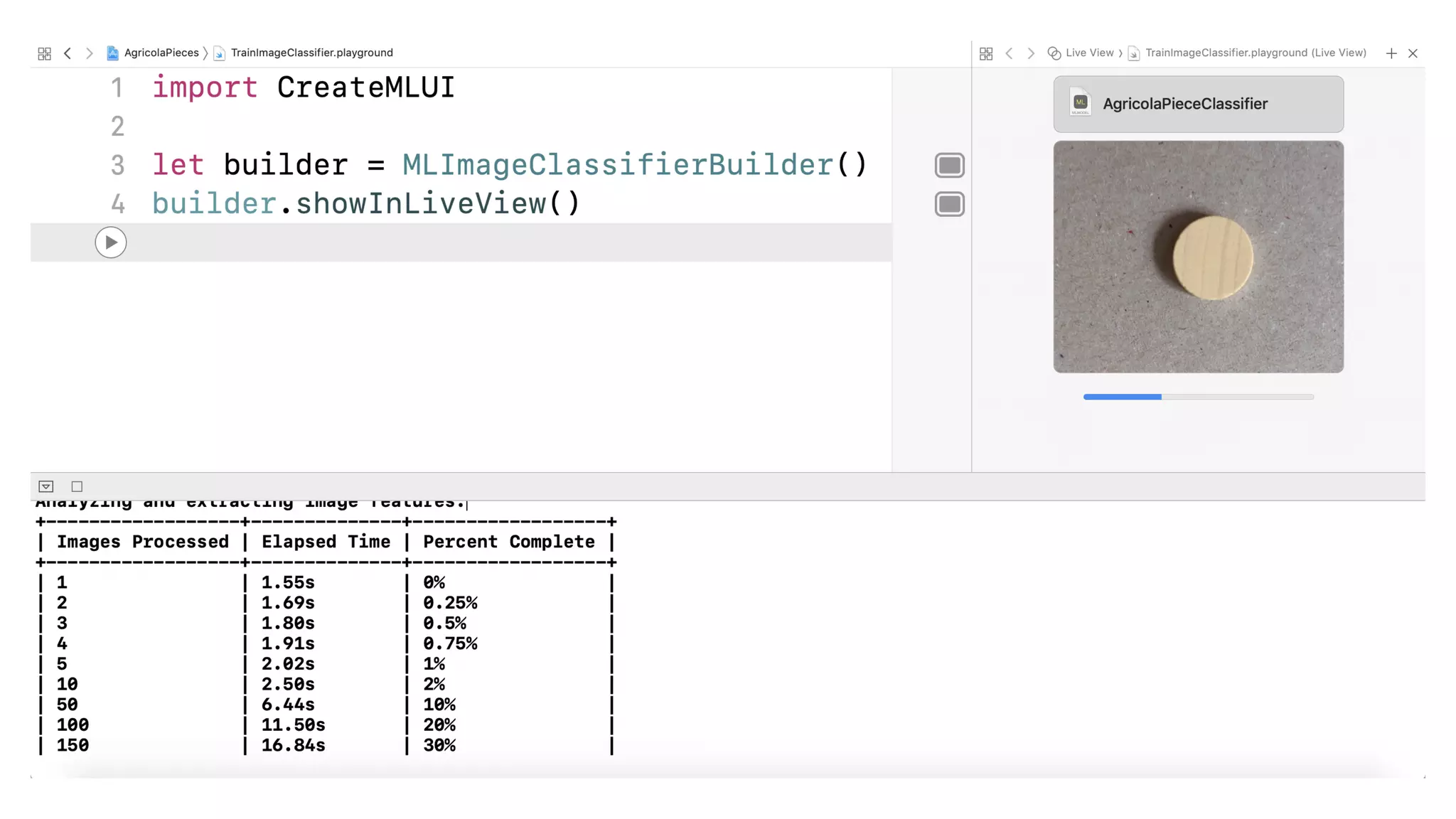

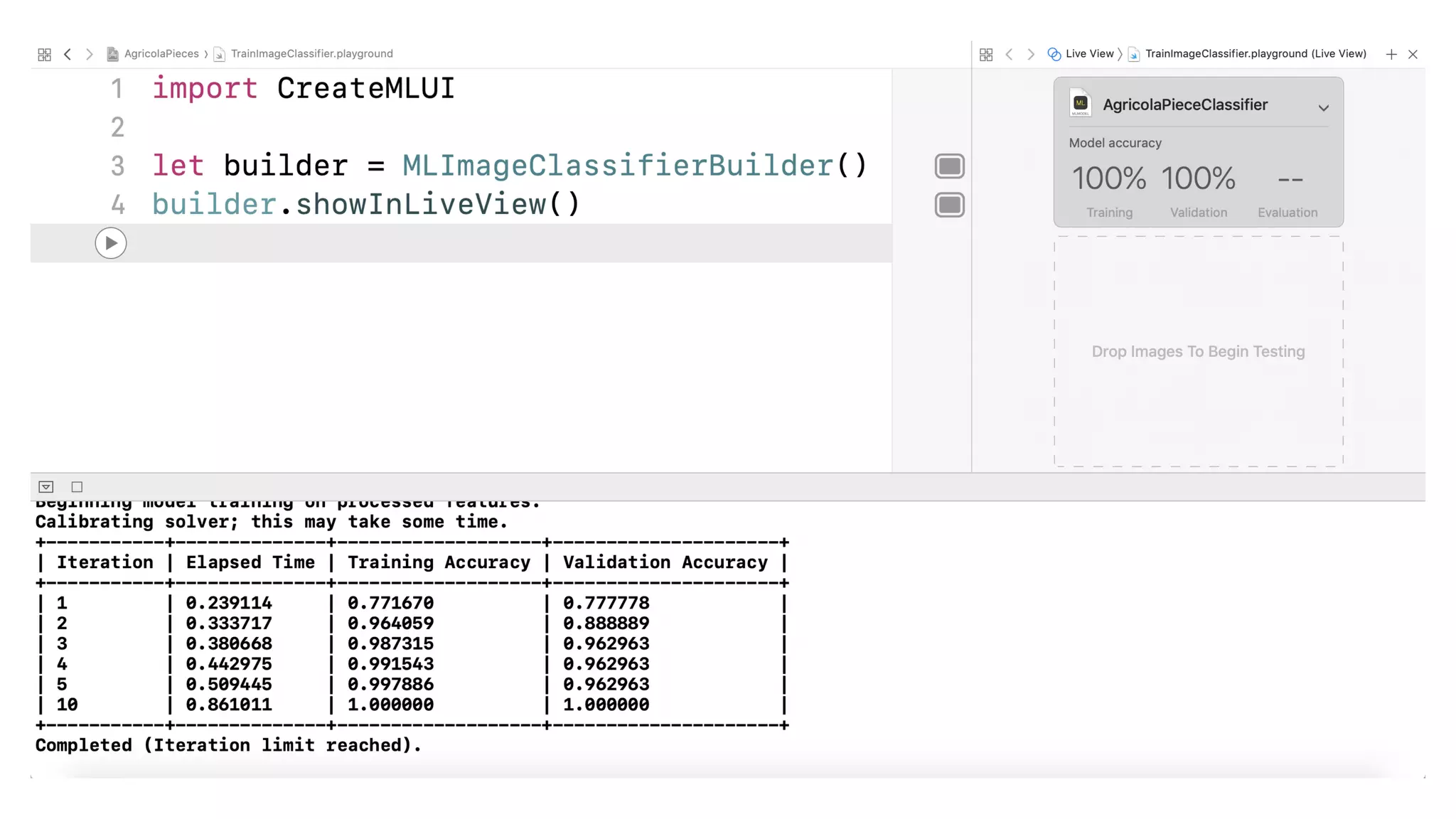

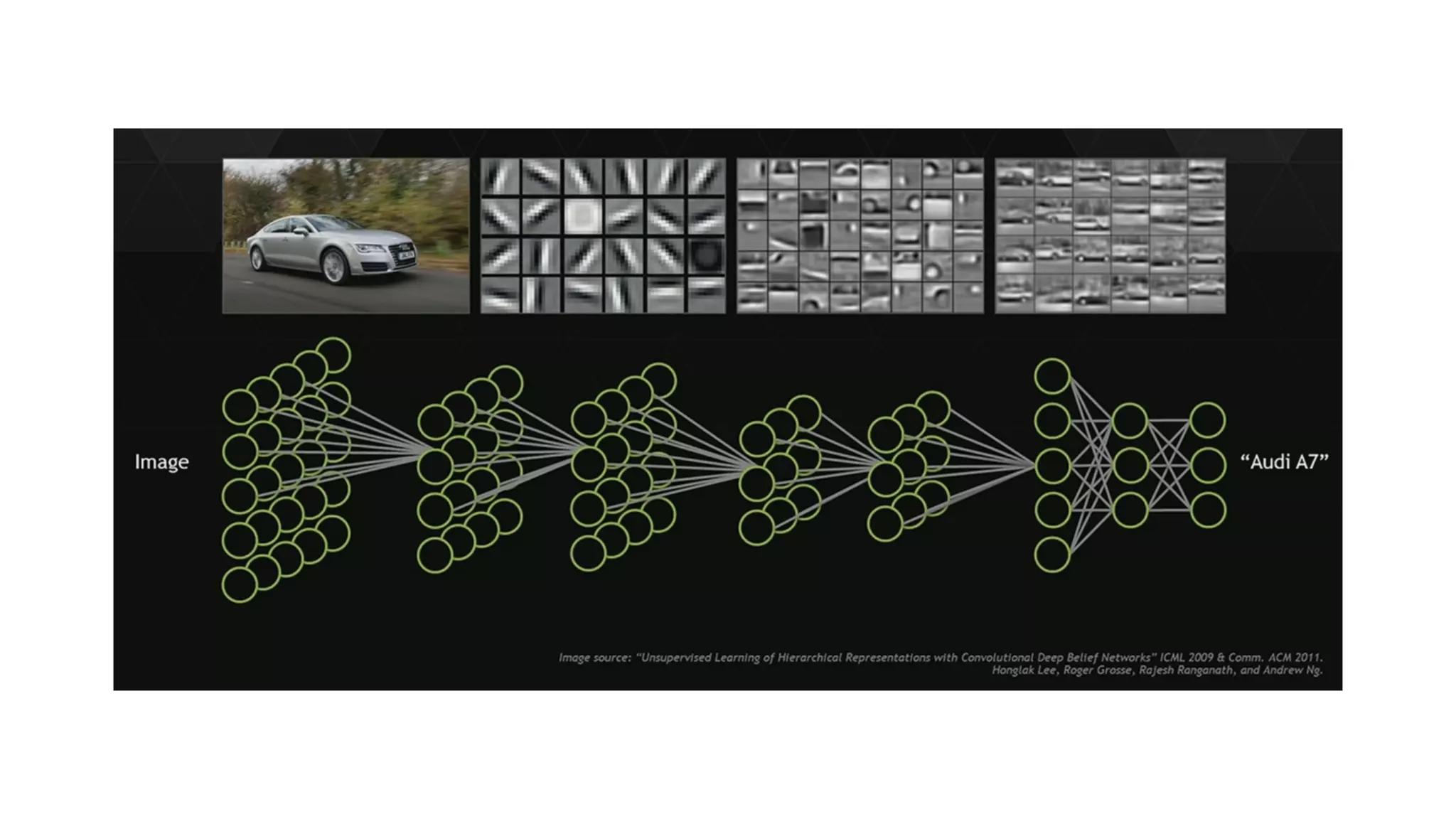

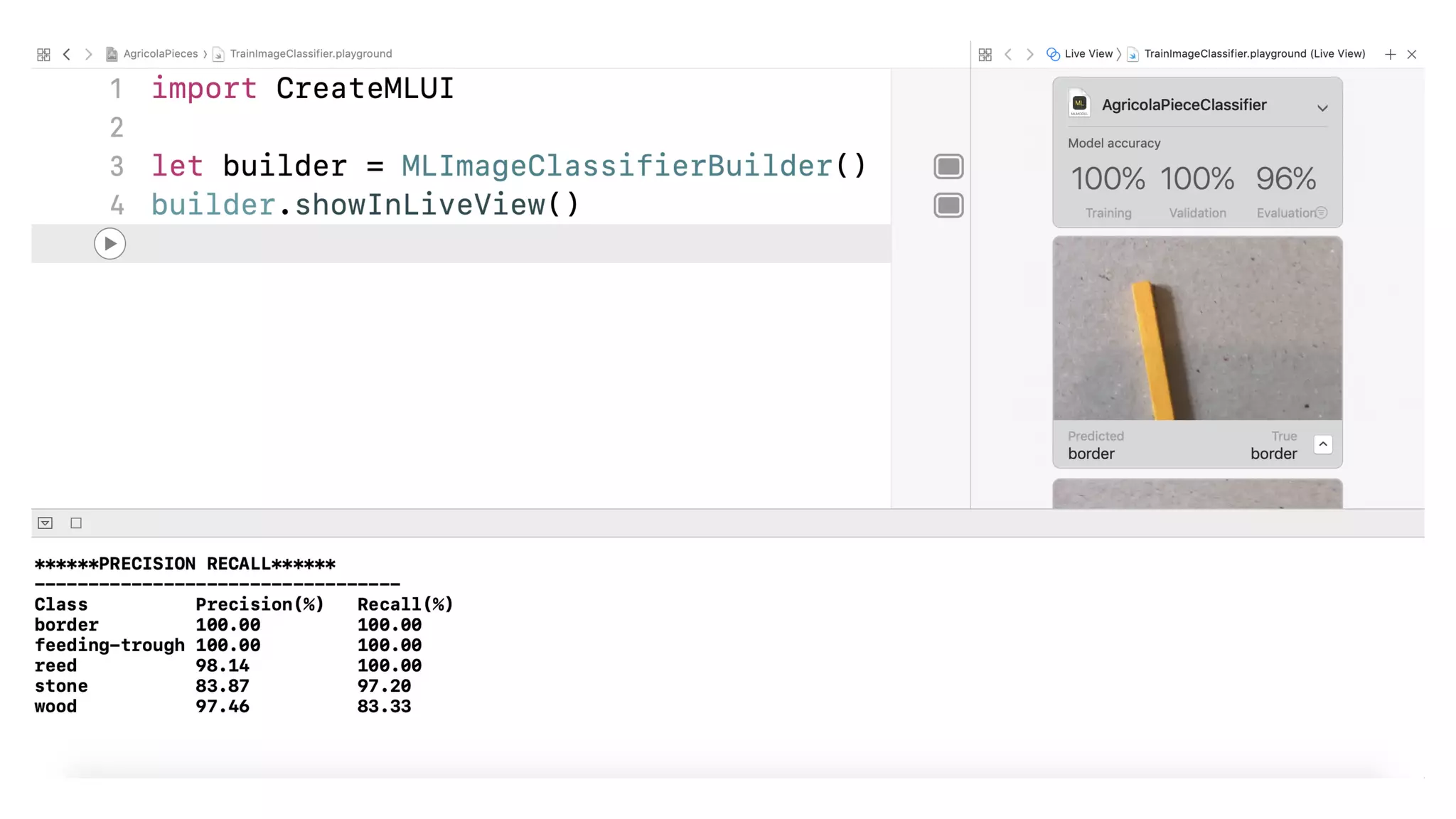

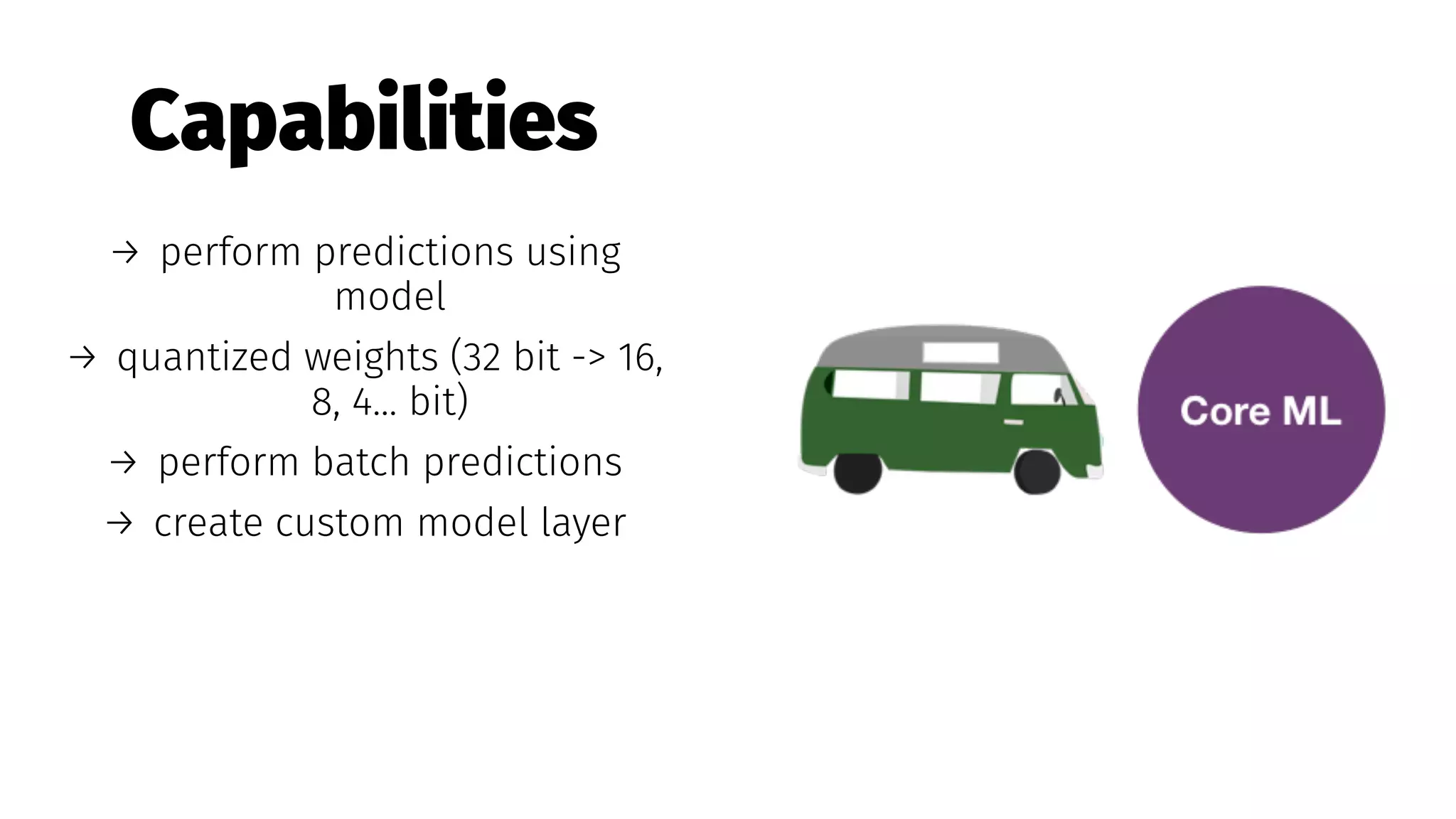

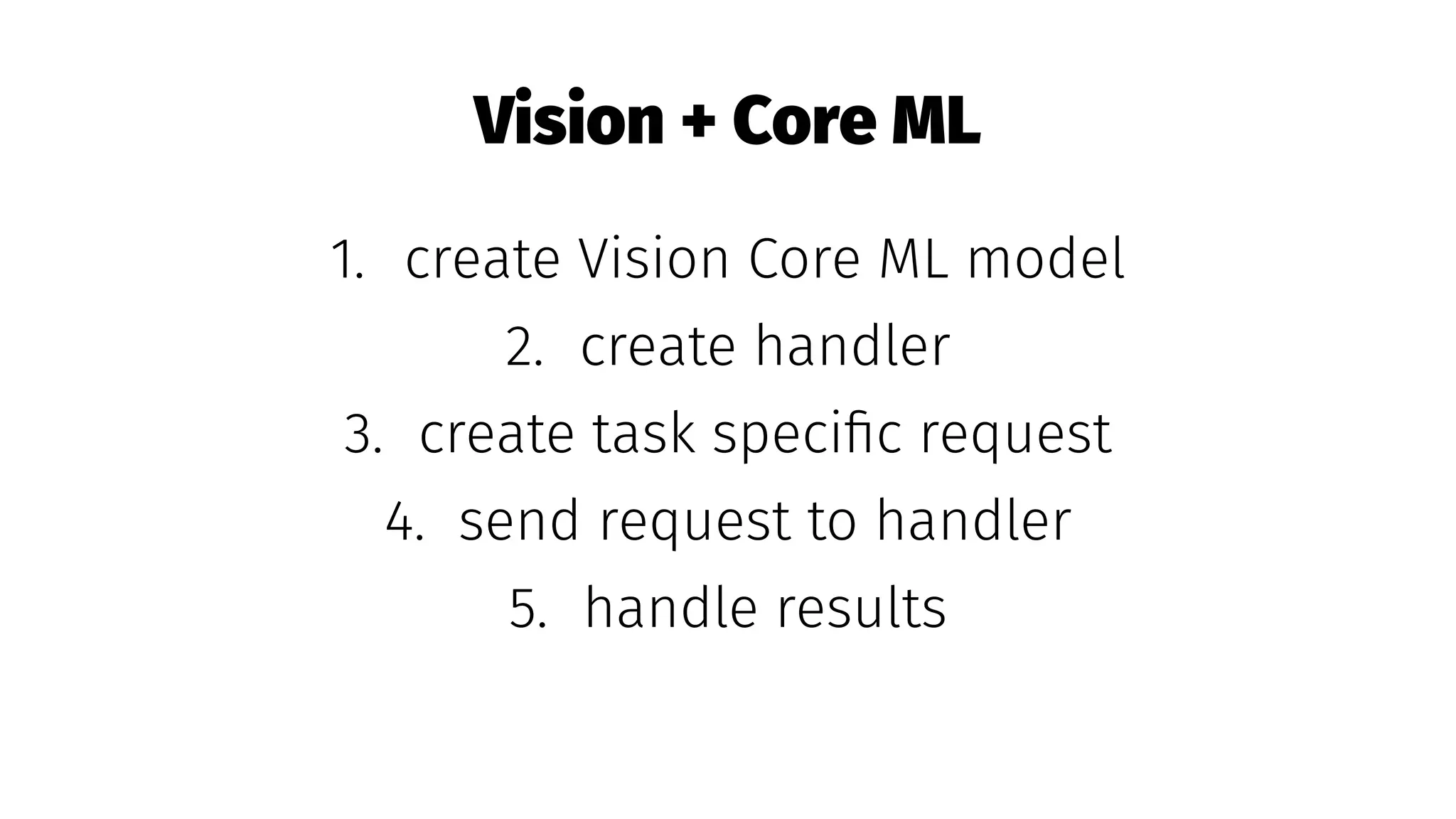

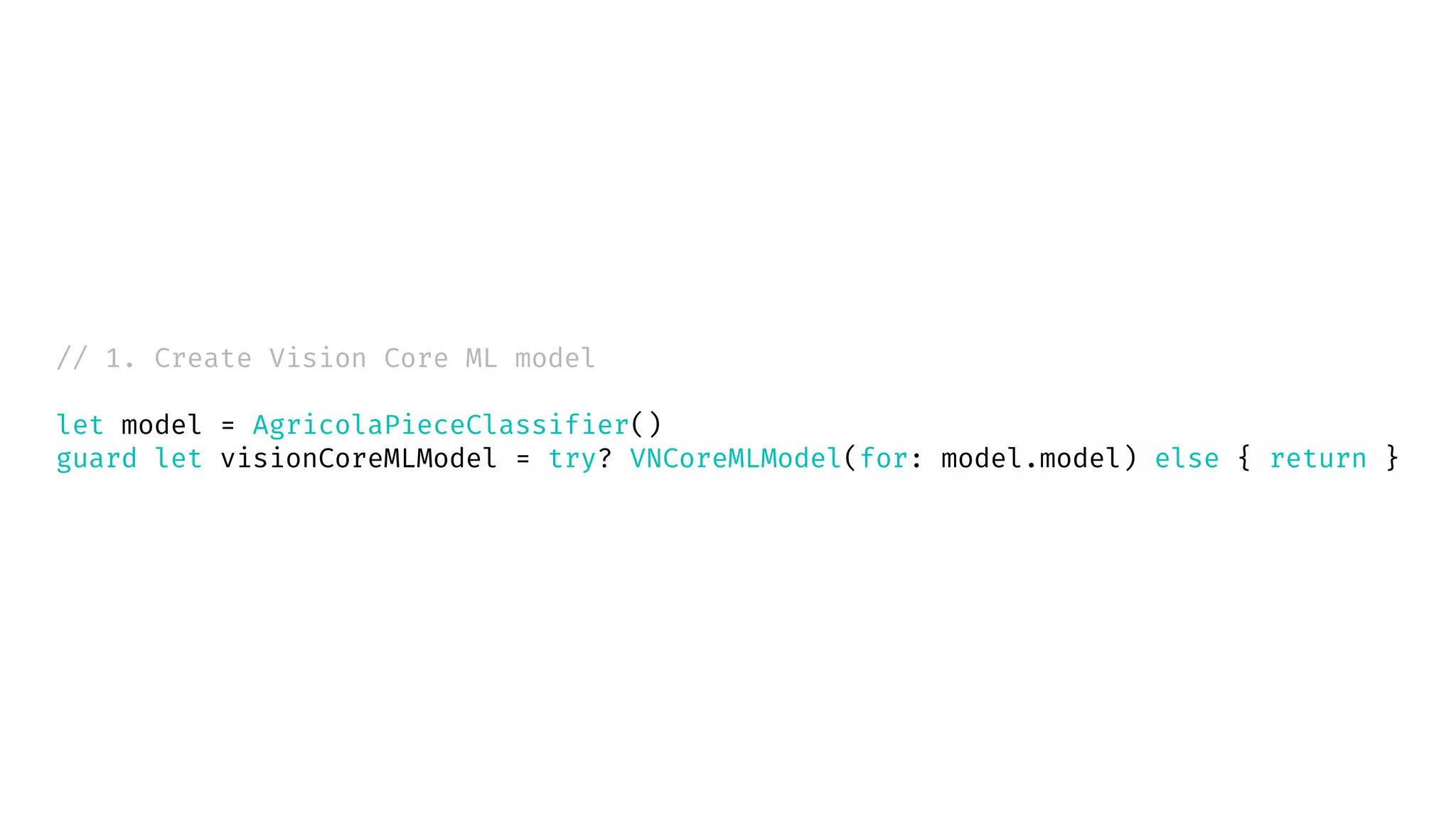

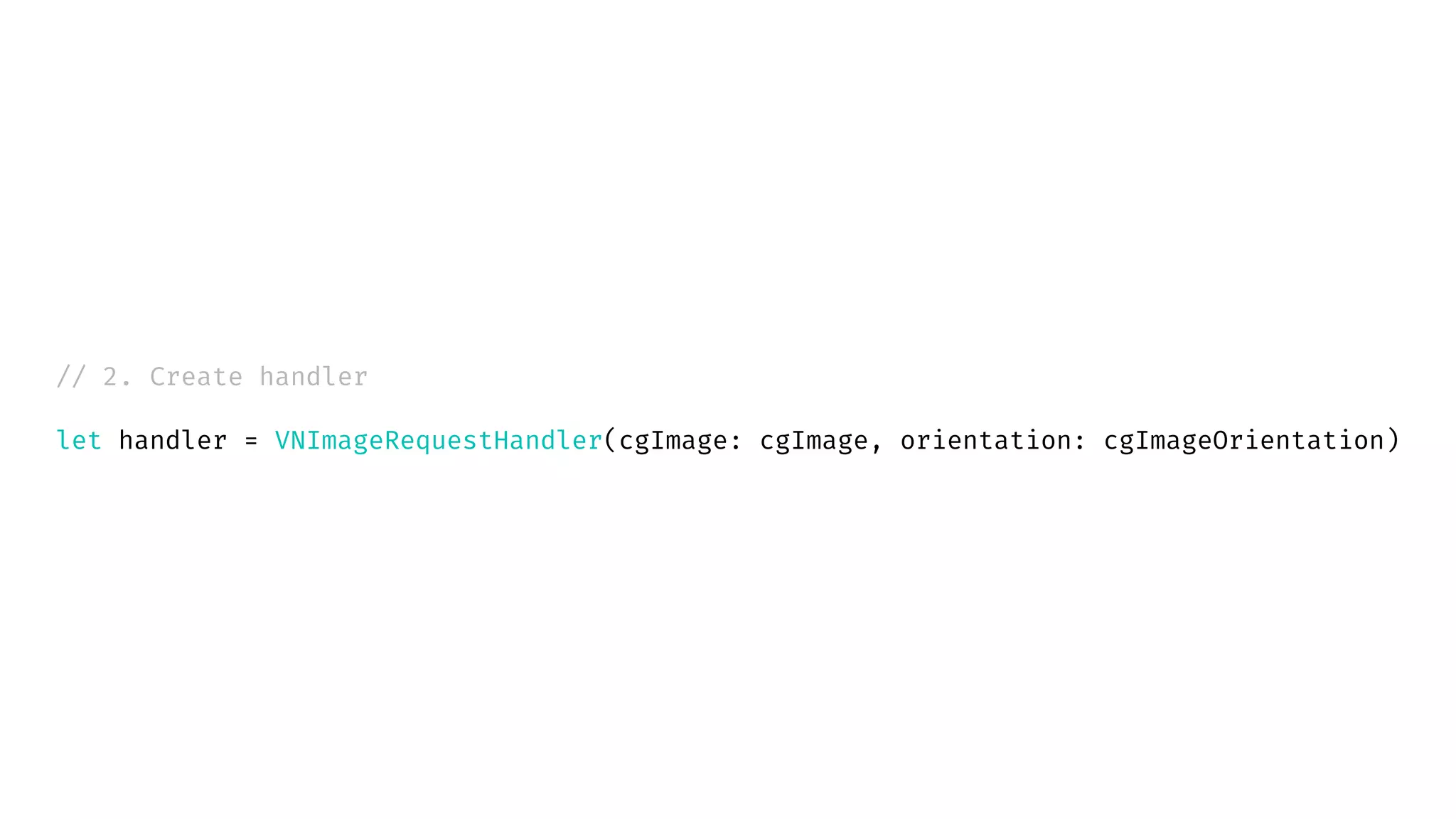

This deck surveys practical applications of machine learning (ML) on Apple platforms, emphasizing the benefits of on-device inference such as user privacy and low latency. It walks through one approach to classifying board game pieces that combines rectangle detection with image classification, and shows each stage in code as a handler, a request, and a results callback. It also touches on data collection, model training, and setting up a Python virtual environment for more advanced ML tasks such as style transfer and activity classification.

![// 1. Create handler
let handler = VNImageRequestHandler(cgImage: image,
                                    orientation: orientation,
                                    options: [:])](https://image.slidesharecdn.com/navigating-the-apple-ml-landscape-180912095158/75/Navigating-the-Apple-ML-Landscape-15-2048.jpg)
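The `orientation` argument in step 1 is a `CGImagePropertyOrientation`. The slide does not show where that value comes from. If the source image happens to be a `UIImage` (an assumption, since the deck does not say), its `imageOrientation` is a different enum with different raw values, so the cases have to be mapped one by one. A minimal sketch, assuming UIKit is available; the initializer below is an illustrative helper and not something shown in the slides:

```swift
import UIKit
import ImageIO

// Assumption: the image originates from a UIImage.
// Maps UIKit's orientation enum onto the ImageIO enum that Vision expects.
extension CGImagePropertyOrientation {
    init(_ uiOrientation: UIImage.Orientation) {
        switch uiOrientation {
        case .up: self = .up
        case .upMirrored: self = .upMirrored
        case .down: self = .down
        case .downMirrored: self = .downMirrored
        case .left: self = .left
        case .leftMirrored: self = .leftMirrored
        case .right: self = .right
        case .rightMirrored: self = .rightMirrored
        @unknown default: self = .up // fall back to "no rotation"
        }
    }
}
```

With this in place, the handler could be given `CGImagePropertyOrientation(uiImage.imageOrientation)`, where `uiImage` is a hypothetical source image.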

![// 3. Send request to handler
do {
    try handler.perform([request])
} catch let error as NSError {
    // handle error
    return
}](https://image.slidesharecdn.com/navigating-the-apple-ml-landscape-180912095158/75/Navigating-the-Apple-ML-Landscape-17-2048.jpg)

![// 4. Handle results
func handleDetectedRectangles(request: VNRequest?, error: Error?) {
    if let results = request?.results as? [VNRectangleObservation] {
        // Do something with results [*bounding box coordinates*]
    }
}](https://image.slidesharecdn.com/navigating-the-apple-ml-landscape-180912095158/75/Navigating-the-Apple-ML-Landscape-18-2048.jpg)
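The rectangle-detection steps can be pulled together roughly as follows. The slides transcribed here skip step 2, so creating a `VNDetectRectanglesRequest` is an assumption inferred from the `VNRectangleObservation` results in step 4. The function names `detectRectangles` and `pixelRect` are hypothetical. For brevity, this sketch reads `request.results` after `perform` returns instead of wiring up a completion handler. Vision reports each `boundingBox` in normalized coordinates with the origin at the lower left, so the helper flips the y axis to get a top-left-origin pixel rectangle.

```swift
import Vision
import CoreGraphics
import ImageIO

/// Converts a normalized, lower-left-origin bounding box into a
/// top-left-origin pixel rectangle. (Hypothetical helper.)
func pixelRect(forNormalized box: CGRect, imageWidth: Int, imageHeight: Int) -> CGRect {
    let width = CGFloat(imageWidth)
    let height = CGFloat(imageHeight)
    return CGRect(x: box.minX * width,
                  y: (1 - box.maxY) * height, // flip the y axis
                  width: box.width * width,
                  height: box.height * height)
}

/// Runs rectangle detection synchronously and returns the observations.
func detectRectangles(in image: CGImage,
                      orientation: CGImagePropertyOrientation = .up) throws -> [VNRectangleObservation] {
    // 1. Create handler
    let handler = VNImageRequestHandler(cgImage: image,
                                        orientation: orientation,
                                        options: [:])

    // 2. Create request (assumed; this step is not in the transcribed slides)
    let request = VNDetectRectanglesRequest()
    request.maximumObservations = 0 // 0 lifts the default limit of one rectangle

    // 3. Send request to handler
    try handler.perform([request])

    // 4. Handle results
    return request.results as? [VNRectangleObservation] ?? []
}
```

For an image with `.up` orientation, each observation's `boundingBox` could then be passed through `pixelRect` with `image.width` and `image.height`, and the result handed to `CGImage.cropping(to:)` to cut out a candidate piece for classification. For other orientations the box is relative to the rotated image, so the dimensions would need to be swapped accordingly.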

![// 4. Send request to handler
do {
    try handler.perform([request])
} catch let error as NSError {
    // handle error
    return
}](https://image.slidesharecdn.com/navigating-the-apple-ml-landscape-180912095158/75/Navigating-the-Apple-ML-Landscape-39-2048.jpg)

![// 5. Handle results
func handleClassificationResults(request: VNRequest?, error: Error?) {
    if let results = request?.results as? [VNClassificationObservation] {
        // Do something with results
    }
}](https://image.slidesharecdn.com/navigating-the-apple-ml-landscape-180912095158/75/Navigating-the-Apple-ML-Landscape-40-2048.jpg)
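The classification flow follows the same handler, request, and results pattern. The transcribed slides do not show how the request is created, so using `VNCoreMLRequest` with a Core ML model is an assumption here. The names `classify` and `bestLabel` and the 0.5 confidence threshold are hypothetical choices for illustration. One way to "do something with results" is to keep only the most confident label:

```swift
import Vision
import CoreML
import CoreGraphics

/// Returns the identifier with the highest confidence at or above the
/// threshold, or nil if nothing qualifies. (Hypothetical helper.)
func bestLabel(from candidates: [(identifier: String, confidence: Float)],
               minimumConfidence: Float = 0.5) -> String? {
    let eligible = candidates.filter { $0.confidence >= minimumConfidence }
    return eligible.max(by: { $0.confidence < $1.confidence })?.identifier
}

/// Classifies an image with a Core ML model through Vision.
/// Assumption: `model` is an image classifier, so the results are
/// VNClassificationObservation values as in the slide above.
func classify(_ image: CGImage, using model: MLModel) throws -> String? {
    // Create handler
    let handler = VNImageRequestHandler(cgImage: image, orientation: .up, options: [:])

    // Create request (assumed; not shown in the transcribed slides)
    let request = VNCoreMLRequest(model: try VNCoreMLModel(for: model))

    // Send request to handler
    try handler.perform([request])

    // Handle results
    let results = request.results as? [VNClassificationObservation] ?? []
    return bestLabel(from: results.map { (identifier: $0.identifier, confidence: $0.confidence) })
}
```

Keeping the selection logic in a plain function over `(identifier, confidence)` pairs makes it easy to check without a model or an image.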