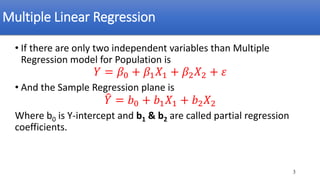

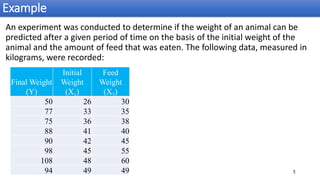

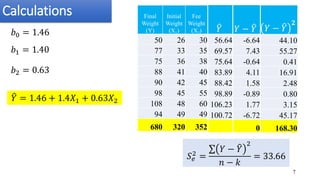

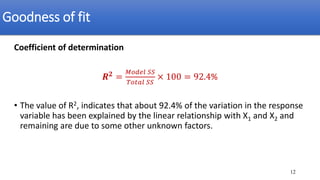

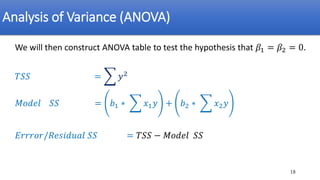

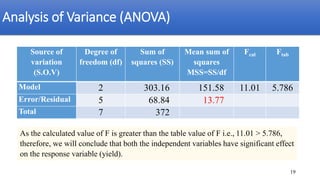

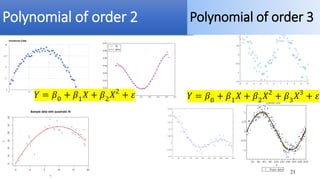

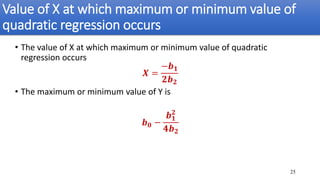

The document discusses multiple linear regression and polynomial regression, emphasizing how the response variable depends on several independent variables. It provides mathematical formulations for parameter estimation and hypothesis testing, with examples of real-world applications such as predicting crop yield from various factors. It also covers goodness of fit, analysis of variance (ANOVA), and the significance of the independent variables in the model.
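
As a rough illustration of the topics summarized above, the following is a minimal sketch of a multiple linear regression fit with an ANOVA-style sum-of-squares decomposition. It is not taken from the slides: the function name `fit_multiple_regression`, the synthetic crop-yield data, and the coefficient values used to generate it are all assumptions made for the example.

```python
import numpy as np


def fit_multiple_regression(X, y):
    """Least-squares fit of y = b0 + b1*x1 + ... + bk*xk + error.

    Hypothetical helper for illustration; returns the estimated
    coefficients together with the ANOVA sums of squares.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, k = X.shape

    # Design matrix with a leading column of ones for the intercept.
    A = np.column_stack([np.ones(n), X])

    # Least-squares estimate of the coefficient vector.
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    fitted = A @ beta

    # ANOVA decomposition: SST = SSR + SSE (holds when an intercept is fitted).
    sse = float(np.sum((y - fitted) ** 2))    # residual (error) sum of squares
    sst = float(np.sum((y - y.mean()) ** 2))  # total sum of squares
    ssr = sst - sse                           # regression sum of squares

    r2 = ssr / sst                            # coefficient of determination
    f_stat = (ssr / k) / (sse / (n - k - 1))  # overall F statistic
    return {"beta": beta, "sse": sse, "ssr": ssr, "sst": sst,
            "r2": r2, "f_stat": f_stat}


# Synthetic data (assumed, not from the document): crop yield as a
# function of rainfall and fertilizer, plus random noise.
rng = np.random.default_rng(0)
n_obs = 50
rainfall = rng.uniform(50.0, 150.0, size=n_obs)
fertilizer = rng.uniform(0.0, 10.0, size=n_obs)
crop_yield = (2.0 + 0.03 * rainfall + 0.5 * fertilizer
              + rng.normal(0.0, 0.2, size=n_obs))

result = fit_multiple_regression(
    np.column_stack([rainfall, fertilizer]), crop_yield
)
print("coefficients:", result["beta"])
print("R^2:", result["r2"])
print("F statistic:", result["f_stat"])
```

A large F statistic relative to the F distribution with k and n - k - 1 degrees of freedom suggests that at least one independent variable contributes to the model. Polynomial regression can reuse the same function by passing powers of a single variable (for example `x` and `x**2`) as the columns of `X`.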