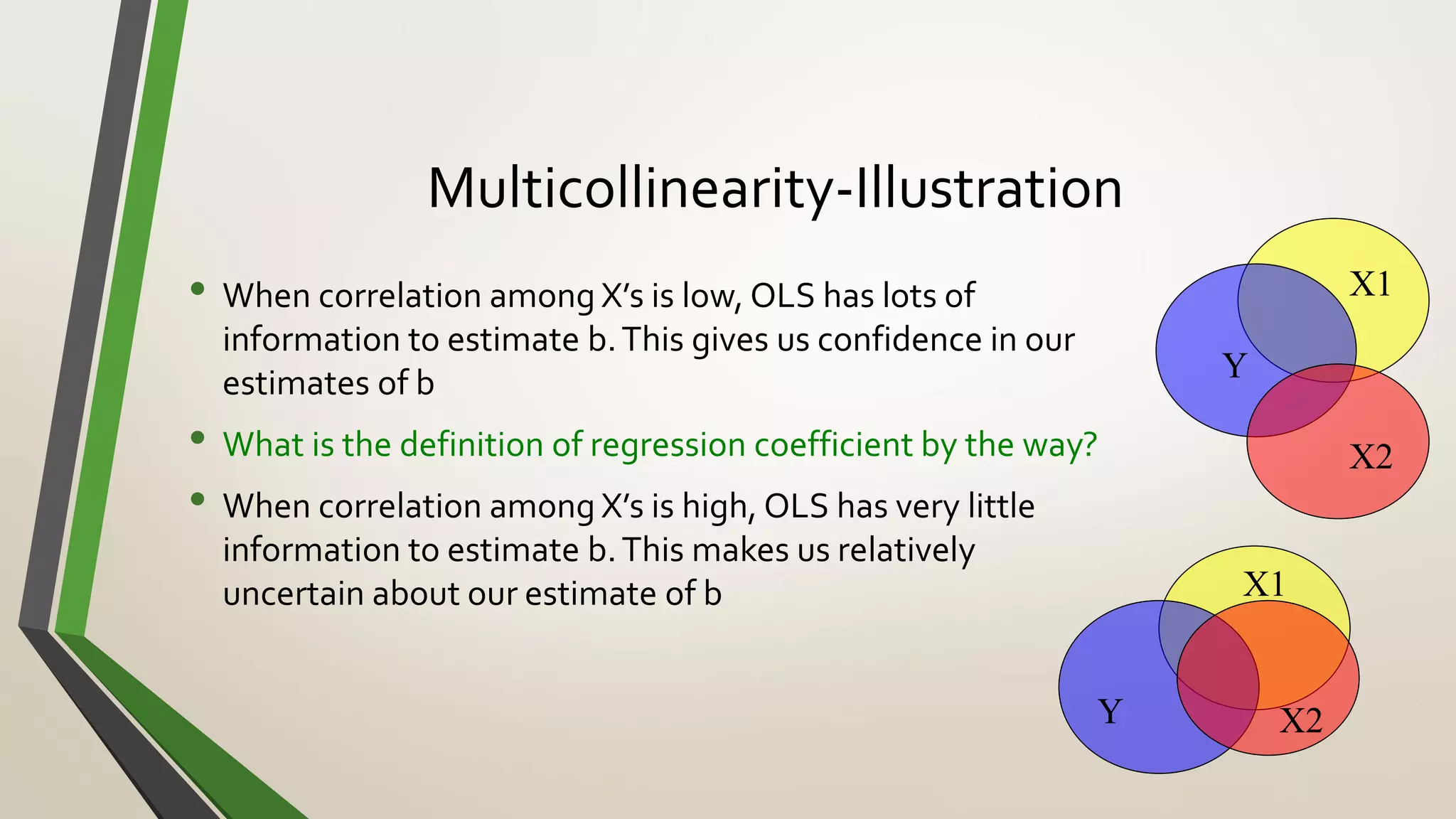

This document provides an overview of multicollinearity: its definition, causes, detection, effects, and potential remedies. Multicollinearity exists when two or more predictor variables in a regression model are linearly related (correlated) with each other. It can arise from model specification, from including too many variables, or from the data collection method. Detection methods include variance inflation factors, condition numbers, and sample correlations among the predictors. Its effects include unstable coefficient estimates and large standard errors. Potential remedies include principal components analysis, ridge regression, dropping variables, collecting additional, different data, and transforming or restricting variables.
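As a rough illustration of the three detection methods named above, the following minimal sketch computes variance inflation factors, a condition number, and sample correlations with NumPy. The helper names `variance_inflation_factors` and `condition_number`, the synthetic data, and the choice to scale columns to unit length before taking singular values are assumptions made for this example and do not come from the document.

```python
import numpy as np


def variance_inflation_factors(X):
    """Return VIF_j = 1 / (1 - R_j^2) for each column j of X.

    R_j^2 comes from regressing column j on the remaining columns
    plus an intercept. (Hypothetical helper for illustration.)
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])  # add intercept column
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        ss_res = resid @ resid
        ss_tot = ((y - y.mean()) ** 2).sum()
        r2 = 1.0 - ss_res / ss_tot
        vifs[j] = 1.0 / (1.0 - r2)
    return vifs


def condition_number(X):
    """Ratio of largest to smallest singular value of the column-scaled X.

    Scaling each column to unit length is one common convention,
    assumed here. (Hypothetical helper for illustration.)
    """
    X = np.asarray(X, dtype=float)
    Xs = X / np.linalg.norm(X, axis=0)
    s = np.linalg.svd(Xs, compute_uv=False)
    return s.max() / s.min()


# Synthetic predictors: x2 is nearly a copy of x1, x3 is unrelated.
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = x1 + 0.05 * rng.normal(size=n)
x3 = rng.normal(size=n)
X = np.column_stack([x1, x2, x3])

vifs = variance_inflation_factors(X)
kappa = condition_number(X)
corr = np.corrcoef(X, rowvar=False)  # sample correlation matrix

print("VIFs:", vifs)
print("Condition number:", kappa)
print("Correlation matrix:\n", corr)
```

In this setup the two nearly collinear predictors should show much larger VIFs than the unrelated one, along with a high pairwise correlation. Cutoffs such as "VIF above 10" are common rules of thumb rather than strict tests, so they are best read alongside the other diagnostics.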