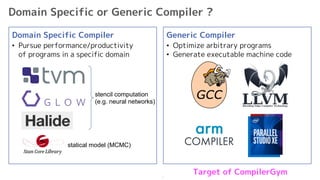

1. The document discusses using machine learning to help optimize generic compilers by learning better heuristics and parameters from large amounts of data.

2. Early work combined hand-engineered features with traditional machine learning models, with limited success: features would have to be designed for every combination of optimization decision and target, which is infeasible.

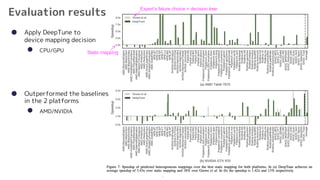

3. DeepTune used an LSTM model with embedding layers to directly learn optimizations from program code and outperformed baselines on two hardware targets.

4. CompilerGym is proposed as a framework to implement and evaluate machine learning-based compiler optimizations as reinforcement learning agents interacting with a compiler environment.

![4

Early works

● Since [Calder+ TOPLAS ’97], many academic researchers have tried ML

• Feature engineering for all of (Optimization Decisions) × (Targets) is infeasible

Step 1: Feature engineering

• case: unrolling factors

Still unacceptable engineering cost ...

Step 2: Predict the best decision by traditional ML models

• e.g. decision tree](https://image.slidesharecdn.com/mlalsohelpsgenericcompiler-210223082532/85/Ml-also-helps-generic-compiler-4-320.jpg)
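The two steps on this slide can be sketched in a few lines. This is a minimal illustration, not the method of any cited paper: the two loop features (`trip_count`, `body_instructions`), the training rows, and the "best" unroll factors are all invented for the example, and the tree learner is a small hand-written Gini-based one rather than a library model.

```python
from collections import Counter

# Step 1 (feature engineering): hypothetical hand-picked loop features,
# (trip_count, body_instructions), paired with the unroll factor assumed
# to be best for that loop. Every value here is invented for illustration.
LOOPS = [
    ((4, 6), 4),
    ((8, 5), 4),
    ((6, 40), 1),
    ((128, 8), 8),
    ((256, 10), 8),
    ((200, 60), 1),
    ((16, 50), 1),
    ((512, 6), 8),
]


def gini(labels):
    """Gini impurity of a list of class labels."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_split(rows):
    """Return the (feature_index, threshold) that lowers impurity most, or None."""
    best, best_score = None, gini([y for _, y in rows])
    for f in range(len(rows[0][0])):
        for threshold in sorted({x[f] for x, _ in rows}):
            left = [y for x, y in rows if x[f] <= threshold]
            right = [y for x, y in rows if x[f] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if score < best_score:
                best, best_score = (f, threshold), score
    return best


def build_tree(rows, depth=0, max_depth=3):
    """Step 2: fit a small decision tree; leaves hold the majority unroll factor."""
    split = best_split(rows) if depth < max_depth else None
    if split is None:
        return Counter(y for _, y in rows).most_common(1)[0][0]
    f, threshold = split
    left = [r for r in rows if r[0][f] <= threshold]
    right = [r for r in rows if r[0][f] > threshold]
    return (f, threshold,
            build_tree(left, depth + 1, max_depth),
            build_tree(right, depth + 1, max_depth))


def predict(tree, features):
    """Walk from the root to a leaf and return the predicted unroll factor."""
    while isinstance(tree, tuple):
        f, threshold, left, right = tree
        tree = left if features[f] <= threshold else right
    return tree


TREE = build_tree(LOOPS)
print(predict(TREE, (300, 7)))
```

The engineering cost the slide complains about sits in `LOOPS`: someone has to decide which features matter and measure the best factor per loop, and the whole exercise repeats for every other optimization decision and every other target.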

![5

DeepTune [Cummins+ PACT ’17 Best Paper!!]

● Use LSTM + Embedding layers for feature extraction

• Feed (almost) raw OpenCL code

• Handle the encoded sequence like NLP

• Make an optimization decision with an affine (fully connected) layer

• Train models for each target

Chris Cummins-san

(Facebook Researcher)](https://image.slidesharecdn.com/mlalsohelpsgenericcompiler-210223082532/85/Ml-also-helps-generic-compiler-5-320.jpg)
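The data flow on this slide (encoded source, then embedding, then LSTM, then an affine layer) can be traced with a small forward pass. This is only a sketch of the shape of the pipeline, not DeepTune's implementation: the character-level vocabulary, the layer sizes, the two-way decision, and the sample kernel are assumptions made for the example, and the weights are random and untrained, so the decision it prints carries no meaning.

```python
import math
import random
import string

random.seed(0)

# Illustrative sizes only; CLASSES could stand for a two-way choice such as CPU vs GPU.
EMBED, HIDDEN, CLASSES = 4, 5, 2

# Assumed encoding: one integer id per printable ASCII character.
VOCAB = {ch: i for i, ch in enumerate(string.printable)}


def rand_matrix(rows, cols):
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def encode(source):
    """Turn source text into a sequence of integer ids, as in NLP pipelines."""
    return [VOCAB[ch] for ch in source if ch in VOCAB]


def lstm_step(x, h, c, weights):
    """One LSTM step; each gate matrix is applied to [x; h] (biases omitted)."""
    xh = x + h
    i = [sigmoid(a) for a in matvec(weights["input"], xh)]
    f = [sigmoid(a) for a in matvec(weights["forget"], xh)]
    o = [sigmoid(a) for a in matvec(weights["output"], xh)]
    g = [math.tanh(a) for a in matvec(weights["cell"], xh)]
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def forward(source, embedding, weights, affine):
    """Embed each token, run the LSTM over the sequence, then apply the affine layer."""
    h, c = [0.0] * HIDDEN, [0.0] * HIDDEN
    for token in encode(source):
        h, c = lstm_step(embedding[token], h, c, weights)
    logits = matvec(affine, h)
    return logits.index(max(logits)), logits


EMBEDDING = rand_matrix(len(VOCAB), EMBED)
WEIGHTS = {gate: rand_matrix(HIDDEN, EMBED + HIDDEN)
           for gate in ("input", "forget", "output", "cell")}
AFFINE = rand_matrix(CLASSES, HIDDEN)

KERNEL = "__kernel void add(__global float* a) { a[get_global_id(0)] += 1.0f; }"
decision, logits = forward(KERNEL, EMBEDDING, WEIGHTS, AFFINE)
print(decision, logits)
```

Nothing in `forward` is specific to one optimization or one device, which is the point of the slide: the hand-written features disappear, and supporting another target means training another set of weights on that target's measurements.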

![7

After all, what is CompilerGym?

● Background

• Compiler researchers see compiler optimization tasks as environments for RL [Leather+ FDL’20]

● CompilerGym

• Uses the OpenAI Gym interface to expose the “agent-environment loop”

• Lets users implement their own optimizers as RL agents

• Provides feature extractors and benchmark datasets

• Exposes compiler APIs

state: IR

action:

• IR transformation

• context change

reward:

• speedup

• code size reduction

DeepTune is

just this module](https://image.slidesharecdn.com/mlalsohelpsgenericcompiler-210223082532/85/Ml-also-helps-generic-compiler-7-320.jpg)
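The agent-environment loop on this slide can be sketched with a toy environment. `ToyCompilerEnv` below is a hypothetical stand-in and not the CompilerGym API: it only mirrors the classic Gym shape, where `reset()` returns an observation and `step(action)` returns `(observation, reward, done, info)`. The "IR" is a list of strings, the two "passes" are invented, and the reward is the reduction in instruction count.

```python
class ToyCompilerEnv:
    """Hypothetical stand-in for a compiler environment (not the CompilerGym API)."""

    # Invented toy passes; real compiler passes are far richer.
    ACTIONS = ("remove_nops", "dedupe_adjacent_loads")

    def __init__(self, program):
        self._program = list(program)
        self.ir = []

    @staticmethod
    def _apply(ir, name):
        """Return the IR after applying one toy pass (an IR transformation)."""
        if name == "remove_nops":
            return [ins for ins in ir if ins != "nop"]
        # Drop a load that immediately repeats the previous instruction.
        return [ins for k, ins in enumerate(ir)
                if k == 0 or not (ins.startswith("load") and ins == ir[k - 1])]

    def reset(self):
        """Start a new episode and return the initial state (the IR)."""
        self.ir = list(self._program)
        return tuple(self.ir)

    def step(self, action):
        """Apply the chosen pass; the reward is the code-size reduction."""
        before = len(self.ir)
        self.ir = self._apply(self.ir, self.ACTIONS[action])
        reward = before - len(self.ir)
        # The episode ends once no available pass can shrink the IR further.
        done = all(len(self._apply(self.ir, n)) == len(self.ir) for n in self.ACTIONS)
        return tuple(self.ir), reward, done, {}


def simple_policy(observation):
    """A hand-written agent: remove nops while any remain, then dedupe loads."""
    return 0 if "nop" in observation else 1


def run_episode(env, policy, max_steps=10):
    """The agent-environment loop: observe, act, collect reward, repeat."""
    observation = env.reset()
    total_reward = 0
    for _ in range(max_steps):
        observation, reward, done, _info = env.step(policy(observation))
        total_reward += reward
        if done:
            break
    return total_reward, observation


PROGRAM = ["load a", "load a", "nop", "add", "nop", "store b"]
total, final_ir = run_episode(ToyCompilerEnv(PROGRAM), simple_policy)
print(total, final_ir)
```

In this framing a learned model such as DeepTune would take the place of `simple_policy`: it maps the observed state to a decision, while the environment owns the benchmarks, the transformations, and the reward measurement.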