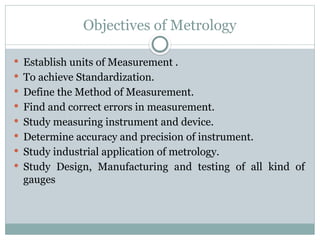

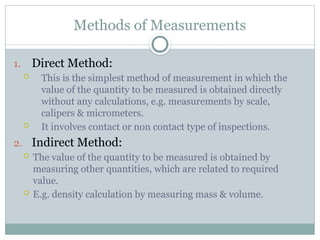

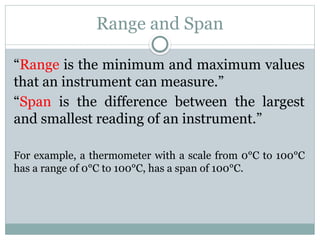

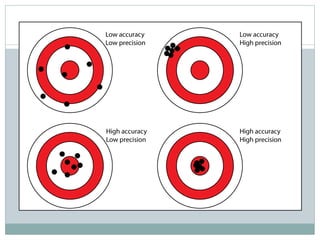

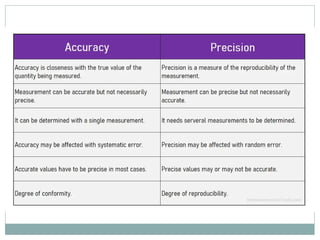

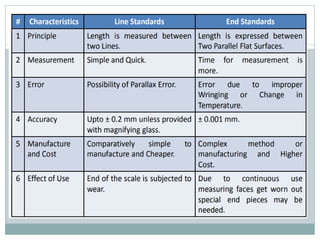

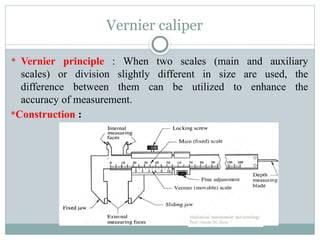

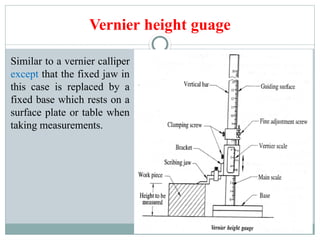

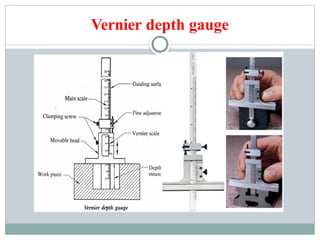

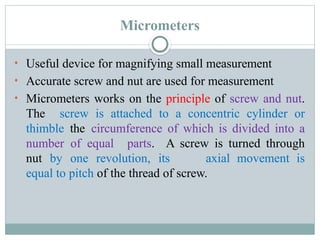

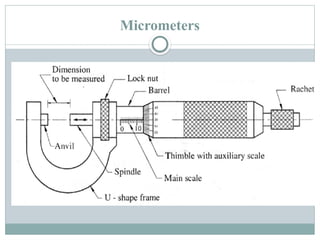

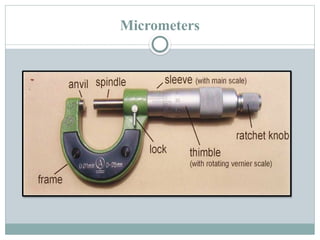

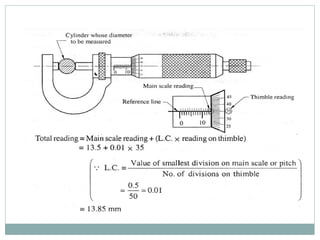

The document provides an overview of metrology, emphasizing its role in standardizing measurement and in achieving accuracy and precision. It covers the scientific, industrial, and legal types of metrology, as well as measurement methods and the characteristics of measuring instruments. It also discusses inspection, calibration, types of error, and the factors to consider when selecting a measuring instrument.