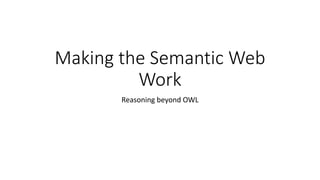

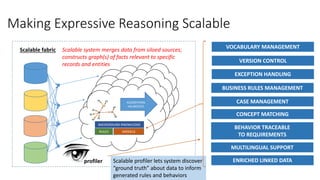

The document discusses the challenges and advancements in making the Semantic Web more practical, particularly focusing on the semantic issues in machine-to-machine communication and the importance of structured processes linking specifications to implementations. It critiques current RDF standards and highlights the need for scalable solutions such as RDF* and SPARQL*, emphasizing the evolution towards more effective data integration methods while maintaining human-readable formats. Additionally, it addresses the limitations of traditional semantic frameworks like OWL in data processing and inference capabilities.

![Predicate Calculus

RDF is a special case of the “predicate calculus”

Statement of arity 2

Predicate Calculus:

A(:Dog,:Fido)

RDF:

:Fido A :Dog .

Statement of arity 3

Predicate Calculus:

:Population(:Nigeria,2013,173.6e6)

RDF:

[

a :Population .

:where :Nigeria .

:when 2013 .

:amount 173.6e6

]

It’s not too hard to write this in

Turtle

This implementation, however,

is structurally unstable, since

we went from one triple to four

triples](https://image.slidesharecdn.com/makingthesemanticwebwork-151013183540-lva1-app6892/85/Making-the-semantic-web-work-11-320.jpg)

![The Old RDF: Expressive but not scalable

Early RDF:

RDF/XML serialization, heavy use of blank nodes, extreme

expressiveness:

[ a sp:Select ;

sp:resultVariables (_:b2) ;

sp:where ([ sp:object rdfs:Class ;

sp:predicate rdf:type ;

sp:subject _:b1

] [ a sp:SubQuery ;

sp:query

[ a sp:Select ;

sp:resultVariables (_:b2) ;

sp:where ([ sp:object _:b2 ;

sp:predicate rdfs:label ;

sp:subject _:b1

])

]

])

]

This is a representation of a SPARQL

query in RDF!

This example uses Turtle, where

square brackets create blank nodes

and parenthesis create lists.

With this graph in the JENA

framework you can easily manipulate

this as an abstract syntax tree.

Very complex relationships, such as

mathematical equations can be built

this way; blank nodes can be used to

write high-arity predicates.

Accessing it through SPARQL would

not be so easy!](https://image.slidesharecdn.com/makingthesemanticwebwork-151013183540-lva1-app6892/85/Making-the-semantic-web-work-14-320.jpg)

![Linked Data: New Focus

Linked data source

Blank nodes are discouraged because it’s hard for

a distributed community to talk about something

without a name.

[ a sp:Select ;

sp:resultVariables (_:b2) ;

sp:where ([ sp:object rdfs:Class ;

sp:predicate rdf:type ;

sp:subject _:b1

] [ a sp:SubQuery ;

sp:query

[ a sp:Select ;

sp:resultVariables (_:b2) ;

sp:where ([ sp:object _:b2 ;

sp:predicate rdfs:label ;

sp:subject _:b1

])

]

])

]

Turtle and RDF/XML (which

have sweet syntax for blank

nodes) are not scalable

because the parser cannot be

restarted after a failure: if

you have billions of triples, a

few will be bad

<http://example.org/show/218> <http://www.w3.org/2000/01/rdf-schema#label> "That Seventies Show"^^<http://www.w3.org/2001/XMLSchema#string> .

<http://example.org/show/218> <http://www.w3.org/2000/01/rdf-schema#label> "That Seventies Show" .

<http://example.org/show/218> <http://example.org/show/localName> "That Seventies Show"@en .

<http://example.org/show/218> <http://example.org/show/localName> "Cette Série des Années Septante"@fr-be .

<http://example.org/#spiderman> <http://example.org/text> "This is a multi-linenliteral with many quotes (""""")nand two apostrophes ('')." . <http://en.wikipedia.org/wiki/Helium>

<http://example.org/elements/atomicNumber> "2"^^<http://www.w3.org/2001/XMLSchema#integer> . <http://en.wikipedia.org/wiki/Helium> <http://example.org/elements/specificGravity> "1.663E-

4"^^<http://www.w3.org/2001/XMLSchema#double> .

N-Triples is practical for large databases such as Freebase and Dbpedia because records are

isolated, but blank nodes must be named, triple-centric modelling is encouraged

We now have a great query language, SPARQL. SPARQL supports the

same shorthand for blank nodes as Turtle. Some blank node patterns

work naturally, but it is particularly hard to ask questions about

ordered collections.

Blank nodes, collections, etc. are out of fashion.](https://image.slidesharecdn.com/makingthesemanticwebwork-151013183540-lva1-app6892/85/Making-the-semantic-web-work-15-320.jpg)

![Old Approaches to Reificiation

Reification with Blank Nodes

[

rdf:type rdf:Statement .

rdf:subject :Tolkien .

rdf:predicate :wrote .

rdf:object :LordOfTheRings .

:said :Wikipedia .

]

http://stackoverflow.com/questions/1312741/simple-example-of-reification-in-rdf

This isn’t too hard to write in Turtle, but it breaks

SPARQL queries and inference for reified triples.

The number of triples is at the very least tripled; the

triple store is unlikely to be able to optimize for

common use cases.](https://image.slidesharecdn.com/makingthesemanticwebwork-151013183540-lva1-app6892/85/Making-the-semantic-web-work-17-320.jpg)

![What about SPIN?

SPIN is similar in expressiveness to production rules.

ex:Person

a rdfs:Class ;

rdfs:label "Person"^^xsd:string ;

rdfs:subClassOf owl:Thing ;

spin:rule

[ a sp:Construct ;

sp:text """

CONSTRUCT {

?this ex:grandParent ?grandParent .

}

WHERE {

?parent ex:child ?this .

?grandParent ex:child ?parent .

}"""

] .

This is like a production rule written in

reverse, we infer triples from the

CONSTRUCT clause based on matching

the WHERE clause.

TopBraid Composer implements most

inference through primitive forward

chaining (a fixed point algorithm, RETE

cannot be used because the order of

rule firing is unpredictable.)

Backwards chaining can be

accomplished through the definition of

“magic properties” (something similar

can be done with Drools too)

SPIN has support for query templates, in some ways like Decision

Tables but possibly more palatable for coders and for semantic apps

Control of execution order, negation, and non-monotonic

reasoning are not settled. Less is know about how to implement it](https://image.slidesharecdn.com/makingthesemanticwebwork-151013183540-lva1-app6892/85/Making-the-semantic-web-work-42-320.jpg)

![Yet, some RDF syntaxes look almost the same

as JSON/XML

JSON

{

missions: [ “Mercury”, “Gemini”, “Apollo” ]

}

TURTLE

:Missions :members (:Mercury,:Gemini,:Apollo) .

Most RDF tools will expand this into a LISP-list

with blank nodes, but in TURTLE format the

physical layout is the same as JSON.

Collections and Containers are described as “non-

normative” in RDF 1.1; advanced tools may use

special efficient representations (like would be used for

JSON).

It’s awkward to work with ordered collections in the

common “client-server” model that revolves around

SPARQL engines, but for small graphs in memory, the

situation is different – the Jena framework provides a

facility for accessing Collections that feels a lot like

accessing data in JSON

Ordered collections are critical for dealing with external data

that supports external collections AND critical for many

traditional RDF use cases such as metadata (you’ll find

scientists are pretty sensitive to the order of authors for a

paper)](https://image.slidesharecdn.com/makingthesemanticwebwork-151013183540-lva1-app6892/85/Making-the-semantic-web-work-51-320.jpg)