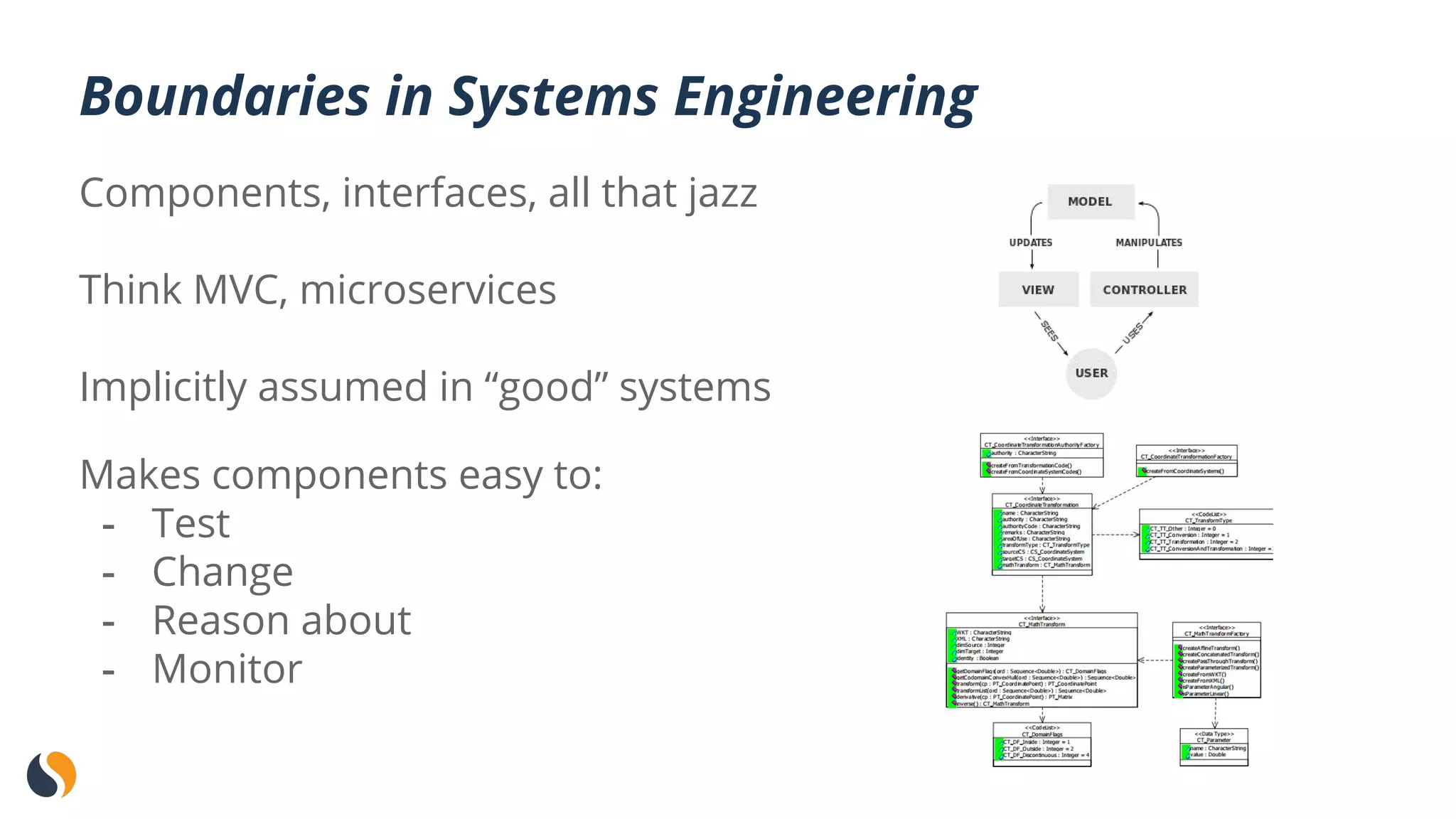

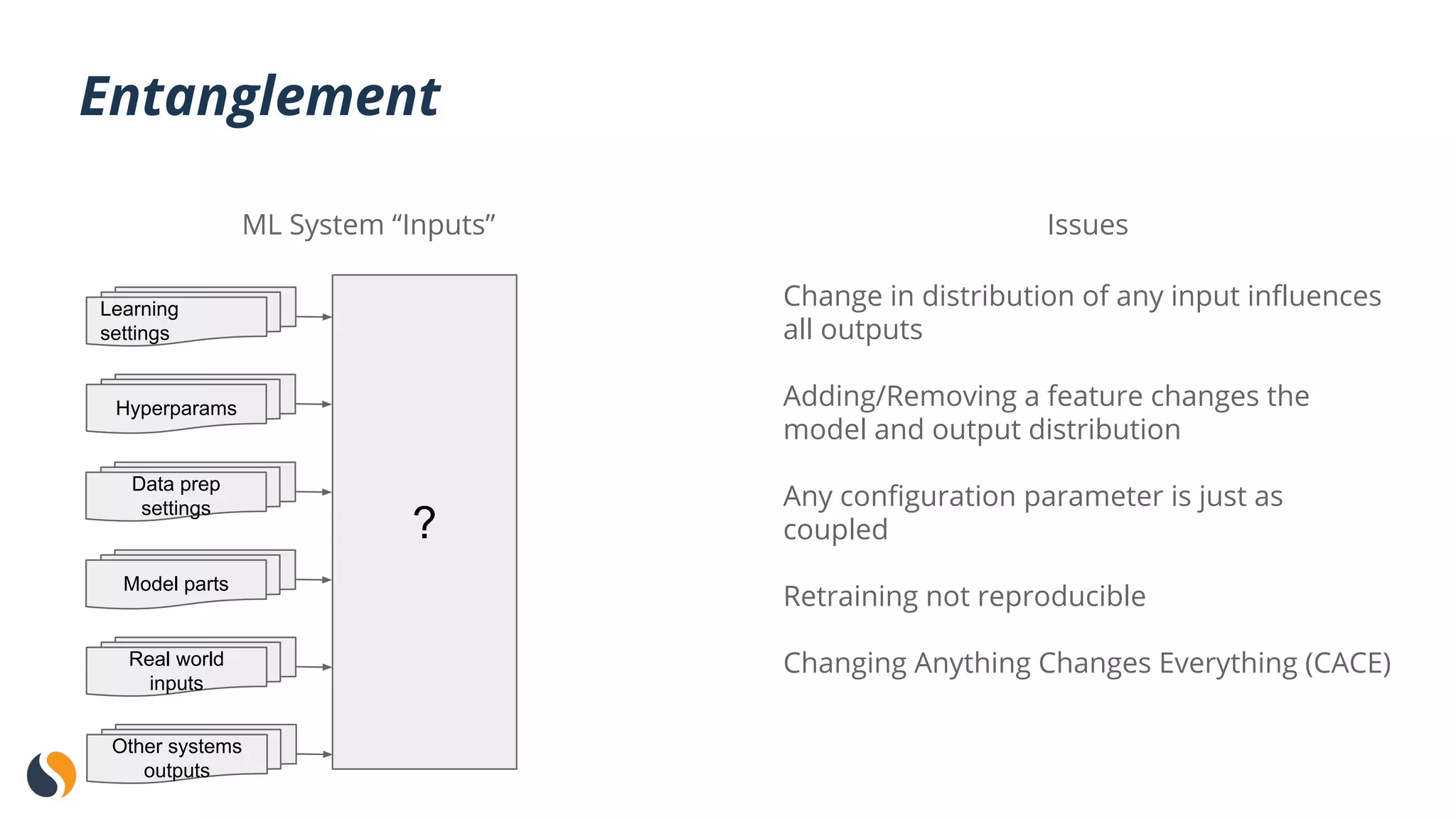

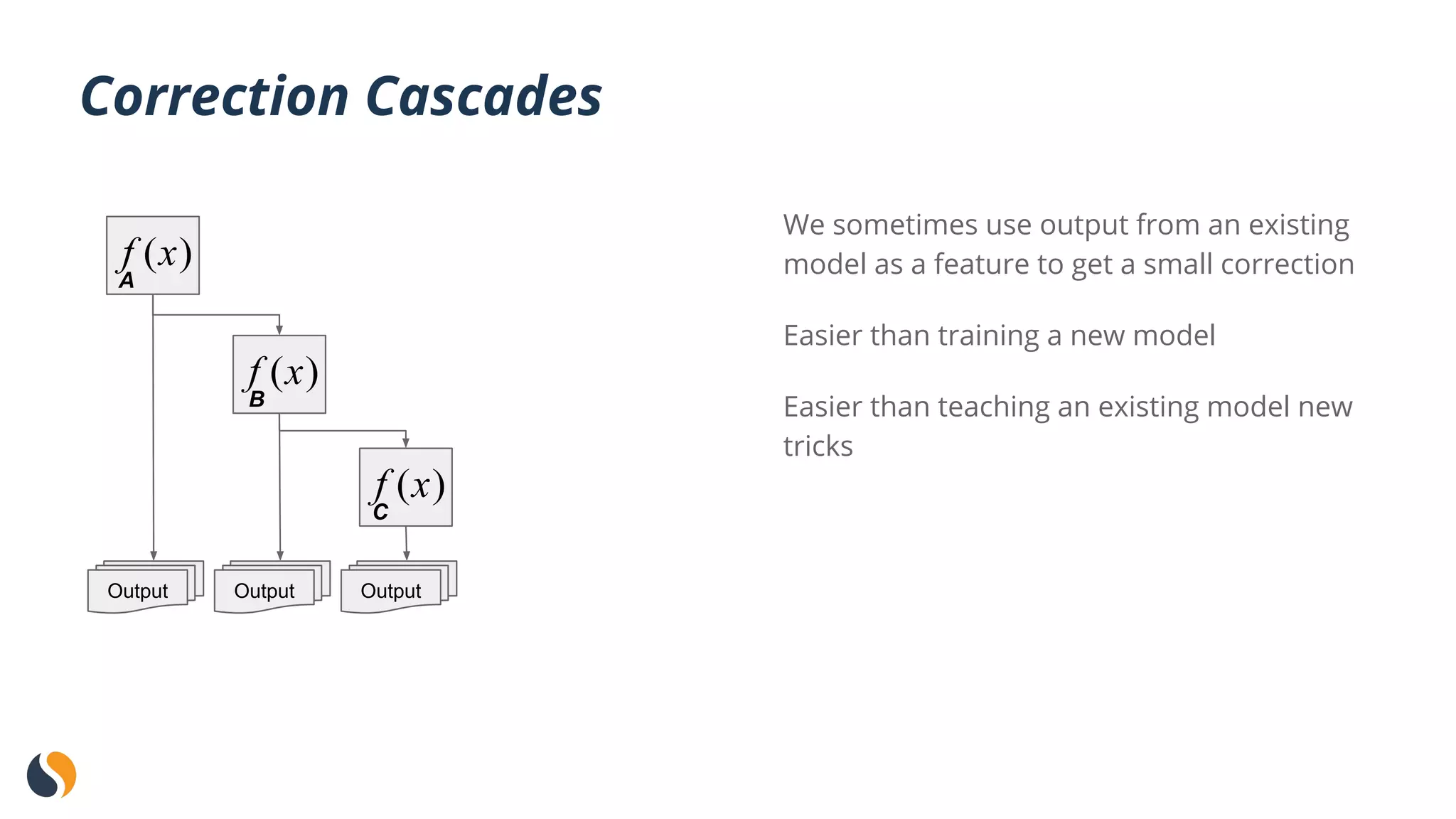

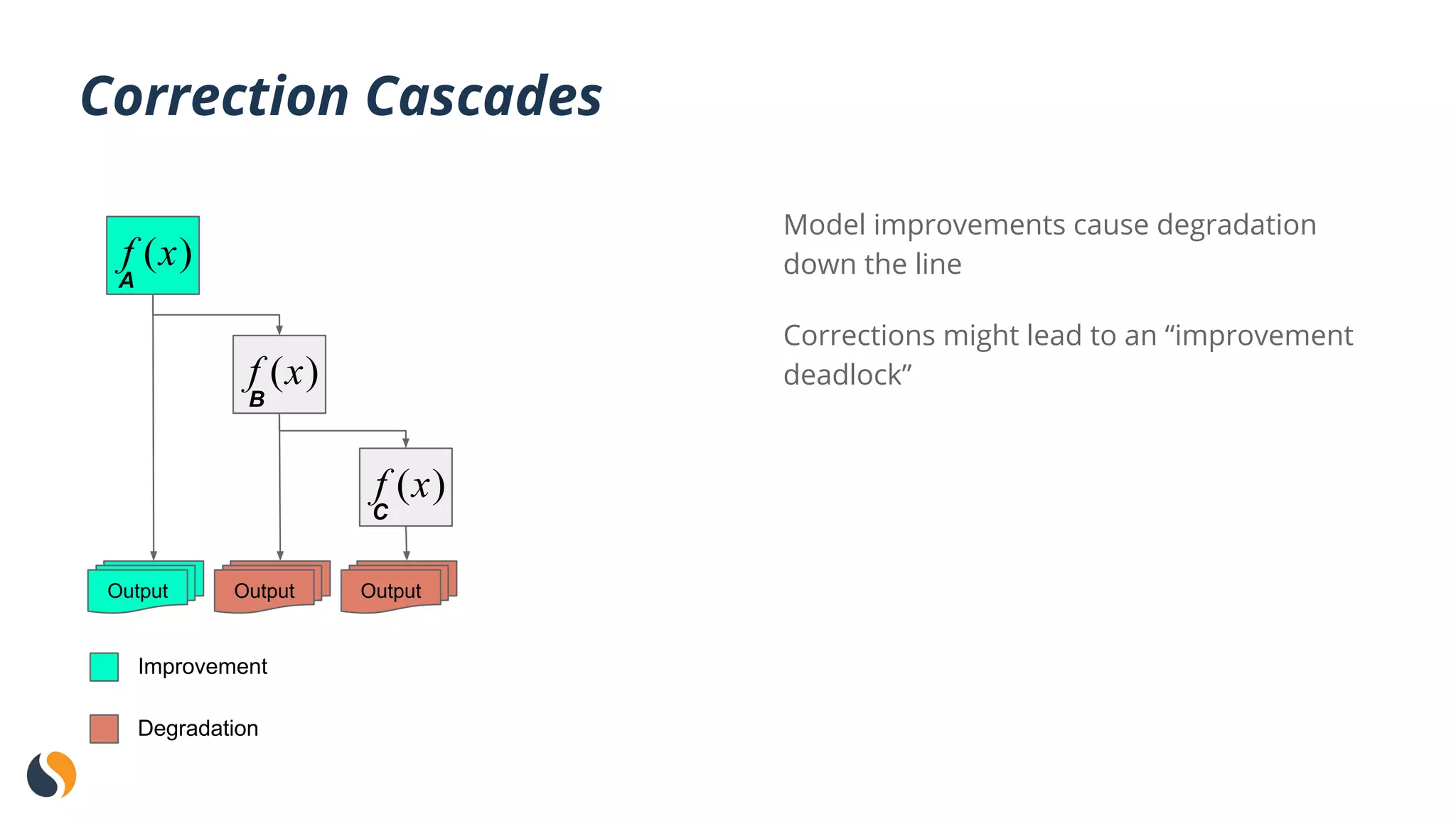

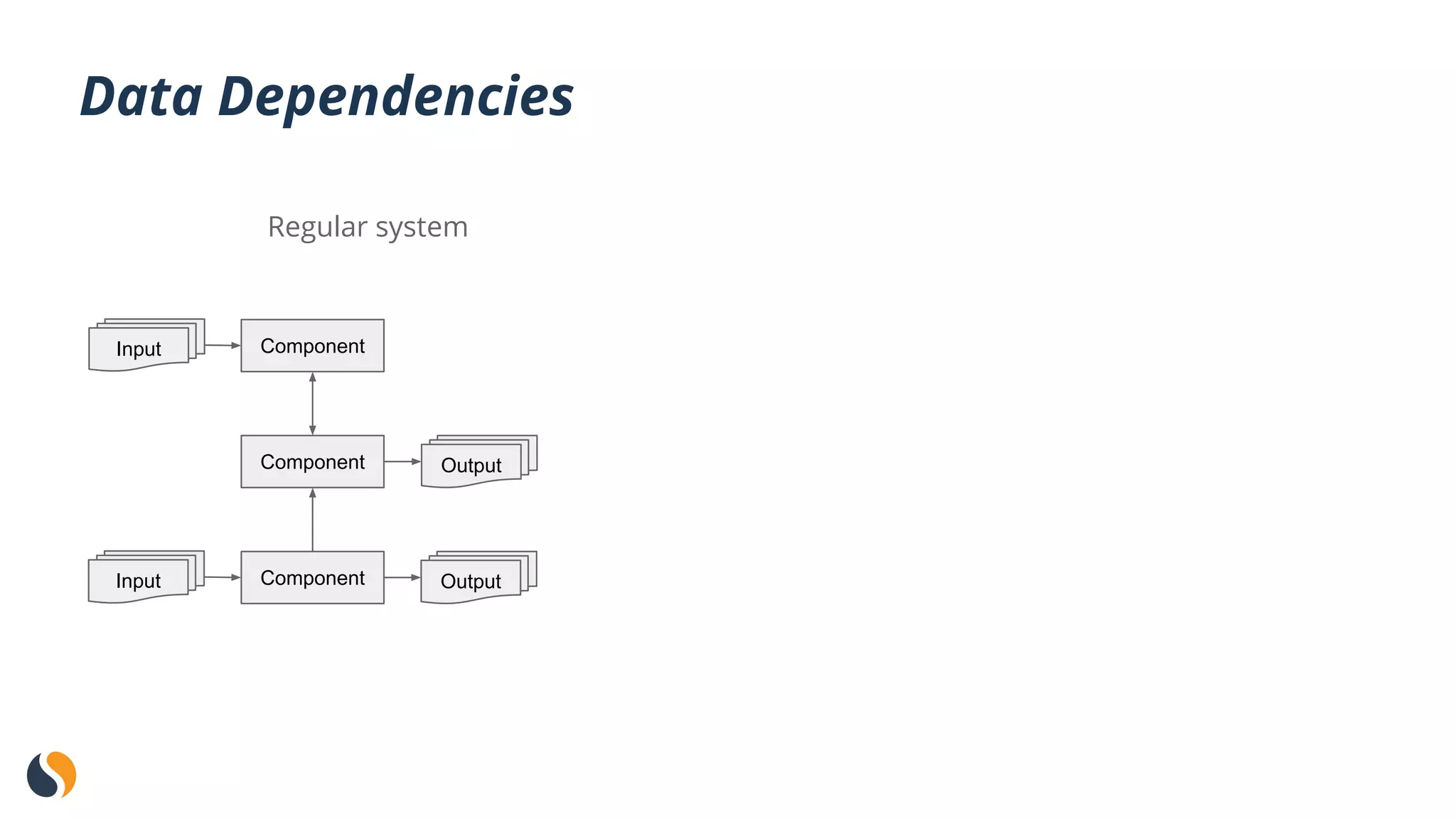

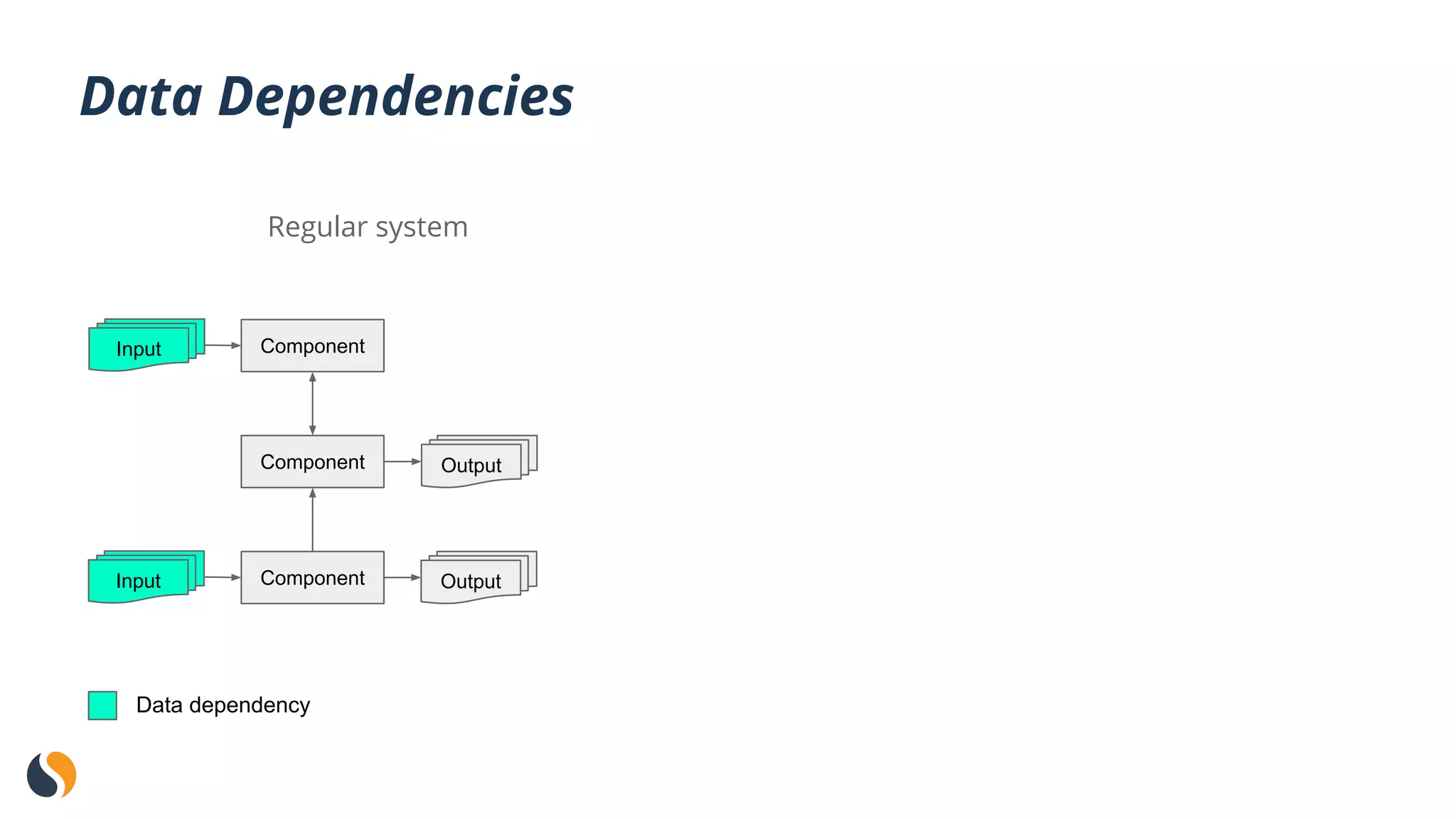

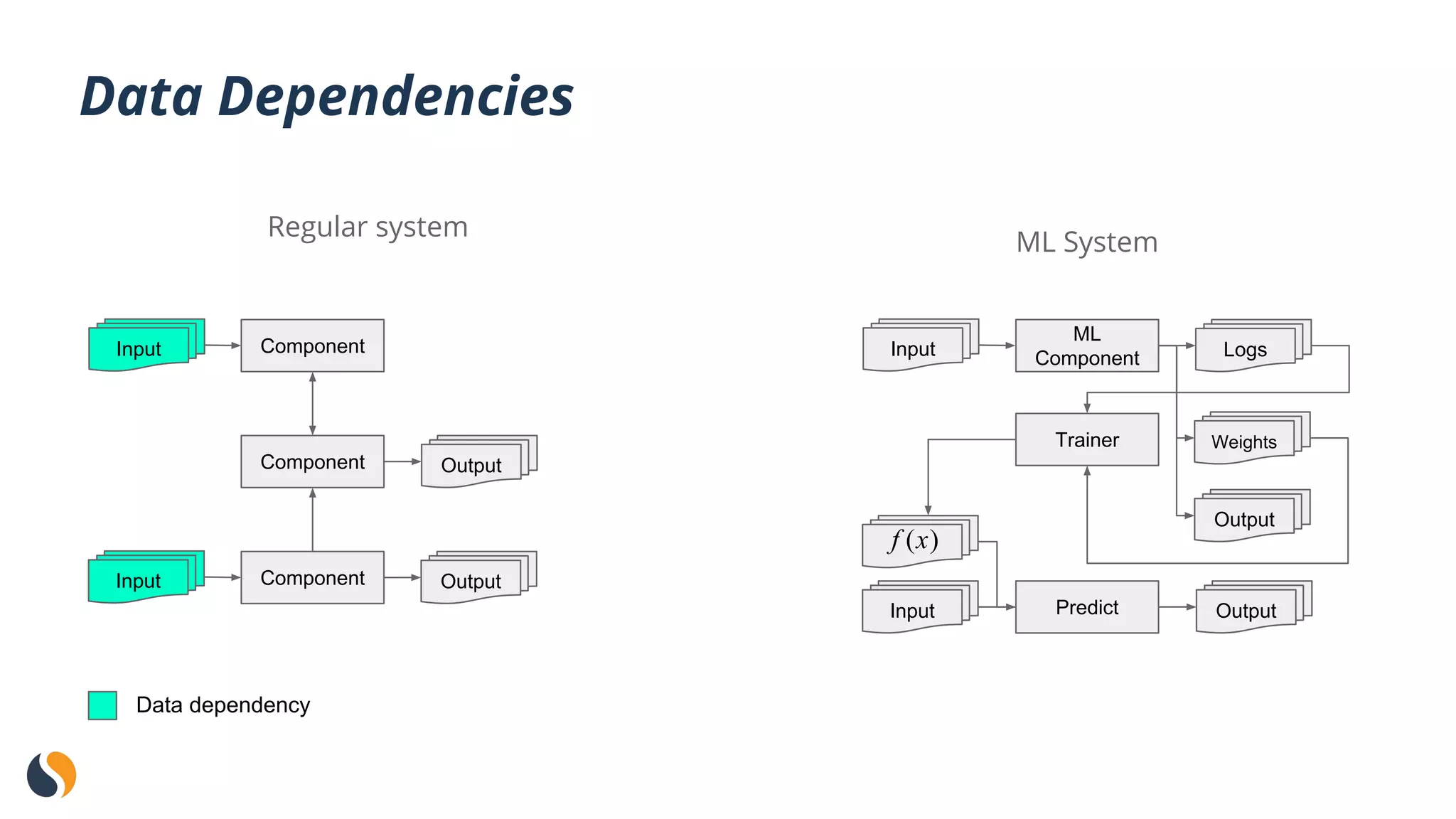

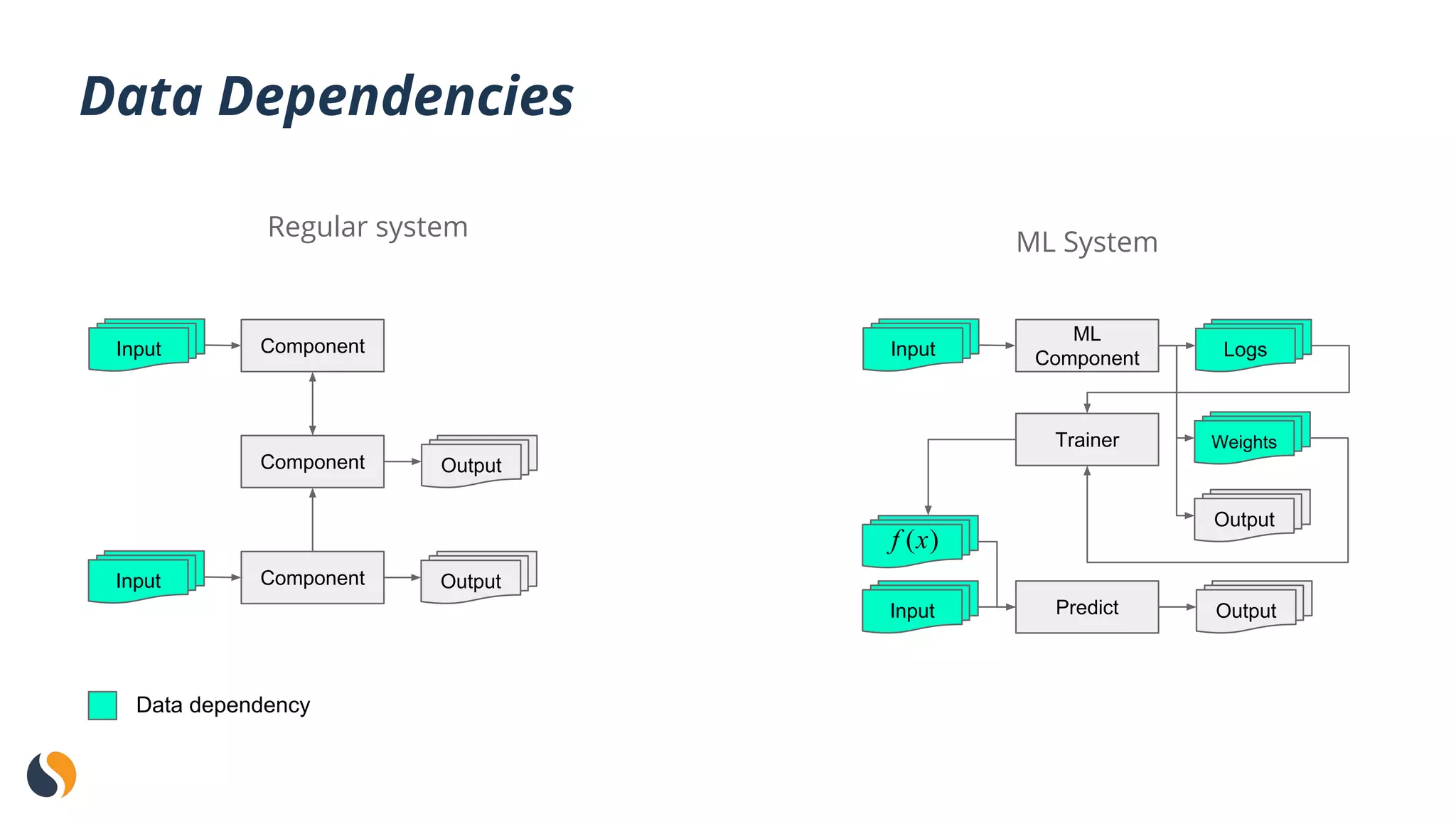

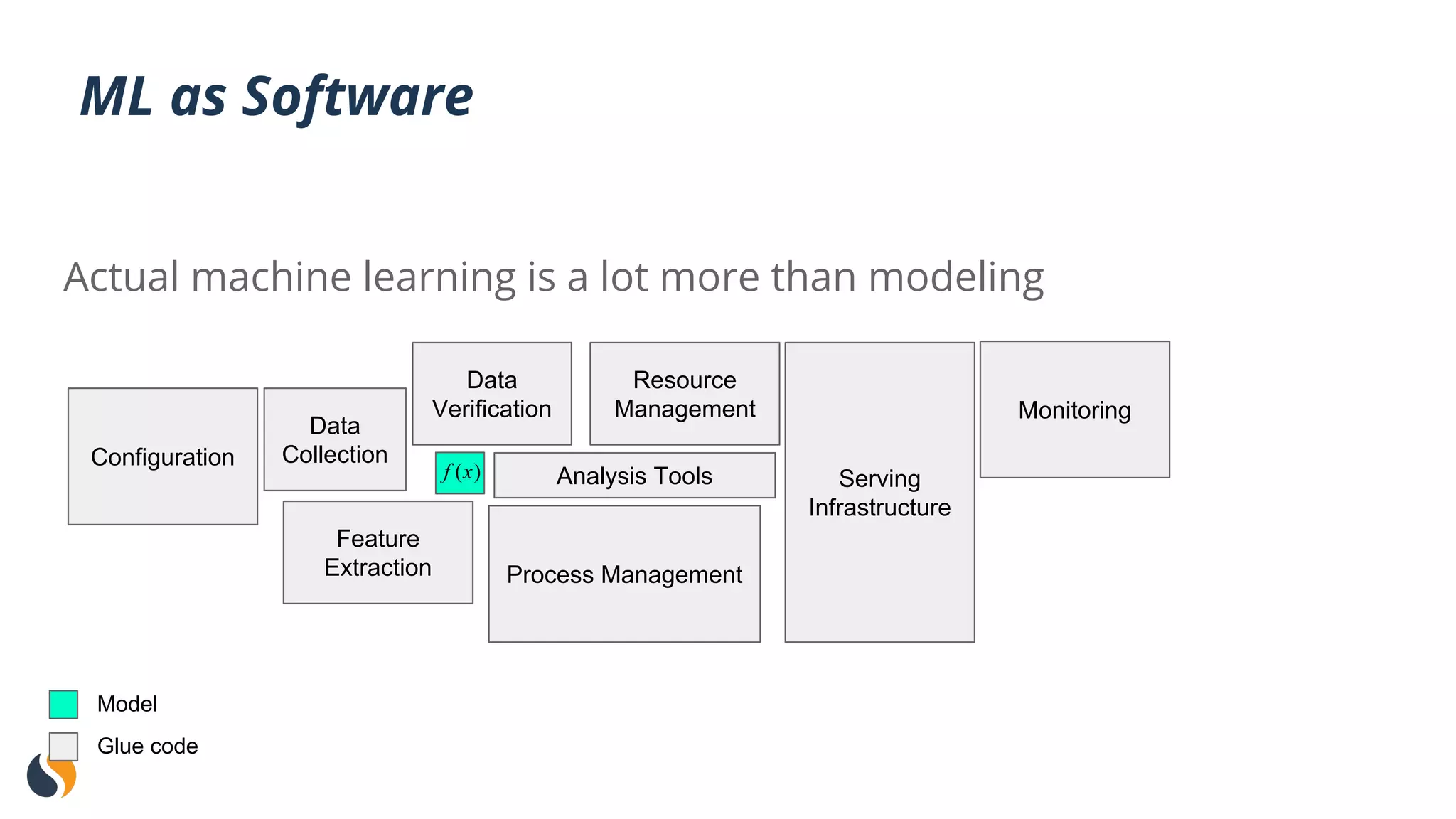

Like other complex software systems, machine learning systems can accumulate significant technical debt, which makes them hard to change, maintain, and improve over time. Several common sources of this debt are specific to machine learning: entanglement between components, correction cascades between models, unstable or underutilized data dependencies, model outputs consumed by other systems without being declared, and changes in external data. Mitigating the debt calls for strategies such as merging mature models, pruning experimental code, comprehensively testing data and configurations, monitoring outputs, and mapping all data and system dependencies.
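As one illustration of what "testing data" can look like in practice, here is a minimal sketch of a schema check run on a batch of feature rows before training or serving. The names `FeatureSpec`, `validate_rows`, and `undeclared_features` are hypothetical and chosen for this example only; real pipelines usually rely on a dedicated validation library, and the checks below (presence, type, range) are assumed to be the ones that matter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    """Hypothetical declaration of one input feature the model depends on."""
    name: str
    dtype: type
    min_value: float
    max_value: float


def validate_rows(rows, specs):
    """Return human-readable problems found when checking rows against specs."""
    problems = []
    for i, row in enumerate(rows):
        for spec in specs:
            if spec.name not in row:
                problems.append(f"row {i}: missing feature '{spec.name}'")
                continue
            value = row[spec.name]
            if not isinstance(value, spec.dtype):
                problems.append(
                    f"row {i}: '{spec.name}' has type {type(value).__name__}, "
                    f"expected {spec.dtype.__name__}"
                )
                continue
            if not (spec.min_value <= value <= spec.max_value):
                problems.append(
                    f"row {i}: '{spec.name}'={value} outside "
                    f"[{spec.min_value}, {spec.max_value}]"
                )
    return problems


def undeclared_features(rows, specs):
    """Return features present in the data but absent from the declared specs.

    Such fields are candidates for pruning, or for being declared explicitly.
    """
    declared = {spec.name for spec in specs}
    seen = set()
    for row in rows:
        seen.update(row)  # updating a set with a dict adds its keys
    return sorted(seen - declared)


if __name__ == "__main__":
    specs = [
        FeatureSpec("age", float, 0.0, 120.0),
        FeatureSpec("income", float, 0.0, 1e7),
    ]
    rows = [
        {"age": 34.0, "income": 52000.0},
        {"age": -1.0, "income": 48000.0, "zip": 94110.0},
    ]
    for problem in validate_rows(rows, specs):
        print(problem)
    print("undeclared:", undeclared_features(rows, specs))
```

Running a check like this at the boundary where external data enters the system turns silent upstream changes into explicit failures, and the list of undeclared fields gives a starting point for mapping data dependencies.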