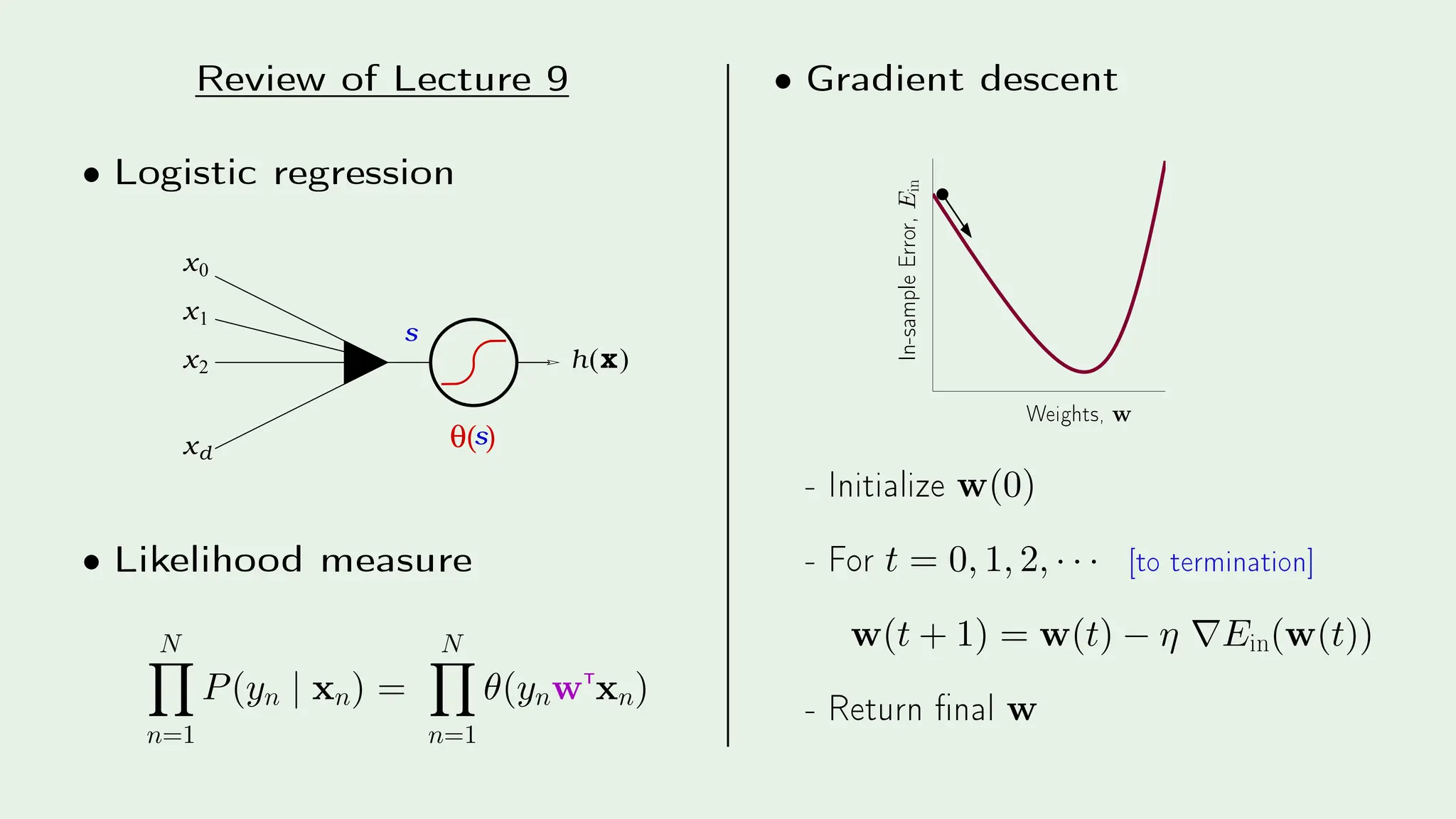

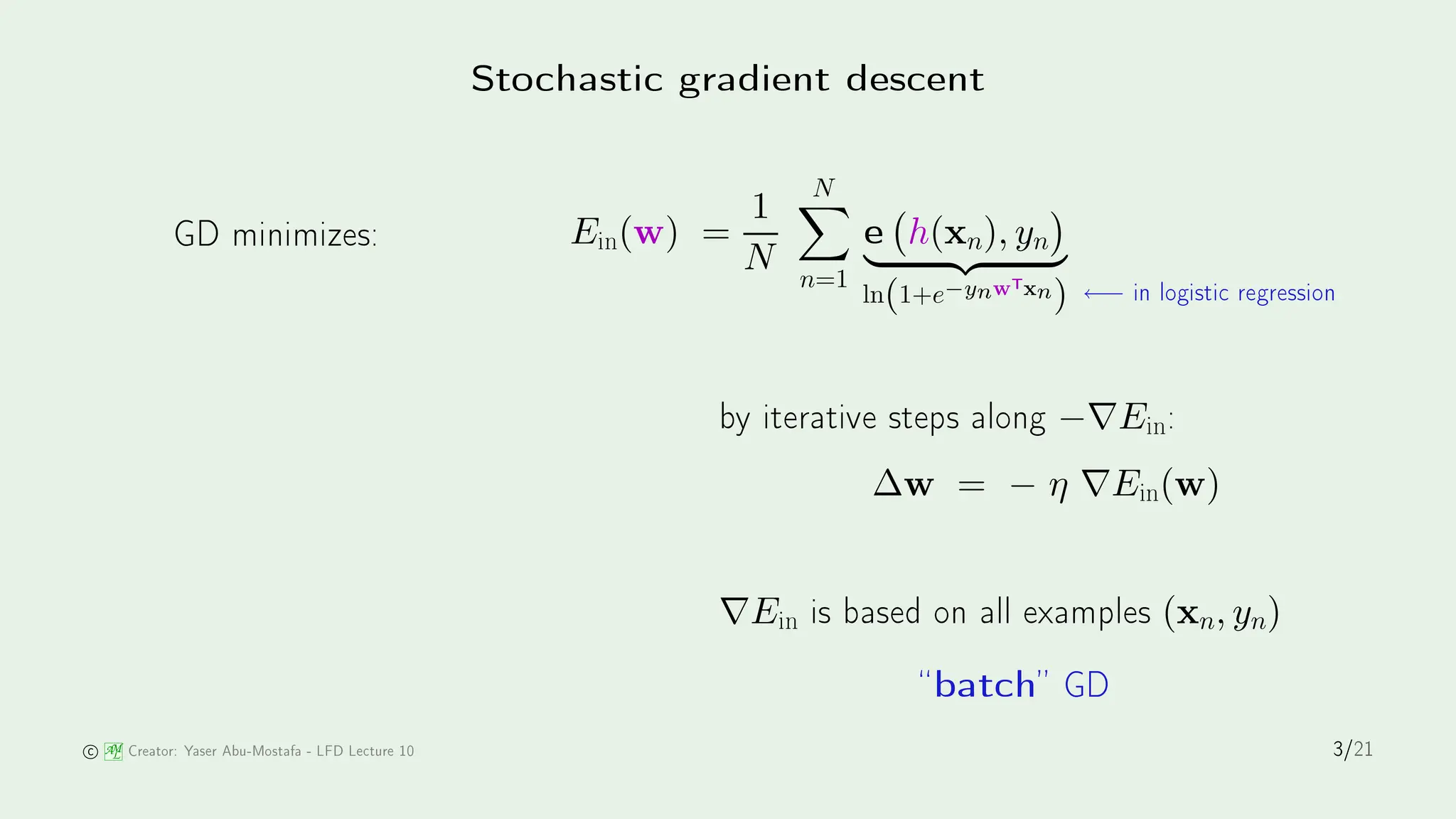

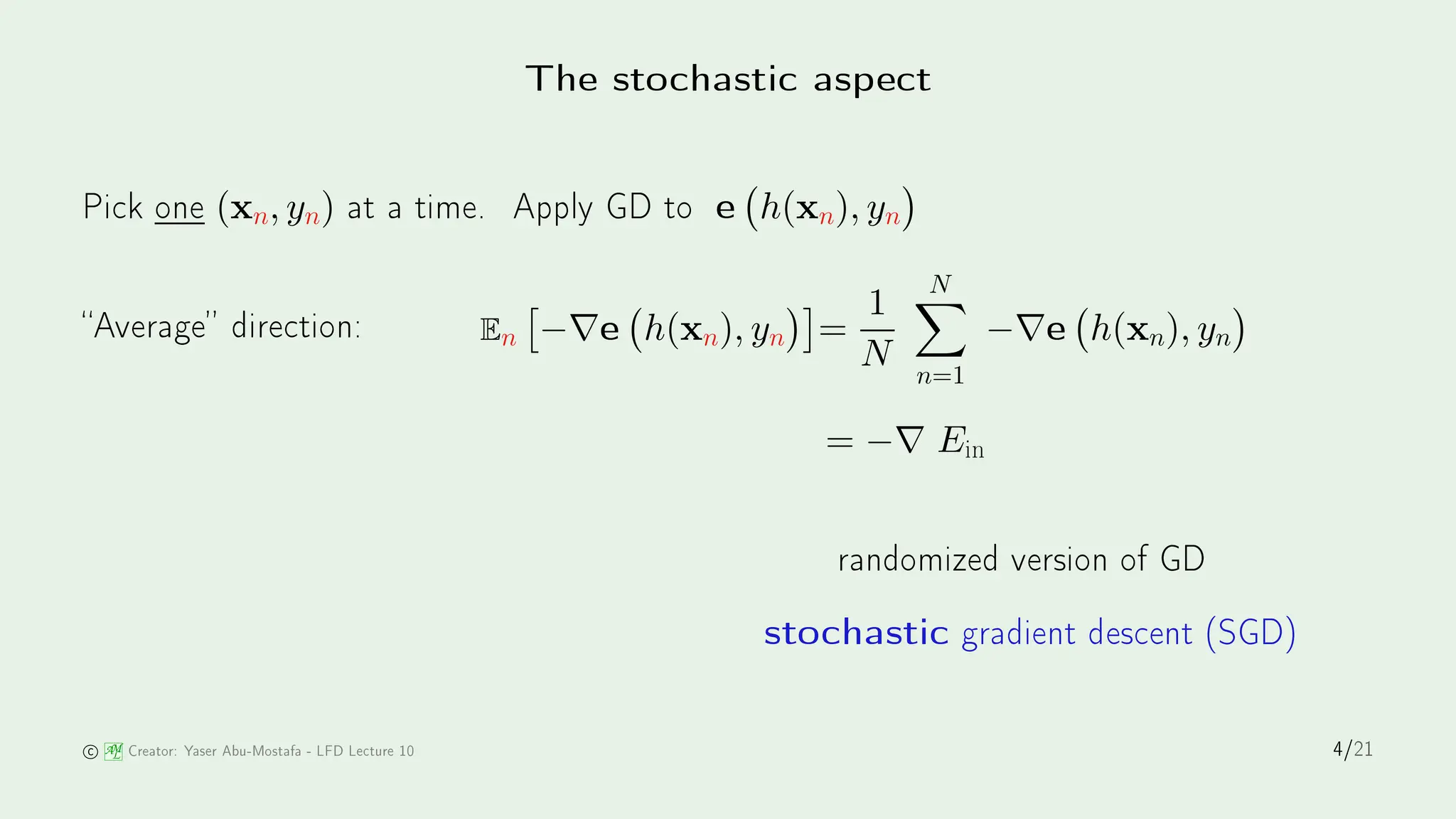

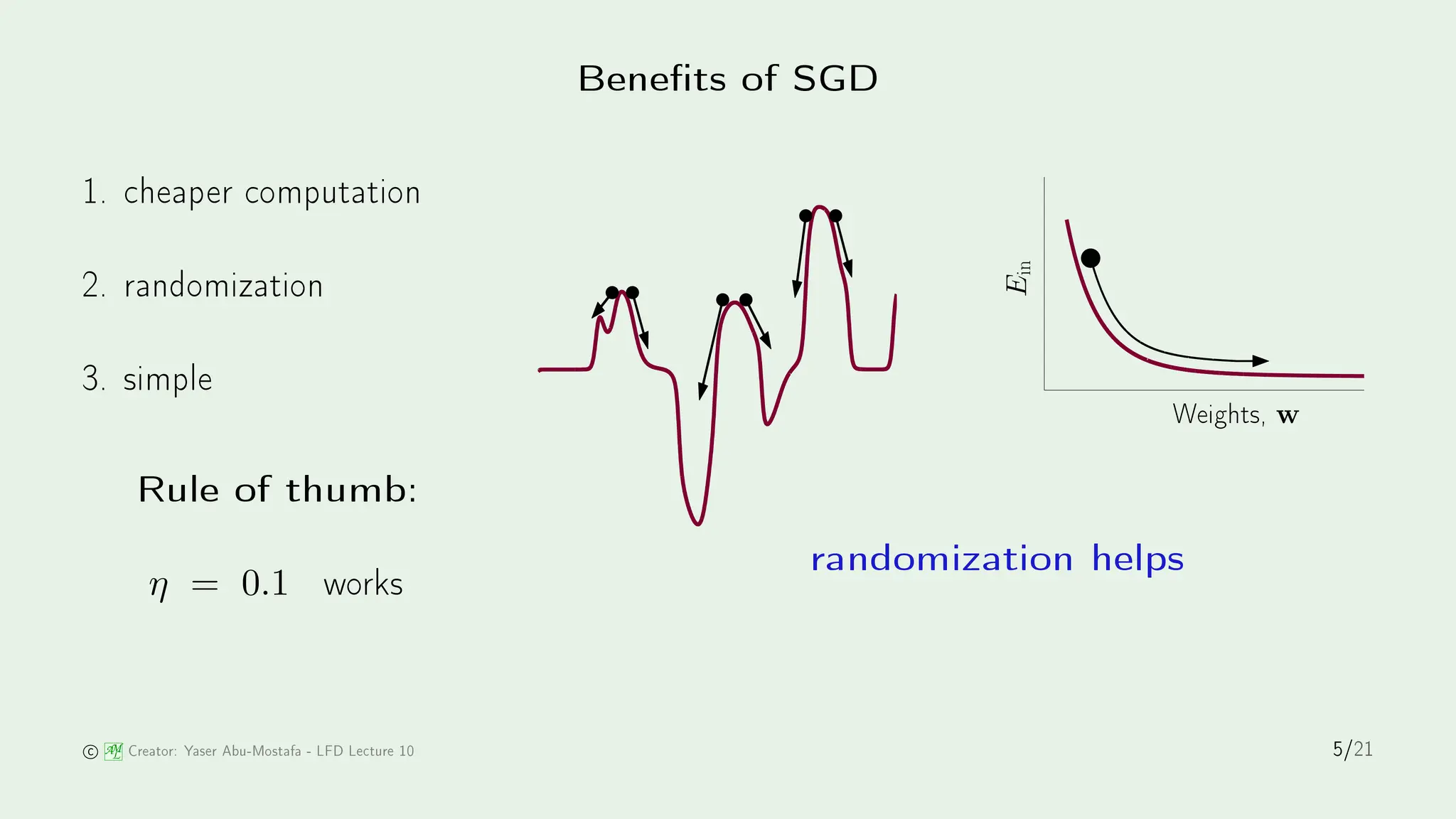

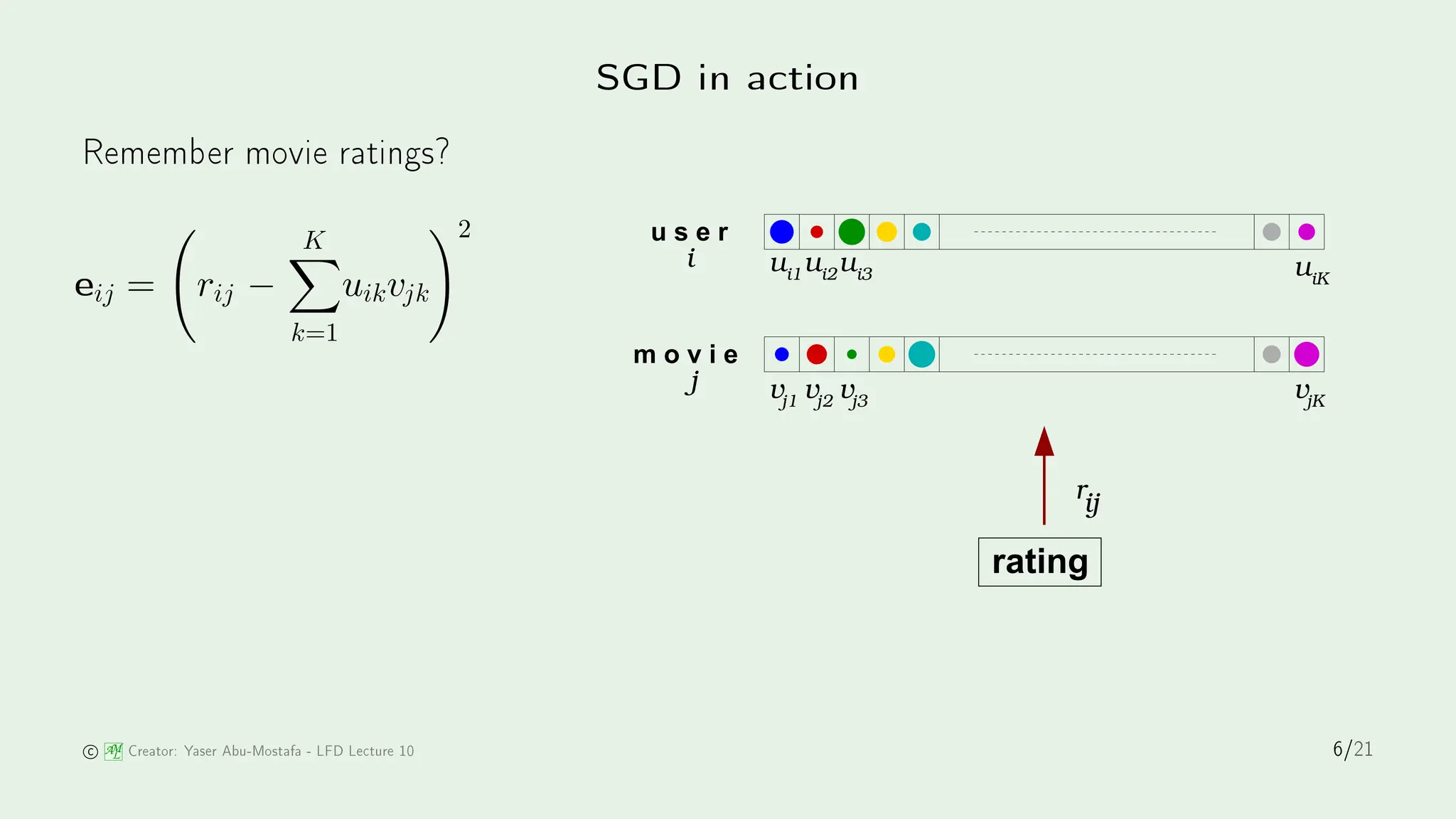

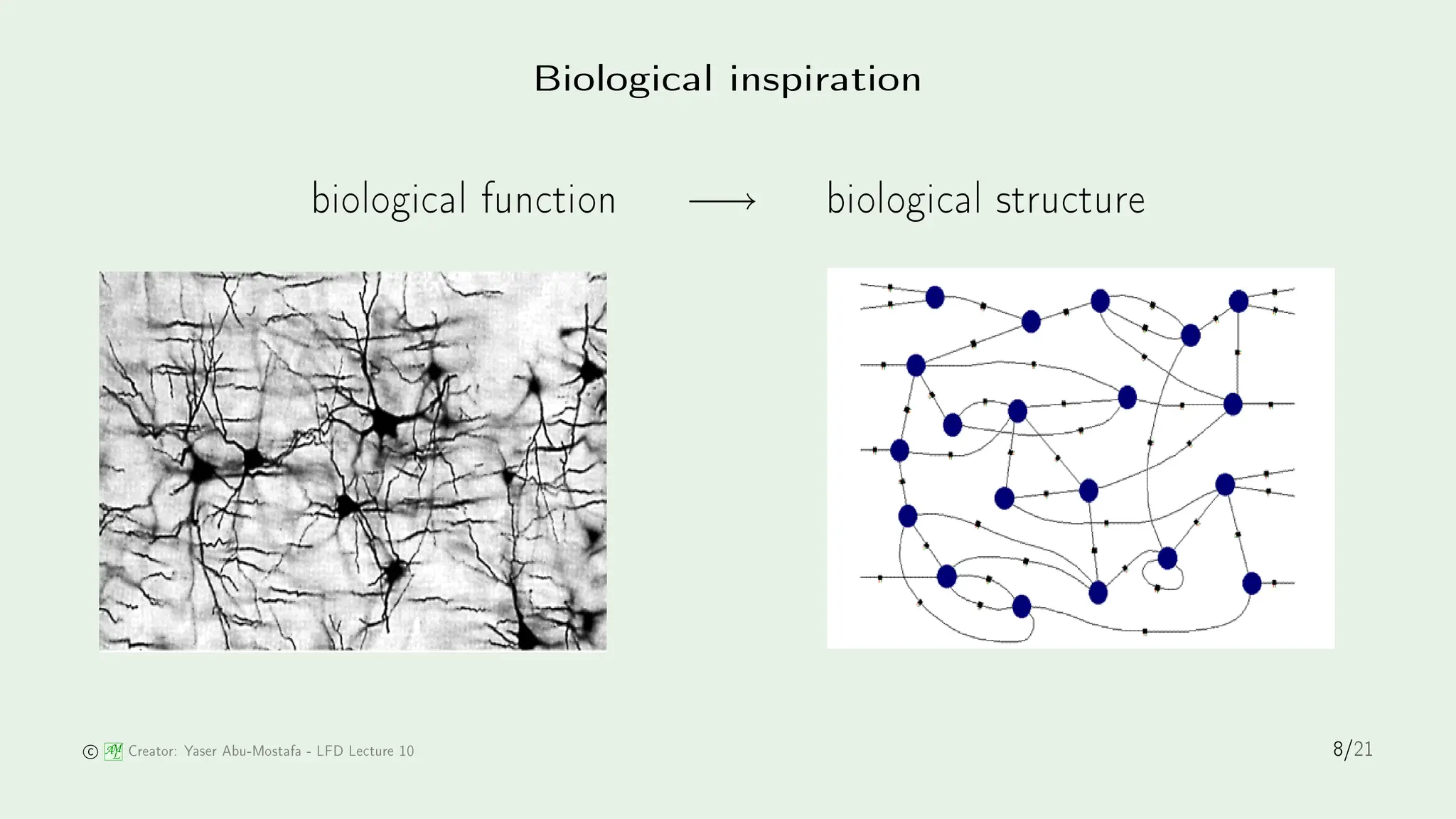

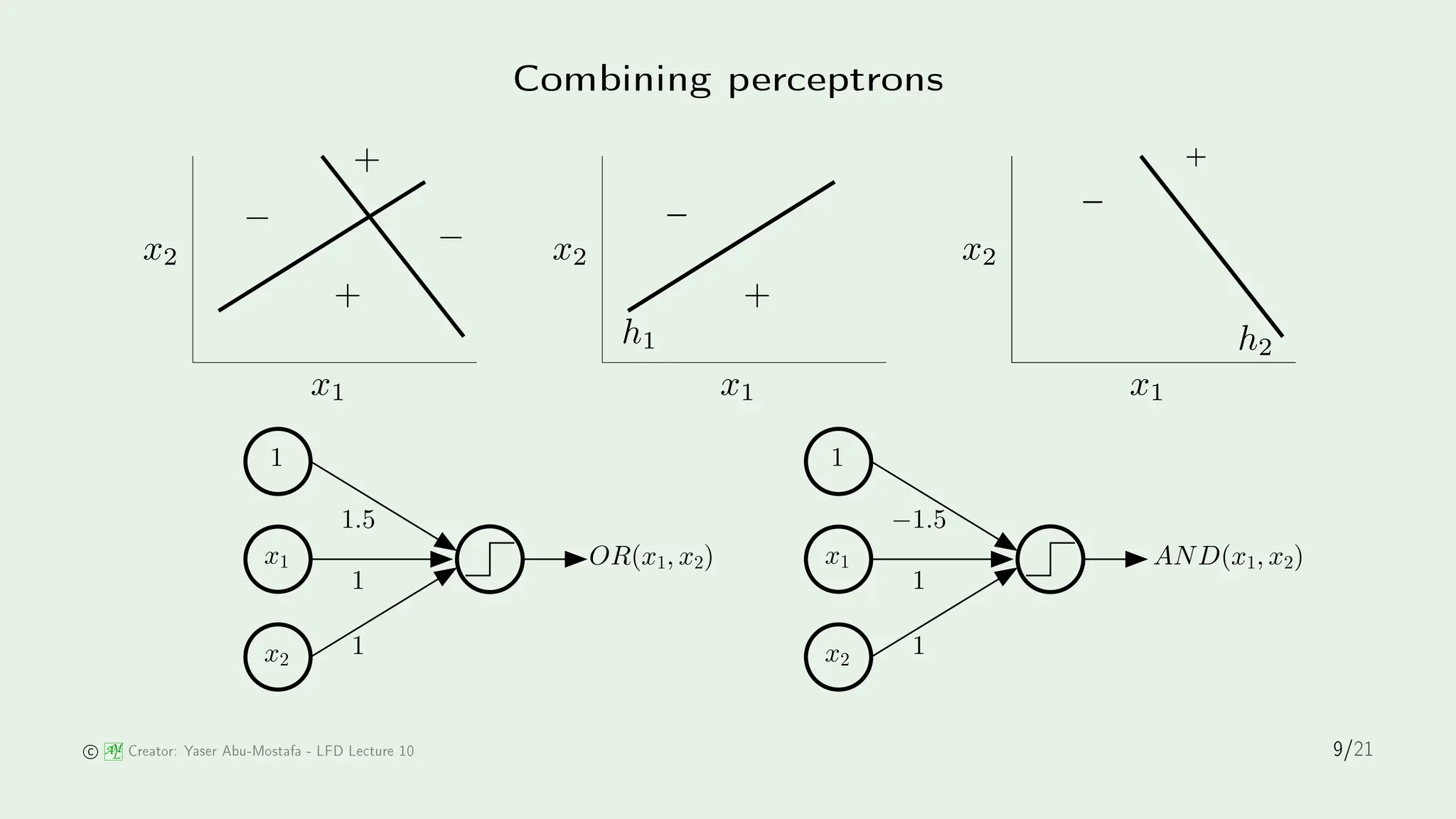

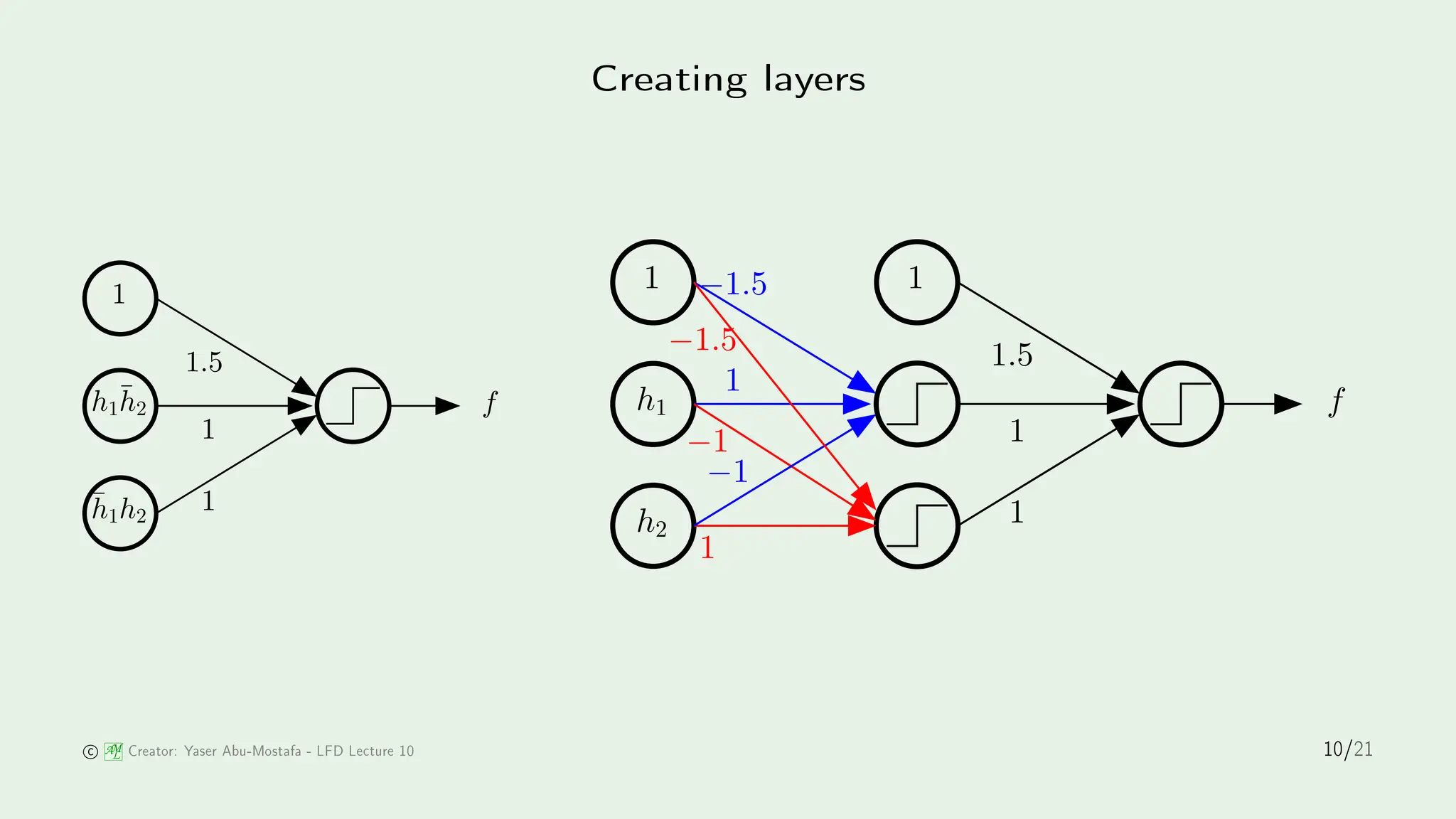

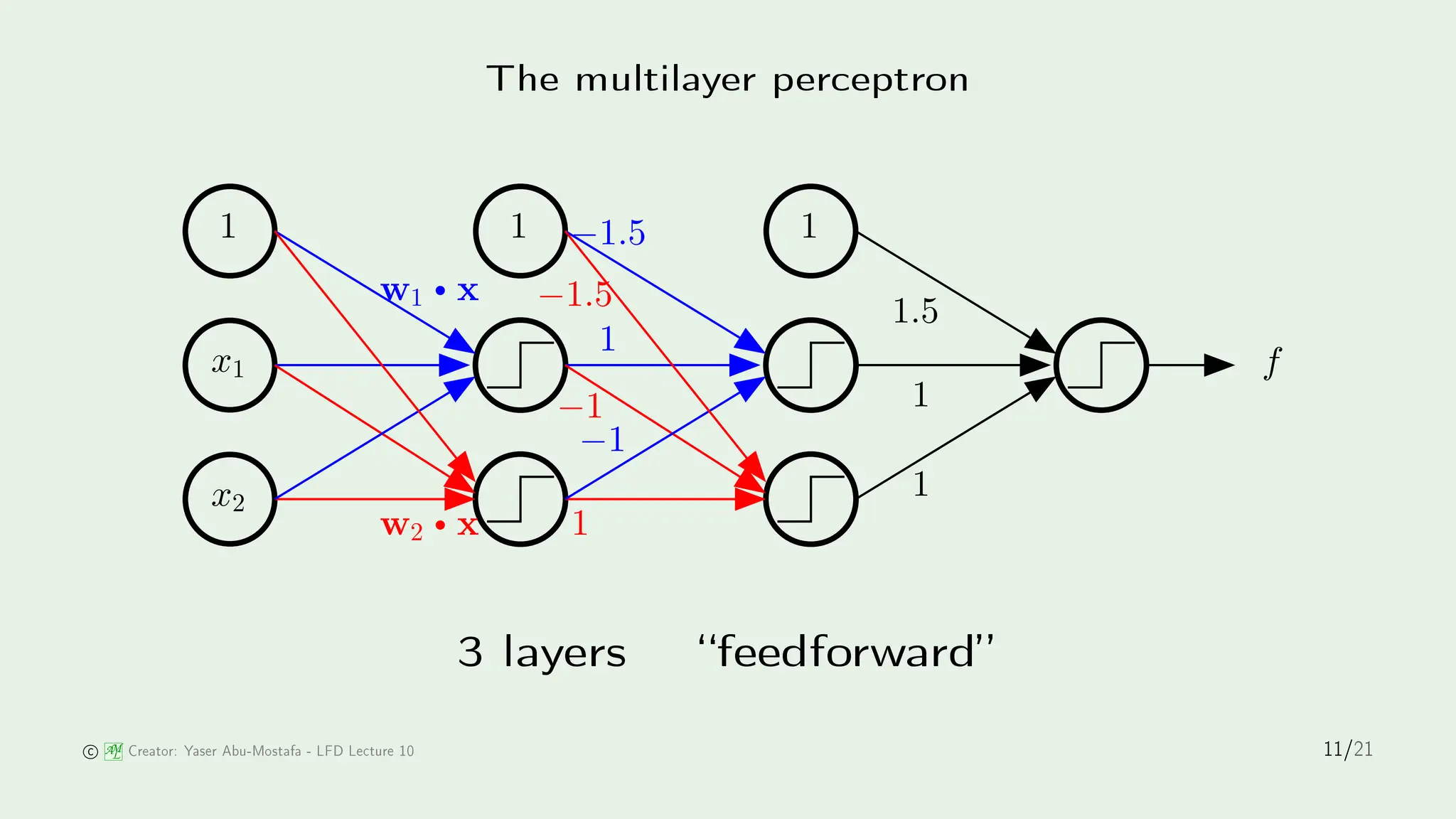

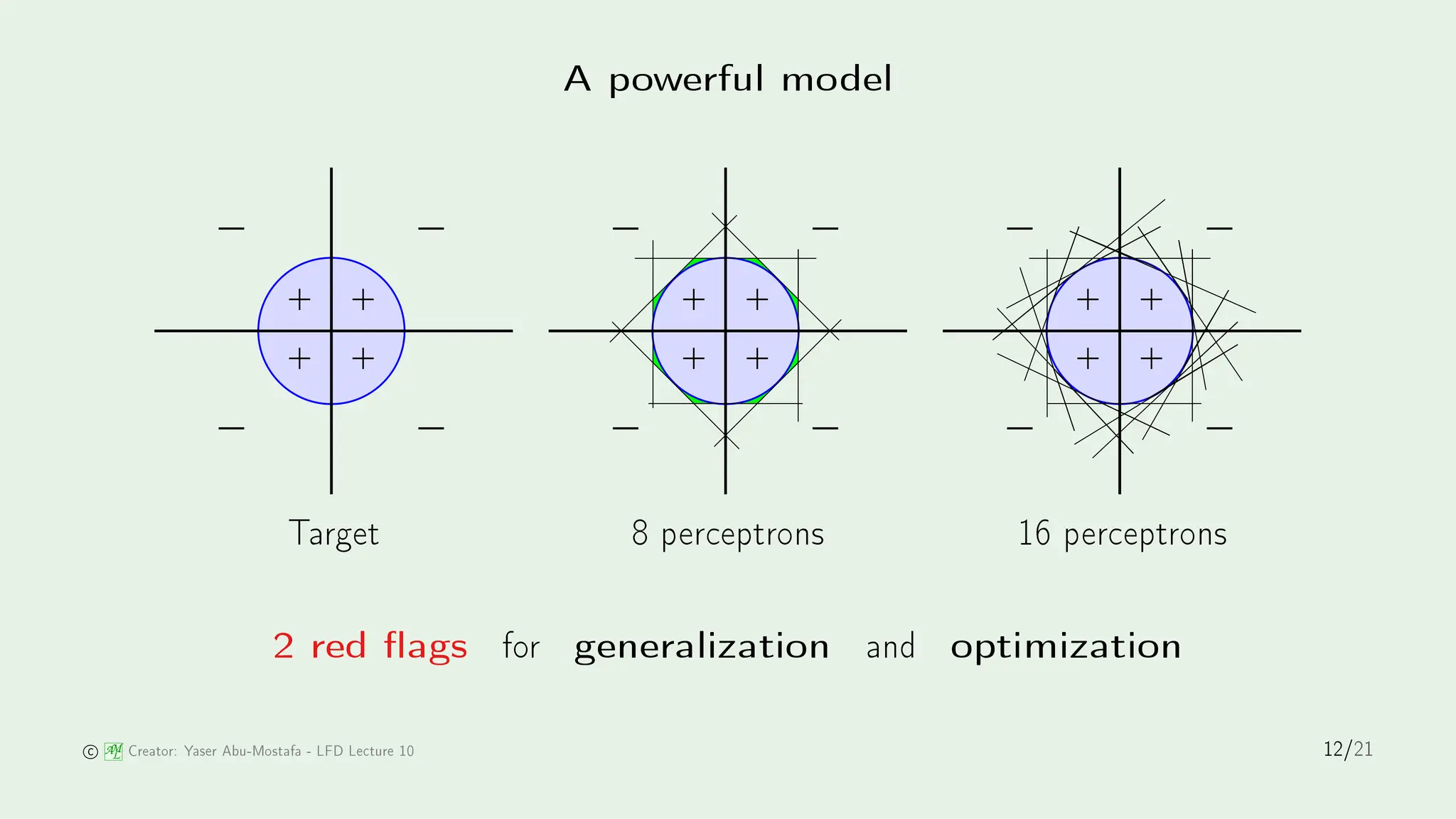

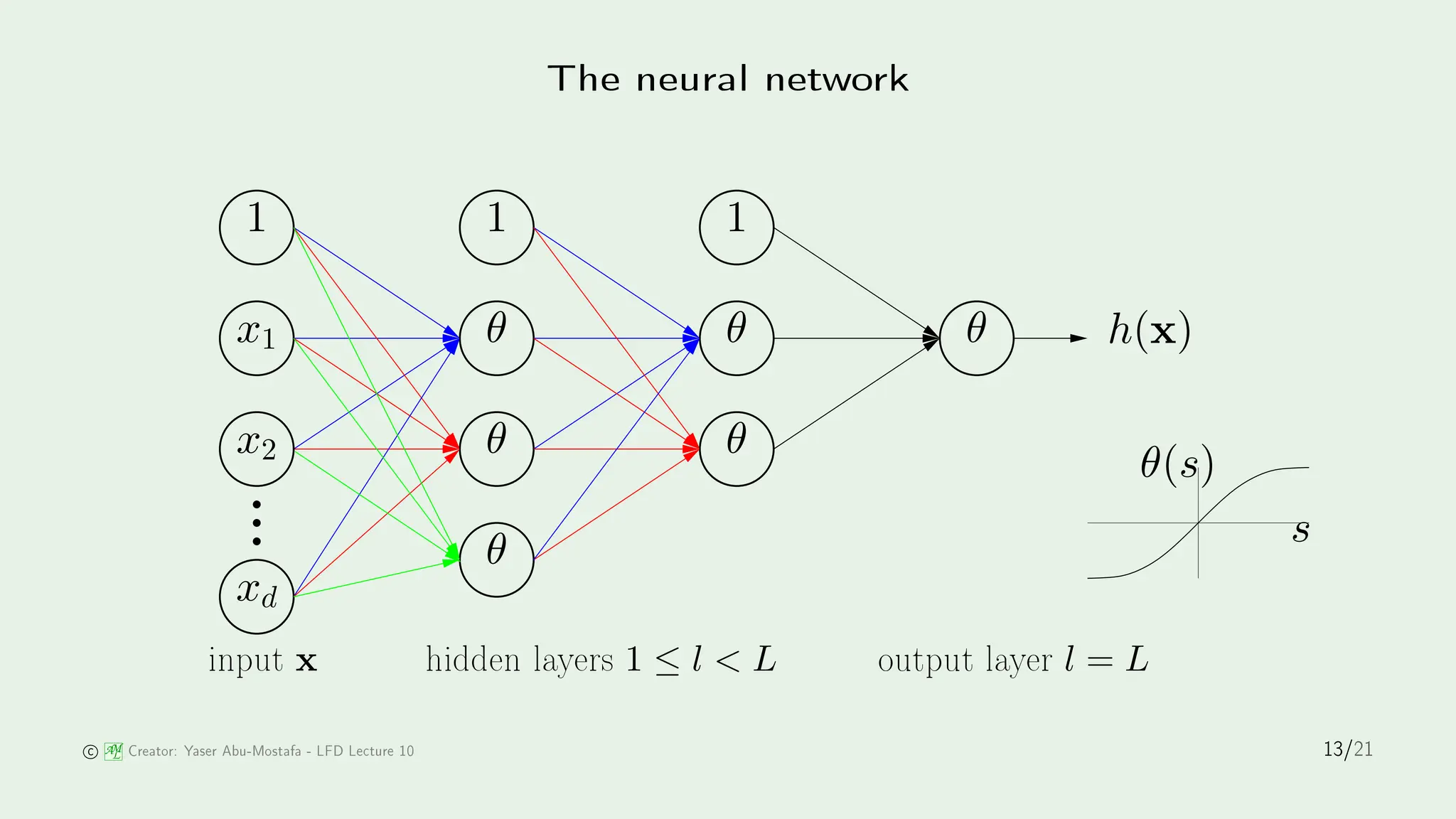

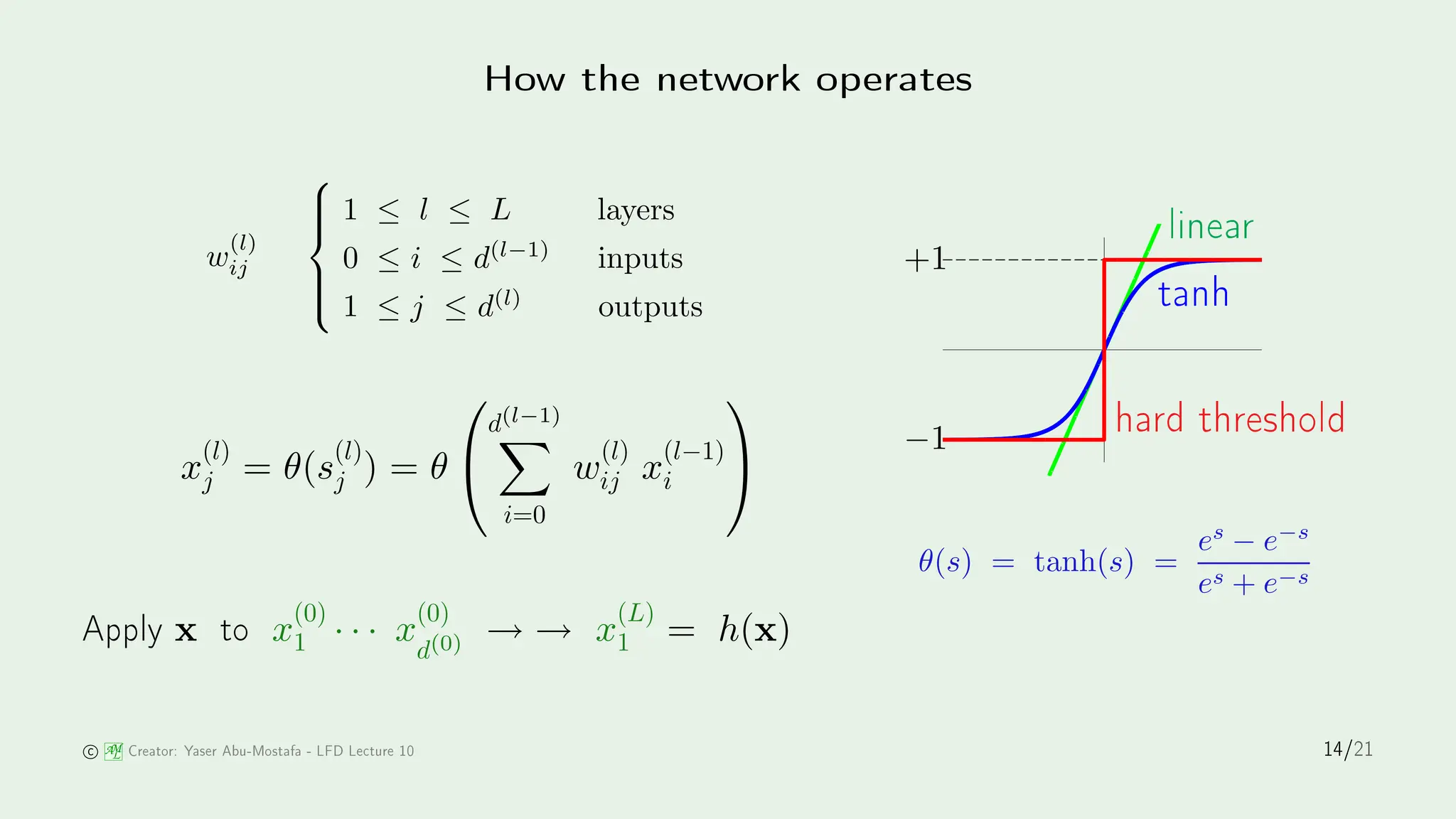

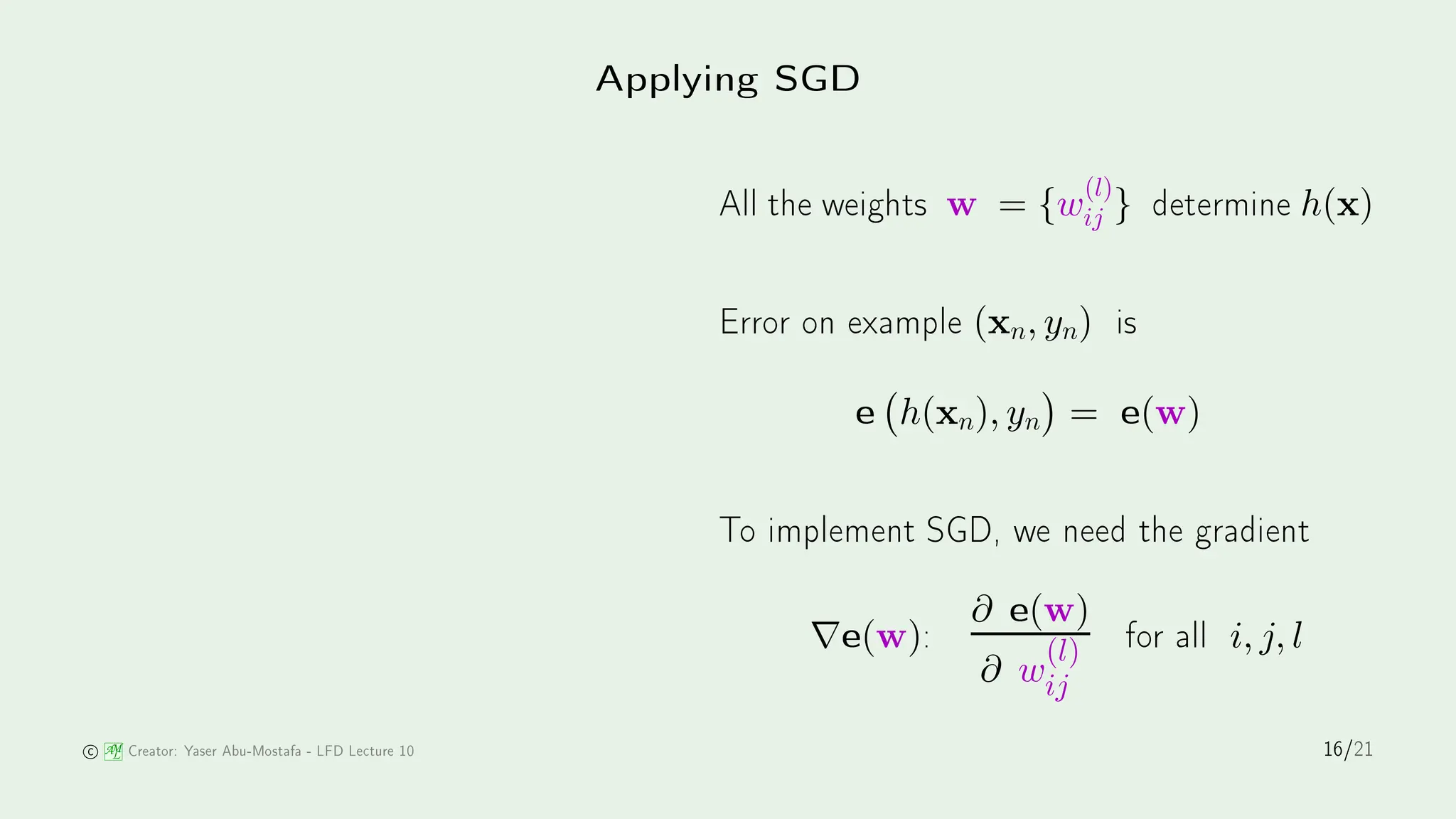

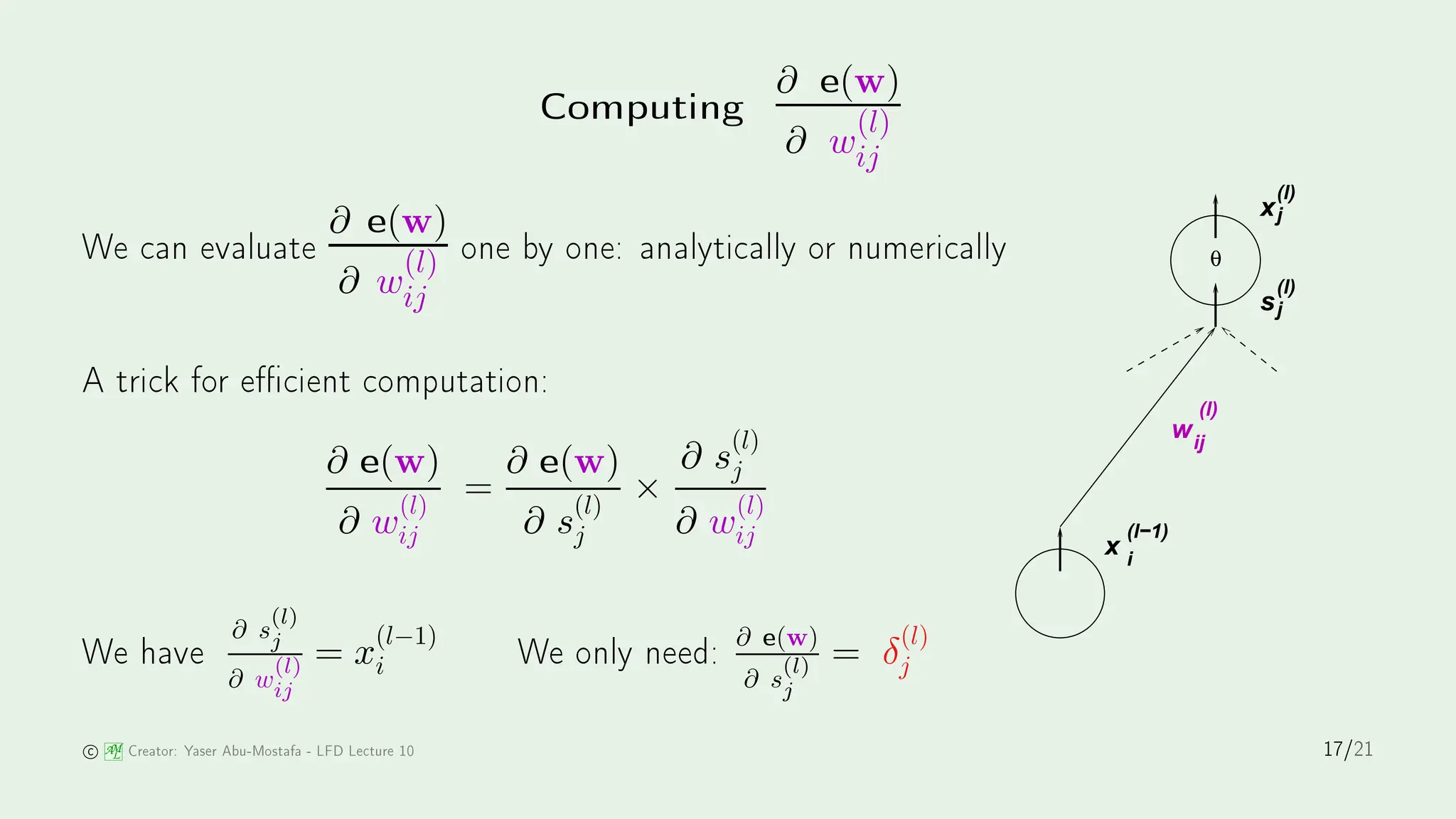

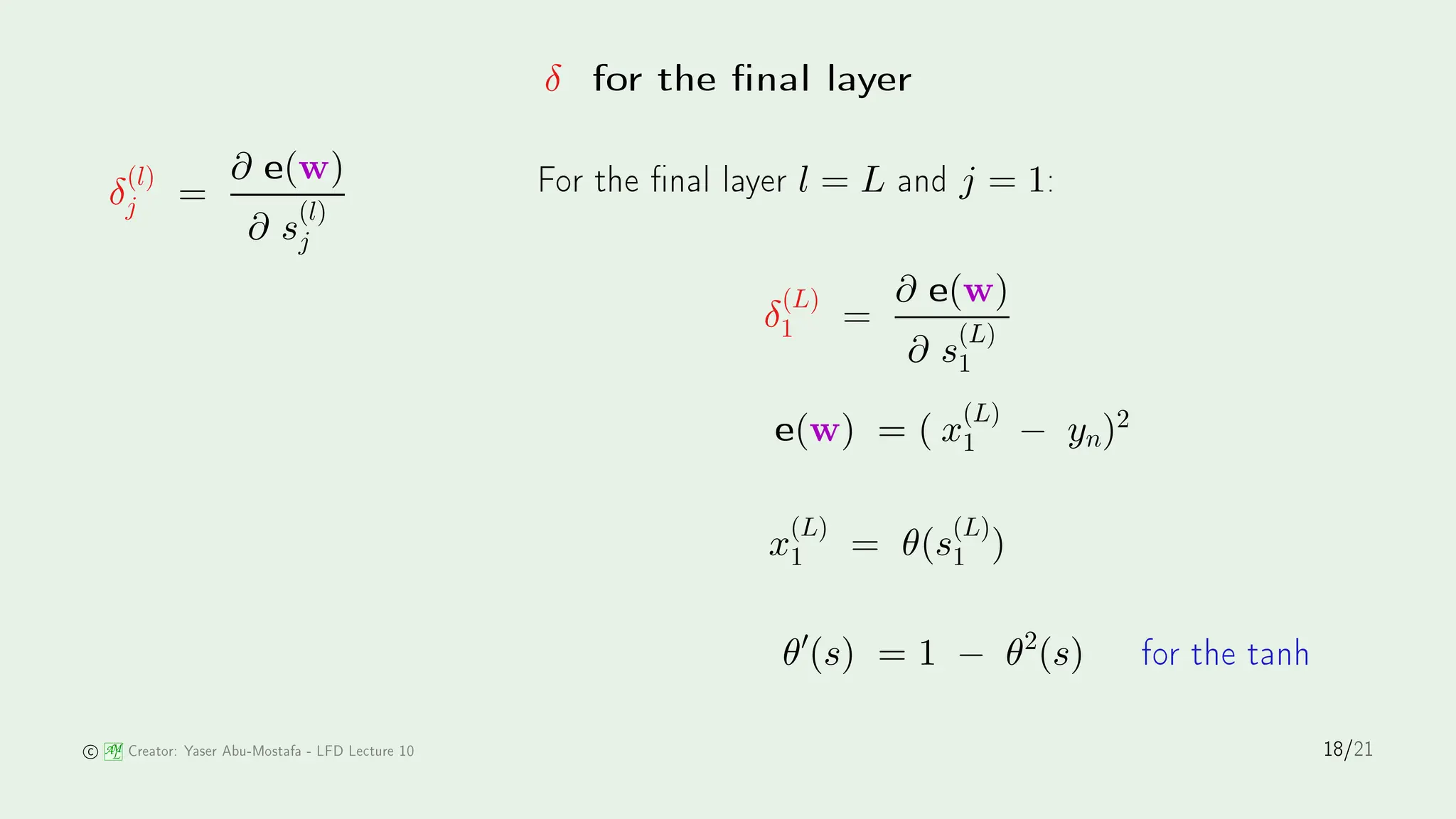

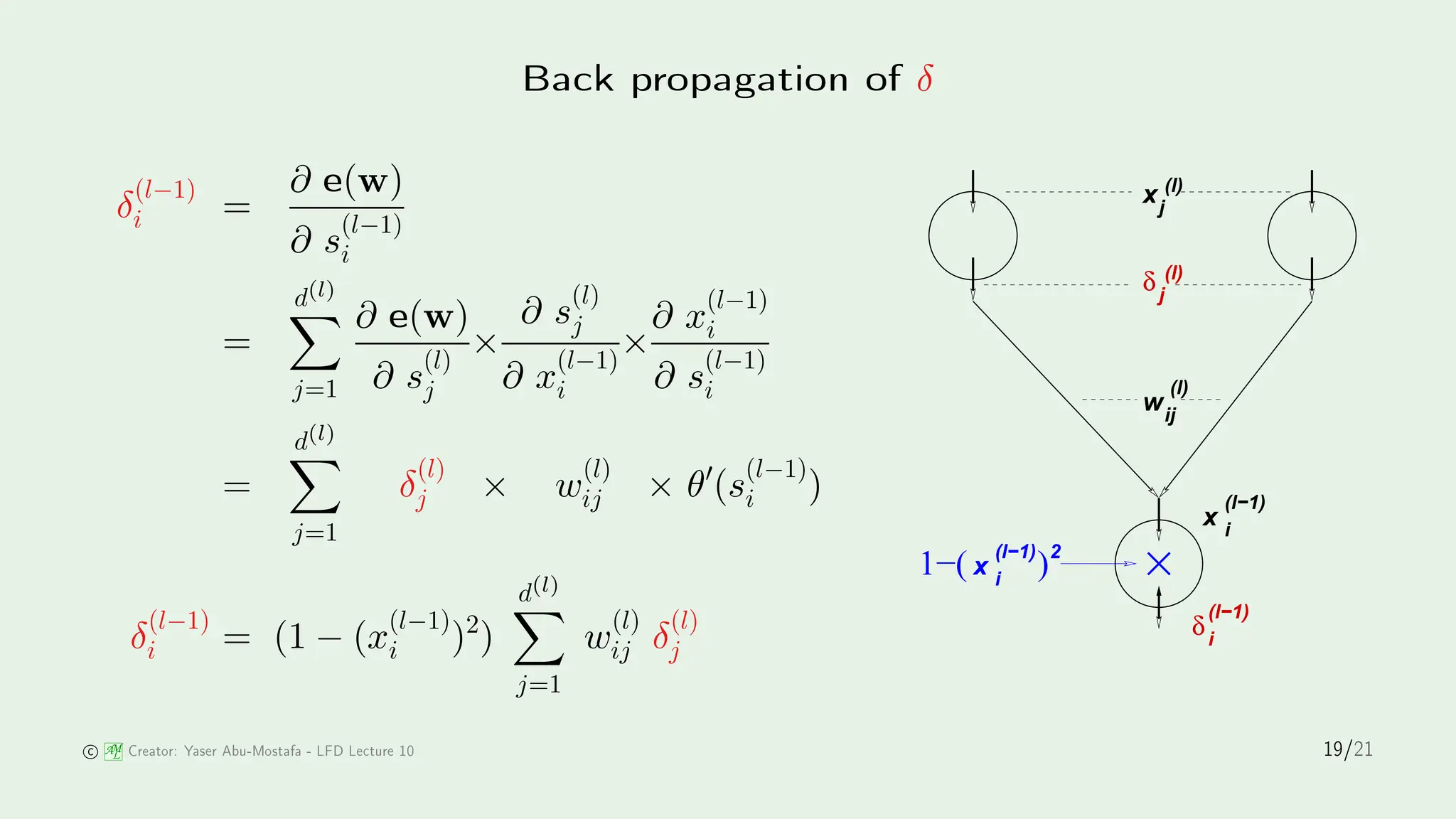

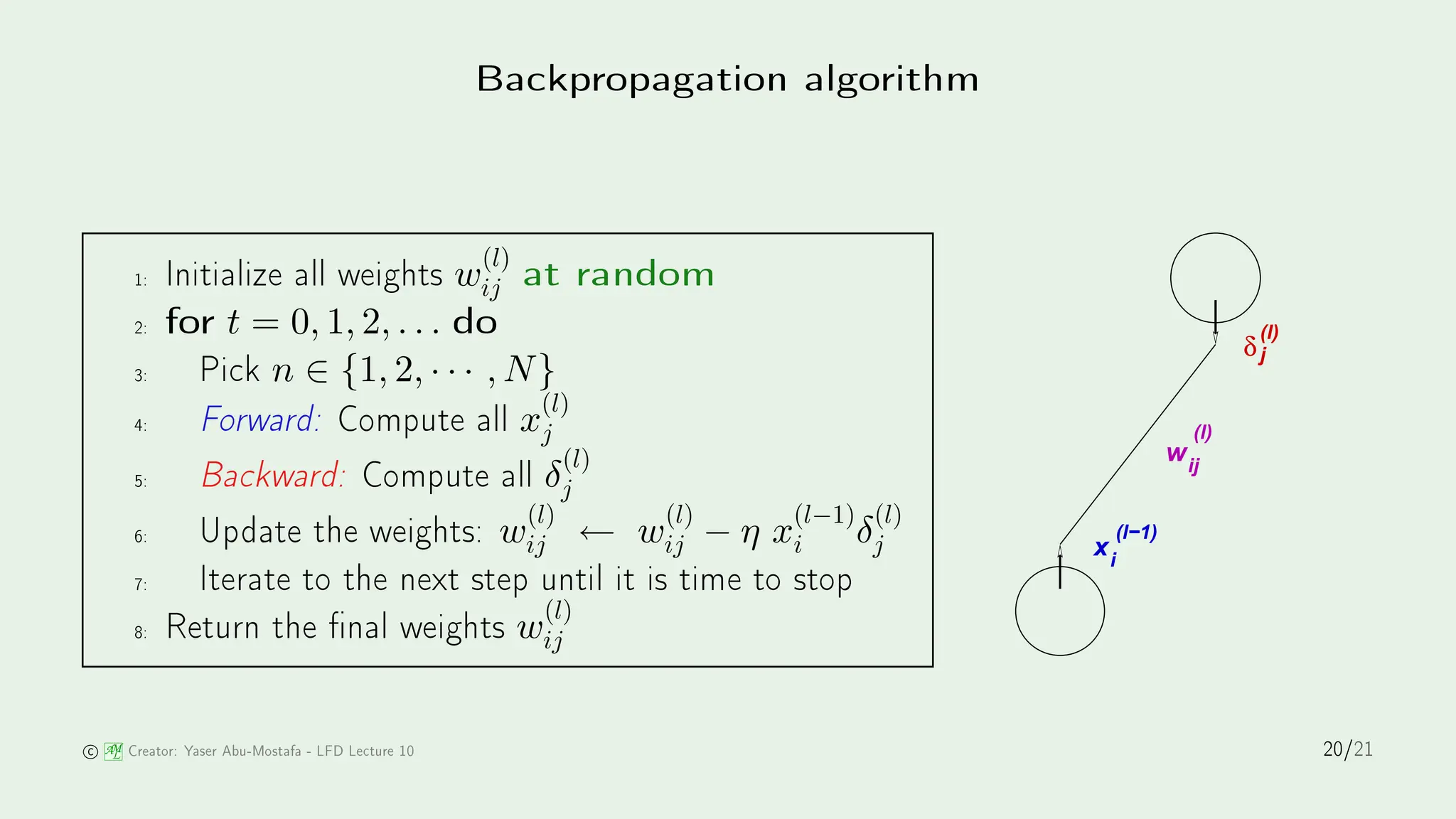

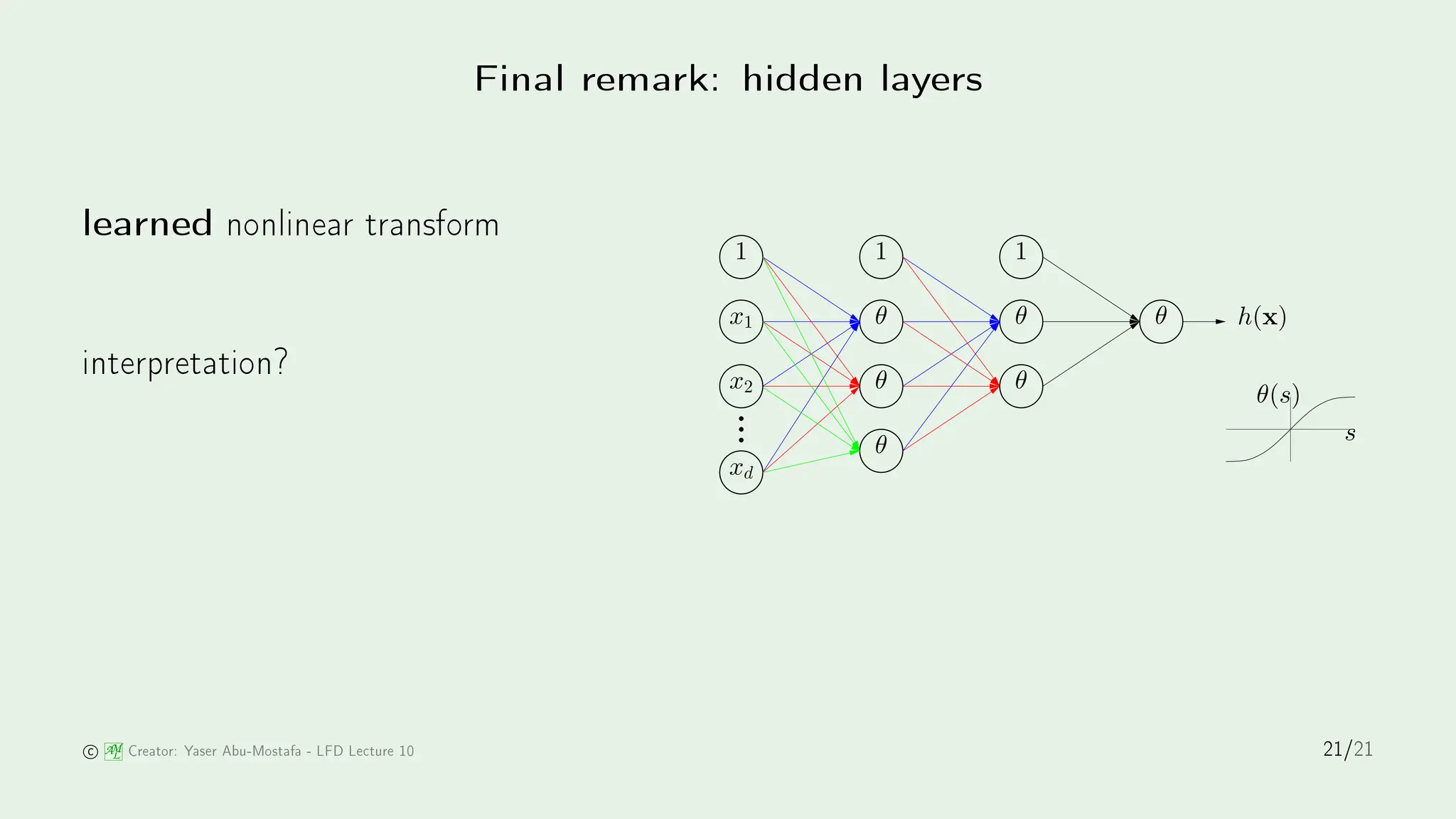

The document covers stochastic gradient descent (SGD) and its application to logistic regression and neural networks, explaining how SGD minimizes error by iteratively updating the weights. It then details the backpropagation algorithm, which trains multilayer perceptrons by computing the gradient of the error with respect to each weight. It also addresses the influence of biological inspiration on neural network architecture and emphasizes the complexity and power of neural models.
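
The iterative weight update summarized above can be sketched in a few lines of pure Python. This is an illustrative sketch, not code from the slides: the cross-entropy loss, the function names (`sigmoid`, `sgd_logistic_regression`, `predict`), the learning rate, the epoch count, and the toy OR dataset are all assumptions chosen for the example.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sgd_logistic_regression(samples, labels, learning_rate=0.1, epochs=1000, seed=0):
    """Fit weights and a bias by SGD, one training example per update.

    Assumes binary labels (0 or 1) and a cross-entropy loss; the
    hyperparameter defaults are illustrative, not recommendations.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(samples[0])
    bias = 0.0
    order = list(range(len(samples)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the examples in a fresh random order
        for i in order:
            x, y = samples[i], labels[i]
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            # For sigmoid + cross-entropy, d(loss)/dz = prediction - label.
            error = sigmoid(z) - y
            # Step each parameter against its gradient.
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


def predict(weights, bias, x):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


# Toy, linearly separable data (logical OR), assumed for illustration.
samples = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
labels = [0, 1, 1, 1]
weights, bias = sgd_logistic_regression(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
```

Each update uses a single example, which is what makes the descent stochastic. Backpropagation applies the same kind of update to every layer of a multilayer perceptron, using the chain rule to obtain the gradient for the hidden-layer weights.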