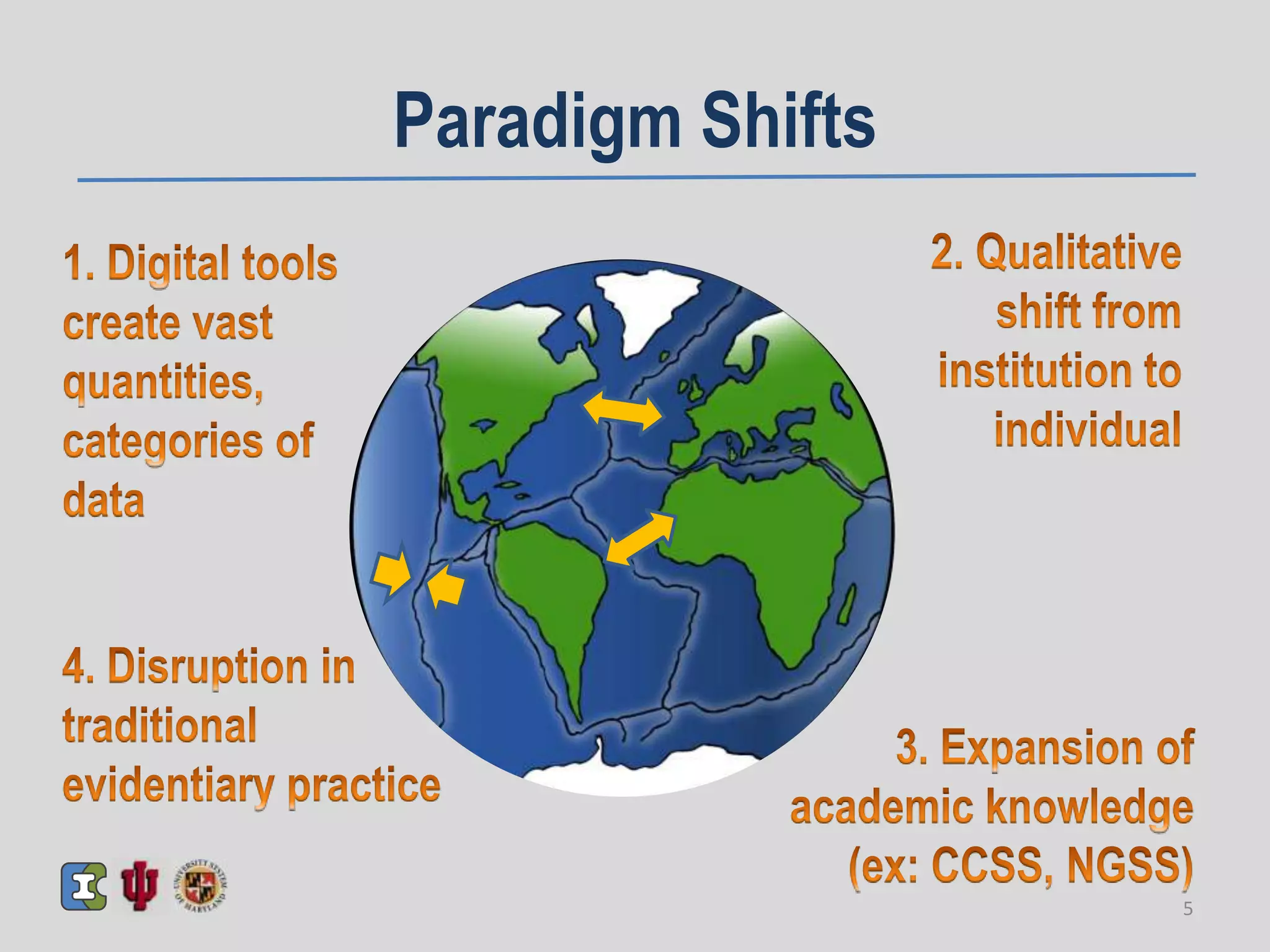

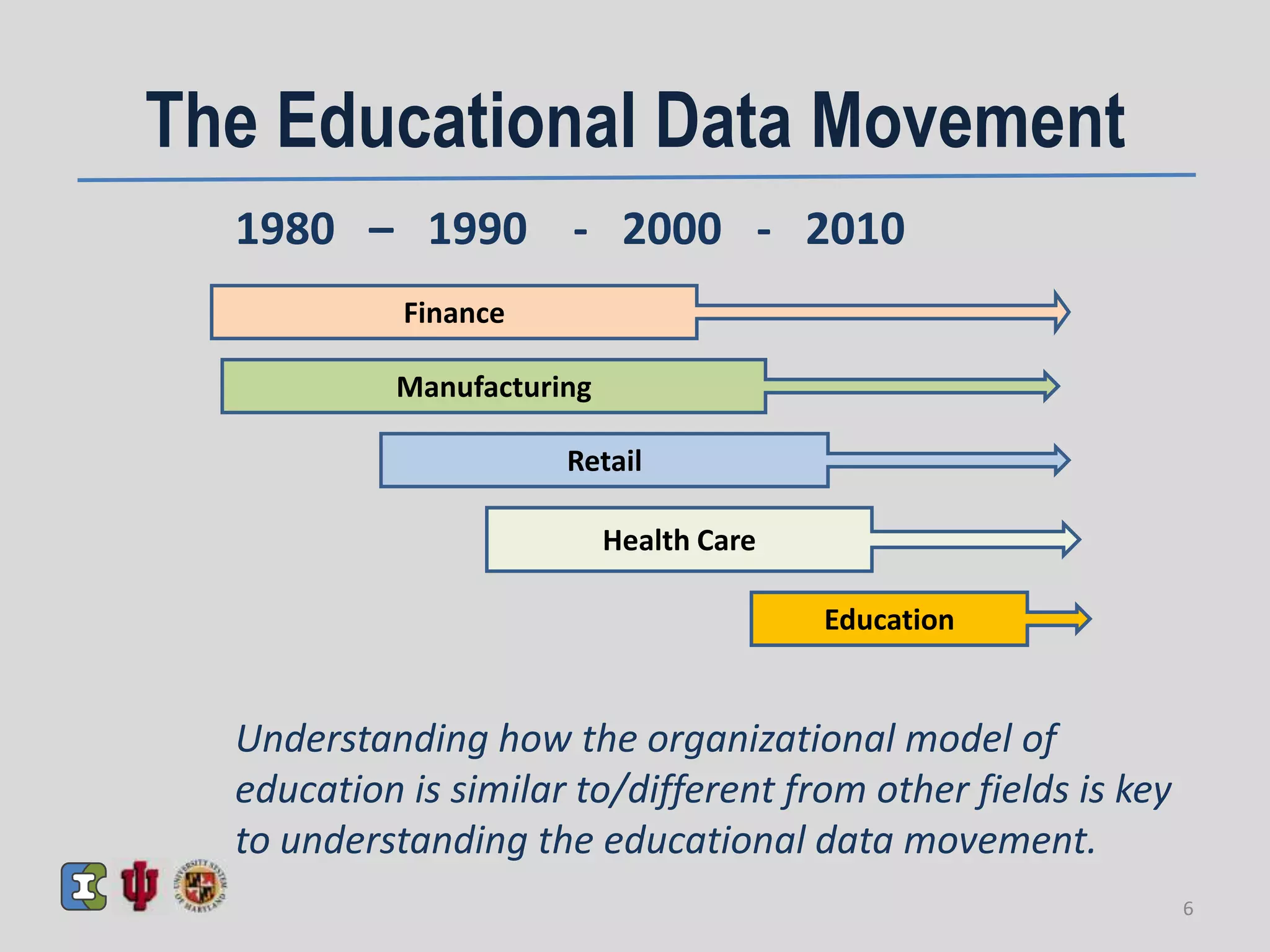

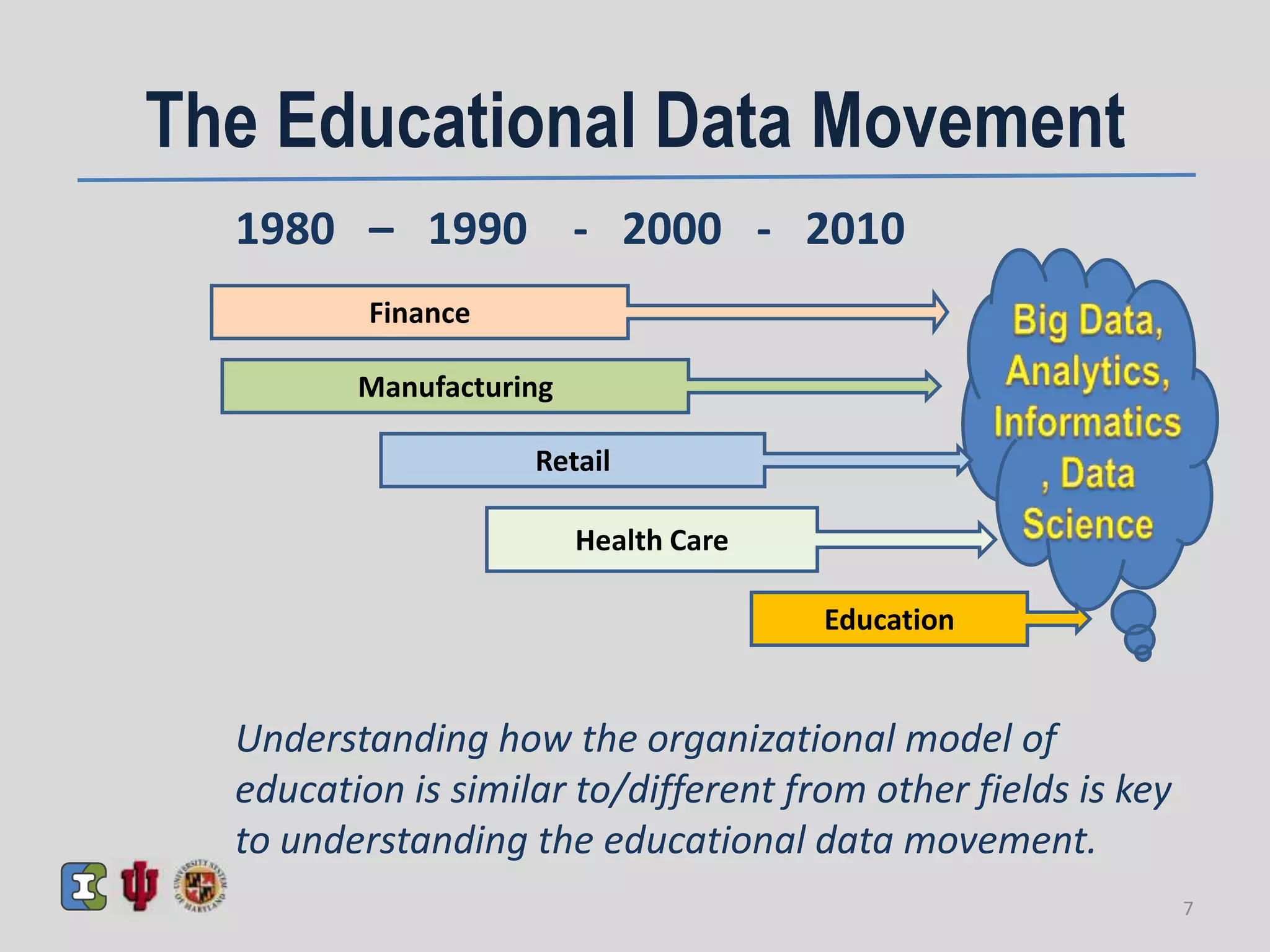

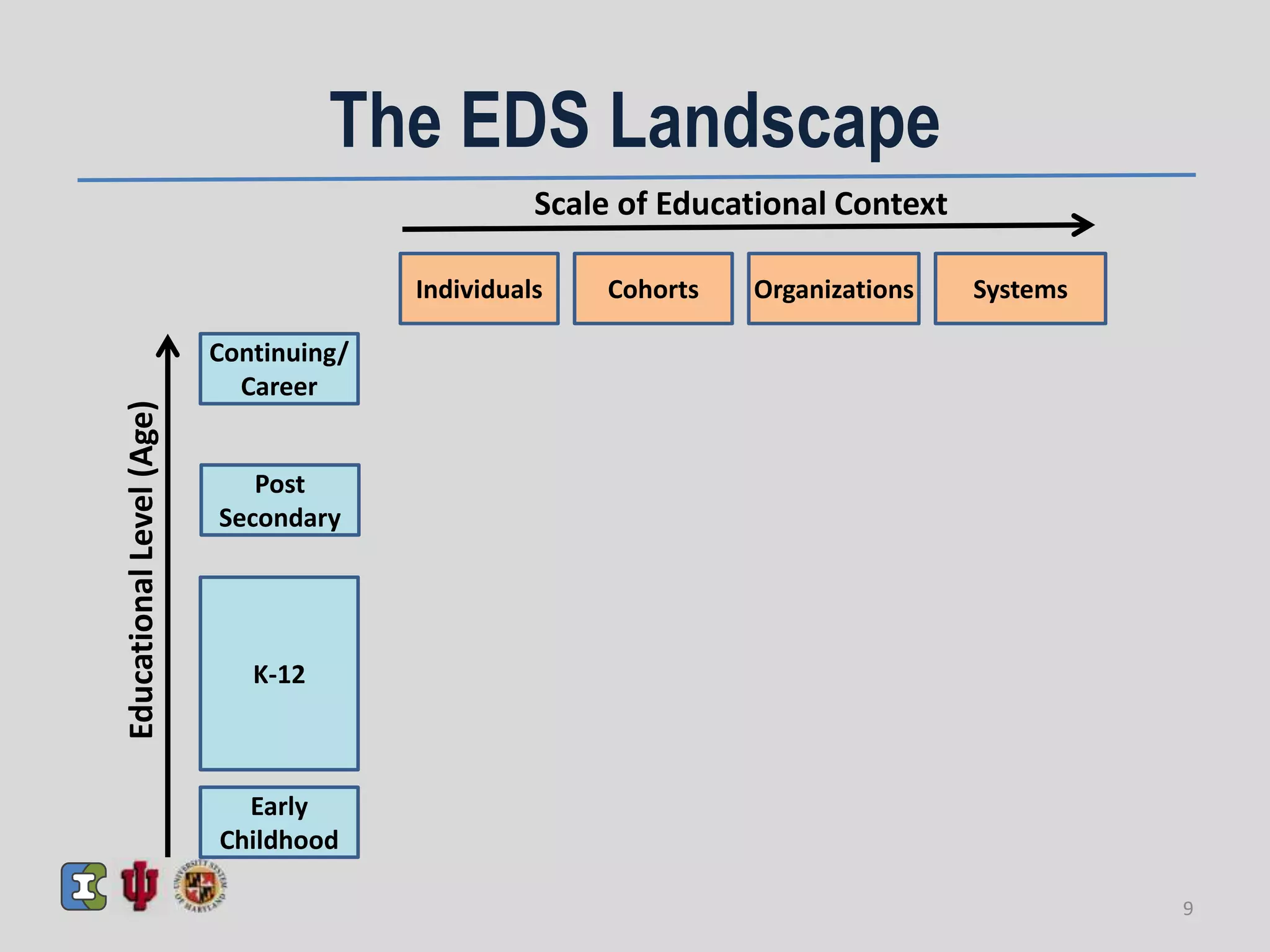

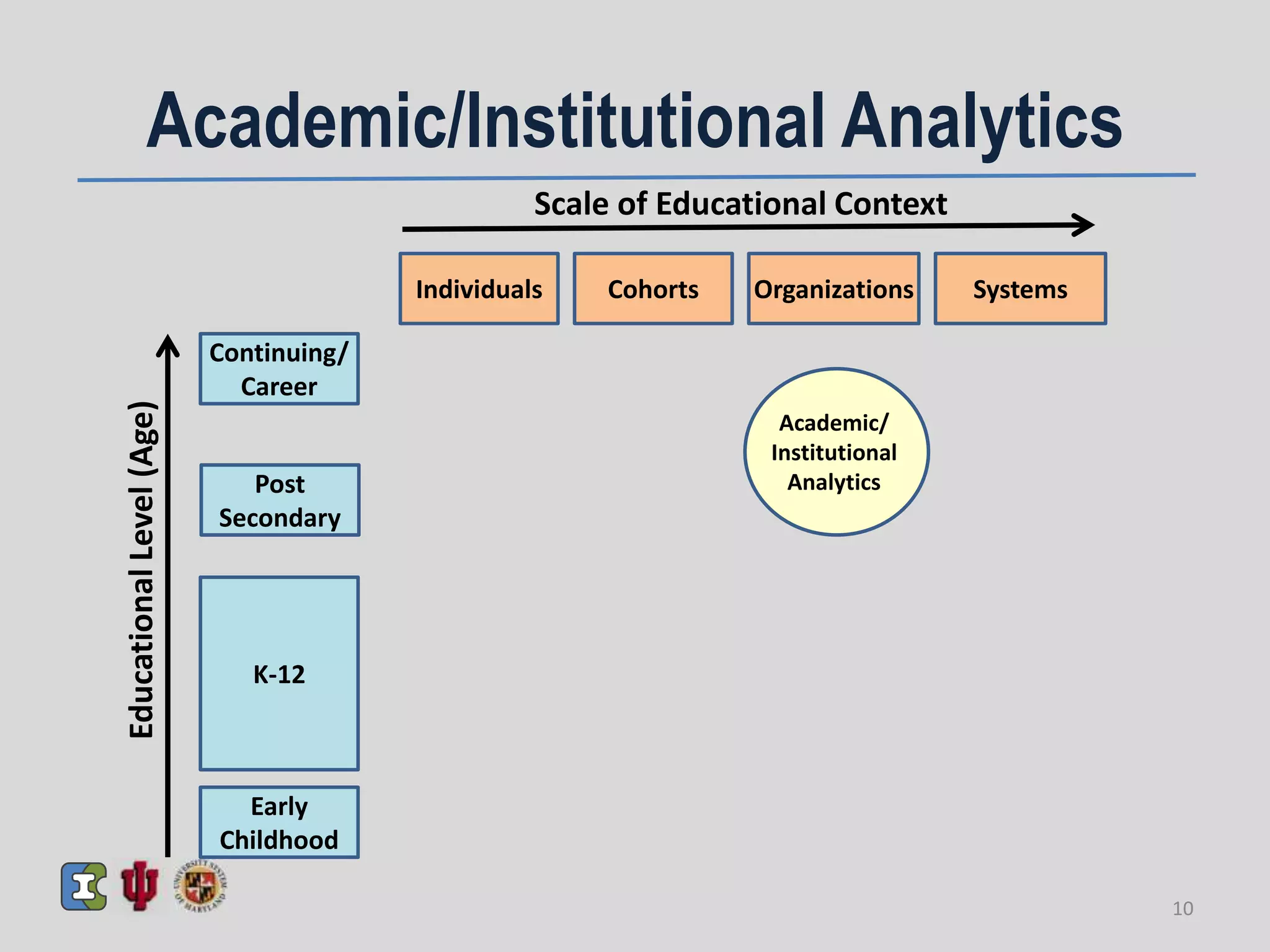

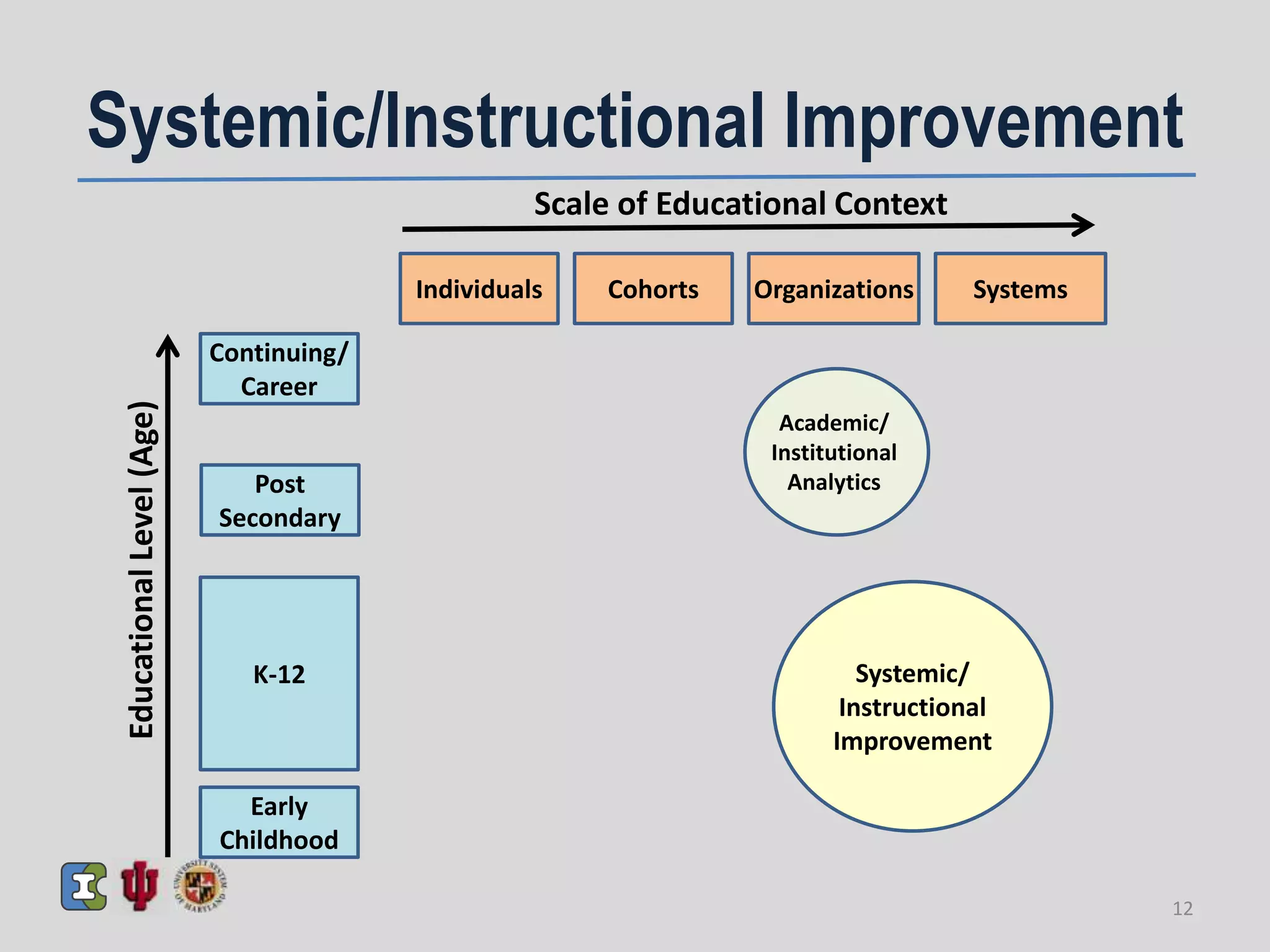

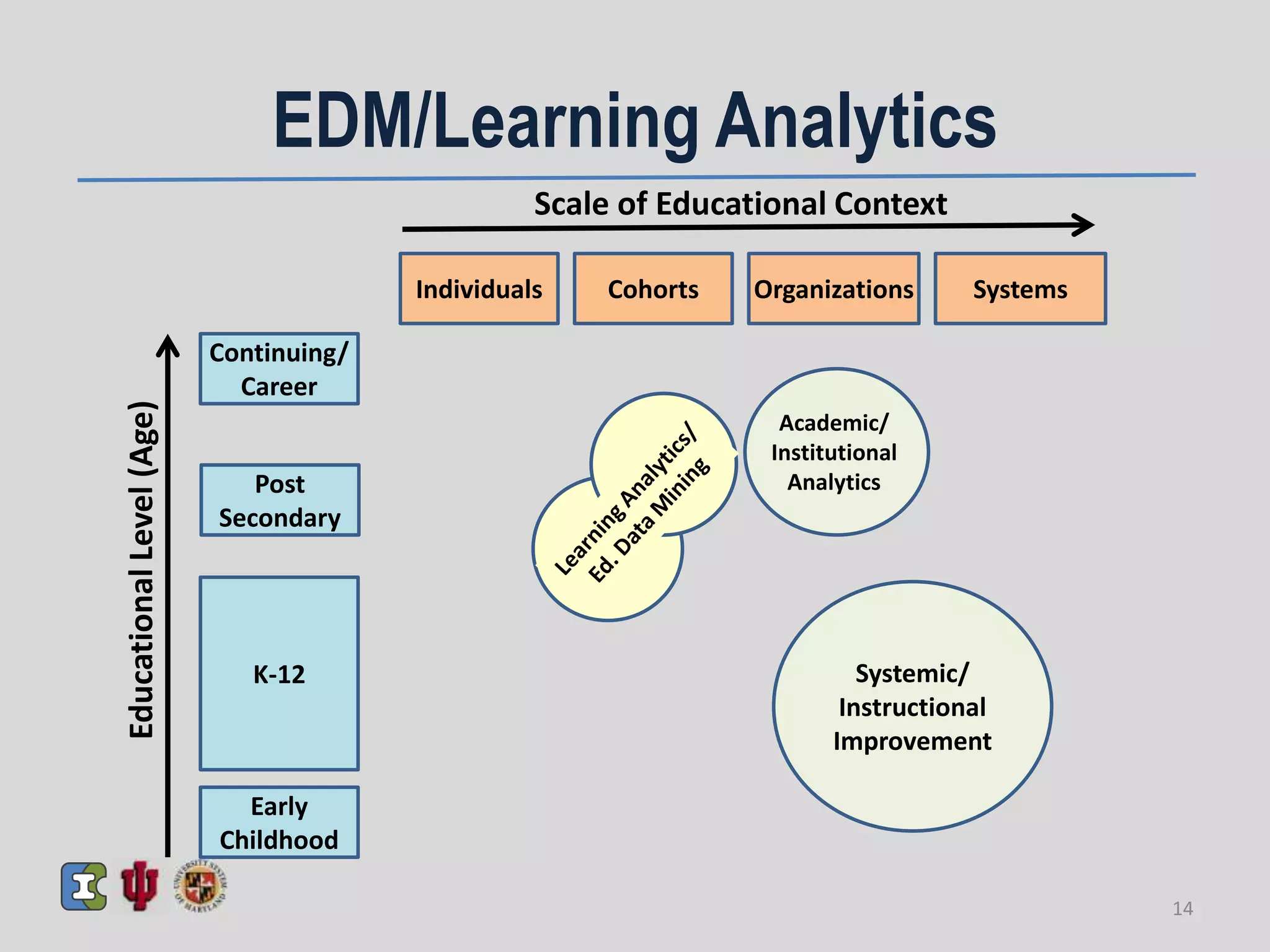

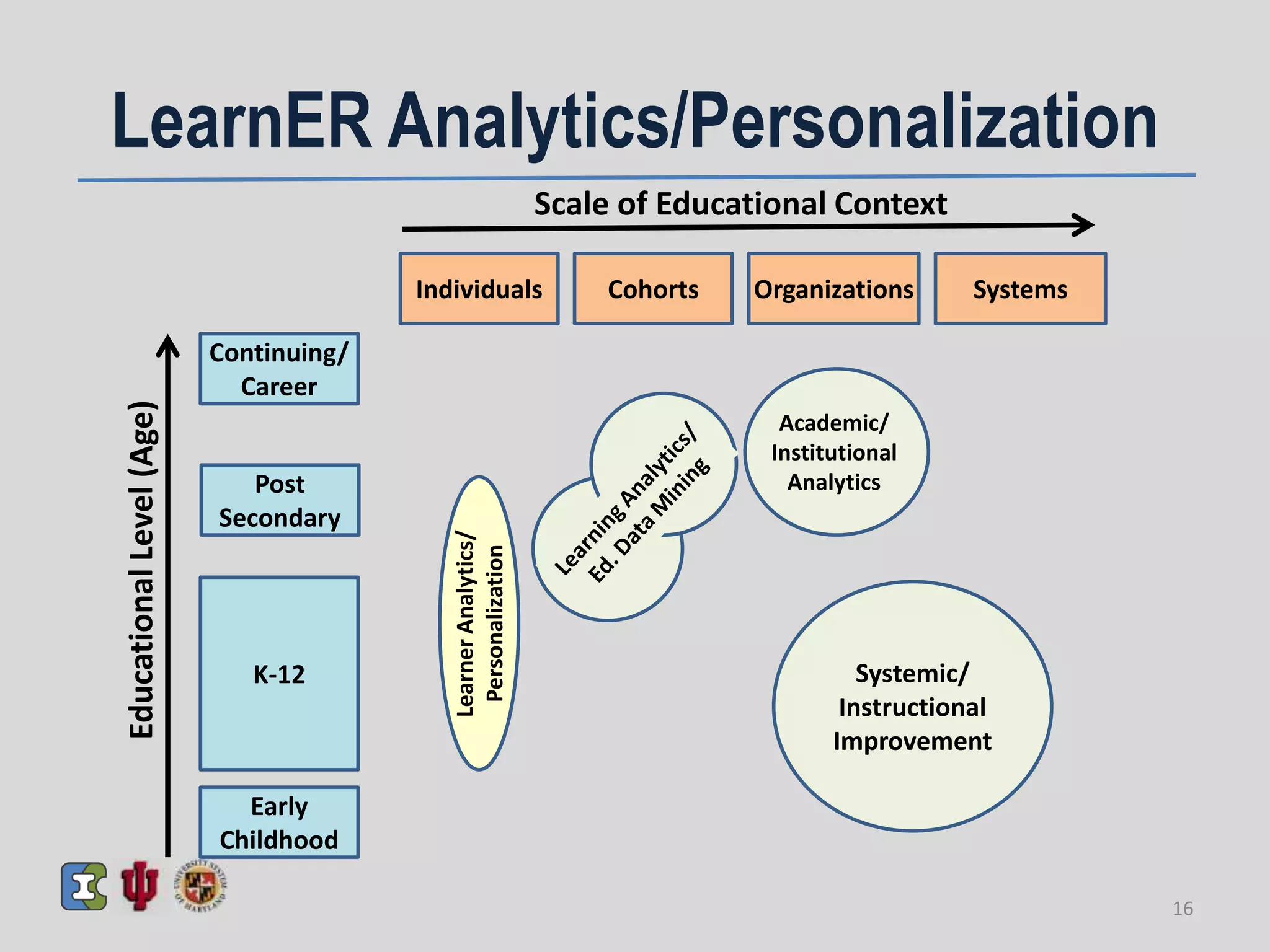

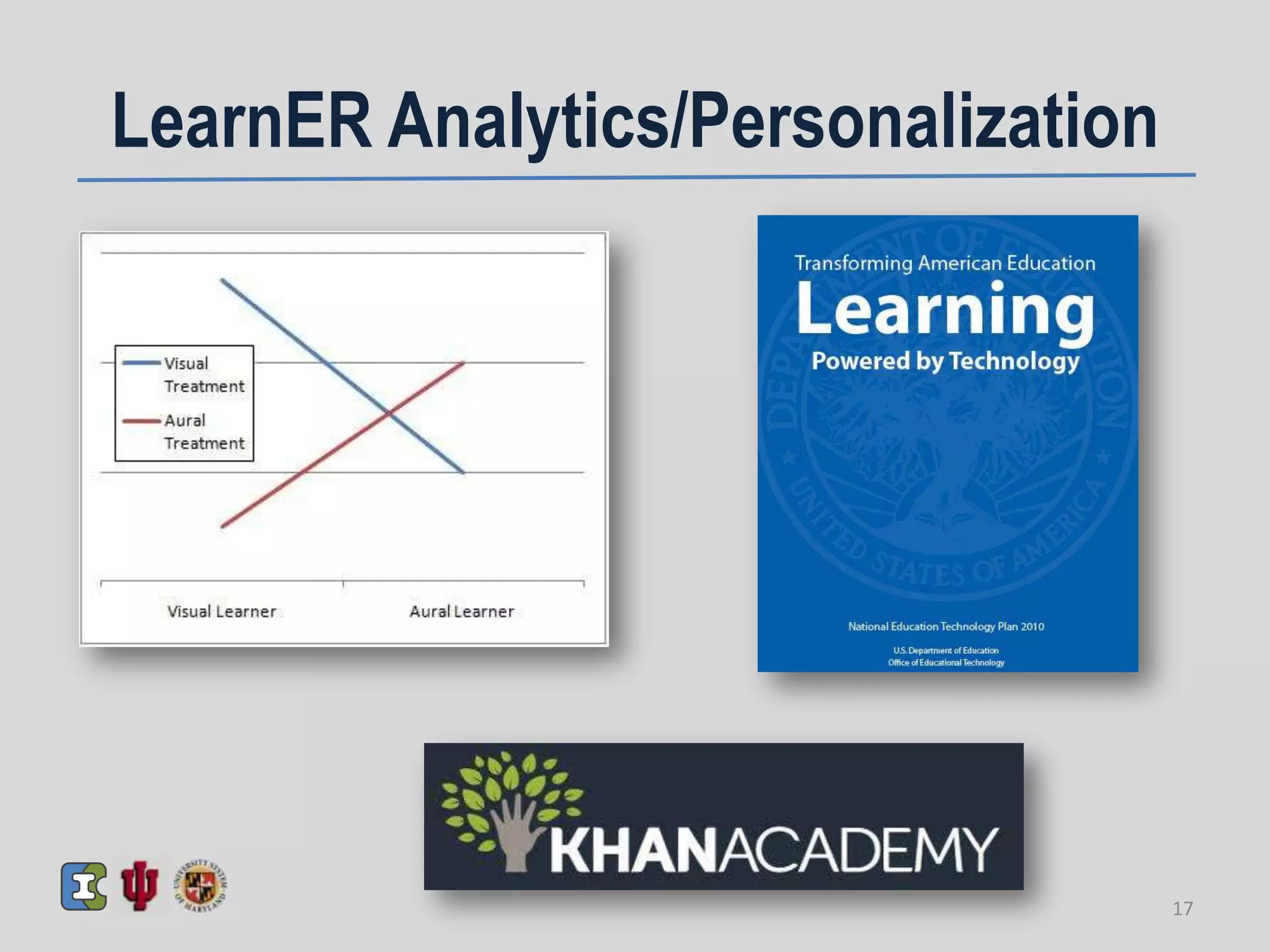

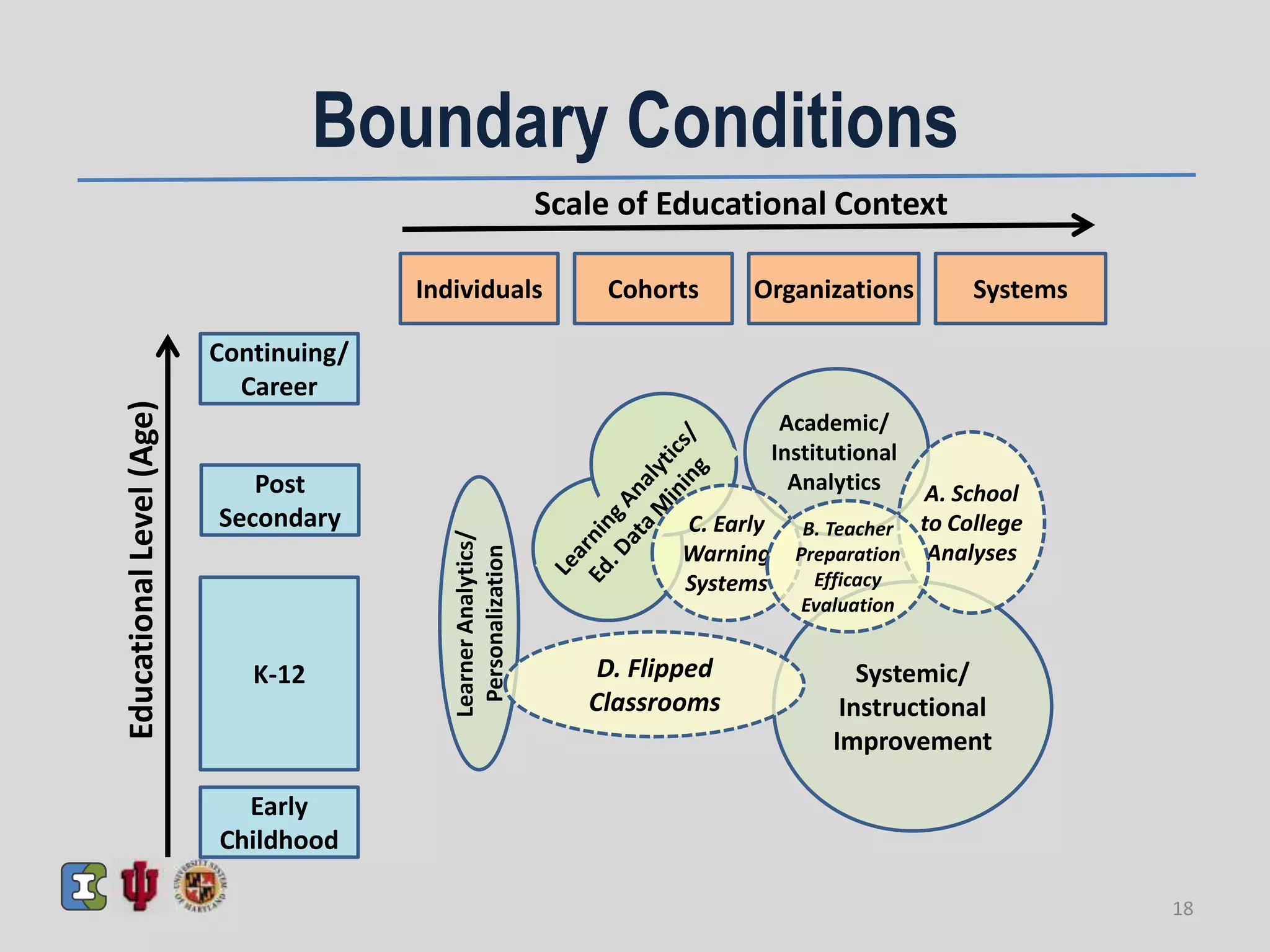

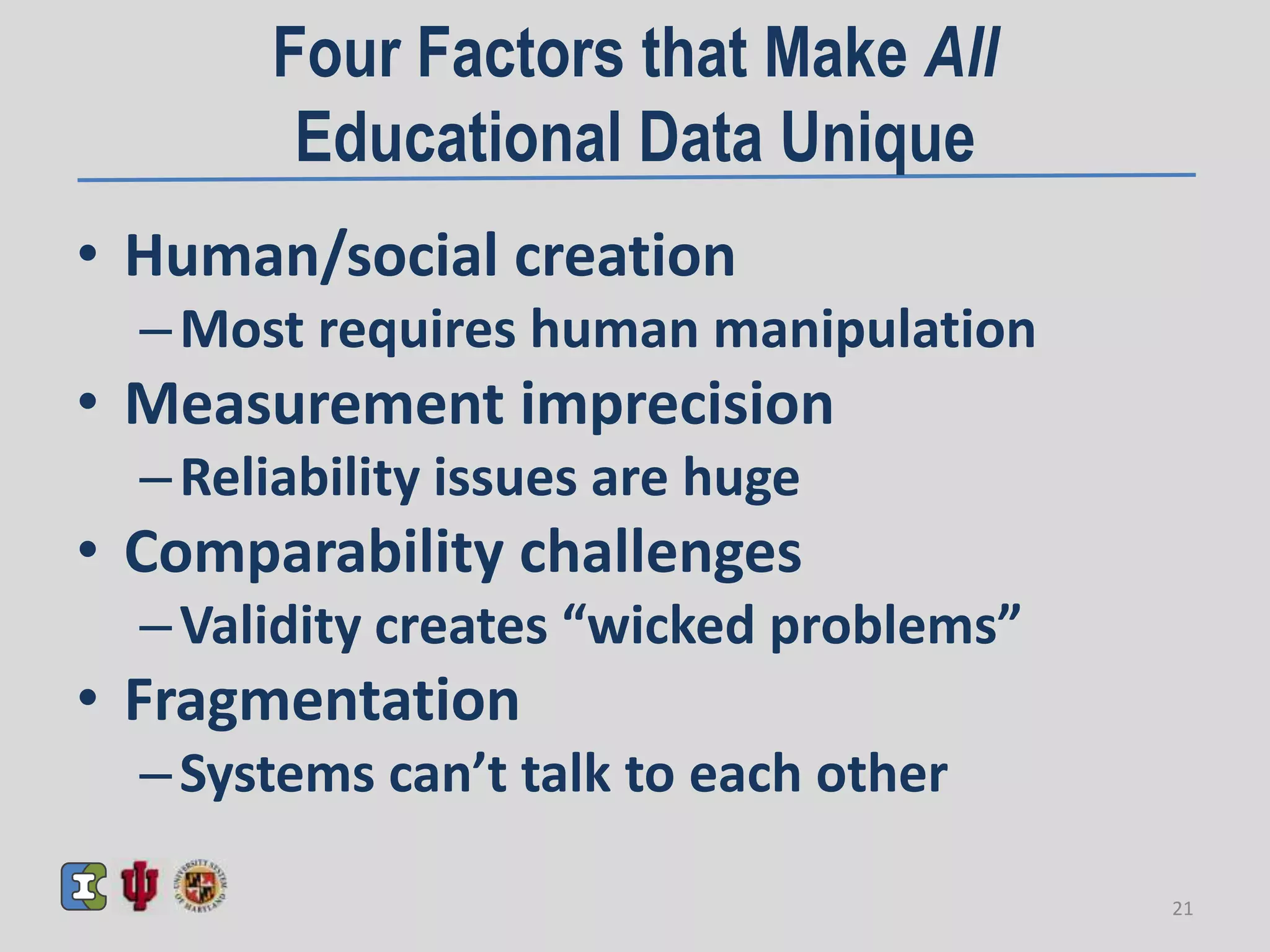

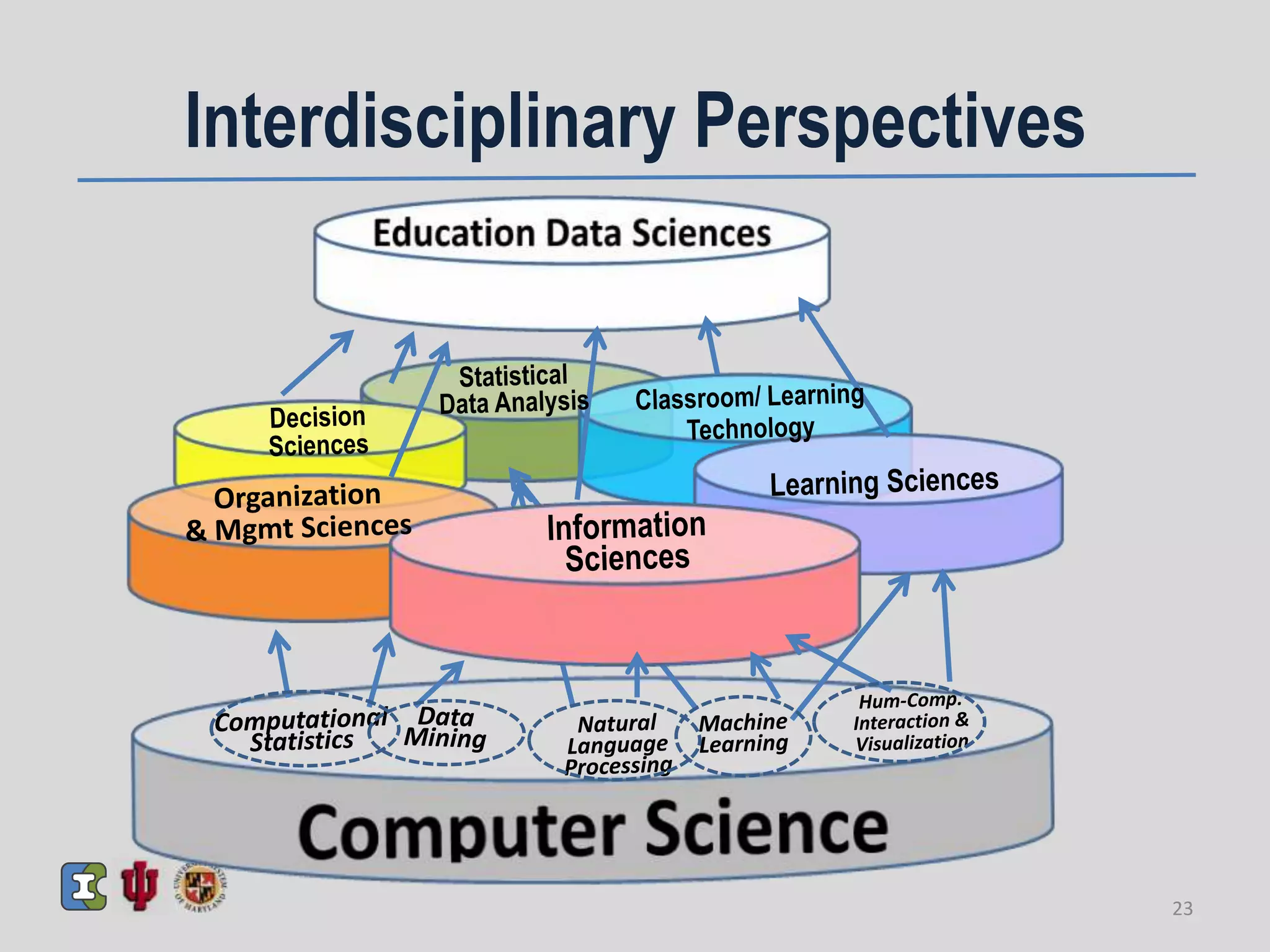

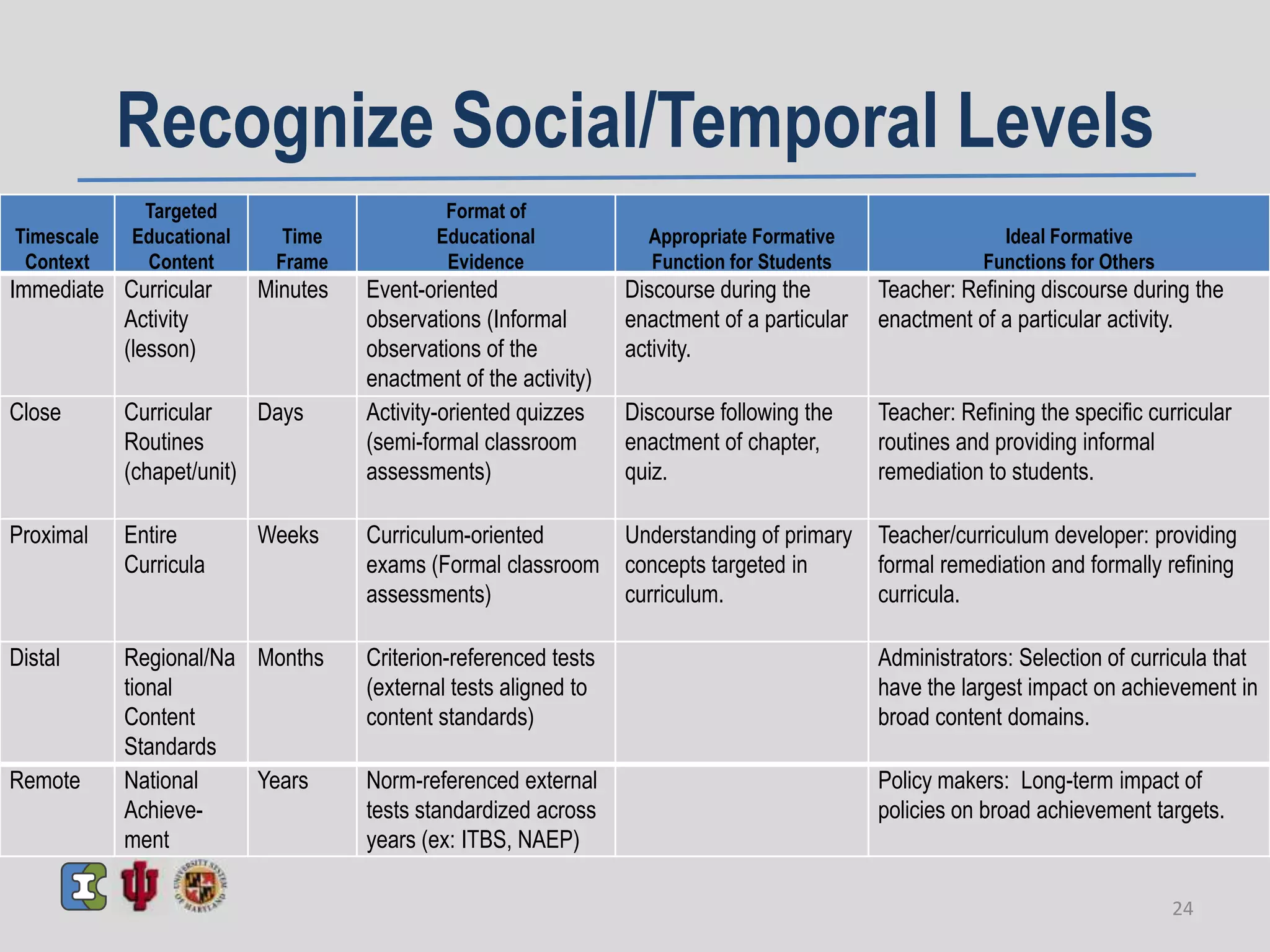

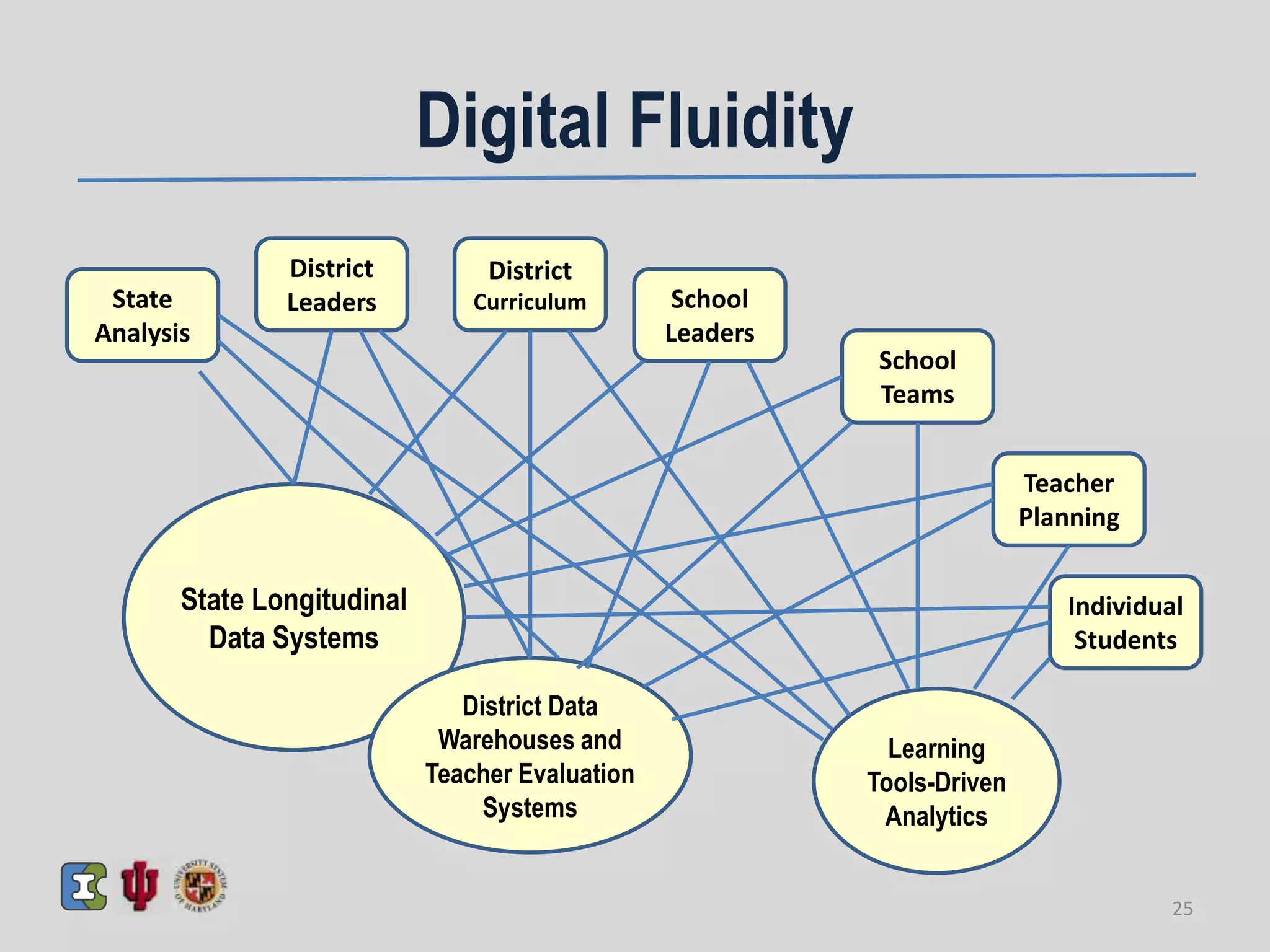

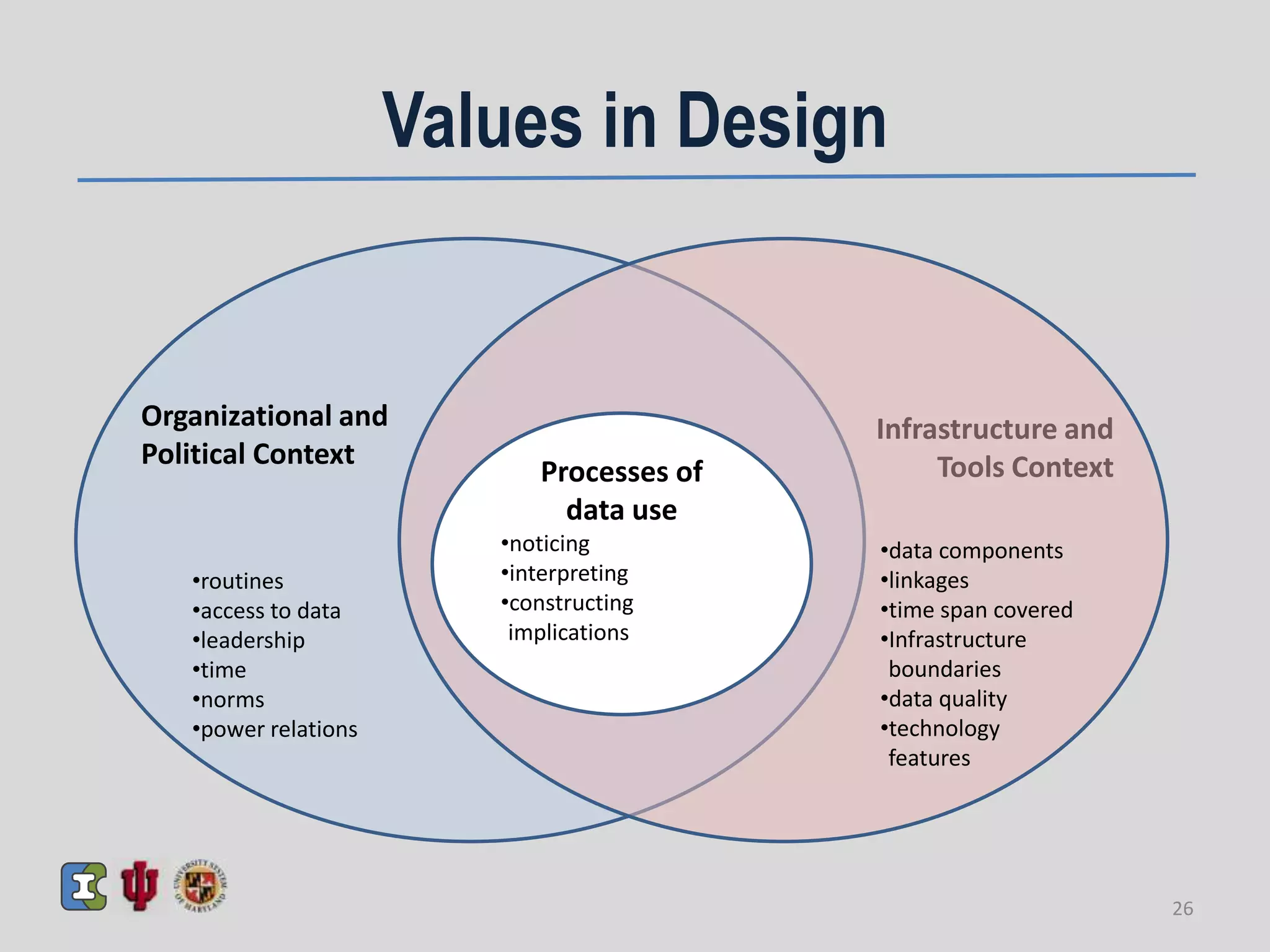

The document discusses the emerging field of Education Data Sciences (EDS). It outlines four main ideas:

1. A sociotechnical paradigm shift in how data is conceived, from external to internal/contextual.
2. The notion of EDS, which includes academic analytics, educational data mining/learning analytics, learner analytics/personalization, and systemic instructional improvement.
3. Common features across these communities, including rapid change, boundary issues, disruption to evidence practices, and ethics/privacy.
4. A proposed framework for EDS that recognizes different social/temporal levels, digital fluidity across contexts, and values in design.

The field of EDS analyzes learning, organizations, and systems through an interdisciplinary lens.