This paper examines a test conducted to measure the modulation transfer function (MTF) of a KAF-0402 CCD image sensor. Two methods were used: a sine pattern method, which images targets of known spatial frequencies, and a slant edge method. The slant edge results followed the expected trends more closely for the overall system MTF, but isolating the sensor MTF remained difficult because of limitations in the experimental setup and possible misalignments. The paper concludes that measuring MTF with limited resources is challenging and requires near-perfect optical alignment between the sensor, lens, and target.
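In a sine pattern measurement, the MTF at each target frequency is commonly estimated as the modulation measured in the image divided by the target's own modulation at that frequency. The sketch below is illustrative only: it assumes a dark-subtracted intensity profile taken across one frequency patch, a linear sensor response, and a target modulation known from calibration data. The function names `modulation` and `sine_mtf` are hypothetical.

```python
from typing import Sequence


def modulation(profile: Sequence[float]) -> float:
    """Michelson modulation (Imax - Imin) / (Imax + Imin) of a sampled sine profile.

    Assumes the profile is dark-subtracted and the sensor response is linear.
    """
    i_max, i_min = max(profile), min(profile)
    if i_max + i_min <= 0:
        raise ValueError("profile must have positive mean intensity")
    return (i_max - i_min) / (i_max + i_min)


def sine_mtf(profile: Sequence[float], target_modulation: float) -> float:
    """MTF at one spatial frequency: image modulation / known target modulation."""
    return modulation(profile) / target_modulation


# Hypothetical profile across one patch, with an assumed target modulation of 0.5
row = [150.0, 200.0, 150.0, 100.0, 150.0]
print(sine_mtf(row, 0.5))
```

Repeating this for each patch yields MTF samples at the target's discrete frequencies. Taking the raw max/min is sensitive to noise, so averaging several rows or fitting a sinusoid is often preferable in practice.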

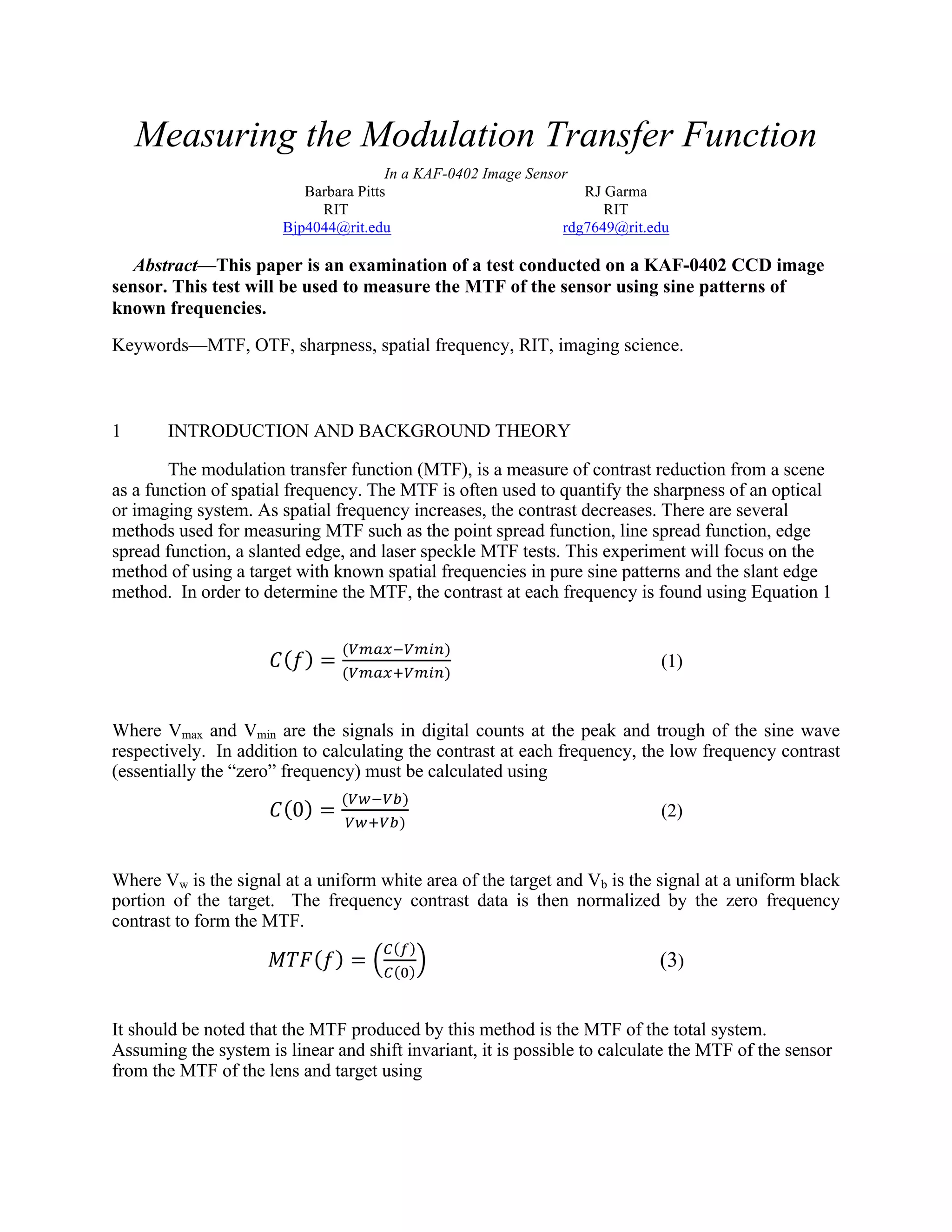

Figure 2: (a) Super-sampled edge spread function, (b) line spread function, (c) modulation transfer function.
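The processing chain in Figure 2 (super-sampled ESF, then LSF, then MTF) can be sketched as follows. This is a minimal sketch under stated assumptions: the ESF has already been projected and binned along the slanted edge (the super-sampling step is not shown), `dx` is its sample spacing in mm, and the edge sits near the middle of the array. The Hann window is an optional choice to suppress noise at the array ends, not necessarily what was done in this report, and `esf_to_mtf` is a hypothetical name.

```python
import numpy as np


def esf_to_mtf(esf, dx):
    """Convert a super-sampled edge spread function into a normalized MTF.

    esf : 1-D array of edge profile samples (assumed already super-sampled)
    dx  : ESF sample spacing in mm (e.g. pixel pitch / oversampling factor)
    Returns (spatial frequencies in cycles/mm, MTF normalized to 1 at zero frequency).
    """
    esf = np.asarray(esf, dtype=float)
    lsf = np.gradient(esf, dx)        # LSF is the derivative of the ESF
    lsf *= np.hanning(lsf.size)       # optional taper; assumes the edge is near the center
    mtf = np.abs(np.fft.rfft(lsf))    # MTF is the magnitude of the LSF's Fourier transform
    if mtf[0] == 0:
        raise ValueError("LSF integrates to zero; check the ESF")
    mtf /= mtf[0]                     # normalize so MTF(0) = 1
    freqs = np.fft.rfftfreq(lsf.size, d=dx)
    return freqs, mtf
```

Because the ESF is super-sampled, the returned frequency axis extends past the sensor's Nyquist frequency; only the portion up to Nyquist is usually reported.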

Assuming square pixels and a fill factor of one, the pixel pitch (the sampling distance between pixels) is taken to equal the pixel width (the physical dimension of the pixel). Under this assumption, the Nyquist frequency is calculated by

$$
f_{\text{Nyquist}}\,[\text{cycles/mm}] = \frac{1}{2p} \tag{5}
$$

where p is the pixel width in millimeters.
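Equation 5 translates directly into code. The sketch below carries the same square-pixel, unit-fill-factor assumption; the function name is hypothetical, and the 9 µm pitch in the usage line is an assumed example value that should be checked against the KAF-0402 datasheet.

```python
def nyquist_frequency(pixel_pitch_mm: float) -> float:
    """Nyquist frequency in cycles/mm for a pixel pitch p in mm (Equation 5).

    Assumes square pixels with a fill factor of one, so pitch equals pixel width.
    """
    if pixel_pitch_mm <= 0:
        raise ValueError("pixel pitch must be positive")
    return 1.0 / (2.0 * pixel_pitch_mm)


print(nyquist_frequency(0.009))  # assumed 9 um pitch: about 55.6 cycles/mm
```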

2 Experimental Procedure

2.1 Experimental Setup

For this experiment, a 90 mm Fuji enlarger lens was used. Because this lens does not mount directly to the CCD camera, it had to be aligned in the optical path separately. The target was a backlit glass sine pattern target containing a range of spatial frequencies. The camera, lens, and target were mounted on an optical rail that allowed adjustment along the z-axis. Using the thin lens equation (Equation 6) with the 90 mm focal length and a 1:1 magnification requirement, the object distance (Do) and image distance (Di) were each found to be 180 mm, or approximately 7 in. An image of the test setup is shown in Figure 3. A green filter was placed between the lens and the sine pattern target, producing narrowband light centered at about 540 nm. In addition, the camera was cooled to minimize the dark current contribution.

$$
\frac{1}{f} = \frac{1}{D_o} + \frac{1}{D_i} \tag{6}
$$
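Combining Equation 6 with the lateral magnification m = Di/Do gives the conjugate distances Do = f(1 + 1/m) and Di = f(1 + m). The sketch below uses a hypothetical function name and treats m as a positive magnitude (the sign inversion of a real image is ignored); with f = 90 mm and m = 1 it reproduces the 180 mm spacing used in the setup.

```python
def conjugate_distances(focal_length_mm: float, magnification: float) -> tuple[float, float]:
    """Object and image distances (Do, Di) in mm from the thin lens equation.

    magnification is the magnitude of m = Di / Do.
    """
    if focal_length_mm <= 0 or magnification <= 0:
        raise ValueError("focal length and magnification must be positive")
    d_o = focal_length_mm * (1.0 + 1.0 / magnification)  # Do = f(1 + 1/m)
    d_i = focal_length_mm * (1.0 + magnification)        # Di = f(1 + m)
    return d_o, d_i


d_o, d_i = conjugate_distances(90.0, 1.0)
print(d_o, d_i, d_o / 25.4)  # 180.0 mm each, about 7.09 in
```

At 1:1 magnification the target's spatial frequencies also map unchanged onto the sensor plane, which simplifies comparing measured frequencies with the Nyquist limit.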