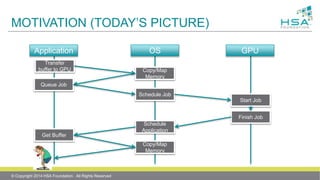

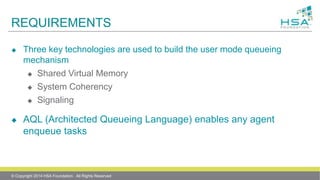

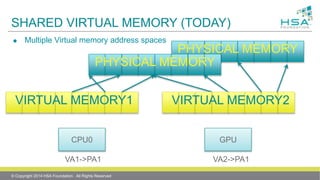

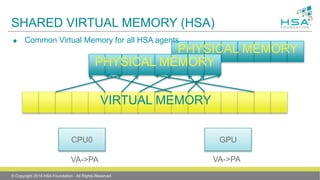

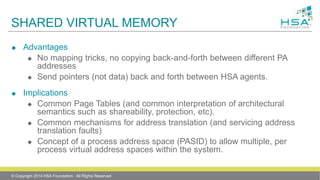

This presentation describes the HSA queuing model, which lets heterogeneous agents such as CPUs and GPUs dispatch work to one another directly. It relies on shared virtual memory, cache coherency, and signaling to enable user-mode queuing. An Architected Queuing Language (AQL) defines standard queue structures, packet formats, and enqueue/dequeue protocols so that any agent can queue work directly to another.

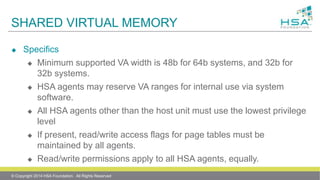

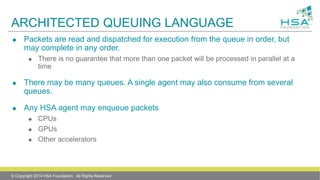

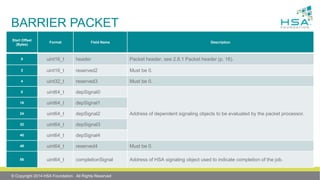

![POTENTIAL MULTI-PRODUCER ALGORITHM

// Allocate packet

uint64_t packetID = hsa_queue_add_write_index_relaxed(q, 1);

// Wait until the queue is no longer full.

uint64_t rdIdx;

do {

rdIdx = hsa_queue_load_read_index_relaxed(q);

} while (packetID >= (rdIdx + q->size));

// calculate index

uint32_t arrayIdx = packetID & (q->size-1);

// copy over the packet, the format field is INVALID

q->baseAddress[arrayIdx] = pkt;

// Update format field with release semantics

q->baseAddress[arrayIdx].hdr.format.store(DISPATCH, std::memory_order_release);

// ring doorbell, with release semantics (could also amortize over multiple packets)

hsa_signal_send_relaxed(q->doorbellSignal, packetID);

© Copyright 2014 HSA Foundation. All Rights Reserved](https://image.slidesharecdn.com/iscafinalpresentationqueuingmodel-140627141606-phpapp02/85/ISCA-final-presentation-Queuing-Model-38-320.jpg)
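The producer slide relies on two pieces of index arithmetic: the full-queue test (`packetID >= rdIdx + q->size`) and the wrap into the ring (`packetID & (q->size-1)`), which only works when the queue size is a power of two. The following is a minimal sketch that isolates both so they can be checked by hand. The helper names `is_power_of_two`, `slot_for`, and `queue_full` are hypothetical and are not part of the HSA runtime.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helpers for illustration only; not HSA runtime API.

// The mask trick below is only valid for power-of-two queue sizes.
constexpr bool is_power_of_two(uint64_t n) {
    return n != 0 && (n & (n - 1)) == 0;
}

// Maps a monotonically increasing packet ID to a slot in the ring.
// Equivalent to packetID % size when size is a power of two.
constexpr uint32_t slot_for(uint64_t packetID, uint32_t size) {
    return static_cast<uint32_t>(packetID & (size - 1));
}

// True while the producer has to keep waiting: the slot that packetID maps to
// still holds a packet the consumer has not retired yet.
constexpr bool queue_full(uint64_t packetID, uint64_t readIndex, uint32_t size) {
    return packetID >= readIndex + size;
}

static_assert(is_power_of_two(8), "queue size must be a power of two");
static_assert(!is_power_of_two(12), "12 is not a valid queue size for masking");
```

With a queue of 8 slots, packet IDs 0 through 7 fit while the read index is 0. Packet ID 8 maps back to slot 0 and has to wait until the consumer advances the read index to at least 1.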

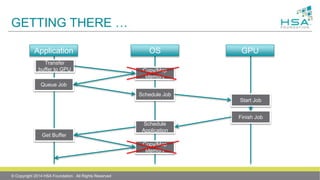

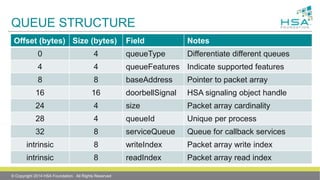

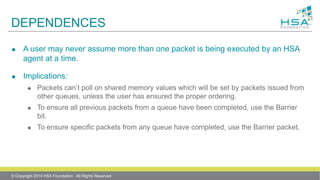

![POTENTIAL CONSUMER ALGORITHM

// Get location of next packet

uint64_t readIndex = hsa_queue_load_read_index_relaxed(q);

// calculate the index

uint32_t arrayIdx = readIndex & (q->size-1);

// spin while empty (could also perform low-power wait on doorbell)

while (INVALID == q->baseAddress[arrayIdx].hdr.format) { }

// copy over the packet

pkt = q->baseAddress[arrayIdx];

// set the format field to invalid

q->baseAddress[arrayIdx].hdr.format.store(INVALID, std::memory_order_relaxed);

// Update the readIndex using HSA intrinsic

hsa_queue_store_read_index_relaxed(q, readIndex+1);

// Now process <pkt>!

](https://image.slidesharecdn.com/iscafinalpresentationqueuingmodel-140627141606-phpapp02/85/ISCA-final-presentation-Queuing-Model-39-320.jpg)
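To see the producer and consumer protocols work together, here is a minimal, self-contained sketch in portable C++ that replaces the HSA intrinsics with `std::atomic` operations. Everything in it is an assumption made for illustration: the `Packet`, `Queue`, `enqueue`, `dequeue`, and `run_demo` names are hypothetical, the packet carries a single `payload` word instead of an AQL body, and the doorbell signal is left out (the consumer simply spins). The read index also uses acquire/release ordering rather than the relaxed intrinsics on the slides, so that the sketch is correct under the standard C++ memory model.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <thread>
#include <vector>

enum Format : uint16_t { INVALID = 0, DISPATCH = 1 };  // illustrative values

struct Packet {
    std::atomic<uint16_t> format{INVALID};
    uint64_t payload = 0;  // stand-in for the real packet body
};

struct Queue {
    explicit Queue(uint32_t n) : size(n), slots(n) {}  // n must be a power of two
    const uint32_t size;
    std::vector<Packet> slots;
    std::atomic<uint64_t> writeIndex{0};
    std::atomic<uint64_t> readIndex{0};
};

// Multi-producer enqueue, following the steps on the producer slide.
void enqueue(Queue& q, uint64_t payload) {
    // Allocate a packet ID; each producer gets a unique one.
    uint64_t id = q.writeIndex.fetch_add(1, std::memory_order_relaxed);
    // Wait until the slot for this ID has been retired by the consumer.
    while (id >= q.readIndex.load(std::memory_order_acquire) + q.size) {
        std::this_thread::yield();
    }
    Packet& slot = q.slots[id & (q.size - 1)];
    slot.payload = payload;                                  // body first
    slot.format.store(DISPATCH, std::memory_order_release);  // then publish
}

// Single-consumer dequeue, following the steps on the consumer slide.
uint64_t dequeue(Queue& q) {
    uint64_t rd = q.readIndex.load(std::memory_order_relaxed);
    Packet& slot = q.slots[rd & (q.size - 1)];
    // Spin while the slot is empty; acquire pairs with the producer's release.
    while (slot.format.load(std::memory_order_acquire) == INVALID) {
        std::this_thread::yield();
    }
    uint64_t payload = slot.payload;                         // copy the packet
    slot.format.store(INVALID, std::memory_order_relaxed);   // mark slot free
    q.readIndex.store(rd + 1, std::memory_order_release);    // retire the slot
    return payload;
}

// Starts `producers` threads that each enqueue 1..perProducer, and returns the
// sum of everything the single consumer (the calling thread) dequeues.
uint64_t run_demo(uint32_t queueSize, int producers, uint64_t perProducer) {
    Queue q(queueSize);
    std::vector<std::thread> threads;
    for (int p = 0; p < producers; ++p) {
        threads.emplace_back([&q, perProducer] {
            for (uint64_t v = 1; v <= perProducer; ++v) enqueue(q, v);
        });
    }
    const uint64_t total = static_cast<uint64_t>(producers) * perProducer;
    uint64_t sum = 0;
    for (uint64_t i = 0; i < total; ++i) sum += dequeue(q);
    for (auto& t : threads) t.join();
    return sum;
}
```

Calling `run_demo(8, 4, 1000)` pushes 4000 packets through an 8-slot ring, so producers regularly hit the full-queue wait. The format field is what hands a slot from producer to consumer, and the read index is what hands it back.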

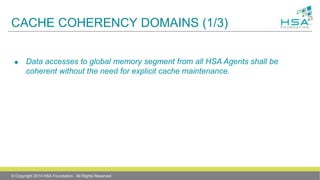

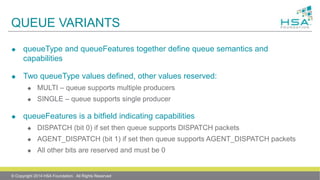

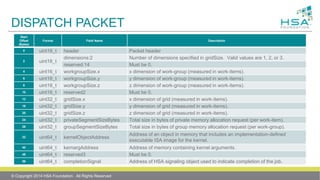

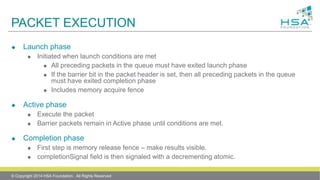

![AGENT DISPATCH PACKET](https://image.slidesharecdn.com/iscafinalpresentationqueuingmodel-140627141606-phpapp02/85/ISCA-final-presentation-Queuing-Model-44-320.jpg)

| Start Offset (Bytes) | Format | Field Name | Description |
| --- | --- | --- | --- |
| 0 | uint16_t | header | Packet header |
| 2 | uint16_t | type | The function to be performed by the destination Agent. The type value is split into the following ranges: 0x0000:0x3FFF – Vendor specific; 0x4000:0x7FFF – HSA runtime; 0x8000:0xFFFF – User registered function |
| 4 | uint32_t | reserved2 | Must be 0. |
| 8 | uint64_t | returnLocation | Pointer to location to store the function return value in. |
| 16 | uint64_t | arg[0] | 64-bit direct or indirect arguments. |
| 24 | uint64_t | arg[1] | 64-bit direct or indirect arguments. |
| 32 | uint64_t | arg[2] | 64-bit direct or indirect arguments. |
| 40 | uint64_t | arg[3] | 64-bit direct or indirect arguments. |
| 48 | uint64_t | reserved3 | Must be 0. |
| 56 | uint64_t | completionSignal | Address of HSA signaling object used to indicate completion of the job. |
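The Agent Dispatch packet layout above maps naturally onto a 64-byte struct. The sketch below is an illustration, not the HSA runtime's own header: the struct name `AgentDispatchPacket`, the `TypeRange` enum, and the `classify_type` helper are hypothetical. The field names, offsets, and type ranges are taken from the slide. The `static_assert` lines check that the compiler's layout matches the stated byte offsets.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Field names and offsets follow the slide; the struct name is hypothetical.
struct AgentDispatchPacket {
    uint16_t header;            // offset 0:  packet header
    uint16_t type;              // offset 2:  function to be performed
    uint32_t reserved2;         // offset 4:  must be 0
    uint64_t returnLocation;    // offset 8:  where to store the return value
    uint64_t arg[4];            // offset 16: 64-bit direct or indirect arguments
    uint64_t reserved3;         // offset 48: must be 0
    uint64_t completionSignal;  // offset 56: HSA signaling object address
};

static_assert(sizeof(AgentDispatchPacket) == 64, "packet must be 64 bytes");
static_assert(offsetof(AgentDispatchPacket, type) == 2, "type at byte 2");
static_assert(offsetof(AgentDispatchPacket, reserved2) == 4, "reserved2 at byte 4");
static_assert(offsetof(AgentDispatchPacket, returnLocation) == 8, "returnLocation at byte 8");
static_assert(offsetof(AgentDispatchPacket, arg) == 16, "arg[0] at byte 16");
static_assert(offsetof(AgentDispatchPacket, reserved3) == 48, "reserved3 at byte 48");
static_assert(offsetof(AgentDispatchPacket, completionSignal) == 56, "completionSignal at byte 56");

// The three ranges the slide defines for the type field.
enum class TypeRange { VendorSpecific, HsaRuntime, UserRegistered };

// Hypothetical helper that maps a type value to its range.
constexpr TypeRange classify_type(uint16_t type) {
    return type <= 0x3FFF ? TypeRange::VendorSpecific
         : type <= 0x7FFF ? TypeRange::HsaRuntime
                          : TypeRange::UserRegistered;
}
```

Because every field is naturally aligned, no padding or packing attribute is needed on common 64-bit platforms. If a toolchain did lay the struct out differently, the assertions would fail at compile time.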

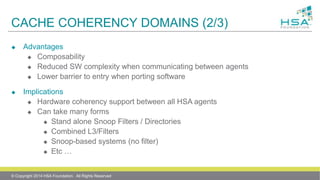

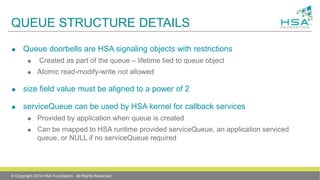

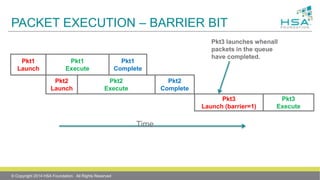

![PUTTING IT ALL TOGETHER (FFT): timeline diagram showing Packet 1 through Packet 6, two Barrier markers, data elements X[0] through X[7], and a Time axis](https://image.slidesharecdn.com/iscafinalpresentationqueuingmodel-140627141606-phpapp02/85/ISCA-final-presentation-Queuing-Model-51-320.jpg)