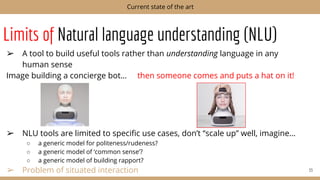

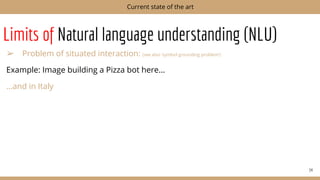

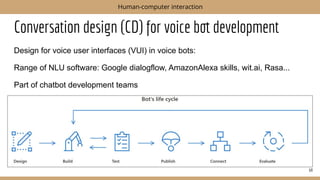

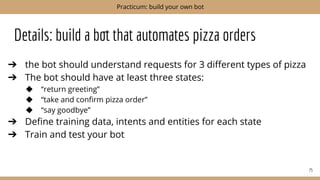

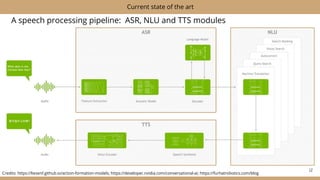

The document introduces conversational AI and discusses its current capabilities and limitations. It defines conversational AI as building machines that can verbally interact with humans. Key points include:

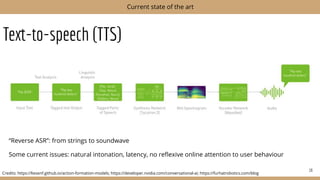

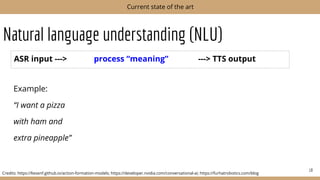

- Speech processing involves modules for speech recognition, natural language understanding, and text-to-speech, but current systems have limited understanding.

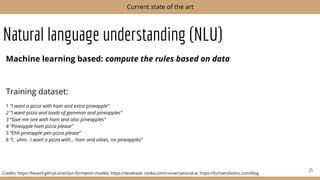

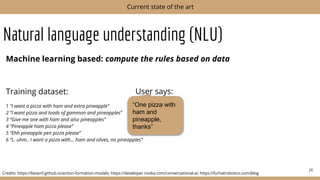

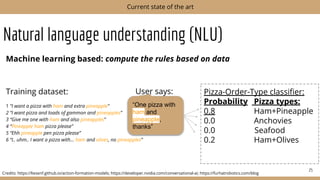

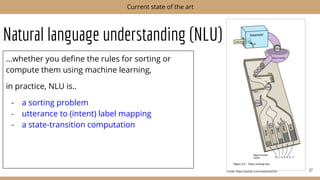

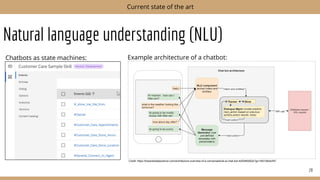

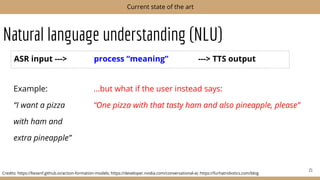

- Natural language understanding involves classifying intents but has difficulty with ambiguity and context.

- While systems can mimic conversations, current AI lacks true understanding of language and cannot genuinely participate in human social interactions.

![Natural language understanding (NLU)

ASR input ---> process “meaning” ---> TTS output

19

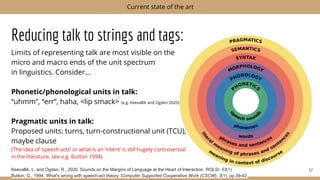

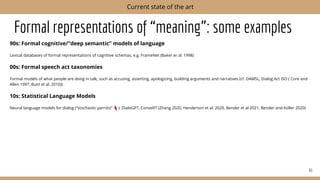

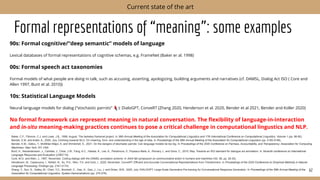

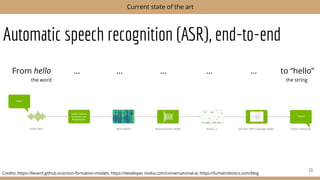

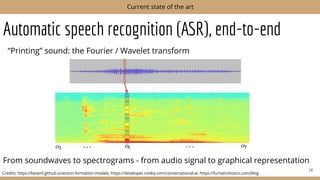

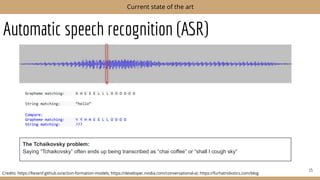

Current state of the art

Credits: https://liesenf.github.io/action-formation-models; https://developer.nvidia.com/conversational-ai; https://furhatrobotics.com/blog

Example:

“I want a pizza

with ham and

extra pineapple”

TakeOrder{

Type= pizza,

Topping: [ham],

Extra: [pineapple]

}](https://image.slidesharecdn.com/intro-lecture-230513072014-66f96425/85/Intro-lecture-pdf-19-320.jpg)
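The mapping from the example utterance to the `TakeOrder` frame can be sketched in Python. This is a minimal illustration, not the lecture's implementation: the `TakeOrder` dataclass, the `ORDER_PATTERN` template, and the `parse_order` helper are all hypothetical names, and the regular expression assumes the exact phrasing shown on the slide.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TakeOrder:
    """Frame for the TakeOrder intent, mirroring the slots on the slide."""
    type: str
    topping: List[str] = field(default_factory=list)
    extra: List[str] = field(default_factory=list)


# Hypothetical template: "I want a <type> with <topping> and extra <extra>".
ORDER_PATTERN = re.compile(
    r"i want an? (?P<type>\w+) with (?P<topping>\w+) and extra (?P<extra>\w+)",
    re.IGNORECASE,
)


def parse_order(utterance: str) -> Optional[TakeOrder]:
    """Return a filled TakeOrder frame, or None if the template does not match."""
    match = ORDER_PATTERN.search(utterance)
    if match is None:
        return None
    return TakeOrder(
        type=match.group("type").lower(),
        topping=[match.group("topping").lower()],
        extra=[match.group("extra").lower()],
    )


frame = parse_order("I want a pizza with ham and extra pineapple")
print(frame)  # TakeOrder(type='pizza', topping=['ham'], extra=['pineapple'])
```

A single fixed template like this only covers one phrasing. Any rewording of the order returns `None`, which is the kind of limited understanding the summary above describes.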

![Natural language understanding (NLU)](https://image.slidesharecdn.com/intro-lecture-230513072014-66f96425/85/Intro-lecture-pdf-20-320.jpg)

ASR input ---> process “meaning” ---> TTS output

Example: “I want a pizza with ham and extra pineapple”

`TakeOrder{ Type= pizza, Topping: [ham], Extra: [pineapple] }`

`if TakeOrder=True: say response[RepeatOrder]`

`else: start_seq[TakeOrderFallback]`

“Got it, your order is one pizza with ham and extra pineapple.”
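The branching on this slide (repeat the order if the `TakeOrder` frame was filled, otherwise start a fallback) could look roughly like the sketch below. The `RESPONSES` table and the `respond` function are assumptions made for illustration. The fallback wording is invented, and the slide's `start_seq[TakeOrderFallback]` is simplified here to returning a single prompt instead of starting a multi-turn sequence.

```python
from typing import Dict, List, Optional, Union

Frame = Dict[str, Union[str, List[str]]]

# Response templates keyed by the names used on the slide.
# The fallback text is an assumption; the slide does not show it.
RESPONSES = {
    "RepeatOrder": "Got it, your order is one {type} with {toppings} and extra {extras}.",
    "TakeOrderFallback": "Sorry, I did not catch your order. What would you like?",
}


def respond(frame: Optional[Frame]) -> str:
    """Repeat the order if a frame was filled, otherwise fall back."""
    if frame is not None:
        return RESPONSES["RepeatOrder"].format(
            type=frame["Type"],
            toppings=" and ".join(frame["Topping"]),
            extras=" and ".join(frame["Extra"]),
        )
    return RESPONSES["TakeOrderFallback"]


order = {"Type": "pizza", "Topping": ["ham"], "Extra": ["pineapple"]}
print(respond(order))  # Got it, your order is one pizza with ham and extra pineapple.
print(respond(None))   # falls back because no frame was recognized
```

The reply is produced by filling a canned template from the slots, so the system can sound conversational without representing anything beyond the frame it was given.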

![Natural language understanding (NLU)](https://image.slidesharecdn.com/intro-lecture-230513072014-66f96425/85/Intro-lecture-pdf-22-320.jpg)

Rule based: define a set of rules to determine pizza type

Keyword spotting:

- “I want a pizza with ham and extra pineapple”
- “One pizza with that tasty ham and also pineapple, please”

NLU processor: [intent][type][topping][topping type 1][topping type 2]
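Keyword spotting over the two example utterances can be sketched as follows. The vocabularies `TYPES` and `TOPPINGS`, the `spot_keywords` function, and the rule that a topping counts as an extra only when directly preceded by the word "extra" are assumptions for illustration; the slide does not specify the actual rules.

```python
import re
from typing import Dict, List, Optional, Union

# Hypothetical keyword vocabularies for the pizza domain.
TYPES = {"pizza"}
TOPPINGS = {"ham", "pineapple"}

Frame = Dict[str, Union[Optional[str], List[str]]]


def spot_keywords(utterance: str) -> Frame:
    """Fill intent, type, and topping slots from known keywords, ignoring all other words."""
    tokens = re.findall(r"[a-z]+", utterance.lower())
    frame: Frame = {"intent": None, "type": None, "topping": [], "extra": []}
    for i, token in enumerate(tokens):
        if token in TYPES:
            frame["intent"] = "TakeOrder"
            frame["type"] = token
        elif token in TOPPINGS:
            # Assumed rule: a topping is an extra only if "extra" directly precedes it.
            slot = "extra" if i > 0 and tokens[i - 1] == "extra" else "topping"
            frame[slot].append(token)
    return frame


first = spot_keywords("I want a pizza with ham and extra pineapple")
second = spot_keywords("One pizza with that tasty ham and also pineapple, please")
print(first)
print(second)
```

Both phrasings yield a `TakeOrder` frame for a pizza, but only the first fills the `extra` slot. In the second, pineapple lands in the regular topping list, because the spotter matches surface words and has no model of what the speaker meant.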