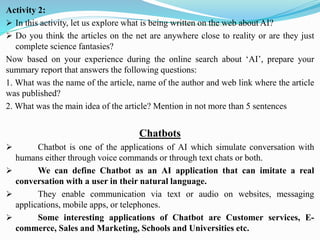

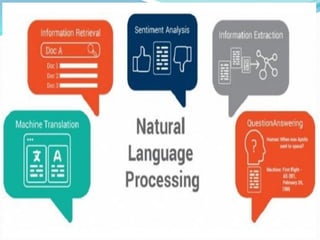

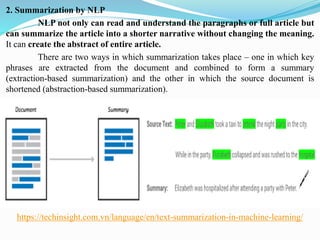

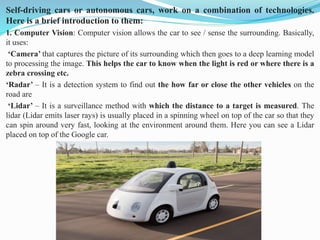

The document discusses various applications of artificial intelligence (AI), including chatbots, computer vision, weather predictions, and self-driving cars. It then focuses on chatbots, explaining that they are powered by natural language processing (NLP) to understand human language. The document outlines different types of chatbots, including rule-based and machine learning-based chatbots. It provides examples of how NLP is used for tasks like text summarization, information extraction, and sentiment analysis. NLP allows machines to understand human language in both written and spoken forms.

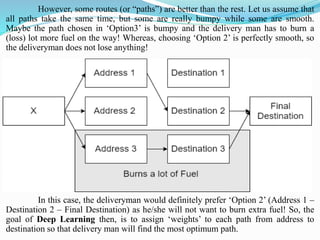

![Activity 1:

➢ Let us do a small exercise on how AI is perceived by our society.

➢ Do you have any idea what people think about AI? Do an online image search for the term “AI”.

➢ The results of your search would help you get an idea about how AI is perceived.

[Note: If you are using Google search, choose “Images” instead of “All”.]

➢ Based on the outcome of your web search, create a short story on “People’s Perception of AI”.](https://image.slidesharecdn.com/artificialintelligenceunit-2-230814123743-88e3b8e4/85/Artificial-Intelligence-Unit-2-pdf-3-320.jpg)

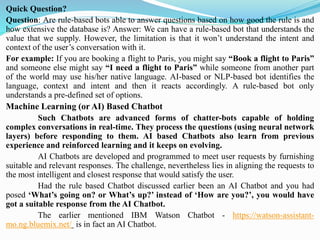

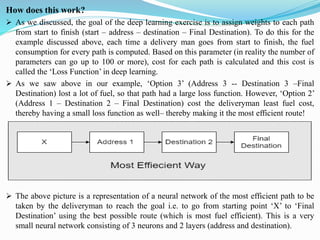

![Types of Chatbots

Chatbots can broadly be divided into two types:

1. Rule-based Chatbot

2. Machine Learning (or AI) based Chatbot

1. Rule-Based Chatbot

This is the simpler form of chatbot, which follows a set of predefined rules when responding to a user’s questions. For example, a chatbot installed at a school reception area can retrieve data from the school’s archive to answer queries on the school fee structure, courses offered, pass percentage, etc.

A rule-based chatbot is suited to simple conversations and cannot handle complex ones.

For example, let us create the rule below and train the chatbot:

bot.train([
    'How are you?',
    'I am good.',
    'That is good to hear.',
    'Thank you',
    'You are welcome.',
])

After training, if you ask the chatbot “How are you?”, you would get the response “I am good.” But if you ask “What’s going on?” or “What’s up?”, the rule-based chatbot won’t be able to answer, since it has been trained to handle only a fixed set of questions.](https://image.slidesharecdn.com/artificialintelligenceunit-2-230814123743-88e3b8e4/85/Artificial-Intelligence-Unit-2-pdf-7-320.jpg)
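The limitation described on this slide can be sketched in a few lines of plain Python. This is a minimal illustration, not the library used on the slide: the names `RULES`, `FALLBACK`, `normalize`, and `reply` are hypothetical, and the "rules" are just exact-match lookups in a dictionary.

```python
# Hypothetical rule table: each known question maps to one fixed reply.
RULES = {
    "how are you?": "I am good.",
    "thank you": "You are welcome.",
}

# Returned whenever no rule matches the user's input.
FALLBACK = "Sorry, I don't understand that question."


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations still match."""
    return " ".join(text.lower().split())


def reply(question: str) -> str:
    """Return the predefined answer, or the fallback if no rule matches."""
    return RULES.get(normalize(question), FALLBACK)


print(reply("How are you?"))  # matches a rule
print(reply("What's up?"))    # no rule, so the fallback is returned
```

Because the lookup is an exact match on the normalized text, a rephrased question such as "What's up?" falls through to the fallback, which is the behaviour the slide attributes to rule-based chatbots.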

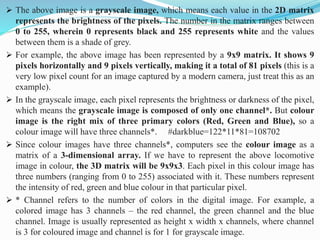

![AI and Society

➢ Business consultant Ronald van Loon asserts, “The role of Artificial Intelligence devices in augmenting humans and in achieving tasks that were previously considered unachievable is just amazing.”

➢ With the world progressing towards an age of unlimited innovations and unhindered progress, we can expect that AI will have a greater role in actually serving us for the better.

➢ Below are a number of ways pundits expect AI to benefit society.

In the Workplace:

➢ Many futurists fear AI will make humans redundant, dramatically increasing unemployment and causing social unrest.

➢ Many other futurists believe AI and other Information Age technologies will create more jobs than they eliminate. Regardless of which viewpoint is correct, there will be job displacements requiring retraining and reskilling.

➢ Beyond AI’s impact on employment, Peter Daisyme, co-founder of Hostt, asserts AI will make the workplace safer. He explains, “Many jobs, despite a focus on health and safety, still involve a considerable amount of risk.

➢ Risks involved for employees of U.S. factories can be alleviated by the use of the Industrial Internet of Things and predictive maintenance.

➢ The role of AI and cognitive computing [involves] improving the link between maintenance and workplace safety.

➢ These technologies address issues before they put factory workers at risk. In addition, AI can take on more roles within the factory, eliminating the need for humans to work in potentially dangerous areas.”

➢ AI can also help relieve workers from having to perform tedious jobs, freeing them to perform more interesting and satisfying work.](https://image.slidesharecdn.com/artificialintelligenceunit-2-230814123743-88e3b8e4/85/Artificial-Intelligence-Unit-2-pdf-97-320.jpg)