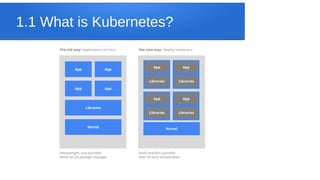

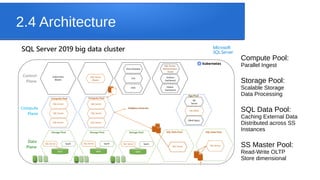

SQL Server Big Data Clusters support data exploration and analysis by combining SQL Server, Spark, and HDFS on Kubernetes. Key features include deployment on any Kubernetes environment, management services for monitoring and high availability, and PolyBase for querying external data stores with SQL. The architecture consists of compute, storage, and SQL pools distributed across SQL Server instances that Kubernetes manages. Azure Data Studio provides tools for working with both relational and HDFS data on the clusters.
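To make the PolyBase point concrete, the following is a minimal sketch of how HDFS files might be exposed to SQL queries through an external table. It only builds the T-SQL text and does not connect to a cluster. The helper `external_table_ddl`, the table and column names, and the HDFS path are hypothetical; the data source name `SqlStoragePool` and the file format name `csv_file` are assumed to have been created on the cluster beforehand.

```python
def external_table_ddl(table, columns, hdfs_path,
                       data_source="SqlStoragePool", file_format="csv_file"):
    """Return T-SQL that maps a directory of files in HDFS to an external table.

    Assumptions: `data_source` and `file_format` already exist on the
    SQL Server instance; all names here are illustrative only.
    """
    # One "[name] TYPE" entry per column, joined across lines.
    cols = ",\n    ".join(f"[{name}] {sql_type}" for name, sql_type in columns)
    return (
        f"CREATE EXTERNAL TABLE [{table}] (\n"
        f"    {cols}\n"
        f")\n"
        f"WITH (\n"
        f"    DATA_SOURCE = {data_source},\n"
        f"    LOCATION = '{hdfs_path}',\n"
        f"    FILE_FORMAT = {file_format}\n"
        f");"
    )


# Hypothetical clickstream data stored as CSV files under /clickstream_data.
ddl = external_table_ddl(
    "web_clickstreams_hdfs",
    [("wcs_user_sk", "BIGINT"), ("wcs_click_date_sk", "BIGINT")],
    "/clickstream_data",
)
print(ddl)
```

Once a statement like this has been run, for example from Azure Data Studio, the external table could be queried and joined with ordinary relational tables using standard `SELECT` syntax.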