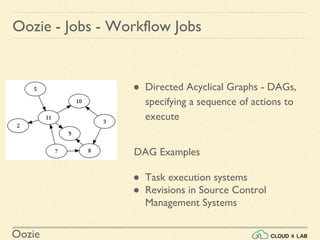

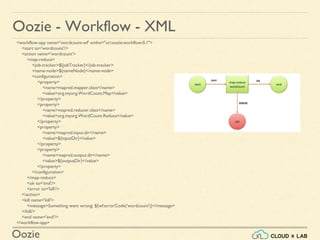

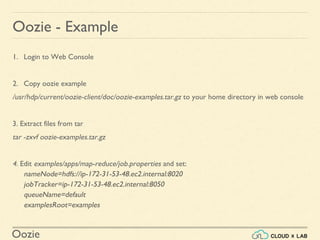

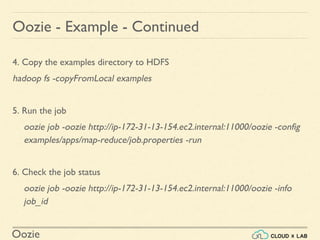

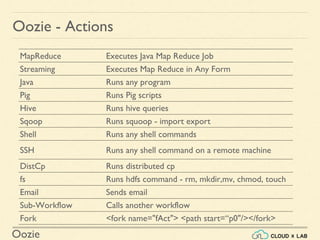

Oozie is a Java web application that schedules Apache Hadoop jobs. It integrates with the rest of the Hadoop ecosystem and supports several job types, including MapReduce, Pig, and Hive. A workflow job runs a set of actions arranged as a directed acyclic graph (DAG), and a coordinator job runs recurring workflow jobs triggered by time and data availability. The document also provides step-by-step examples for running MapReduce, Sqoop, and Hive jobs.
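
To make the directed-acyclic-graph idea concrete, here is a minimal Python sketch. It is not Oozie's API (real Oozie workflows are defined in XML and submitted to the Oozie server); it only illustrates how a dependency graph determines a valid run order, and how a coordinator-style trigger might combine a time condition with a data-availability condition. The action names, the `execution_order` helper, and the `should_trigger` helper are all hypothetical.

```python
from datetime import datetime
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow: each action maps to the set of actions it depends on.
workflow = {
    "sqoop-import": set(),
    "hive-load": {"sqoop-import"},
    "mapreduce-aggregate": {"hive-load"},
    "pig-report": {"hive-load"},
    "end": {"mapreduce-aggregate", "pig-report"},
}


def execution_order(dag):
    """Return one valid order in which the actions could run.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle,
    which mirrors the requirement that a workflow be acyclic.
    """
    return list(TopologicalSorter(dag).static_order())


def should_trigger(now, next_run, data_available):
    """Coordinator-style check (illustrative only): run when the scheduled
    time has arrived and the input data is present."""
    return now >= next_run and data_available


order = execution_order(workflow)
print(order)

ready = should_trigger(
    now=datetime(2024, 1, 2, 0, 5),
    next_run=datetime(2024, 1, 2, 0, 0),
    data_available=True,
)
print(ready)
```

The two middle actions have no dependency on each other, so either may come first in the returned order; only the constraints encoded in the graph are guaranteed.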