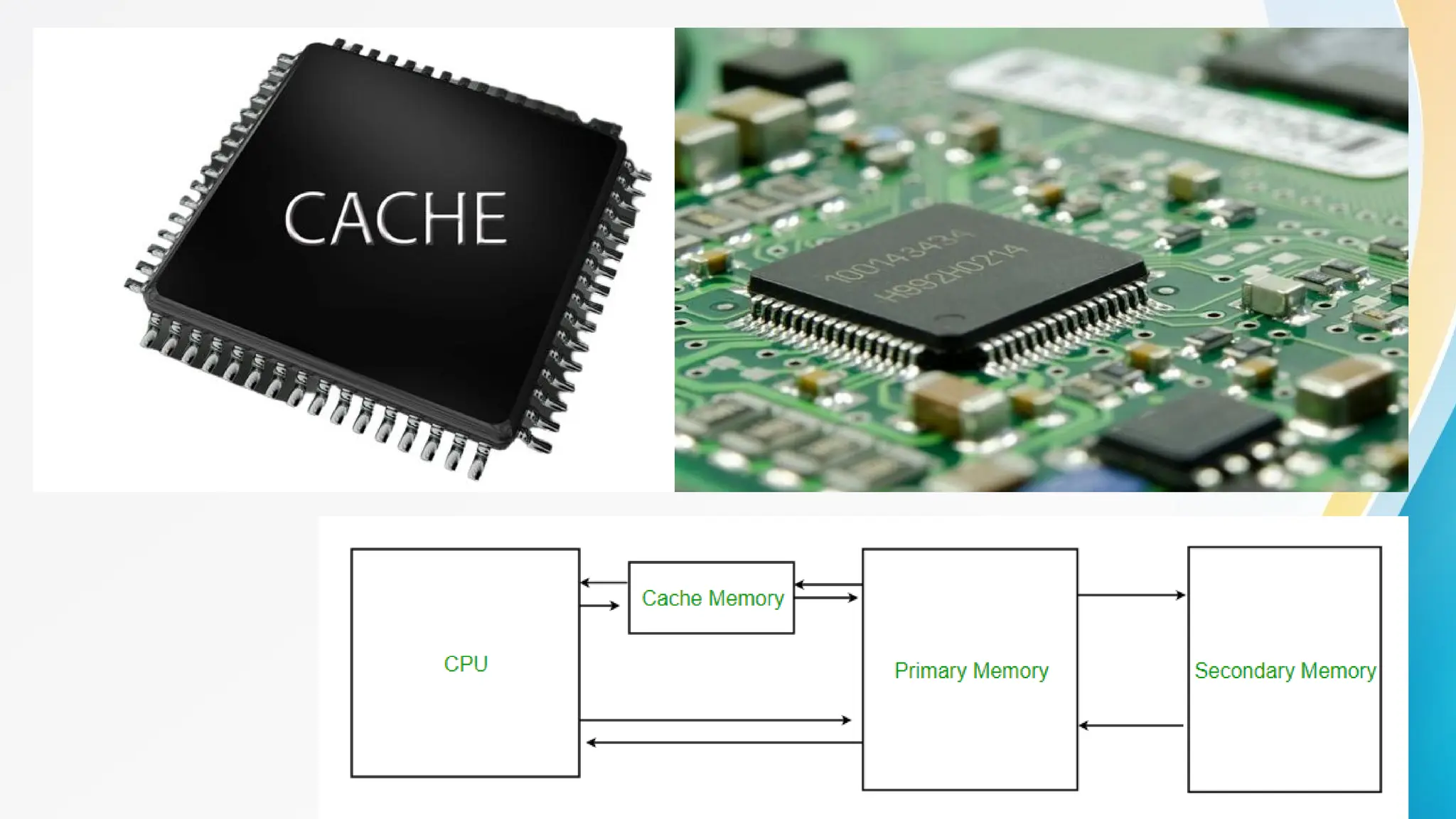

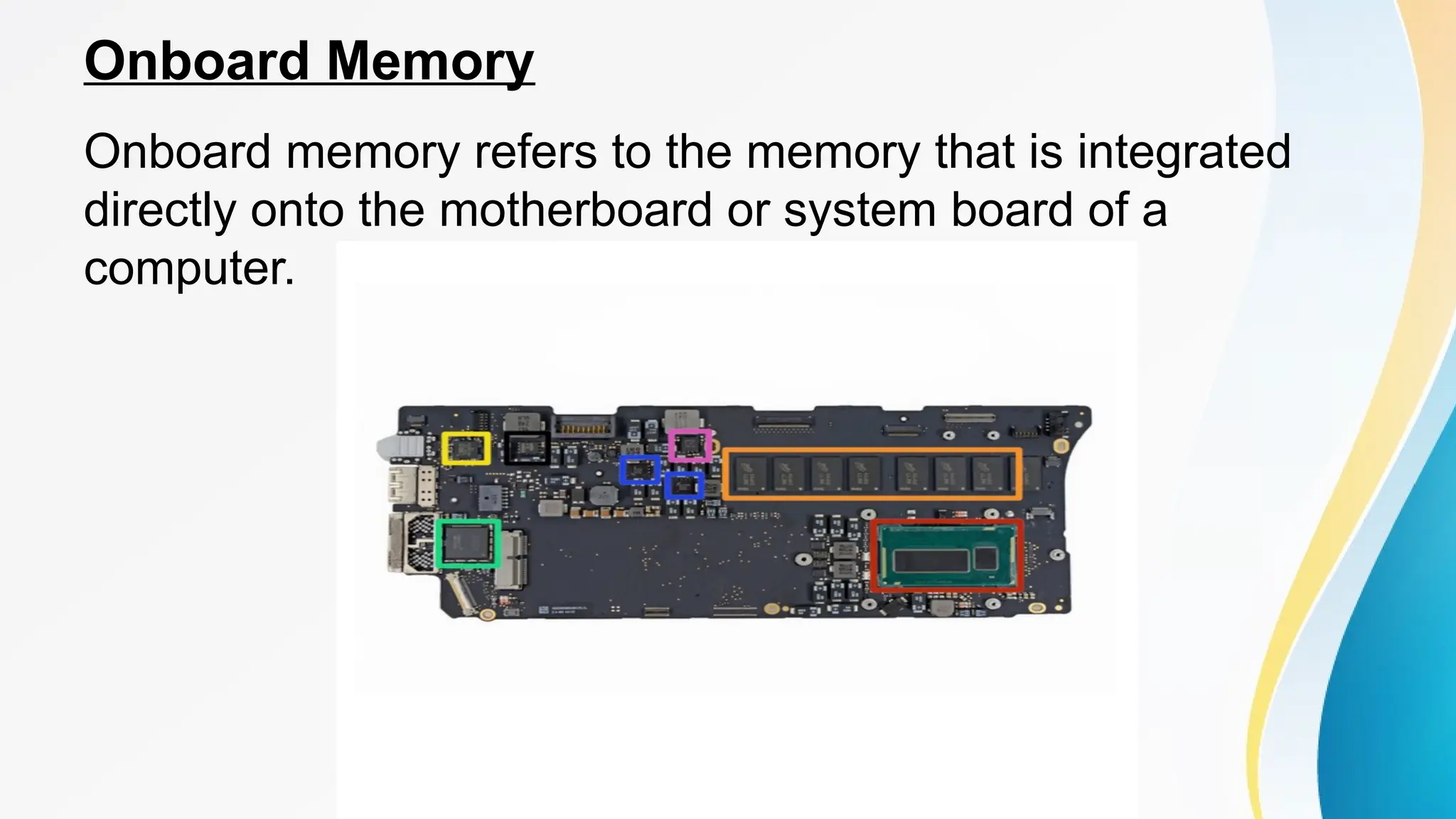

The document gives an overview of cache memory: its characteristics, types, and hierarchy, how it operates, and its advantages and disadvantages. It also discusses cache coherence, including the protocols and techniques that maintain consistency in multi-core systems, and covers cache hits and misses, their classifications, and the factors that affect them. Finally, it covers memory types, access methods, and the principles underlying cache memory management.