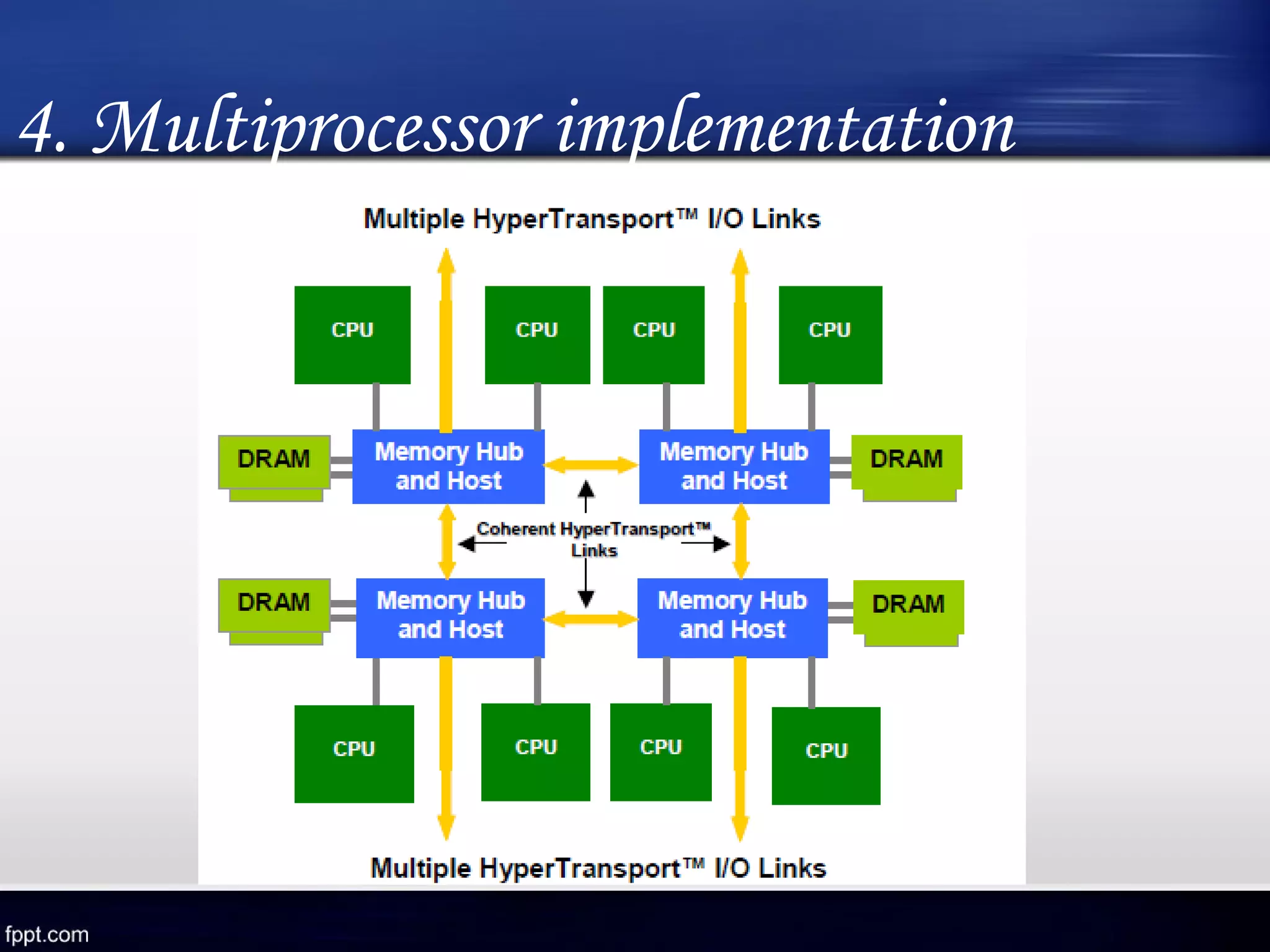

The document discusses HyperTransport technology, a high-bandwidth I/O architecture developed by AMD to improve system performance and simplify system design. It highlights key features, including low latency, high bandwidth, and flexible CPU-to-I/O communication, while maintaining compatibility with legacy systems. The architecture is organized into five layers and addresses several limitations of traditional bus systems, offering a solution to the I/O bandwidth bottleneck.