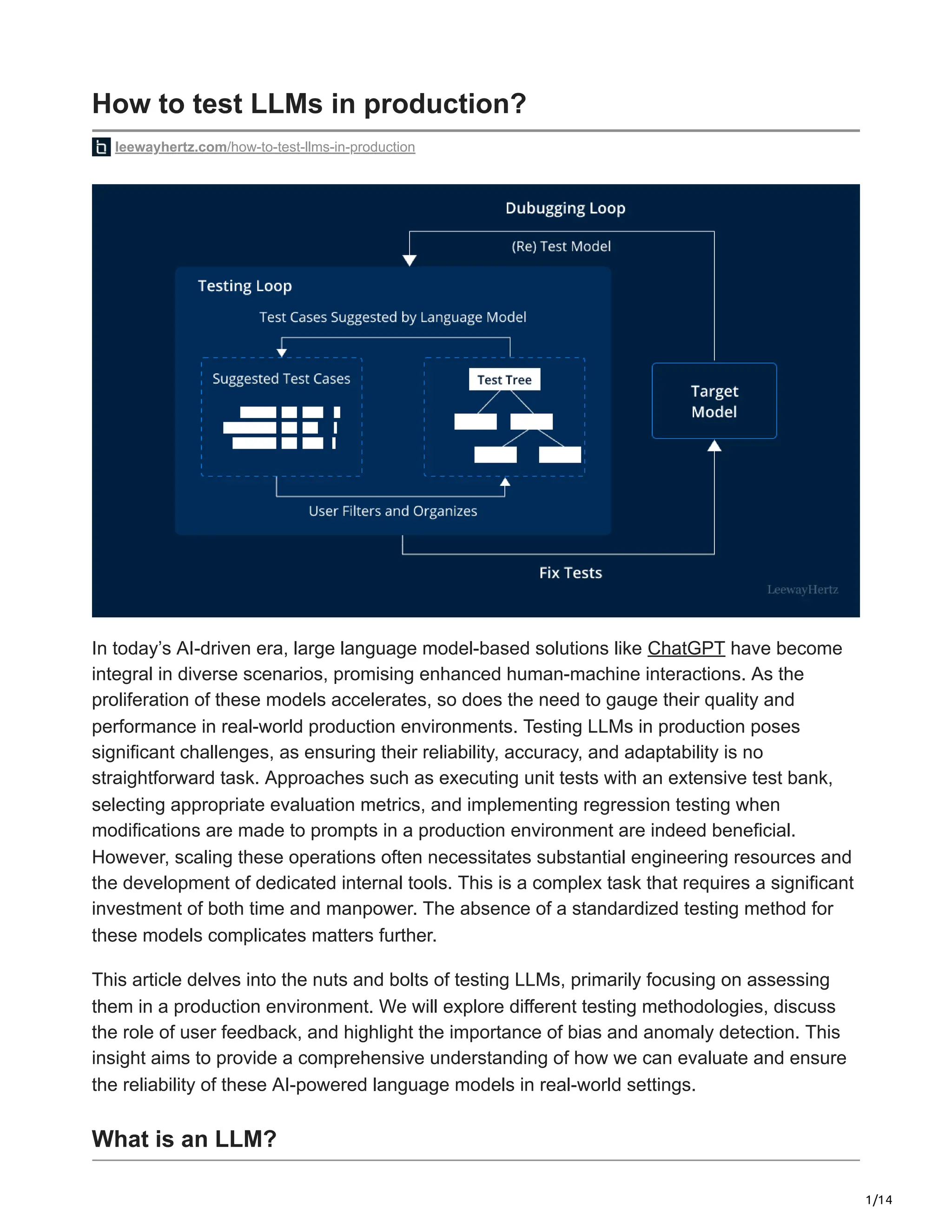

This article discusses why testing large language models (LLMs) in production is both important and difficult, and argues that reliable evaluation methods are needed to improve performance and mitigate risks such as bias and adversarial attacks. It outlines several testing approaches, including unit, integration, and regression testing, and highlights the role of user feedback in refining model performance. It also covers the practical challenges that arise during production testing, along with the need to adapt LLMs to specific tasks and to optimize system resources.