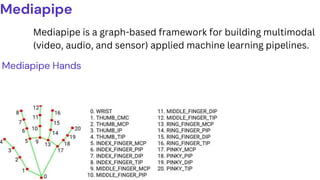

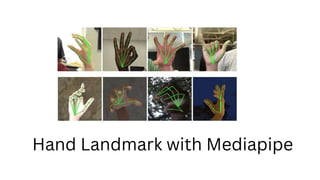

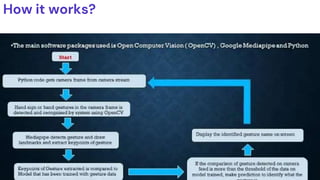

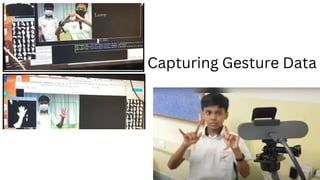

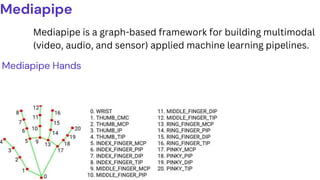

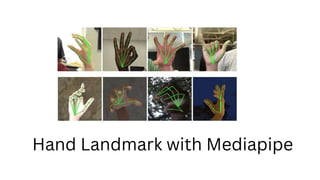

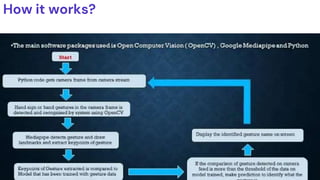

This document describes a proposed system that would translate hand gestures and sign language into text in real time using Google's MediaPipe framework, with the aim of improving communication for deaf or mute individuals. A camera would capture gestures in real time, and machine-learning and deep-learning (neural network) models would extract features from each frame and classify the gesture. MediaPipe is presented as an improvement over OpenCV because its hand detection is more reliable and accurate and does not depend on a clean background or carefully controlled lighting.
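The feature-extraction and classification stages can be sketched as follows. This is a minimal illustration under stated assumptions, not the proposal's implementation. It relies on the fact that MediaPipe Hands reports 21 landmarks per detected hand (index 0 is the wrist), each with x, y and z coordinates. The wrist-relative normalization, the nearest-centroid classifier (a simple stand-in for the proposal's neural network), and the helper names `landmarks_to_features`, `NearestCentroidClassifier` and `line_hand` are all hypothetical. Synthetic landmarks replace real camera output so the sketch runs without a camera or MediaPipe installed.

```python
import math
from typing import Dict, List, Sequence, Tuple

Landmark = Tuple[float, float, float]

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per detected hand
WRIST = 0           # landmark index 0 is the wrist


def landmarks_to_features(landmarks: Sequence[Landmark]) -> List[float]:
    """Turn 21 (x, y, z) landmarks into a 63-value feature vector.

    Hypothetical preprocessing: shift so the wrist is the origin, then divide
    by the largest wrist-to-landmark distance, so the features do not depend
    on where the hand is in the frame or how large it appears.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    wx, wy, wz = landmarks[WRIST]
    shifted = [(x - wx, y - wy, z - wz) for x, y, z in landmarks]
    # Fall back to 1.0 if every landmark coincides with the wrist.
    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in shifted) or 1.0
    return [c / scale for point in shifted for c in point]


class NearestCentroidClassifier:
    """Hypothetical stand-in for a trained gesture classifier."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, samples: Dict[str, List[Sequence[Landmark]]]) -> None:
        # Average the feature vectors of every example for each gesture label.
        for label, examples in samples.items():
            vectors = [landmarks_to_features(ex) for ex in examples]
            self.centroids[label] = [sum(col) / len(vectors) for col in zip(*vectors)]

    def predict(self, landmarks: Sequence[Landmark]) -> str:
        # Return the label whose centroid is closest in Euclidean distance.
        features = landmarks_to_features(landmarks)
        return min(self.centroids, key=lambda lbl: math.dist(features, self.centroids[lbl]))


def line_hand(dx: float, dy: float, origin: Landmark = (0.5, 0.5, 0.0)) -> List[Landmark]:
    """Hypothetical synthetic hand: all 21 landmarks on a line from the wrist."""
    ox, oy, oz = origin
    return [(ox + dx * i, oy + dy * i, oz) for i in range(NUM_LANDMARKS)]


clf = NearestCentroidClassifier()
clf.fit({"point_up": [line_hand(0.0, -0.02)], "point_right": [line_hand(0.02, 0.0)]})
# A larger hand elsewhere in the frame still maps to the same normalized features.
print(clf.predict(line_hand(0.0, -0.04, origin=(0.2, 0.9, 0.0))))  # point_up
```

Normalizing relative to the wrist is one way to approach the robustness the summary attributes to MediaPipe: once the landmarks are detected, the features no longer depend on the hand's position or apparent size. A real system would feed each frame's landmarks from MediaPipe into a classifier trained on many labelled examples per sign.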