The document contains a Python script that uses OpenCV and MediaPipe to detect and classify human poses during a golf swing. It defines functions for pose detection, for calculating the angle formed by three landmarks, and for drawing pose landmarks on images.

The right-side-view classifier labels the swing stage (address, take-back, backswing top, follow-through, finish). It does this from the positions of the hands relative to the ankles and shoulders. At address, a second classifier checks knee and spine flexion angles from the back view.
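The code below calls an angle helper, `calculateAngle`, whose definition does not appear in the listing. A common way to write such a helper measures the angle at the middle point with `math.atan2` and returns a value between 0 and 360 degrees. That approach is consistent with the later `if angle > 180: angle = 360 - angle` corrections. The sketch below assumes this approach. `calculate_angle` is a hypothetical stand-in, not the author's definition.

```python
import math


def calculate_angle(landmark1, landmark2, landmark3):
    """Angle in degrees at landmark2, measured from landmark1 to landmark3.

    Hypothetical sketch: each landmark is an (x, y, z) tuple in pixel
    coordinates; only x and y are used. The result lies in [0, 360).
    """
    x1, y1, _ = landmark1
    x2, y2, _ = landmark2
    x3, y3, _ = landmark3

    # Difference between the directions of the two rays leaving landmark2.
    angle = math.degrees(math.atan2(y3 - y2, x3 - x2) - math.atan2(y1 - y2, x1 - x2))

    # Shift negative results into the [0, 360) range.
    if angle < 0:
        angle += 360
    return angle


# Three collinear points (a straight limb) give 180 degrees.
print(calculate_angle((0, 0, 0), (1, 0, 0), (2, 0, 0)))  # 180.0
# The same right angle listed in the opposite order gives 270, not 90.
print(calculate_angle((0, 1, 0), (0, 0, 0), (1, 0, 0)))  # 270.0
```

The result depends on the order of the outer points. This is why the classifier folds values above 180 degrees back with `360 - angle`.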

![Golf Swing Analysis and Posture Correction System, slide 2](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-2-320.jpg)

```python
        landmarks: A list of detected landmarks converted into their original scale.
        landmarks_world: A list of detected world landmarks (metres, origin at the hip centre).
    '''

    # Create a copy of the input image.
    output_image = image.copy()

    # Convert the image from BGR into RGB format; MediaPipe expects RGB input.
    imageRGB = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # Perform the Pose Detection.
    results = pose.process(imageRGB)

    # Retrieve the height and width of the input image.
    height, width, _ = image.shape

    # Initialize lists to store the detected landmarks.
    landmarks = []
    landmarks_world = []

    # Check if any landmarks are detected.
    if results.pose_landmarks:

        # Draw Pose landmarks on the output image.
        mp_drawing.draw_landmarks(image=output_image,
                                  landmark_list=results.pose_landmarks,
                                  connections=mp_pose.POSE_CONNECTIONS)

        # Convert each normalized landmark into pixel coordinates. MediaPipe gives z
        # roughly the same scale as x, so z is scaled by the image width.
        for landmark in results.pose_landmarks.landmark:
            landmarks.append((int(landmark.x * width), int(landmark.y * height),
                              (landmark.z * width)))

        # World landmarks are already in metres, so they are stored unscaled.
        for landmark in results.pose_world_landmarks.landmark:
            landmarks_world.append((landmark.x, landmark.y, landmark.z))

    # Check if the original input image and the resultant image are specified to be displayed.
    if display:
        # # Display the original input image and the resultant image.
        # plt.figure(figsize=[22,22])
        # plt.subplot(121);plt.imshow(image[:,:,::-1]);plt.title("Original Image");plt.axis('off');
        # plt.subplot(122);plt.imshow(output_image[:,:,::-1]);plt.title("Output Image");plt.axis('off');

        # Also plot the Pose landmarks in 3D.
        mp_drawing.plot_landmarks(results.pose_landmarks, mp_pose.POSE_CONNECTIONS)

    return output_image, landmarks, landmarks_world
```
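`detectPose` converts MediaPipe's normalized image landmarks into pixel coordinates. In those landmarks, x and y lie in [0, 1] relative to the image width and height, and z uses roughly the same scale as x. The sketch below isolates that conversion as a pure function so it can be checked without a camera. `to_pixel_landmarks` and the `SimpleNamespace` stand-ins for MediaPipe landmark objects are assumptions for illustration. World landmarks are a different case: MediaPipe reports them in metres around the hip centre, so they are not meant to be scaled by the image size.

```python
from types import SimpleNamespace


def to_pixel_landmarks(normalized_landmarks, width, height):
    """Convert normalized (x, y, z) landmarks to pixel coordinates.

    Mirrors the conversion loop in detectPose(): x and y are scaled by the
    image width and height and truncated to int; z is scaled by the width
    and kept as a float.
    """
    return [(int(lm.x * width), int(lm.y * height), lm.z * width)
            for lm in normalized_landmarks]


# Hypothetical stand-ins for MediaPipe landmark objects.
nose = SimpleNamespace(x=0.5, y=0.25, z=-0.125)
left_ankle = SimpleNamespace(x=0.375, y=0.875, z=0.0625)

print(to_pixel_landmarks([nose, left_ankle], width=640, height=480))
# [(320, 120, -80.0), (240, 420, 40.0)]
```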

![Golf Swing Analysis and Posture Correction System, slide 4](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-4-320.jpg)
![Golf Swing Analysis and Posture Correction System, slide 5](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-5-320.jpg)

```python
    # Initialize the labels of the pose. They are not known at this stage.
    label = '.'
    label_1 = '.'

    # Specify the color (red) with which the label will be written on the image.
    color = (0, 0, 255)

    # Calculate the required angles for the ranges of motion (ROMs) we are interested in.
    #----------------------------------------------------------------------------------------------------------------

    # Get the angle between the left shoulder, elbow and wrist points.
    left_elbow_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.LEFT_SHOULDER.value],
                                      landmarks_0[mp_pose.PoseLandmark.LEFT_ELBOW.value],
                                      landmarks_0[mp_pose.PoseLandmark.LEFT_WRIST.value])

    # Get the angle between the right shoulder, elbow and wrist points.
    right_elbow_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.RIGHT_SHOULDER.value],
                                       landmarks_0[mp_pose.PoseLandmark.RIGHT_ELBOW.value],
                                       landmarks_0[mp_pose.PoseLandmark.RIGHT_WRIST.value])

    # Get the angle between the left elbow, shoulder and hip points.
    left_shoulder_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.LEFT_ELBOW.value],
                                         landmarks_0[mp_pose.PoseLandmark.LEFT_SHOULDER.value],
                                         landmarks_0[mp_pose.PoseLandmark.LEFT_HIP.value])

    # Get the angle between the right hip, shoulder and elbow points.
    right_shoulder_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.RIGHT_HIP.value],
                                          landmarks_0[mp_pose.PoseLandmark.RIGHT_SHOULDER.value],
                                          landmarks_0[mp_pose.PoseLandmark.RIGHT_ELBOW.value])

    # Get the angle between the left hip, knee and ankle points.
    left_knee_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.LEFT_HIP.value],
                                     landmarks_0[mp_pose.PoseLandmark.LEFT_KNEE.value],
                                     landmarks_0[mp_pose.PoseLandmark.LEFT_ANKLE.value])

    # Get the angle between the right hip, knee and ankle points.
    right_knee_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.RIGHT_HIP.value],
                                      landmarks_0[mp_pose.PoseLandmark.RIGHT_KNEE.value],
                                      landmarks_0[mp_pose.PoseLandmark.RIGHT_ANKLE.value])

    # Get the angle between the right shoulder, hip and knee points (forward bend).
    right_bending_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.RIGHT_SHOULDER.value],
                                         landmarks_0[mp_pose.PoseLandmark.RIGHT_HIP.value],
                                         landmarks_0[mp_pose.PoseLandmark.RIGHT_KNEE.value])

    # Get the angle between the left shoulder, hip and knee points (forward bend).
    left_bending_angle = calculateAngle(landmarks_0[mp_pose.PoseLandmark.LEFT_SHOULDER.value],
                                        landmarks_0[mp_pose.PoseLandmark.LEFT_HIP.value],
                                        landmarks_0[mp_pose.PoseLandmark.LEFT_KNEE.value])

    # Mid-point between the ankles (ground reference).
    x1, y1, z1 = landmarks_0[mp_pose.PoseLandmark.LEFT_ANKLE.value]
    x2, y2, z2 = landmarks_0[mp_pose.PoseLandmark.RIGHT_ANKLE.value]
    mid_GROUND_x, mid_GROUND_y, mid_GROUND_z = (x1 + x2) / 2, (y1 + y2) / 2, (z1 + z2) / 2

    # Mid-point between the hips.
    x3, y3, z3 = landmarks_0[mp_pose.PoseLandmark.LEFT_HIP.value]
    x4, y4, z4 = landmarks_0[mp_pose.PoseLandmark.RIGHT_HIP.value]
    mid_HIP_x, mid_HIP_y, mid_HIP_z = (x3 + x4) / 2, (y3 + y4) / 2, (z3 + z4) / 2

    # Angle at the hip mid-point between the ground mid-point and the nose.
    GROUND_HIP_NOSE_angle = calculateAngle((mid_GROUND_x, mid_GROUND_y, mid_GROUND_z),
                                           (mid_HIP_x, mid_HIP_y, mid_HIP_z),
                                           landmarks_0[mp_pose.PoseLandmark.NOSE.value])

    # Mid-point between the shoulders.
    x5, y5, z5 = landmarks_0[mp_pose.PoseLandmark.LEFT_SHOULDER.value]
    x6, y6, z6 = landmarks_0[mp_pose.PoseLandmark.RIGHT_SHOULDER.value]
    mid_SHOULDER_x, mid_SHOULDER_y, mid_SHOULDER_z = (x5 + x6) / 2, (y5 + y6) / 2, (z5 + z6) / 2

    # 2D pixel distance between the shoulders.
    dist_between_shoulders = round(math.sqrt((int(x5) - int(x6))**2 + (int(y5) - int(y6))**2))

    # 2D pixel distance from the nose to the ankle mid-point (approximate body length).
    x7, y7, z7 = landmarks_0[mp_pose.PoseLandmark.NOSE.value]
    lenght_of_body = round(math.sqrt((int(x7) - int(mid_GROUND_x))**2 + (int(y7) - int(mid_GROUND_y))**2))

    # Left and right pinky tips, used to locate the hands on the club grip.
    x8, y8, z8 = landmarks_0[mp_pose.PoseLandmark.LEFT_PINKY.value]
    x9, y9, z9 = landmarks_0[mp_pose.PoseLandmark.RIGHT_PINKY.value]

    # Nose, used to track the head position.
    x10, y10, z10 = landmarks_0[mp_pose.PoseLandmark.NOSE.value]
```
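The block above repeatedly does two things. It averages a left/right landmark pair (ankles, hips, shoulders), and it measures 2D pixel distances such as `dist_between_shoulders` and `lenght_of_body` (nose to the ankle mid-point). A compact way to express those two operations is sketched below. The helper names `midpoint` and `pixel_distance` are hypothetical. Like the original, the distance ignores z and truncates coordinates to int before rounding.

```python
import math


def midpoint(p, q):
    """Component-wise mid-point of two (x, y, z) landmarks."""
    return tuple((a + b) / 2 for a, b in zip(p, q))


def pixel_distance(p, q):
    """2D distance in pixels, truncating coordinates to int as the original code does."""
    return round(math.hypot(int(p[0]) - int(q[0]), int(p[1]) - int(q[1])))


left_ankle, right_ankle = (300, 600, 0.0), (380, 604, 0.0)
nose = (340, 200, 0.0)

mid_ground = midpoint(left_ankle, right_ankle)
print(mid_ground)                        # (340.0, 602.0, 0.0)
print(pixel_distance(nose, mid_ground))  # 402
```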

![Golf Swing Analysis and Posture Correction System, slide 6](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-6-320.jpg)
![Golf Swing Analysis and Posture Correction System, slide 7](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-7-320.jpg)

```python
    # Left and right knees.
    x11, y11, z11 = landmarks_0[mp_pose.PoseLandmark.LEFT_KNEE.value]
    x12, y12, z12 = landmarks_0[mp_pose.PoseLandmark.RIGHT_KNEE.value]

    ## Drawing vertical lines up from both ankles (the head-position boundary)
    cv2.line(output_image_0, (x1, y1), (x1, y1 - 300), [0, 0, 255], thickness=2, lineType=cv2.LINE_8, shift=0)
    cv2.line(output_image_0, (x2, y2), (x2, y2 - 300), [0, 0, 255], thickness=2, lineType=cv2.LINE_8, shift=0)

    # Circle centred on the shoulder mid-point with a radius of half the body length.
    cv2.circle(output_image_0, (round(mid_SHOULDER_x), round(mid_SHOULDER_y)), round(lenght_of_body / 2),
               color=[128, 0, 0], thickness=2)

    # Hip line and shoulder line.
    cv2.line(output_image_0, (x3, y3), (x4, y4), [0, 255, 255], thickness=4, lineType=cv2.LINE_8, shift=0)
    cv2.line(output_image_0, (x5, y5), (x6, y6), [0, 255, 255], thickness=4, lineType=cv2.LINE_8, shift=0)

    # Trunk line (shoulder mid-point to hip mid-point) and knee line.
    cv2.line(output_image_0, (round(mid_SHOULDER_x), round(mid_SHOULDER_y)),
             (round(mid_HIP_x), round(mid_HIP_y)), [0, 255, 255], thickness=4, lineType=cv2.LINE_8, shift=0)
    cv2.line(output_image_0, (x11, y11), (x12, y12), [0, 255, 255], thickness=4, lineType=cv2.LINE_8, shift=0)

    # Vertical reference line up from the hip mid-point.
    cv2.line(output_image_0, (round(mid_HIP_x), round(mid_HIP_y)),
             (round(mid_HIP_x), round(mid_HIP_y - 300)), [0, 0, 255], thickness=2, lineType=cv2.LINE_8, shift=0)

    # Core angle: tilt of the trunk (hip mid-point -> shoulder mid-point) away from the vertical.
    midHip_midShoulder_angle_fromVertical = calculateAngle((mid_HIP_x, mid_HIP_y - 300, mid_HIP_z),
                                                           (mid_HIP_x, mid_HIP_y, mid_HIP_z),
                                                           (mid_SHOULDER_x, mid_SHOULDER_y, mid_SHOULDER_z))

    # Bring the angle into the 0-180 degree range.
    if midHip_midShoulder_angle_fromVertical > 180:
        midHip_midShoulder_angle_fromVertical = 360 - midHip_midShoulder_angle_fromVertical
    cv2.putText(output_image_0, "Core Angle:" + str(midHip_midShoulder_angle_fromVertical),
                (round(mid_HIP_x) + 20, round(mid_HIP_y)), fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                fontScale=1, color=[0, 0, 255], thickness=2)

    try:
        # Check if person is in ADDRESS stage: both hands on the club grip (pinky x-coordinates
        # within about 20% of each other) and both hands below the shoulder mid-point.
        if x8 / x9 > 0.8 and x8 / x9 < 1.2 and y8 > mid_SHOULDER_y and y9 > mid_SHOULDER_y:
            # Both hands between the ankle vertical lines.
            if x8 > x2 and x8 < x1 and x9 > x2 and x9 < x1:
                label = "ADDRESS pose established"
                # Check if person's HEAD has left the boundary of the ankle vertical lines.
                if x10 < x2 or x10 > x1:
                    cv2.circle(output_image_0, (x10, y10), 20, color=[0, 0, 255], thickness=2)
                    cv2.putText(output_image_0, "Keep Head posture within red line boundary", (x10 + 20, y10),
                                fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=[0, 0, 255], thickness=2)

                ## While in ADDRESS pose, also check the posture from the back view.
                output_image_1, label_1 = classifyPose_Golfswing_BACK_SIDE_view(landmarks_1, output_image_1,
                                                                                display=False)

    except ZeroDivisionError:  # x9 == 0
        pass

    # Check if person is in TAKE BACK stage
    try:
        if x8 / x9 > 0.8 and x8 / x9 < 1.2:  # Checking if both hands are on club grip
            if x8 < x2 and x9 < x2:
                label = "TAKE BACK pose in process"
                if x10 < x2 or x10 > x1:
                    cv2.circle(output_image_0, (x10, y10), 20, color=[0, 0, 255], thickness=2)
                    print("Keep Head posture within red line boundary")
                    cv2.putText(output_image_0, "Keep Head posture within red line boundary", (x10 + 20, y10),
                                fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=[0, 0, 255], thickness=2)
    except ZeroDivisionError:  # x9 == 0
        pass

    # Check if person has reached BACKSWING TOP
    try:
        # Both hands on club grip and above the shoulder mid-point.
        if x8 / x9 > 0.8 and x8 / x9 < 1.2 and y8 < mid_SHOULDER_y and y9 < mid_SHOULDER_y:
            # The hands need not lie between the red lines defined by the left and right ankles
            # (x8 > x2 and x9 > x2 is not required); they must be left of the left ankle line
            # and higher than the shoulder mid-point.
            if x8 < x1 and x9 < x1:
                label = "BACKSWING TOP reached. Ready to launch swing"
                if x10 < x2 or x10 > x1:
                    cv2.circle(output_image_0, (x10, y10), 20, color=[0, 0, 255], thickness=2)
                    print("Keep Head posture within red line boundary")
                    cv2.putText(output_image_0, "Keep Head posture within red line boundary", (x10 + 20, y10),
                                fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=[0, 0, 255], thickness=2)

    except ZeroDivisionError:  # x9 == 0
        pass
```
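The "Core Angle" is computed by building a point 300 px straight above the hip mid-point. The code then measures the angle at the hip between that point and the shoulder mid-point, and folds values above 180 degrees. Because the reference is vertical, the same quantity can be obtained directly by applying `math.atan2` to the hip-to-shoulder vector, assuming `calculateAngle` is itself `atan2`-based. `trunk_tilt_from_vertical` is a hypothetical helper. Image y is assumed to grow downwards, as in OpenCV.

```python
import math


def trunk_tilt_from_vertical(mid_hip, mid_shoulder):
    """Unsigned angle in degrees (0-180) between the hip->shoulder line and the upward vertical.

    Coordinates are image pixels (x to the right, y downwards), so "up" is -y.
    """
    dx = mid_shoulder[0] - mid_hip[0]
    dy_up = mid_hip[1] - mid_shoulder[1]  # positive when the shoulders are above the hips
    return abs(math.degrees(math.atan2(dx, dy_up)))


mid_hip = (320.0, 400.0)
print(trunk_tilt_from_vertical(mid_hip, (320.0, 200.0)))  # 0.0 (upright)
print(trunk_tilt_from_vertical(mid_hip, (420.0, 300.0)))  # about 45 (leaning over)
```

Taking `abs` makes a lean to either side read the same. This matches the folding step in the original.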

![Golf Swing Analysis and Posture Correction System, slide 8](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-8-320.jpg)
![Golf Swing Analysis and Posture Correction System, slide 9](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-9-320.jpg)

```python
    # Check if person is in FOLLOW THROUGH stage
    try:
        # Both hands on club grip and below the shoulder mid-point.
        if x8 / x9 > 0.8 and x8 / x9 < 1.2 and y8 > mid_SHOULDER_y and y9 > mid_SHOULDER_y:
            if x8 > x1 and x9 > x1:
                label = "FOLLOW THROUGH stage"
    except ZeroDivisionError:  # x9 == 0
        pass

    # Check if person has reached the FINISH position
    try:
        # Both hands on club grip and above the shoulder mid-point.
        if x8 / x9 > 0.8 and x8 / x9 < 1.2 and y8 < mid_SHOULDER_y and y9 < mid_SHOULDER_y:
            if x8 > x1 and x9 > x1:
                label = "FINISH SWING"
    except ZeroDivisionError:  # x9 == 0
        pass

    #----------------------------------------------------------------------------------------------------------------

    # Check if the pose is classified successfully (otherwise label is still '.').
    if label != '.':
        # Update the color (to green) with which the label will be written on the image.
        color = (0, 255, 0)
    # Write the label on the output image.
    cv2.putText(output_image_0, label, (10, 30), cv2.FONT_HERSHEY_PLAIN, 2, color, 2)

    # Check if the resultant image is specified to be displayed.
    if display:
        # Display the resultant image.
        plt.figure(figsize=[10, 10])
        plt.imshow(output_image_0[:, :, ::-1])
        plt.title("Output Image")
        plt.axis('off')

    else:
        # Return the output images and the classified labels.
        return output_image_0, label, output_image_1, label_1


def classifyPose_Golfswing_BACK_SIDE_view(landmarks, output_image, display=False):
    '''
    This function classifies poses depending upon the angles of various body joints.
    Args:
        landmarks: A list of detected landmarks of the person whose pose needs to be classified.
        output_image: An image of the person with the detected pose landmarks drawn.
        display: A boolean value; if set to True, the function displays the resultant image
                 with the pose label written on it and returns nothing.
    Returns:
        output_image: The image with the detected pose landmarks drawn and pose label written.
        label: The classified pose label of the person in the output_image.
    '''
    # Initialize the label of the pose. It is not known at this stage.
    label = '.'

    # Specify the color (red) with which the label will be written on the image.
    color = (0, 0, 255)

    # Calculate the required angles for the ranges of motion (ROMs) we are interested in.
    #----------------------------------------------------------------------------------------------------------------

    # Get the angle between the left shoulder, elbow and wrist points.
    left_elbow_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value],
                                      landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value],
                                      landmarks[mp_pose.PoseLandmark.LEFT_WRIST.value])

    # Get the angle between the right shoulder, elbow and wrist points.
    right_elbow_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.RIGHT_SHOULDER.value],
                                       landmarks[mp_pose.PoseLandmark.RIGHT_ELBOW.value],
                                       landmarks[mp_pose.PoseLandmark.RIGHT_WRIST.value])

    # Get the angle between the left elbow, shoulder and hip points.
    left_shoulder_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.LEFT_ELBOW.value],
                                         landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value],
                                         landmarks[mp_pose.PoseLandmark.LEFT_HIP.value])

    # Get the angle between the right hip, shoulder and elbow points.
    right_shoulder_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.RIGHT_HIP.value],
                                          landmarks[mp_pose.PoseLandmark.RIGHT_SHOULDER.value],
                                          landmarks[mp_pose.PoseLandmark.RIGHT_ELBOW.value])

    # Get the angle between the left hip, knee and ankle points.
    left_knee_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.LEFT_HIP.value],
                                     landmarks[mp_pose.PoseLandmark.LEFT_KNEE.value],
                                     landmarks[mp_pose.PoseLandmark.LEFT_ANKLE.value])
```
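The five stage checks in the right-side classifier run one after another. Each match overwrites `label`, so the last matching stage wins. Stripped of the drawing calls, the rules reduce to a small pure function, sketched below. It treats a pinky x-ratio between 0.8 and 1.2 as "both hands on the grip". `classify_swing_stage` and its parameter names are hypothetical. The inputs are:

- the pinky positions `(x8, y8)` and `(x9, y9)`;
- the ankle x-coordinates `x1` (left) and `x2` (right);
- the shoulder mid-point height.

All values are image pixels with y growing downwards.

```python
def classify_swing_stage(x8, y8, x9, y9, x1, x2, mid_shoulder_y, default="."):
    """Swing-stage label from hand, ankle and shoulder positions (hypothetical sketch).

    x8, y8 / x9, y9: left / right pinky tip; x1 / x2: left / right ankle x;
    mid_shoulder_y: height of the shoulder mid-point. Image pixels, y grows downwards.
    The checks run in the original order, so a later match overwrites an earlier one.
    """
    if x9 == 0:
        # Every original check divides by x9, so none of them could succeed.
        return default

    ratio = x8 / x9
    on_grip = 0.8 < ratio < 1.2                          # both hands together on the club
    below = y8 > mid_shoulder_y and y9 > mid_shoulder_y  # hands lower than the shoulders
    above = y8 < mid_shoulder_y and y9 < mid_shoulder_y  # hands higher than the shoulders

    label = default
    if on_grip and below and x2 < x8 < x1 and x2 < x9 < x1:
        label = "ADDRESS pose established"
    if on_grip and x8 < x2 and x9 < x2:
        label = "TAKE BACK pose in process"
    if on_grip and above and x8 < x1 and x9 < x1:
        label = "BACKSWING TOP reached. Ready to launch swing"
    if on_grip and below and x8 > x1 and x9 > x1:
        label = "FOLLOW THROUGH stage"
    if on_grip and above and x8 > x1 and x9 > x1:
        label = "FINISH SWING"
    return label


# Ankles at x=400 (left) and x=300 (right); shoulder mid-point at y=250.
print(classify_swing_stage(350, 450, 352, 452, x1=400, x2=300, mid_shoulder_y=250))
# ADDRESS pose established
print(classify_swing_stage(280, 150, 285, 155, x1=400, x2=300, mid_shoulder_y=250))
# BACKSWING TOP reached. Ready to launch swing  (TAKE BACK also matches but is overwritten)
```

In the original code, the ADDRESS branch also runs the back-view check, and several branches draw a head-position warning. Those side effects are left out here.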

![Golf Swing Analysis and Posture Correction System, slide 10](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-10-320.jpg)

```python
    # Get the angle between the right hip, knee and ankle points.
    right_knee_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.RIGHT_HIP.value],
                                      landmarks[mp_pose.PoseLandmark.RIGHT_KNEE.value],
                                      landmarks[mp_pose.PoseLandmark.RIGHT_ANKLE.value])

    # Get the angle between the right shoulder, hip and knee points (forward bend of the spine).
    right_bending_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.RIGHT_SHOULDER.value],
                                         landmarks[mp_pose.PoseLandmark.RIGHT_HIP.value],
                                         landmarks[mp_pose.PoseLandmark.RIGHT_KNEE.value])

    # Get the angle between the left shoulder, hip and knee points (forward bend of the spine).
    left_bending_angle = calculateAngle(landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value],
                                        landmarks[mp_pose.PoseLandmark.LEFT_HIP.value],
                                        landmarks[mp_pose.PoseLandmark.LEFT_KNEE.value])

    ## Bringing angles to within 0 - 180 degree range
    if right_knee_angle > 180:
        right_knee_angle = 360 - right_knee_angle

    if right_bending_angle > 180:
        right_bending_angle = 360 - right_bending_angle

    # Mid-point between the ankles (ground reference).
    x1, y1, z1 = landmarks[mp_pose.PoseLandmark.LEFT_ANKLE.value]
    x2, y2, z2 = landmarks[mp_pose.PoseLandmark.RIGHT_ANKLE.value]
    mid_GROUND_x, mid_GROUND_y, mid_GROUND_z = (x1 + x2) / 2, (y1 + y2) / 2, (z1 + z2) / 2

    # Mid-point between the hips.
    x3, y3, z3 = landmarks[mp_pose.PoseLandmark.LEFT_HIP.value]
    x4, y4, z4 = landmarks[mp_pose.PoseLandmark.RIGHT_HIP.value]
    mid_HIP_x, mid_HIP_y, mid_HIP_z = (x3 + x4) / 2, (y3 + y4) / 2, (z3 + z4) / 2

    # Angle at the hip mid-point between the ground mid-point and the nose.
    GROUND_HIP_NOSE_angle = calculateAngle((mid_GROUND_x, mid_GROUND_y, mid_GROUND_z),
                                           (mid_HIP_x, mid_HIP_y, mid_HIP_z),
                                           landmarks[mp_pose.PoseLandmark.NOSE.value])

    # Mid-point between the shoulders.
    x5, y5, z5 = landmarks[mp_pose.PoseLandmark.LEFT_SHOULDER.value]
    x6, y6, z6 = landmarks[mp_pose.PoseLandmark.RIGHT_SHOULDER.value]
    mid_SHOULDER_x, mid_SHOULDER_y, mid_SHOULDER_z = (x5 + x6) / 2, (y5 + y6) / 2, (z5 + z6) / 2

    # 2D pixel distance between the shoulders.
    dist_between_shoulders = round(math.sqrt((int(x5) - int(x6))**2 + (int(y5) - int(y6))**2))
```
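An `atan2`-based angle depends on the order in which its two rays are measured, so it can come out anywhere between 0 and 360 degrees. The `360 - angle` step folds it back to the interior joint angle. For comparison, the interior angle can also be computed directly from the dot product, which always gives a value between 0 and 180 degrees. Both helpers below are hypothetical sketches that work on 2D pixel points. Folding 270 degrees gives the same 90 degrees that the dot-product formula returns for a right angle.

```python
import math


def fold_to_180(angle):
    """Map an angle in [0, 360) onto [0, 180], as the `if angle > 180` blocks do."""
    return 360 - angle if angle > 180 else angle


def interior_angle(a, b, c):
    """Unsigned angle in degrees at vertex b between rays b->a and b->c (2D points)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norms = math.hypot(*v1) * math.hypot(*v2)
    if norms == 0:
        raise ValueError("a and c must both differ from b")
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    # Clamp to [-1, 1] to guard against tiny floating-point overshoot before acos.
    cos_theta = max(-1.0, min(1.0, dot / norms))
    return math.degrees(math.acos(cos_theta))


print(fold_to_180(270))                       # 90
print(interior_angle((0, 1), (0, 0), (1, 0)))  # approximately 90.0
```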

![Golf Swing Analysis and Posture Correction System, slide 11](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-11-320.jpg)

```python
    # 2D pixel distance from the nose to the ankle mid-point (approximate body length).
    x7, y7, z7 = landmarks[mp_pose.PoseLandmark.NOSE.value]
    lenght_of_body = round(math.sqrt((int(x7) - int(mid_GROUND_x))**2 + (int(y7) - int(mid_GROUND_y))**2))

    # Left and right pinky tips.
    x8, y8, z8 = landmarks[mp_pose.PoseLandmark.LEFT_PINKY.value]
    x9, y9, z9 = landmarks[mp_pose.PoseLandmark.RIGHT_PINKY.value]

    # Nose.
    x10, y10, z10 = landmarks[mp_pose.PoseLandmark.NOSE.value]

    # Left and right knees.
    x11, y11, z11 = landmarks[mp_pose.PoseLandmark.LEFT_KNEE.value]
    x12, y12, z12 = landmarks[mp_pose.PoseLandmark.RIGHT_KNEE.value]

    # Check if the leading (RIGHT) leg is straight
    #----------------------------------------------------------------------------------------------------------------

    # Vertical reference line up from the right knee.
    cv2.line(output_image, (x12, y12), (x12, y12 - 300), [0, 255, 255], thickness=4, lineType=cv2.LINE_8, shift=0)

    # Knee flexion = 180 - knee angle; a nearly straight knee (165-179 degrees) needs more bend.
    cv2.putText(output_image, "Leadside Knee Flexion angle: " + str(180 - right_knee_angle), (x12 + 20, y12),
                fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=[0, 0, 255], thickness=2)
    if right_knee_angle > 165 and right_knee_angle < 179:
        label = '1. Bend your knees more'
    else:
        label = 'Knees flexion posture CORRECT!'

    # Spine flexion = 180 - shoulder-hip-knee angle; a nearly upright spine needs more bend.
    cv2.putText(output_image, "Leadside Spine Flexion angle: " + str(180 - right_bending_angle), (x4 + 20, y4),
                fontFace=cv2.FONT_HERSHEY_SIMPLEX, fontScale=1, color=[0, 0, 255], thickness=2)
    # Note: this overwrites the knee label above, so only the spine feedback is written on the image.
    if right_bending_angle > 165 and right_bending_angle < 179:
        label = '2. Bend your spine more'
    else:
        label = 'Spine Flexion angle posture CORRECT!'
```
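In the back-view check, the spine test assigns `label` after the knee test. As a result, the knee feedback never reaches the image. If both messages are wanted, one option is to collect them in a list, as in the sketch below. `posture_feedback` is a hypothetical helper. It reuses the same 165-179 degree thresholds on the folded knee and spine (shoulder-hip-knee) angles and reports flexion as `180 - angle`.

```python
def posture_feedback(right_knee_angle, right_bending_angle):
    """Return (messages, knee_flexion, spine_flexion) for the back-view posture check.

    Angles are joint angles in degrees already folded into 0-180; a value
    strictly between 165 and 179 is treated as "too straight", as in the original checks.
    """
    messages = []
    if 165 < right_knee_angle < 179:
        messages.append("1. Bend your knees more")
    else:
        messages.append("Knees flexion posture CORRECT!")
    if 165 < right_bending_angle < 179:
        messages.append("2. Bend your spine more")
    else:
        messages.append("Spine Flexion angle posture CORRECT!")
    return messages, 180 - right_knee_angle, 180 - right_bending_angle


print(posture_feedback(170, 140))
# (['1. Bend your knees more', 'Spine Flexion angle posture CORRECT!'], 10, 40)
```

Each message could then be drawn on its own line, for example by offsetting the y-coordinate of `cv2.putText` for each entry.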

![457. #

#----------------------------------------------------------------------------------------------------------------

458.

459. # Check if the pose is classified successfully

460. if label != 'Unknown Pose':

461. # Update the color (to green) with which the label will be written on the image.

462. color = (0, 255, 0)

463. # Write the label on the output image.

464. cv2.putText(output_image, label, (10, 30),cv2.FONT_HERSHEY_PLAIN, 2, color,

2)

465.

466. # Check if the resultant image is specified to be displayed.

467. if display:

468. # Display the resultant image.

469. plt.figure(figsize=[10,10])

470. plt.imshow(output_image[:,:,::-1]);plt.title("Output Image");plt.axis('off');

471.

472. else:

473. # Return the output image and the classified label.

474. return output_image, label

475.

476. ###################################################################

####################

477. ###################################################################

####################

478. # Initialize the VideoCapture object to read video recived from PT session

479. #############

480. ##############################

481. camera_video_0 = cv2.VideoCapture(1)

482. camera_video_1 = cv2.VideoCapture(0)

483. #

484. ##

485. # cap = camera_video

486. # camera_video_0.set(3,1280)

487. # camera_video_0.set(4,960)

488. # camera_video_1.set(3,1280)

489. # camera_video_1.set(4,960)

490.

491. print( "Frame capture Initialized from RIGHT side and BACK side video camera")

492. # print("Select the camera footage you are interested in applying CV Models on:

'1'for RIGHT SIDE VIEW, '2' for LEFT SIDE VIEW")

493. # #listening to input

494. # cam_input = keyboard.read_key()

495. ##

496. # cam_input = 2

497. ##

498.

499.

500. with

mp_face_mesh.FaceMesh(max_num_faces=1,refine_landmarks=True,min_detection_co

nfidence=0.5,min_tracking_confidence=0.5) as face_mesh:](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-12-320.jpg)

![501. # Iterate until the webcam is accessed successfrully.

502. while camera_video_0.isOpened() and camera_video_1.isOpened():

503. # Read a frame.

504. ok, frame_0 = camera_video_0.read()

505. ok, frame_1 = camera_video_1.read()

506. # Check if frame is not read properly.

507. if not ok:

508. continue

509. frame_height, frame_width, _ = frame_0.shape

510. # Resize the frame while keeping the aspect ratio.

511. frame_0 = cv2.resize(frame_0, (int(frame_width * (640 / frame_height)), 640))

512. frame_1 = cv2.resize(frame_1, (int(frame_width * (640 / frame_height)), 640))

513. frame_final_0 = frame_0

514. frame_final_1 = frame_1

515.

516. # # Perform Pose landmark detection.

517. # Check if frame is not read properly.

518. if not ok:

519. # Continue to the next iteration to read the next frame and ignore the empty

camera frame.

520. continue

521. #################################################

522. #################################################

523.

524. # if cam_input=='1':

525. frame_0, landmarks_0, landmarks_world = detectPose(frame_0, pose_video,

display=False)

526. frame_1, landmarks_1, landmarks_world = detectPose(frame_1, pose_video,

display=False)

527.

528. if landmarks_0 and landmarks_1:

529. frame_final_0, label_0, frame_final_1, label_1 =

classifyPose_Golfswing_RIGHT_SIDE_view(landmarks_0, frame_0,landmarks_1,

frame_1, display=False)

530. else:

531. continue

532.

533.

534.

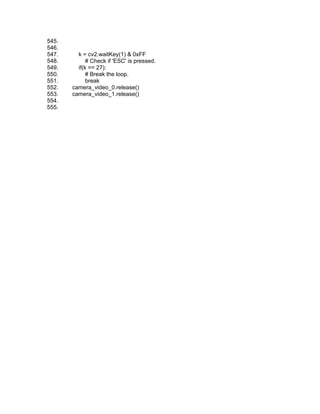

535. if cv2.waitKey(1) & 0xFF==ord('q'): ## EXTRACT THE LABEL OF THE ANGLE

MEASUREMENT AT A PARTICULAR FRAME

536. # breakw

537. print(label_0)

538. print(label_1)

539. #returns the value of the LABEL when q is pressed

540. ###################################################################

######################################

541.

542.

543. stream_final_img = cv2.hconcat([frame_final_0, frame_final_1])

544. cv2.imshow('Combined Video', stream_final_img)](https://image.slidesharecdn.com/golfswinganalysis-240403052438-4971fa7a/85/Golf-Swing-Analysis-and-Posture-Correction-System-13-320.jpg)
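Each frame is scaled to a height of 640 px, and its width is scaled by the same factor. This lets `cv2.hconcat`, which requires inputs of equal height, place the two views side by side. Computing the target size from each frame's own shape matters when the two cameras have different resolutions. The helper below is a hypothetical sketch of that calculation. It returns the `(width, height)` tuple that `cv2.resize` expects as its `dsize` argument.

```python
def resize_dims(frame_width, frame_height, target_height=640):
    """(width, height) for cv2.resize that keeps the aspect ratio at a fixed height."""
    scale = target_height / frame_height
    return int(frame_width * scale), target_height


# Two cameras with different resolutions end up with the same height.
print(resize_dims(1280, 720))  # (1137, 640)
print(resize_dims(640, 480))   # (853, 640)
```

A call such as `cv2.resize(frame, resize_dims(width, height))` then applies it, with `height, width, _ = frame.shape` taken from the frame being resized.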