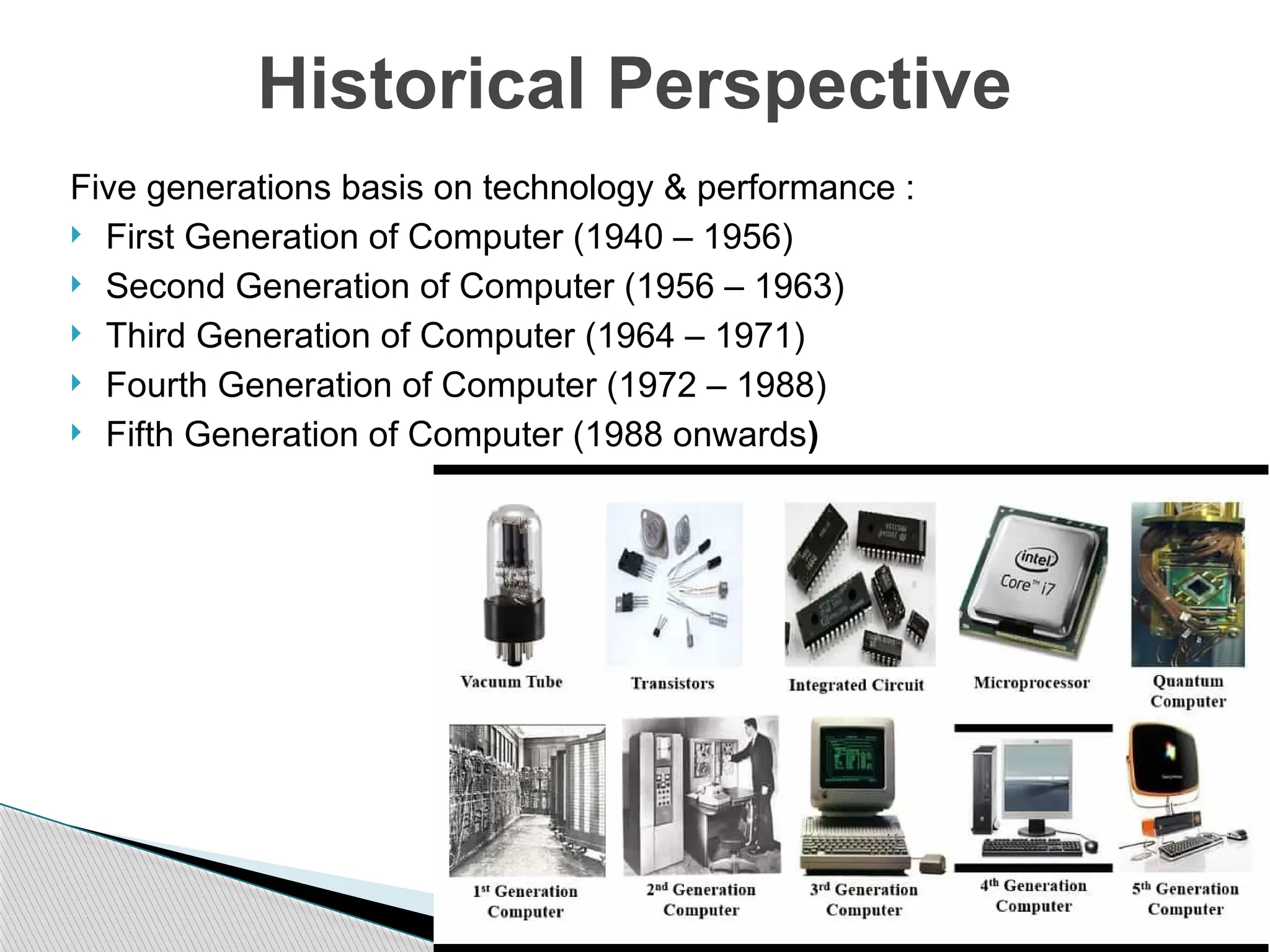

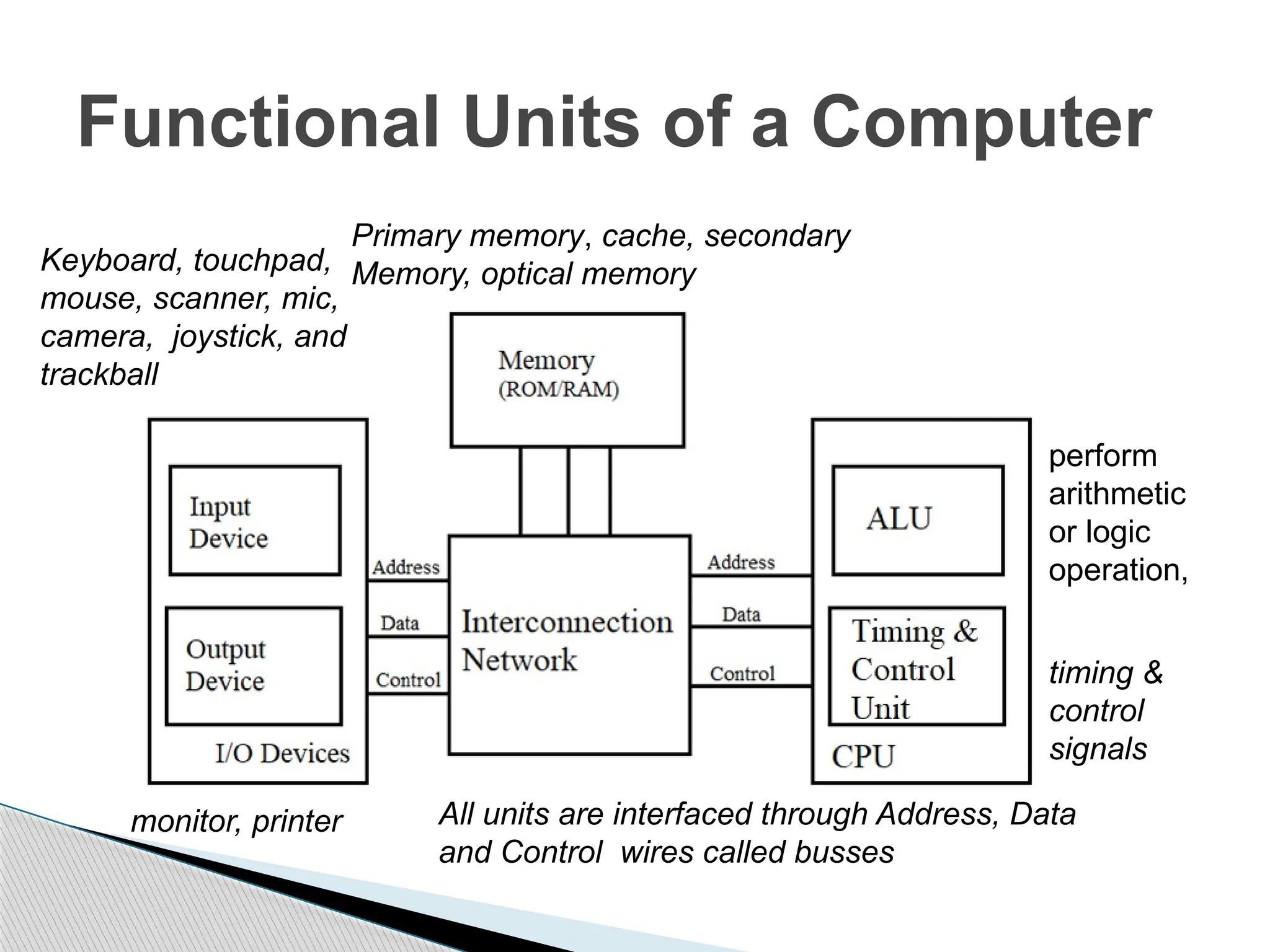

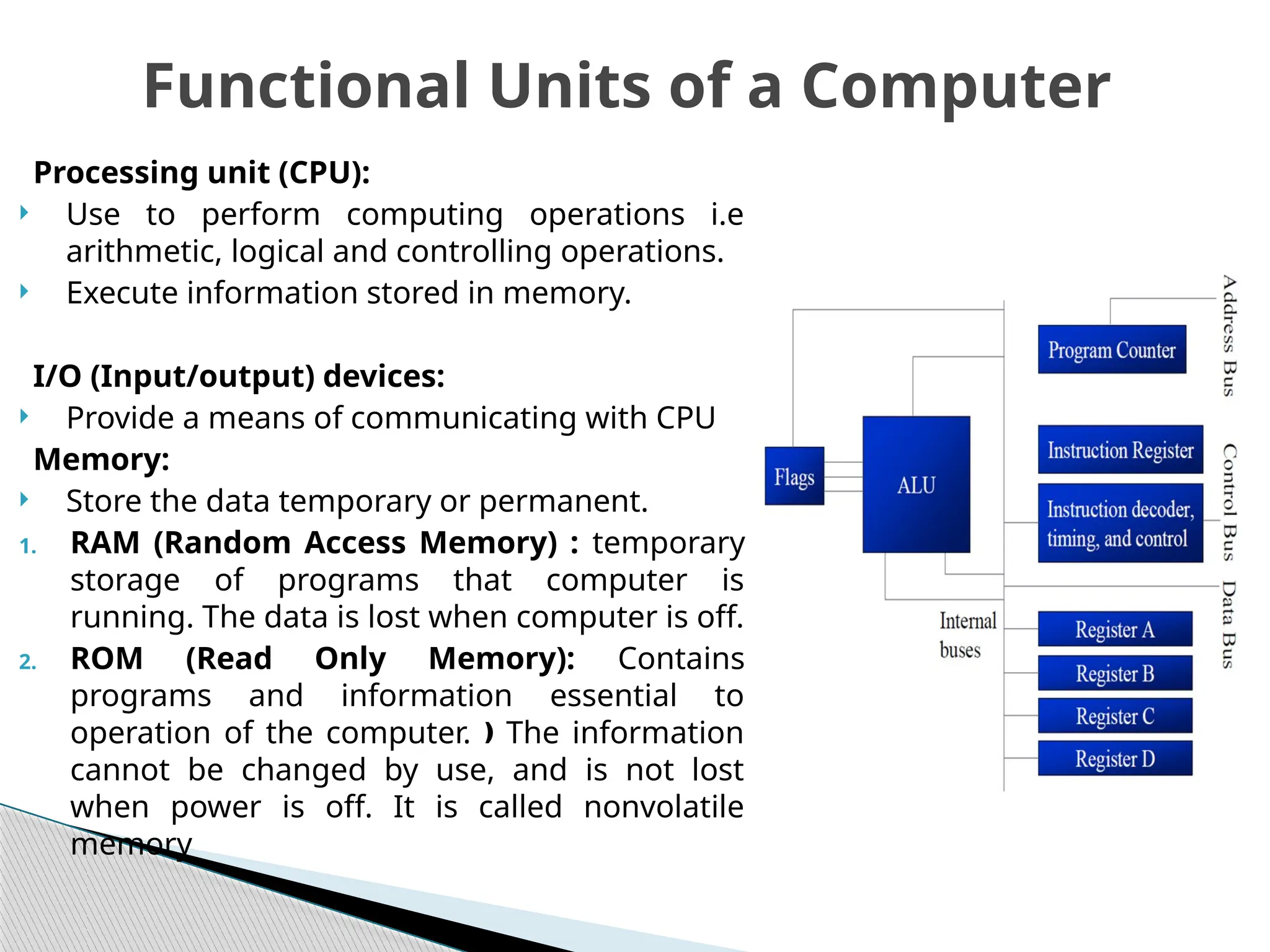

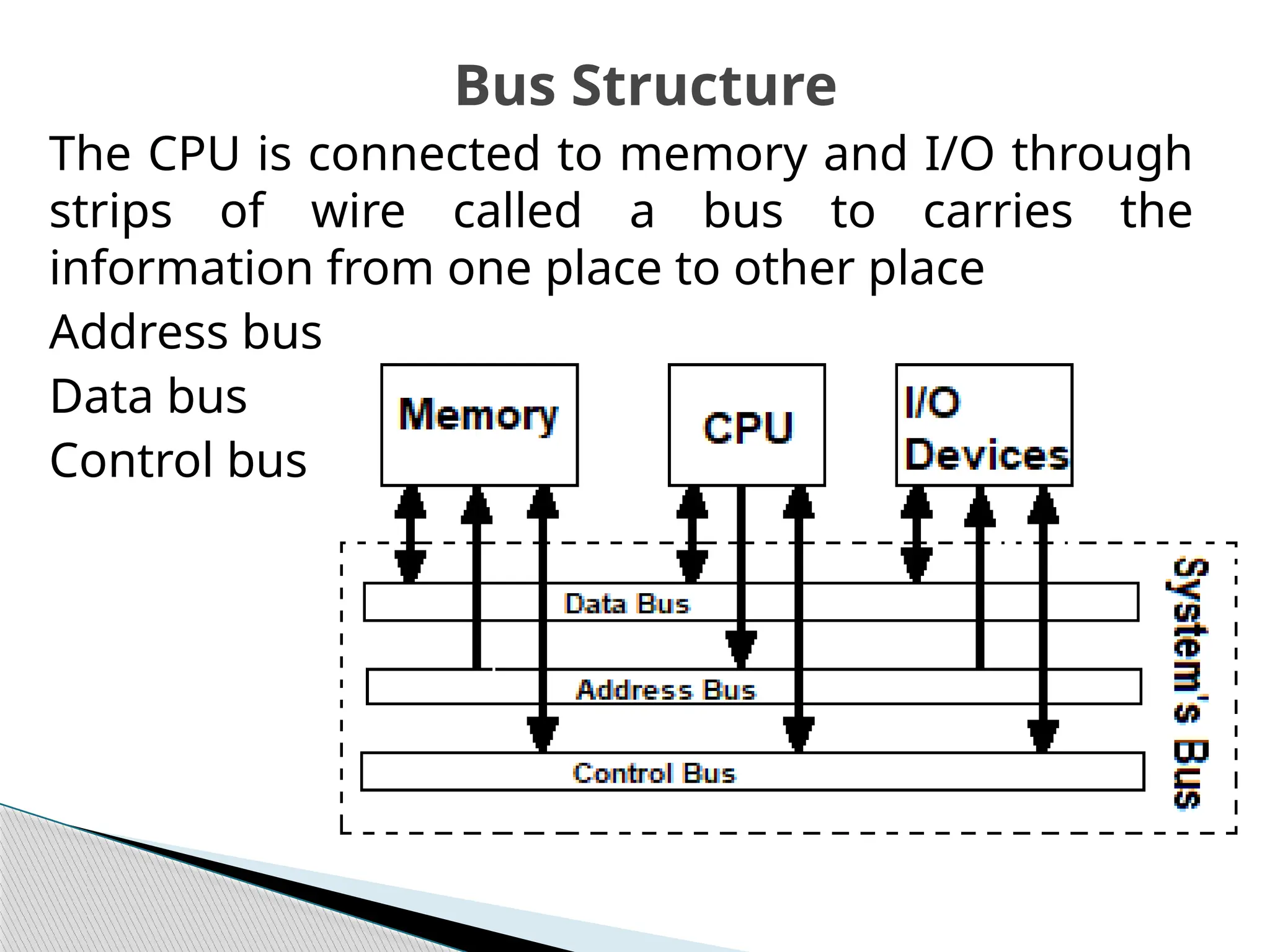

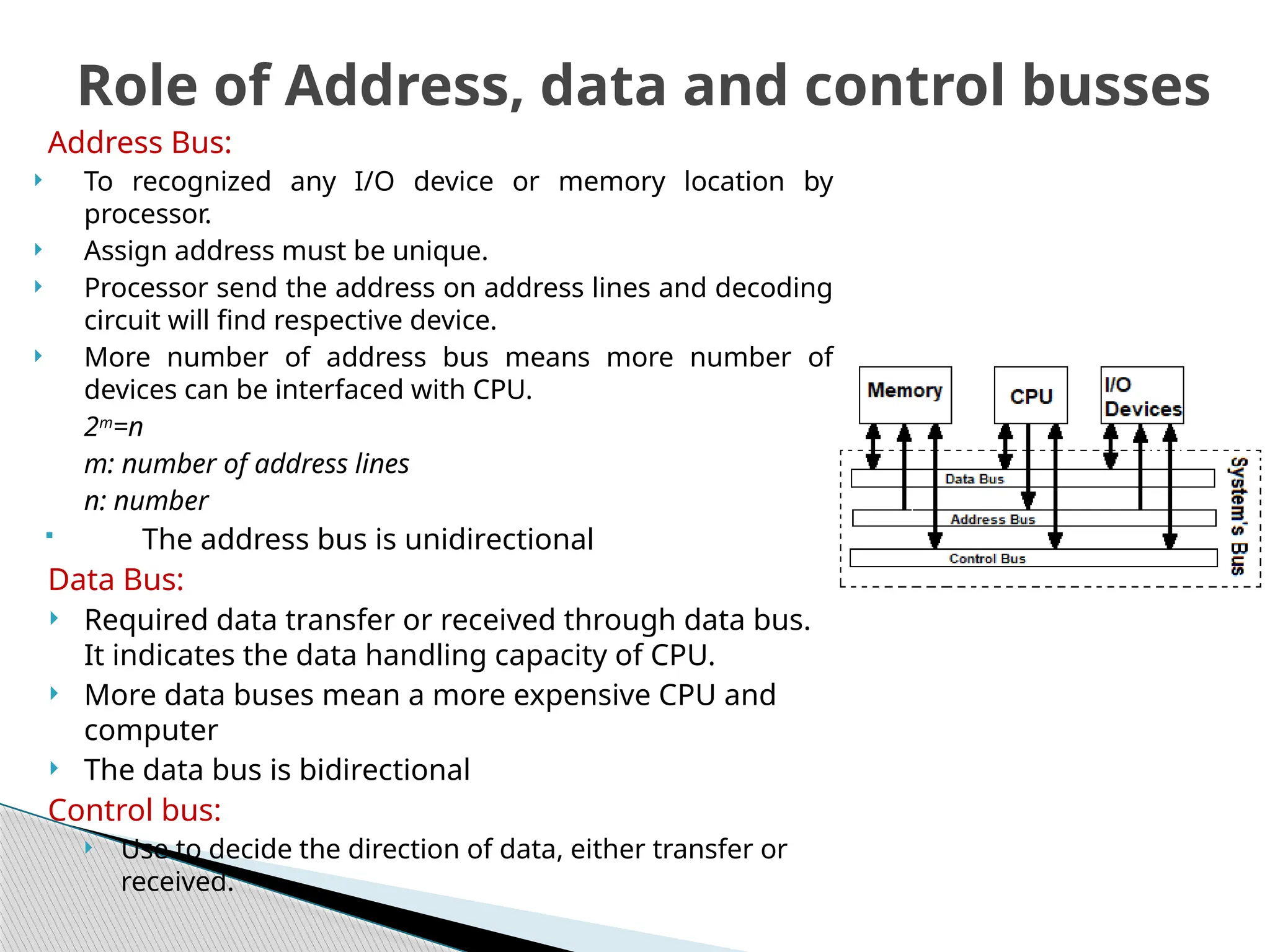

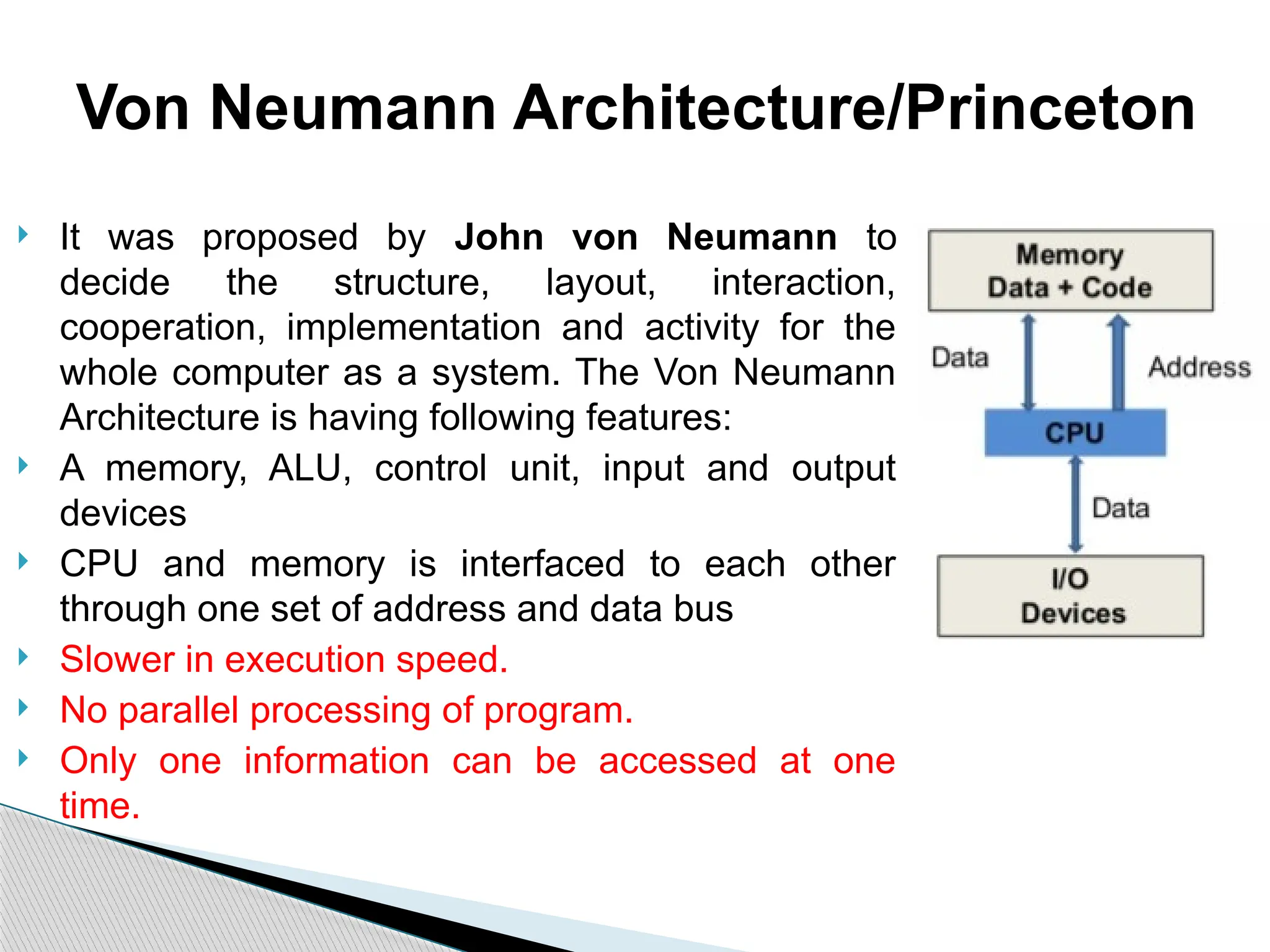

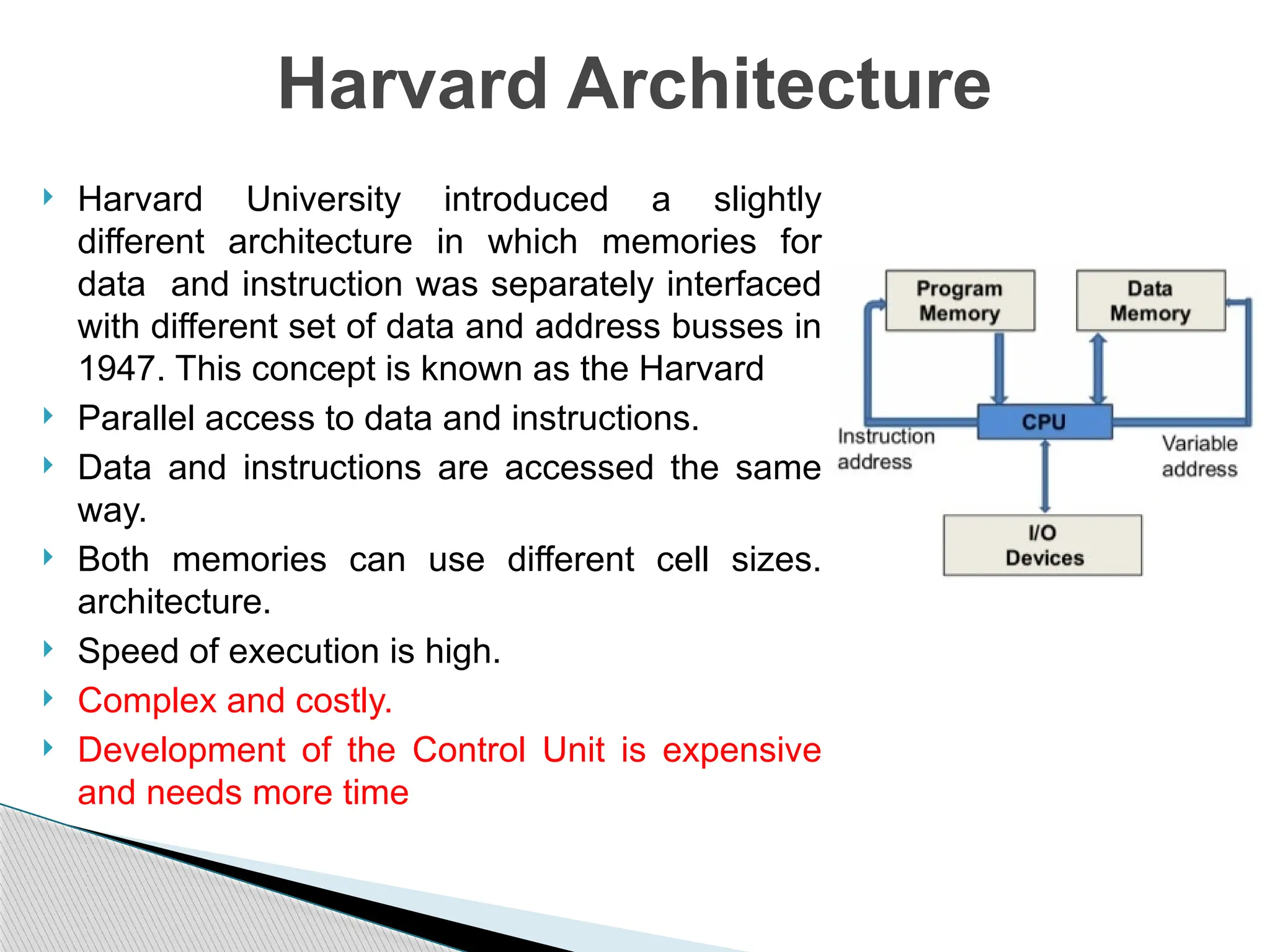

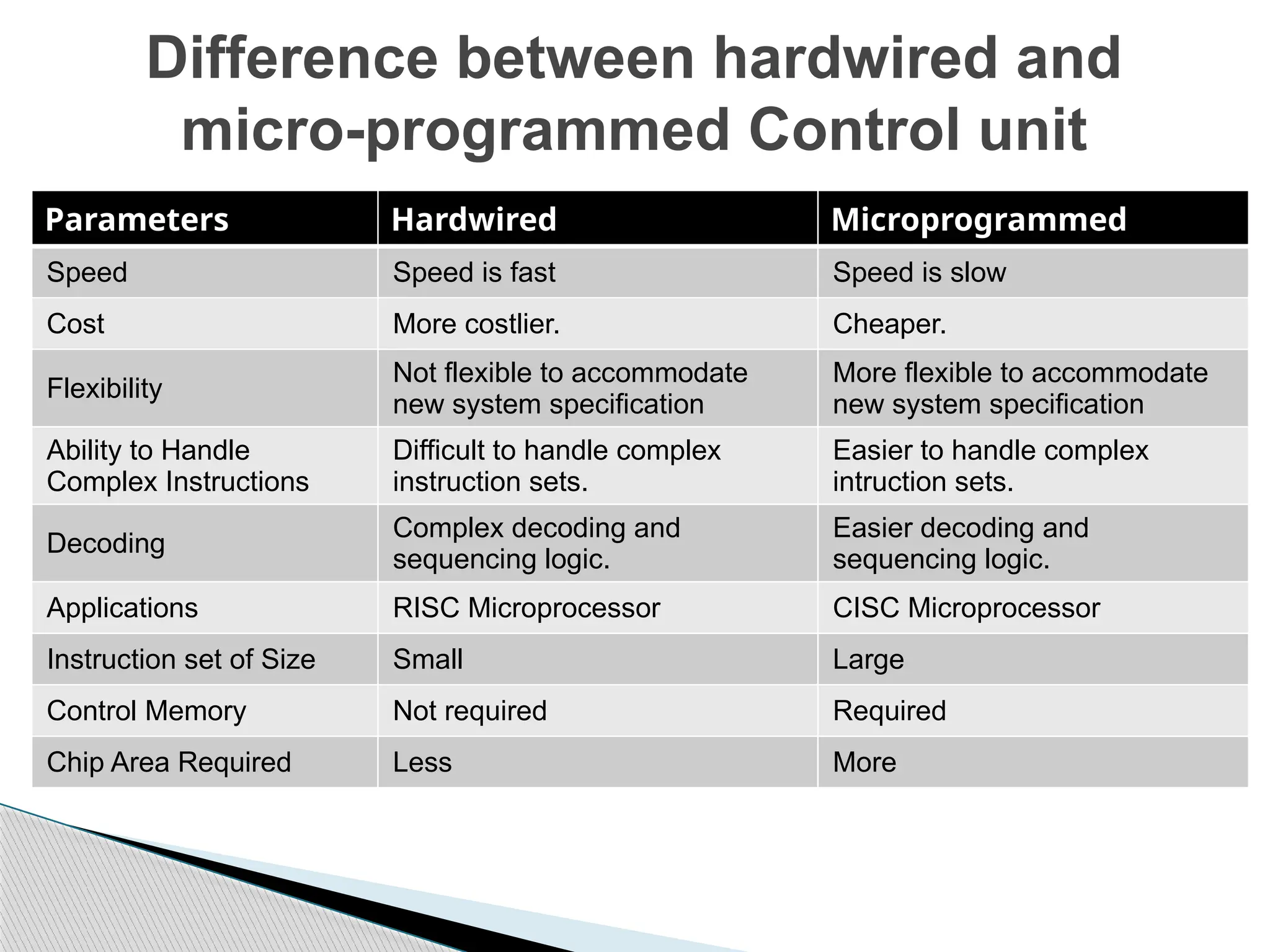

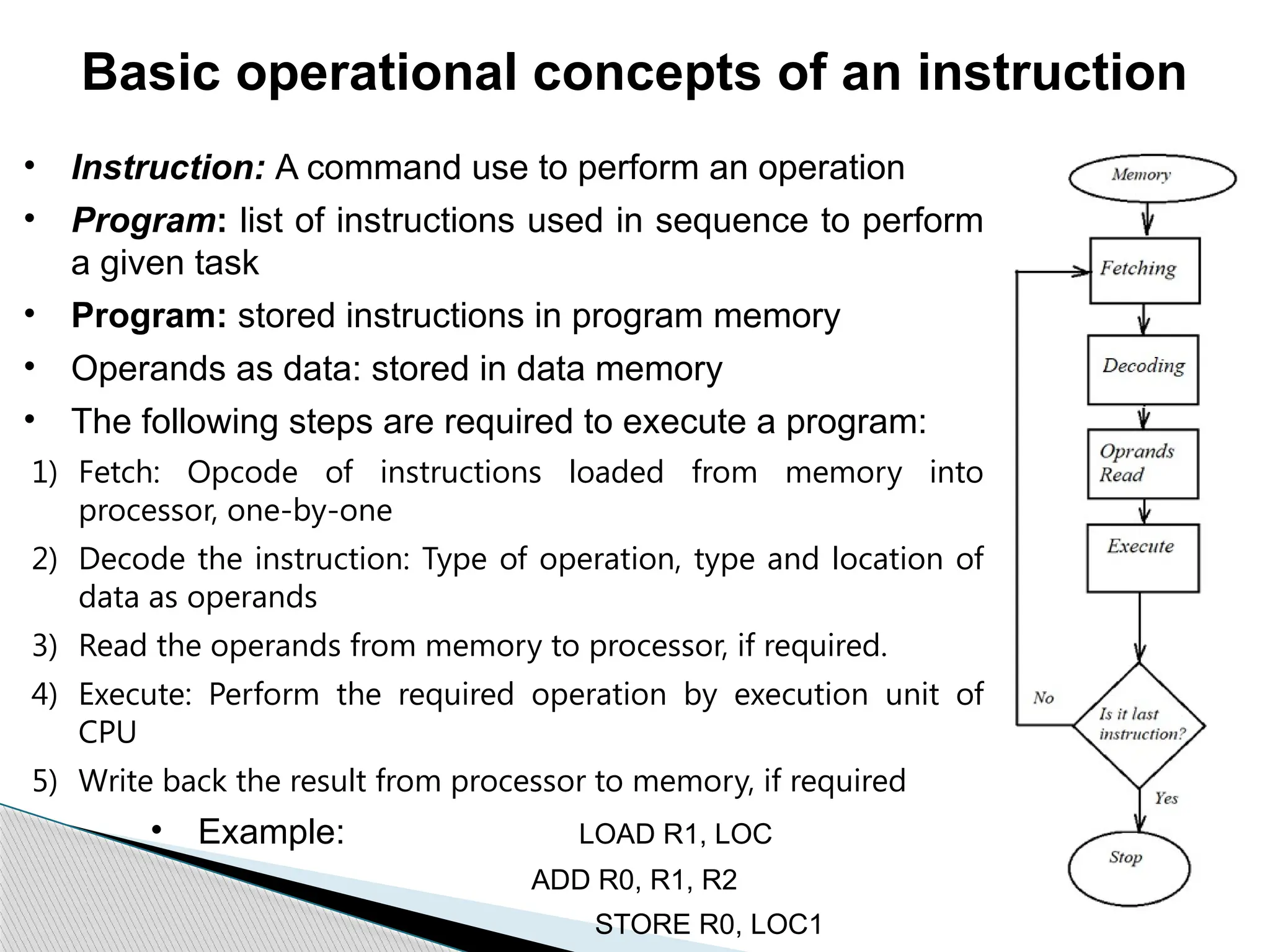

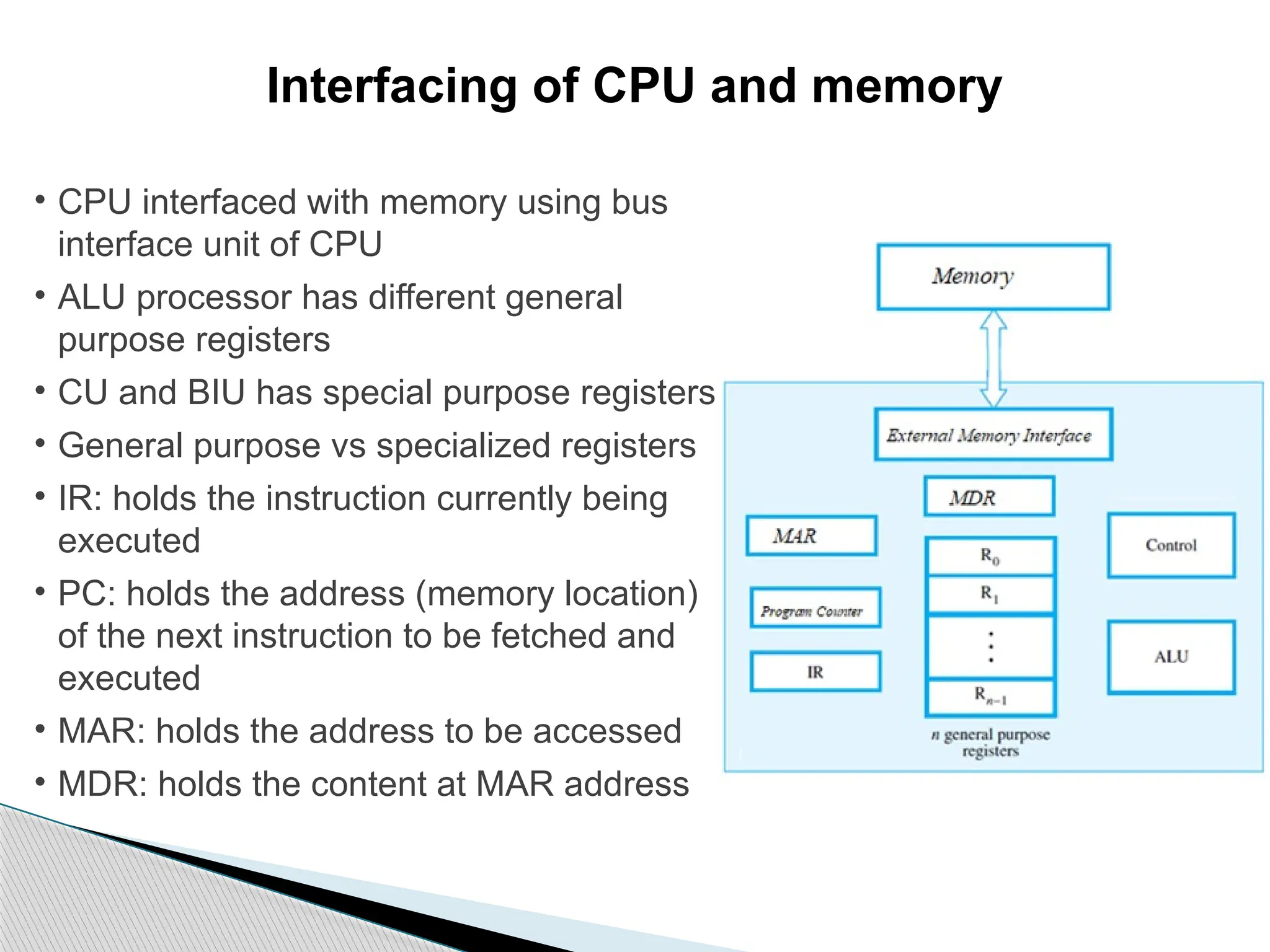

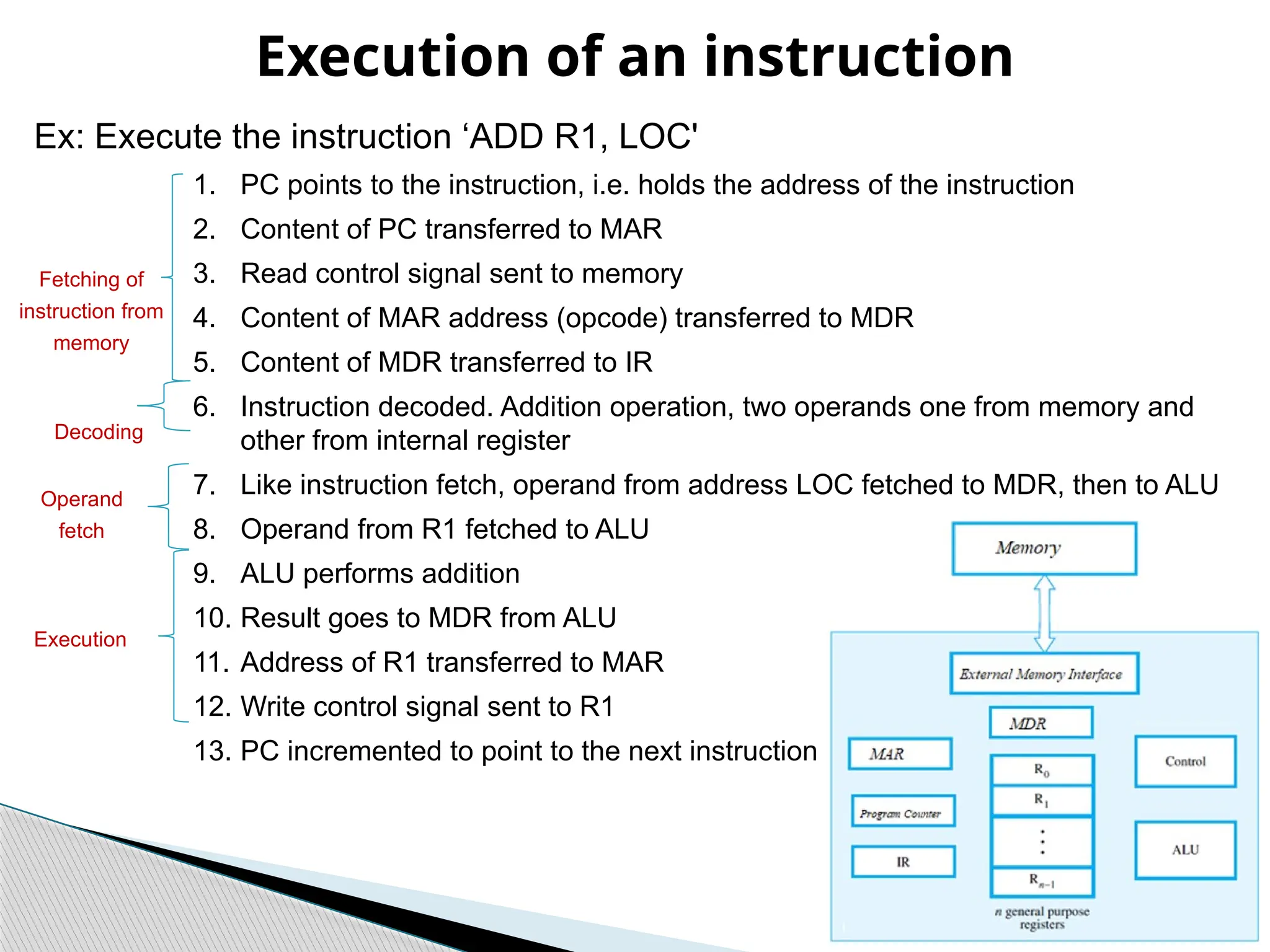

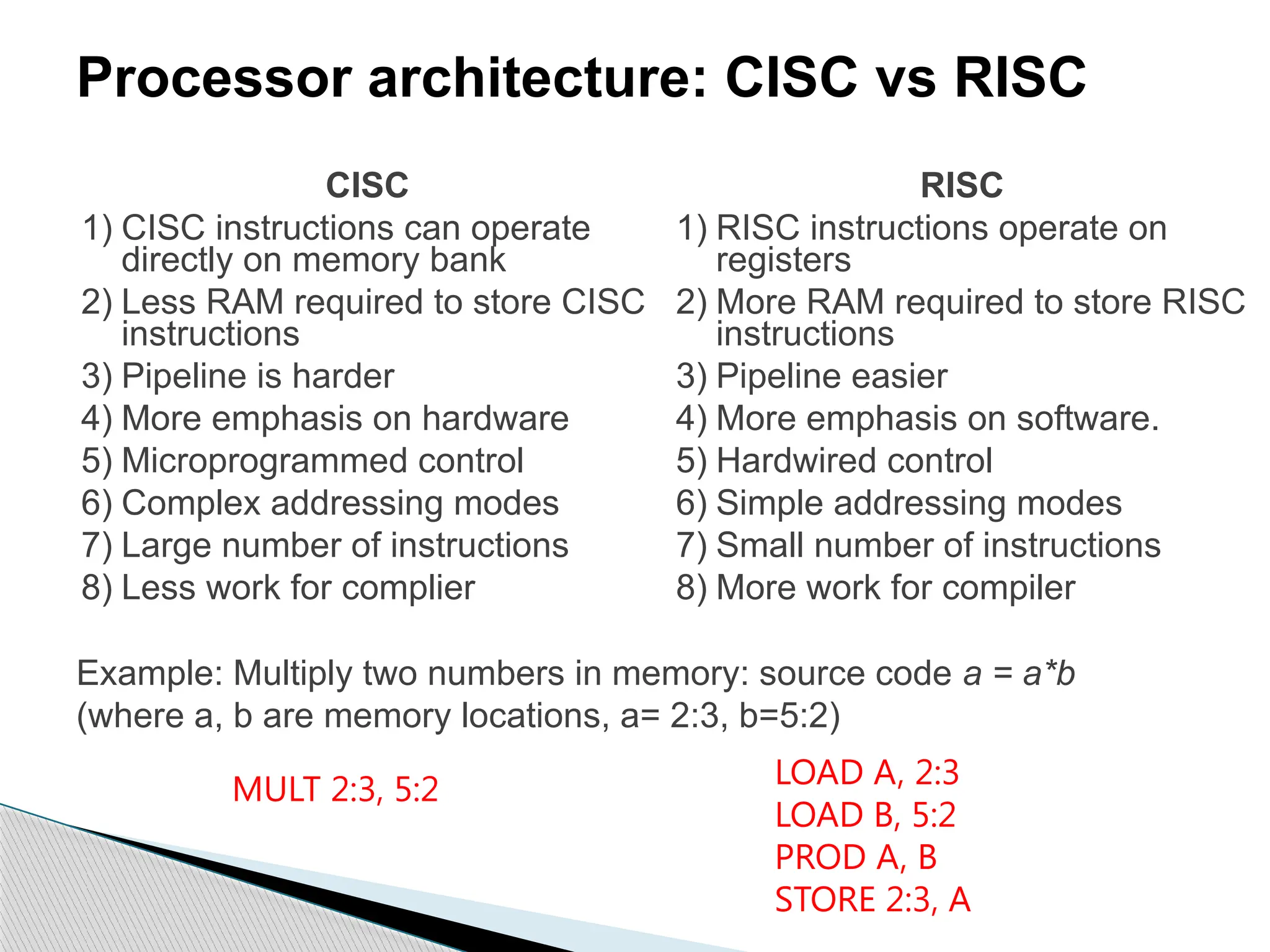

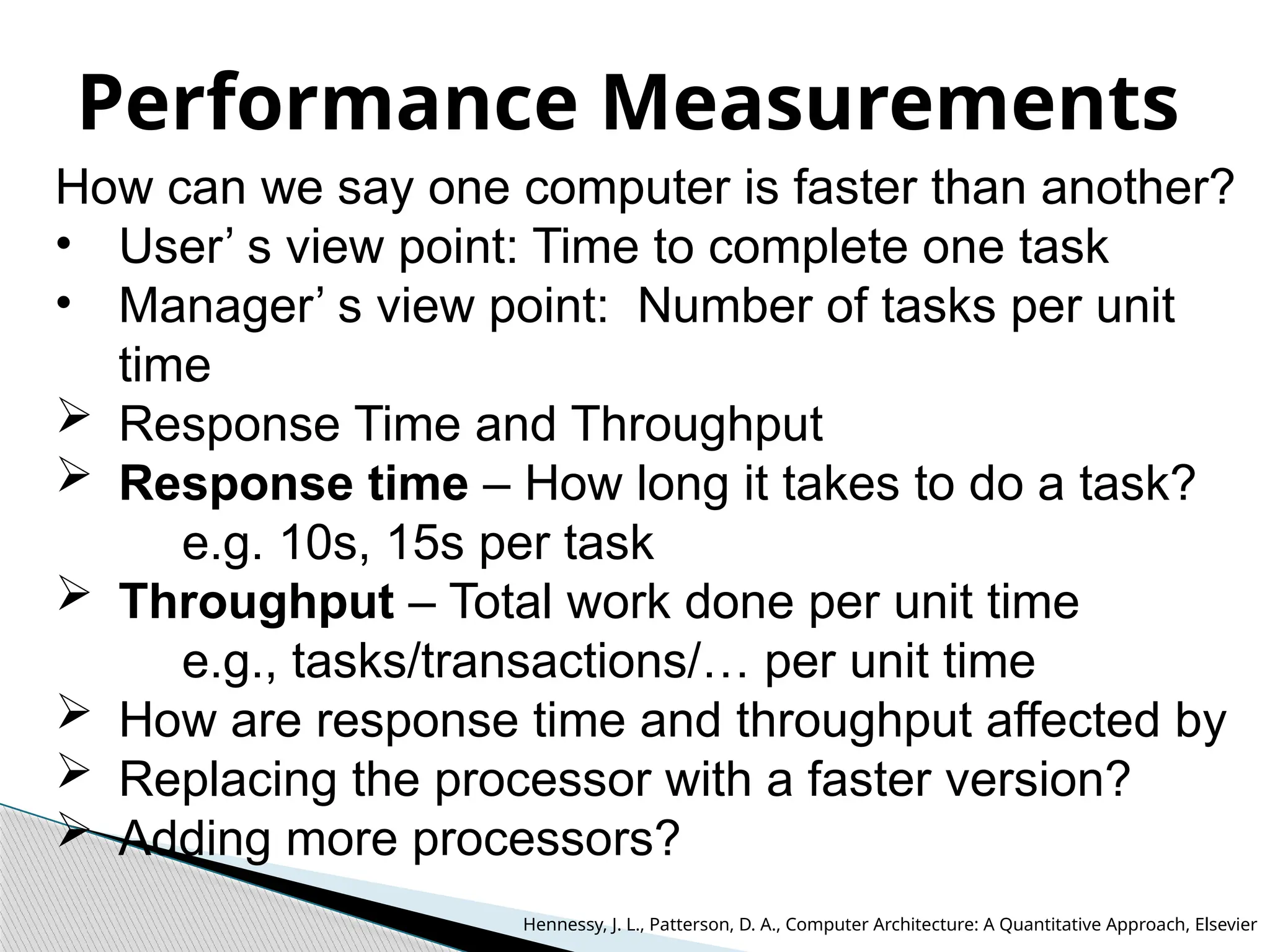

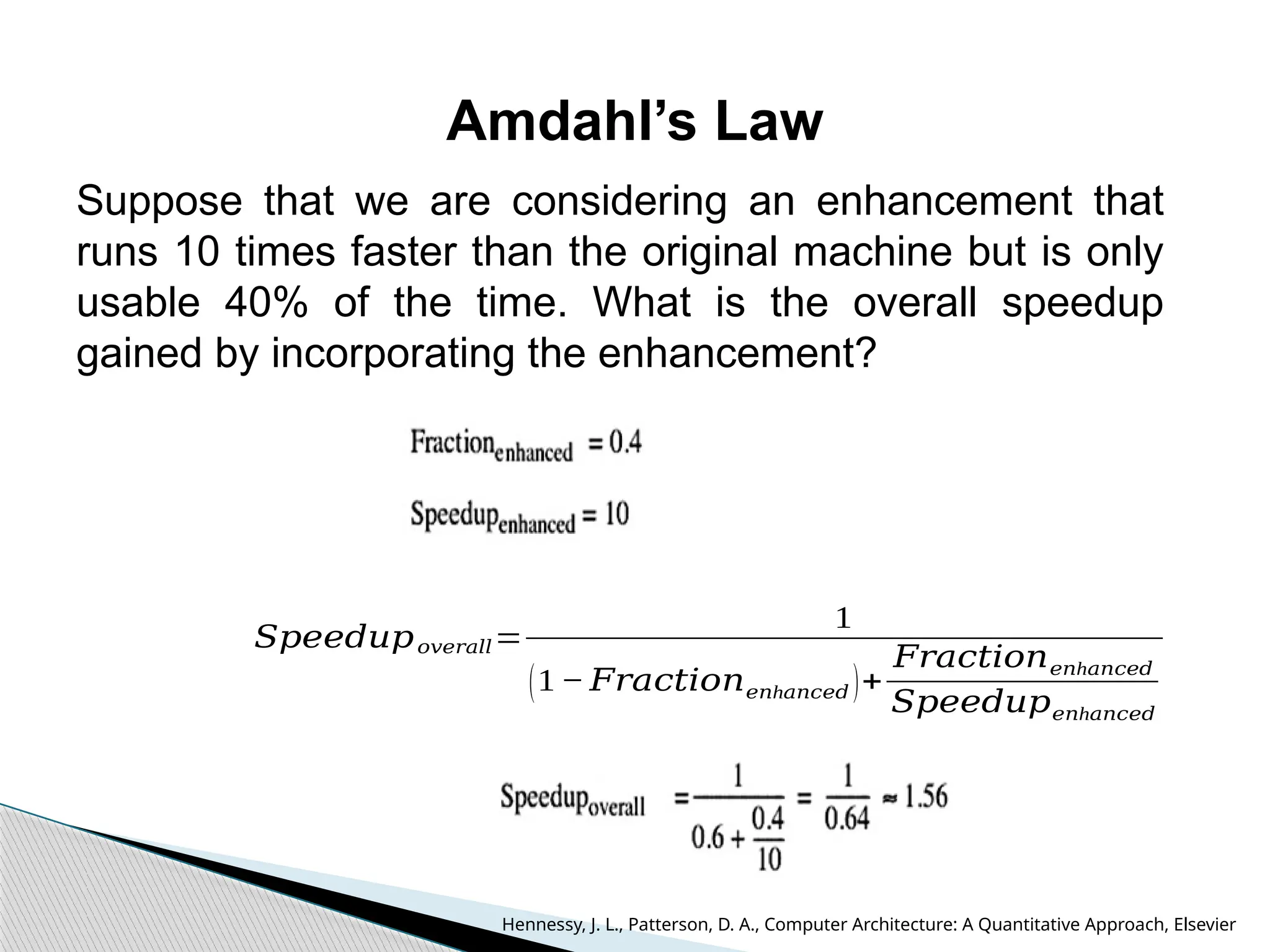

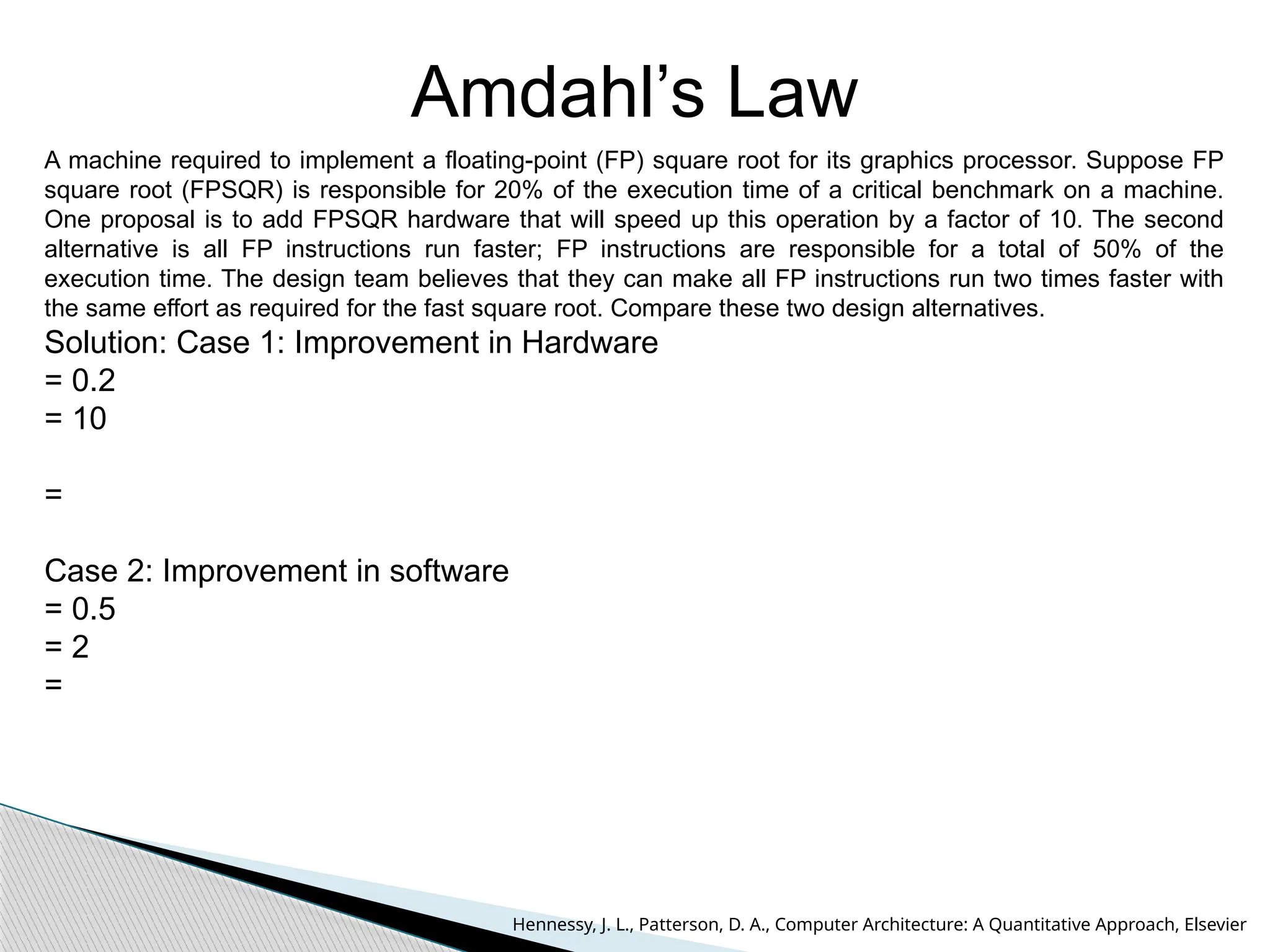

This document outlines the course UEC509 on computer architecture, led by Dr. Karmjit Singh Sandha. The course focuses on RISC architecture and on performance enhancement techniques such as pipelining and memory optimization. Its topics include instruction set principles, pipelining, memory hierarchy design, multiprocessors, and input/output organization, with the aim of equipping students to analyze RISC processors and design efficient computing systems. The course also traces the historical evolution of computers across five generations and introduces various computer architectures and the functional units of a computer.