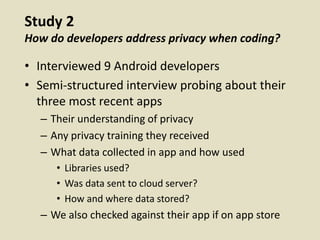

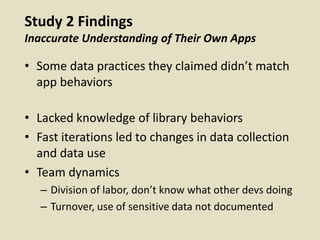

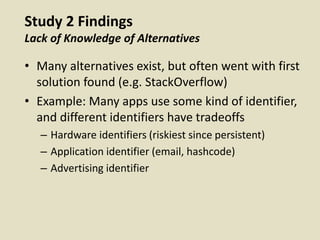

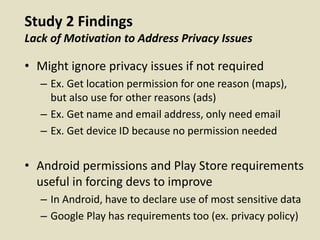

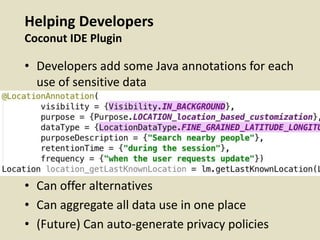

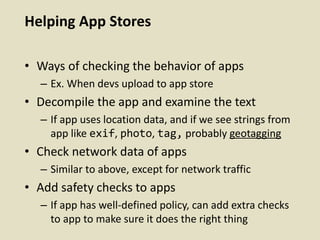

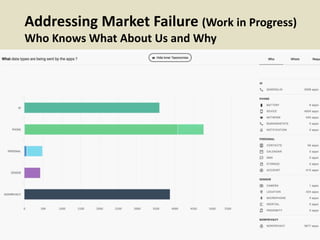

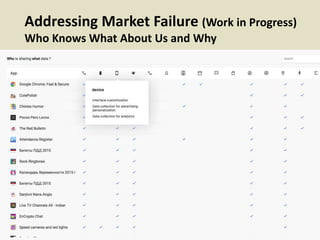

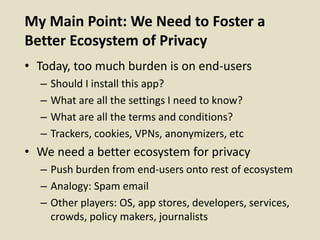

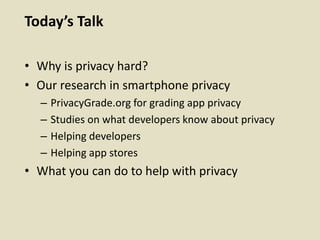

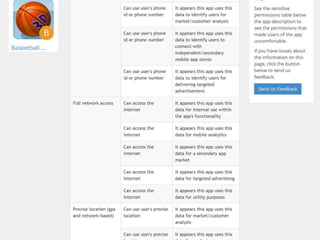

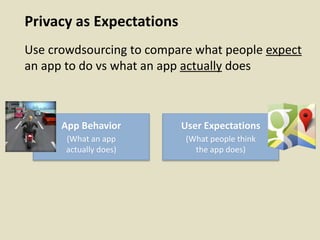

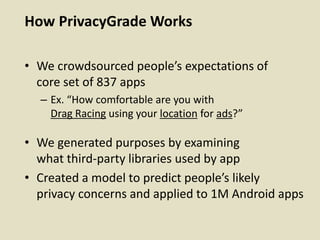

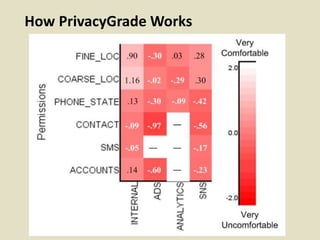

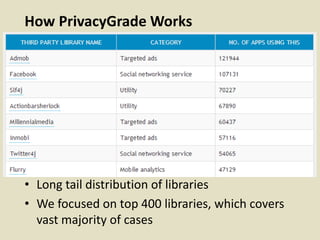

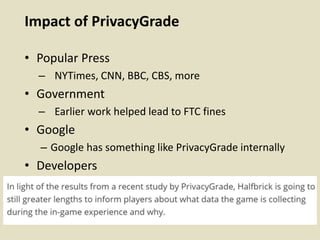

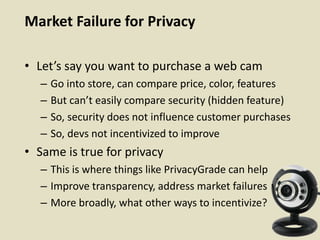

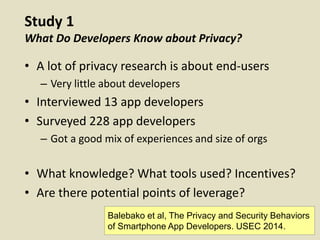

This document discusses fostering an ecosystem for smartphone privacy. It notes that over 1 billion smartphones are sold each year, each containing intimate personal data. The researcher's work focused on studying app privacy and building tools such as PrivacyGrade.org to grade apps. Studies showed that developers have low awareness of privacy issues and tools. The document calls for a better ecosystem in which the burden of privacy is shared, and it points to opportunities for others to help, such as improving incentives for developers or addressing economic issues.

![“[An analyst at Target] was able to identify about 25 products that… allowed him to assign each shopper a ‘pregnancy prediction’ score. [H]e could also estimate her due date to within a small window, so Target could send coupons timed to very specific stages of her pregnancy.” (NYTimes)](https://image.slidesharecdn.com/privacy-asu-nov2018-upload-181103053146/85/Fostering-an-Ecosystem-for-Smartphone-Privacy-20-320.jpg)

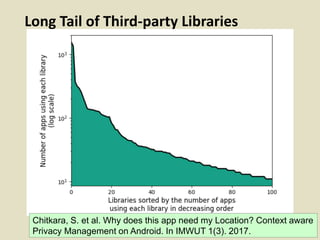

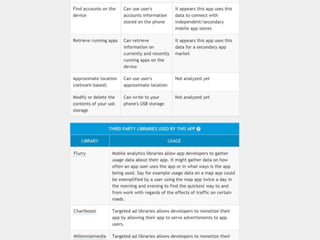

![Study 1 Summary of Findings

Third-party Libraries Problematic

• Use ads and analytics to monetize

• Hard to understand their behaviors

– A few didn’t know they were using libraries

(based on inconsistent answers)

– Some didn’t know the libraries collected data

– “If either Facebook or Flurry had a privacy policy that

was short and concise and condensed into real

English rather than legalese, we definitely would

have read it.”

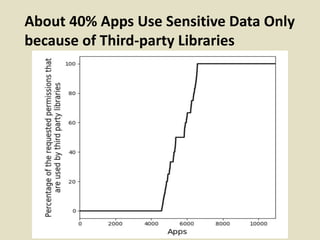

– In a later study we did on apps, we found 40% apps

used sensitive data only b/c of libraries [Chitkara 2017]](https://image.slidesharecdn.com/privacy-asu-nov2018-upload-181103053146/85/Fostering-an-Ecosystem-for-Smartphone-Privacy-38-320.jpg)

![Study 1 Summary of Findings: Devs Don’t Know What to Do](https://image.slidesharecdn.com/privacy-asu-nov2018-upload-181103053146/85/Fostering-an-Ecosystem-for-Smartphone-Privacy-39-320.jpg)

- Low awareness of existing privacy guidelines
  - Fair Information Practices, FTC guidelines, Google
  - Often just ask others around them
- Low perceived value of privacy policies
  - Mostly protection from lawsuits
  - “I haven’t even read [our privacy policy]. I mean, it’s just legal stuff that’s required, so I just put in there.”